Project 1

Title: Son and Daughter

Project Description:

Project 1 is my very first experiment in producing an audiovisual piece. Since we were expected to generate completely abstract content and there was no need to prepare external materials in advance, I started working on a patch in Max before coming up with a detailed concept. It wasn’t until later that I realized most of the decisions I made concerning the final outcome were all intentionally steering toward a projection of my own mood while piecing the project together: the feeling of excitement, the sense of discovery, and novelty. As I developed the project further, it became clear to me that my version should look and sound playful, curious, and fresh, like something just after its birth, without obvious sophistication.

Perspective and Context: According to Hinderk M. Emrich and his colleagues, synesthesia is “the simultaneous, involuntary perception of different, unrelated sensory impressions”, a “blending of the senses”. In my understanding, the most important insight revealed by the notion of synesthesia is that the various human senses are not separated from one another at all. The differentiation of the senses is merely a convenience for theorization rather than a result of empirical knowledge of perception. While a synesthete by definition perceives a much stronger pairing of two or more senses, everybody is a synesthete to some degree.

When applying the notion of synesthesia to the design of an audiovisual project, the crucial challenge is to create an experience in which the audience can visualize a piece of music or hear a specific visual pattern, so that the coupling between the visual and audio compositions makes sense. In other words, the goal is to combine the visual and audio information so seamlessly that they become one unity. In creating my project, I paid special attention to matching the tempo of the sounds with the visual movement so that they appear to fall under the same narration. Furthermore, I attempted to match the main colors used in the visuals with the timbre of the sounds for the sake of stylistic consistency. In this way, the audience is more likely to receive the information from the two sources together and experience synesthesia.

Development & Technical Implementation: The basic idea of the project is to generate visual content with sound signals. I therefore planned to build two synthesizers using BEAP and run their audio or MIDI signals through converters, so that the signals could trigger VIZZIE modules and eventually generate visual content that corresponds to the sound.
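The idea of turning a sound signal into a control value for visuals can also be sketched outside Max. The Python below is only an illustration under stated assumptions, not a description of how the BEAP or VIZZIE converters work internally: the function names `rms_blocks` and `to_control`, the block size, and the toy signal are all hypothetical. It measures the loudness of short blocks of audio and scales each level into the 0.0 to 1.0 range that a visual parameter could follow.

```python
import math


def rms_blocks(samples, block_size):
    """Return one RMS (loudness) level per block of audio samples."""
    levels = []
    for start in range(0, len(samples), block_size):
        block = samples[start:start + block_size]
        levels.append(math.sqrt(sum(s * s for s in block) / len(block)))
    return levels


def to_control(levels, ceiling=1.0):
    """Scale levels into 0.0-1.0, clipping anything louder than `ceiling`."""
    return [min(level / ceiling, 1.0) for level in levels]


# Hypothetical toy signal: a loud burst followed by silence.
signal = [0.5, -0.5, 0.5, -0.5, 0.0, 0.0, 0.0, 0.0]
control = to_control(rms_blocks(signal, block_size=4))
print(control)
```

Each value in `control` could then drive one visual parameter, so louder moments in the sound produce larger changes in the image.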

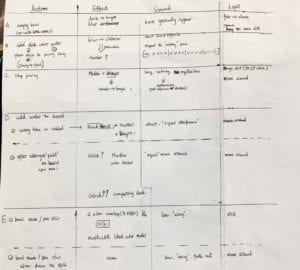

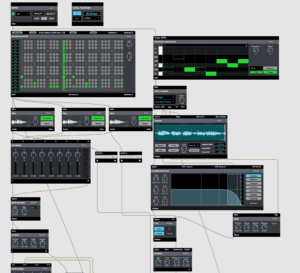

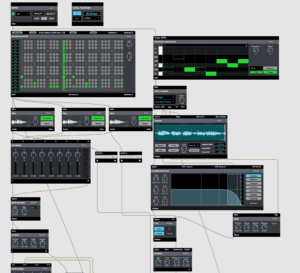

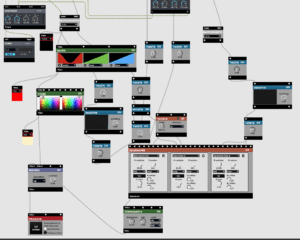

Synthesizers: The first synthesizer consists of a DRUM SEQUENCER, three CELL modules for sample inputs, a PAN MIXER to balance the pan and volume of the three sounds, a GAIN AND BIAS as level control, and a CHORUS effect module. The second synthesizer consists of a PIANO ROLL SEQUENCER, a MIDI TO SIGNAL converter, a SAMPLR, a CLASSROOM FILTER, a VCA level control with an ADSR envelope inserted, and a REVERB 1 effect. I ran both of them through an AUDIO MIXER to balance the two sound inputs before connecting them to the STEREO output. I built each of them following the order of sequencer, oscillator, mixer, level control, and effect. My plan for the audio part was to combine a melodic and a rhythmic section. Initially, I intended the drum sequencer to be responsible for the rhythm and the piano roll sequencer to control a melody. However, after testing different samples and arrangements, I ended up with two guitar samples and one shaker on the drum sequencer and one conga sample on the piano roll sequencer. As a result, the melodic and rhythmic parts are mixed mainly on the drum sequencer, with one more rhythmic layer on the piano roll sequencer. The rhythm is highly syncopated to keep it interesting. I also played around with the high-pass filter, reverb, and chorus effects to further enrich the sounds.

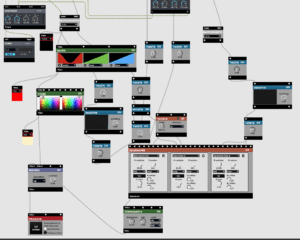

Converting BEAP to VIZZIE: After experimenting with the audio content, I used BEAPCONVERTRs to convert the audio and MIDI signals from synthesizer 1 and the MIDI signal from synthesizer 2 so that they could trigger the VIZZIE modules. The basic idea is to combine (using MIXFADR) a colored filter (MAPPR and 2TONR) and a pattern created by any GENERATOR (3EASEMAPPR) for the visual outcome. To alter the signals, I also connected modules including SMOOTHR and INVERTR. In order to have more control over how the outcome appears, I didn’t let the signals control as many options on the generator as possible, but instead connected them to only a limited number of knobs and menu selections. The following is a video of the visual content without the sound:

Link to GitHub: https://gist.github.com/HoiyanGuo/36daec9a276908aec3c96eb769dc37d5
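The signal shaping described above (smoothing, inverting, and crossfading between a pattern and a colored filter) can be illustrated with a short Python sketch. This is a rough analogy only: SMOOTHR, INVERTR, and MIXFADR are the modules named in the patch, but the functions `smooth`, `invert`, and `crossfade`, the one-pole smoothing formula, and the sample values are assumptions made for illustration, not the modules' actual implementation.

```python
def smooth(values, amount):
    """One-pole smoothing: amount=0 follows the input exactly,
    values closer to 1 react more slowly (assumed SMOOTHR analogy)."""
    smoothed = []
    state = 0.0
    for i, value in enumerate(values):
        state = value if i == 0 else amount * state + (1.0 - amount) * value
        smoothed.append(state)
    return smoothed


def invert(values):
    """Flip 0.0-1.0 control values (assumed INVERTR analogy)."""
    return [1.0 - value for value in values]


def crossfade(a, b, fade):
    """Blend two brightness values; fade=0 gives a, fade=1 gives b
    (assumed MIXFADR analogy)."""
    return (1.0 - fade) * a + fade * b


# Hypothetical control data derived from the sound.
control = [0.0, 1.0, 1.0, 0.0]
fade_positions = invert(smooth(control, amount=0.5))

# One pattern pixel (bright) mixed with one colored-filter pixel (dark).
pattern_pixel, color_pixel = 1.0, 0.0
mixed = [crossfade(pattern_pixel, color_pixel, f) for f in fade_positions]
print(mixed)
```

In this sketch the fade position never jumps abruptly, which is the reason for smoothing a control signal before it reaches a crossfader: the balance between pattern and color shifts gradually instead of flickering.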

Presentation: My biggest regret about the presentation is that I prepared it on another computer and reopened everything on an IMA laptop shortly before class, which resulted in losing the original arrangement of the audio part. It would have been great if I could have shared the original version with my classmates! Furthermore, I forgot to adjust the fader knob to get a better balance between the patterns and the colored filter, which made the visuals quite vague, especially through the projector. Although the presentation didn’t go as planned and appeared somewhat messy, I believe I did showcase the basic aesthetics of the project and presented one more outlook on what it could be like.

Conclusion: Looking back at Project 1, I think it was all about experimenting and keeping an open mind about all the surprising things Max can do. I learned about the necessity of coming up with a framework before working out each detail. At the same time, I also realized that each project’s final outcome is always a combination of planning and unexpected results. For future projects, I hope to give as much attention to the presentation as to the development process.