Duet Beat – Guangbo Niu – Rudi Cossovich

Individual Reflection

- Context and Significance

Our last group project was a feeding machine that feeds the user according to the user’s voice commands. Although the machine could make its user lazier and lazier, we believe it would actually make things a lot easier for the user, in an interactive way: it frees the user’s hands and lets them do what they want while eating. This time we took a step further in lifting burdens from people: we wanted to build something that lets nonprofessionals create things in a professional way. For example, we want our users to be able to compose wonderful music even if they have disabilities or are not trained in any musical instrument. I want our project to align with my definition of interaction: simple inputs, sophisticated processing, and wonderful outputs, with the idea of being human-centric.

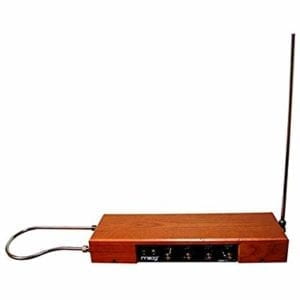

The first project that came to mind was GarageBand, the app developed by Apple. The app comes with many built-in chords and drumbeats, which you can easily combine with your own melody or notes to compose a song. So we decided that our device needed to generate chords automatically according to the user’s needs. The other project that had a significant impact on ours is the theremin, one of the earliest electronic musical instruments, invented by the Soviet physicist Léon Theremin. The instrument uses two antennas to sense the position of the player’s hands, so the user plays different notes with their hands in the air. We want our user to have minimal physical contact with the device, just as with the theremin, because that would probably make it usable for people with disabilities.

- Conception and Design

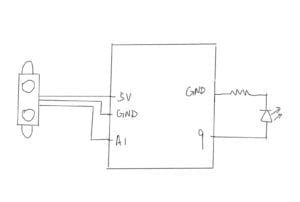

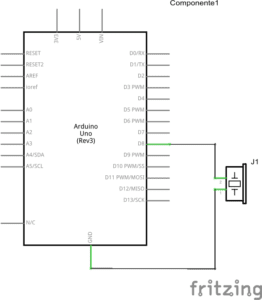

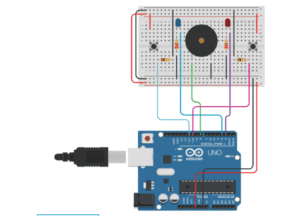

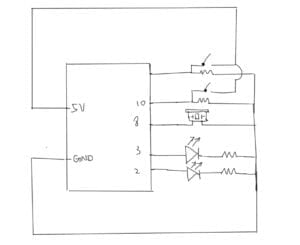

We now had two principles for our project: automatic chord generation and minimal physical touch. For the first principle, after we saw another group using a heart rate sensor, we decided to apply that sensor to our project as well. We needed some kind of sensor to learn the user’s needs in order to generate the right chord, and the heart rate sensor can be used to estimate the user’s mood, so that our device can generate a chord based on the user’s current mood. Although heart rate may not be the best indicator of mood, it was the best we could use for the time being. For the second principle, we decided to use something we had learned in previous classes: an IR distance sensor. We divided the distance range into intervals and let each interval represent one note. Once the IR distance sensor detects an object within some interval, the buzzer plays the note associated with that interval. This was the best way we could think of to minimize physical contact. We didn’t use the ultrasound distance sensor because we were told that it relies on sound waves to measure distance, and that the sound waves can scatter, so the sensor doesn’t give accurate readings over long distances.
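A minimal sketch of the interval-to-note idea, written in plain C++ rather than taken from the device’s actual code. The distance range (10 to 80 cm), the choice of a C major scale, and the names `noteIndexForDistance` and `frequencyForDistance` are all assumptions made for illustration; the only detail taken from the project is that there are seven notes.

```cpp
// Hypothetical range: the post does not state the real sensing limits.
constexpr int kMinDistanceCm = 10;  // nearest distance that plays a note
constexpr int kMaxDistanceCm = 80;  // distances at or beyond this are silent
constexpr int kNumNotes = 7;        // one note per distance interval

// Assumed pitches: C major scale from C4 to B4, rounded to whole hertz.
constexpr int kNoteFrequenciesHz[kNumNotes] = {262, 294, 330, 349, 392, 440, 494};

// Returns the index (0..6) of the interval that contains distanceCm,
// or -1 when the reading falls outside the playable range.
int noteIndexForDistance(int distanceCm) {
    if (distanceCm < kMinDistanceCm || distanceCm >= kMaxDistanceCm) {
        return -1;
    }
    // Scale the offset into the range onto 0..kNumNotes-1 (integer division).
    return (distanceCm - kMinDistanceCm) * kNumNotes /
           (kMaxDistanceCm - kMinDistanceCm);
}

// Returns the frequency to play for a reading, or 0 for silence.
int frequencyForDistance(int distanceCm) {
    const int index = noteIndexForDistance(distanceCm);
    return index < 0 ? 0 : kNoteFrequenciesHz[index];
}
```

With these assumed values each interval is 10 cm wide, so a hand at 45 cm falls in the fourth interval. On an Arduino, the returned frequency could be handed to `tone()` for the notes buzzer, and the index could select which of the seven note LEDs to light.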

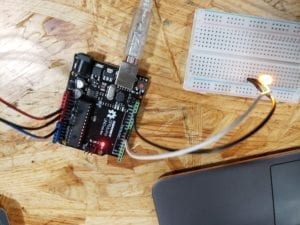

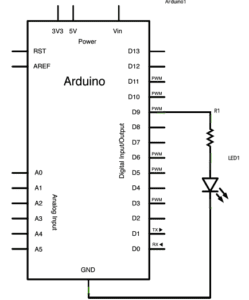

Other materials we used include laser-cut wooden boxes (3D-printed parts are a poor choice for housing components, since their shape is fixed once they are printed), 2 Arduinos (we could not use a single Arduino board, since we later found that the IR distance sensor doesn’t work well when there is another sensor on the same board), 2 buzzers (one for chords and one for notes), 2 battery cases (we wanted our device to look wireless), and countless wires and LEDs (3 as mood indicators, 1 as the heart rate detection indicator, and 7 more for the different notes, because we wanted our device to give the user adequate feedback).

- Fabrication and Production

Hmmmm, we did not have a formal user test for this device, because what we brought to the user test session was the creepy dog. And in terms of failures, I guess our biggest failure was building that creepy dog in the first place. I don’t want to talk much about it, but it did give us valuable experience. For example, it taught us that 3D printing is difficult to handle. Once a 3D-printed part has taken shape, it is extremely difficult to put something inside it or change its shape. For the dog’s head, we had to use an electric soldering iron to melt its mouth so that the ultrasound sensor could fit in, and the outcome was just as horrible as the video shows. Therefore, we turned to laser-cut boxes when we built the new device.

Even with the new device, we ran into the problem that the IR distance sensor often gave spikes, with readings jumping up and down. To solve this, we searched the Arduino forums and found instructions online. Following them, we put a capacitor near the sensor’s power input to smooth its supply voltage. After that the sensor worked a lot better, though it still gave occasional spikes. We also wanted to prepare three chords, each associated with one of three moods: calm, just fine, and excited, with the mood determined by the heart rate. However, we had big trouble with the coding. The sensor was set to give a heart rate reading once every 20 seconds, which is too slow, and it took me an entire evening to figure out the code and make it give a reading every 5 seconds. Later, it took me another evening to figure out how to make the LEDs blink along with the heart rate and the sensor’s status. In general, reading and writing the code was a painful process.
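The two software ideas in this part can be sketched in plain C++ as follows. This is an illustration under assumptions, not the code that ran on the device. The median filter is a software technique swapped in here to show one way of handling the spikes that remained; the project itself only used the capacitor. The heart rate thresholds (70 and 100 beats per minute) and the names `SpikeFilter`, `medianOfThree`, and `moodForHeartRate` are hypothetical.

```cpp
// The three moods named in the post.
enum class Mood { Calm, JustFine, Excited };

// Hypothetical thresholds in beats per minute; the post gives no numbers.
constexpr int kCalmBelowBpm = 70;
constexpr int kExcitedFromBpm = 100;

// Maps a heart rate reading to one of the three moods.
Mood moodForHeartRate(int bpm) {
    if (bpm < kCalmBelowBpm) return Mood::Calm;
    if (bpm < kExcitedFromBpm) return Mood::JustFine;
    return Mood::Excited;
}

// Returns the middle value of three readings.
int medianOfThree(int a, int b, int c) {
    const int lo = a < b ? a : b;
    const int hi = a < b ? b : a;
    const int cappedHi = hi < c ? hi : c;  // min(max(a, b), c)
    return lo > cappedHi ? lo : cappedHi;  // max(min(a, b), that)
}

// Keeps the last three distance readings and reports their median,
// so a single spiking sample never reaches the note logic.
class SpikeFilter {
public:
    int update(int reading) {
        history_[next_] = reading;
        next_ = (next_ + 1) % 3;
        if (count_ < 3) ++count_;
        if (count_ < 3) return reading;  // not enough history to filter yet
        return medianOfThree(history_[0], history_[1], history_[2]);
    }

private:
    int history_[3] = {0, 0, 0};
    int next_ = 0;
    int count_ = 0;
};
```

A median of three removes an isolated spike at the cost of a one-sample delay, which is why it is often preferred over a plain average for this kind of glitch; two consecutive bad samples would still get through.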

One of the biggest successes, I think, was when Leon praised my heart rate sensor status indicator. I added this LED the night before the presentation just to make it blink while the heart rate sensor is detecting a heartbeat, so that it tells users whether the sensor is actually working properly when they use it. The earlier user test of that creepy dog taught me that feedback is one of the most important aspects of an interactive design, and Leon’s praise proved that!

- Conclusions

“Simple inputs, sophisticated processing, and wonderful outputs, with the idea of being human-centric”: my definition of interaction is well represented by Duet Beat. It was a generally successful project because it allows us to compose our own music easily even when we don’t have much training in music. Anyone can use it to make wonderful music as long as they have a beating heart and some body part that can move. We expect that many people with disabilities could also use our device just as well as we do. I was so glad to see the whole class applaud when my partner Like started to play with it. With some simple body movements and a heartbeat reading, everyone can compose their own music even more easily than with GarageBand. We successfully let people create new things with minimal barriers.

Duet Beat also has some room for improvement. For example, if we had more time, we could rent a more accurate distance sensor, laser-cut a new box that better contains all of our components (this one can’t hold all the wires), or invite people with disabilities to actually test it so that we can make some adaptations for them. The biggest takeaway from this process is to think more before you start. Our head-shaking dog failed because we did not expect so many difficulties: we started building it without any sketch or analysis. I now know that before we start, we have to actually analyze, for example, what is feasible and what is not, and make a detailed plan of each step we have to take.