By: Gabrielle Branche

Introduction:

For the first half of the semester, we looked at the behavioral characteristics of organisms and how they could be applied to machines. The next step was to look at how robots could mimic not only the behavioral traits but also the physical traits of living organisms. Thus, this lab aimed to explore locomotion by creating a prototype of animal movement.

Our prototype looked at the movement of a caterpillar (inchworm). Using servos, we created a caterpillar that moved slowly across the floor, dragging its body behind it.

Idea Development:

Our first choice of movement was that of an armadillo. We wanted to use motors and cylinders to simulate the rolling of the armadillo and, if successful, develop it further so that it could move its head in and out of its shell. However, after some discussion and preliminary testing of the mechanics, we realized that we would not be able to carry this through in the time given. Moreover, the mechanics were very similar to those of a wheel, which defeated the purpose of the lab.

Next, we looked at the movement of a monkey swinging from vines, but we quickly ran into problems with hooking, which made us switch again. Finally, we settled on a caterpillar. First, we created a purely physical setup using cardboard, string and pipe cleaner. We then moved to a single servo with cardboard and string. The final project used two servos, cardboard and pipe cleaner.

Prototype Development:

Prototype 1: The string mechanism

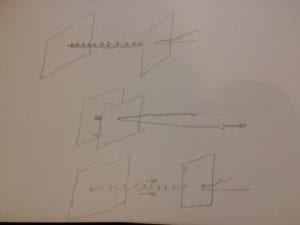

The string mechanism relied on the springiness of the pipe cleaner. A string and a pipe-cleaner coil were inserted between two pieces of cardboard. Pulling the string brought the back panel closer to the front panel and compressed the coil. The force stored in the compressed coil then pushed the front panel forward, causing forward movement.

The challenge with this mechanism was that the string needed to be pulled manually. Additionally, because the cardboard panels were so light, the whole structure slid when the string was pulled instead of producing the push-and-pull movement.

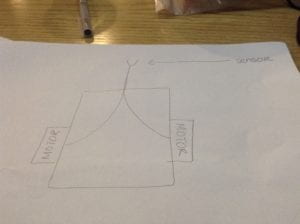

Prototype 2: The servo mechanism

This idea was adopted after finding a YouTube video that achieved this movement.

As shown in the video, a servo is placed on a piece of bent cardboard. As with the coil, the tension created when the two sides are compressed produces an opposite reaction that propels the structure forward. Instead of a paper clip, we used string, which we later replaced with pipe cleaner to improve its strength when moving.

This structure worked well for our purposes, but the movement was very slow. We tried different methods of increasing friction on the cardboard in an attempt to reduce slipping and thus increase the distance moved with each rotation. First, we put hot glue on the edge of the cardboard. When that didn’t work, we tried using sand on a straw attached to the bottom. That too proved futile: both trials resulted in the structure not moving forward at all. We realized that the cardboard worked best just as it was and stuck with it. However, we thought that to truly show the movement of a caterpillar we should put more than one of these structures together. This led to the third and final prototype.

Prototype 3: Final Product

The final product was a combination of two of the second-prototype structures. The biggest challenge in putting these two structures together was balancing the weights and timing the movement of each part correctly, so that the caterpillar would move forward and not be overwhelmed by the force of any one part of the combined structure. We used batteries to balance the weight of each part. Through trial and error, we found the best locations for the batteries such that the caterpillar moved. Additionally, by offsetting the second structure by 0.5 s, we gave the caterpillar a more authentic motion, with each part moving to catch up with the one before it.
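
The 0.5 s offset between the two parts can be illustrated with a small timing sketch. This is not the code that ran on our prototype; it only assumes that each servo alternates between a compressed and a relaxed angle, and the cycle length and both angles below are hypothetical values chosen for illustration. Only the 0.5 s offset comes from the build described above.

```python
PERIOD_S = 2.0    # assumed length of one squeeze-and-release cycle
OFFSET_S = 0.5    # delay of the second part, as described in the text
OPEN_DEG = 0      # assumed servo angle with the cardboard relaxed
CLOSED_DEG = 90   # assumed servo angle with the cardboard compressed


def servo_angle(t, offset=0.0):
    """Return the target angle at time t for a part whose cycle starts at `offset`."""
    local = t - offset
    if local < 0:
        return OPEN_DEG  # this part has not started moving yet
    phase = (local % PERIOD_S) / PERIOD_S
    # First half of the cycle: compress. Second half: release.
    return CLOSED_DEG if phase < 0.5 else OPEN_DEG


def schedule(duration_s, step_s=0.5):
    """List (time, first_part_angle, second_part_angle) at regular time steps."""
    steps = int(duration_s / step_s)
    rows = []
    for i in range(steps + 1):
        t = i * step_s
        rows.append((t, servo_angle(t), servo_angle(t, OFFSET_S)))
    return rows


if __name__ == "__main__":
    for t, first, second in schedule(4.0):
        print(f"t={t:.1f}s  first={first:3d}  second={second:3d}")
```

Printing the schedule shows the second part repeating the motion of the first half a second later, which is the "catching up" effect we were aiming for.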

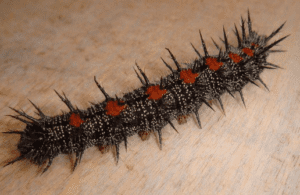

Finally, after developing the final prototype, we looked up different caterpillars and used fabric to make our prototype resemble an actual one. Since we were limited by the fabrics available in the lab, we looked for caterpillars that were red and black so that the result would be as accurate as possible. We settled on a final design inspired by the type of caterpillar shown below.

Reflection:

This lab was very beneficial, as it improved my understanding of the prototyping process. With each step, we were able to build upon the success of the previous prototype. Prototyping allows the creator to concretely identify the challenges of their invention and leads to a more robust product. It also allows for a more stepwise process, since the preliminary tests serve mainly to understand and develop the mechanics of the robot, which can then be made aesthetic and showcase-worthy.

At first, I thought that simulating movement without wheels would be difficult, particularly because I was thinking only of organisms that walk or fly. However, after finishing our project and observing my peers' presentations, I realized that there is actually a plethora of ways in which organisms move. This truly put into perspective the importance of locomotion.

Looking ahead:

If this project were to be developed further, the next steps would be to create more links in the worm and perhaps to find a more rigorous method of balancing the weights on each substructure than trial and error. After that, making the structure smaller, so that it is close in size to an actual caterpillar, would help with the overall design of the robot. Finally, improving the aesthetics of the caterpillar would put the finishing touches on the overall structure.
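
One possible alternative to trial and error, which we did not try, would be to treat each substructure as a set of point masses along the body and compute where a battery has to sit for the center of mass to land at a chosen spot. The sketch below assumes that simplification; the function names and all masses and positions are made-up illustration values, not measurements from our prototype.

```python
def center_of_mass(parts):
    """Center of mass of (mass_g, position_cm) pairs along the body axis."""
    total = sum(mass for mass, _ in parts)
    return sum(mass * pos for mass, pos in parts) / total


def battery_position(parts, battery_mass_g, target_cm):
    """Position at which a battery moves the combined center of mass to target_cm."""
    total = sum(mass for mass, _ in parts)
    moment = sum(mass * pos for mass, pos in parts)
    # Solve (moment + battery_mass * x) / (total + battery_mass) = target for x.
    return (target_cm * (total + battery_mass_g) - moment) / battery_mass_g


if __name__ == "__main__":
    # Hypothetical substructure: two 10 g panels at 0 cm and 10 cm.
    panels = [(10, 0), (10, 10)]
    x = battery_position(panels, battery_mass_g=20, target_cm=6)
    print(f"Place the battery at {x:.1f} cm")
```

A calculation like this would only give a starting point, since friction and the servo forces also affect whether the caterpillar moves forward.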