Map(Tweeter)

Purpose of Project

The idea for this project came from my experience going through the American school system. In my school district, none of the classes focused on current events, even though knowing about current events is an important part of education. Because of this, I wanted to introduce a more interactive way of presenting the news. My original plan was to take the most current events I could find on Google and program Processing to display whatever news story I chose. While this would have worked, I wanted the information to be as recent as possible. The project “Moon” by Ai Weiwei and Olafur Eliasson gave me the inspiration to include news from regular people around the world, and from this we chose to use Twitter as our news source. This project was targeted more toward children, as it has a very whimsical look to it. It would be best used in a classroom setting, where children can actively see where in the world their news is coming from. This helps build knowledge of current events, and it also helps children further develop their geography skills.

Process of Creation

Coding-

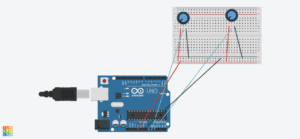

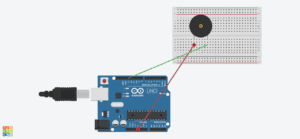

To bring Twitter’s information into our project, we used the API that connects Twitter with Processing. We had a difficult time getting this to work. In the beginning, we could not figure out how to get permission to use the API at all. After that, it was a matter of integrating the other components of the project with the API code: the buttons, the LEDs, the whistle sound, and a Processing interface that looked nice. The most interesting part of using the Twitter API was that we could place any keyword we wanted into the code, and it would find the most recent tweet related to that keyword. This means the project could be tweaked in many ways to serve more specific purposes. We actually thought about focusing our entire project on climate, but we decided to keep the keyword as "news" in order to generate more tweets. This was the most interactive project I have made, because of the program's ability to search for a keyword and then find the most recent tweet. It aligns perfectly with my definition of interaction: two parties that are able to receive information, think about it, and then respond. Overall, the coding proved to be the most difficult part of this project, but it produced a very cool result once we figured out how it worked.
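To give a sense of the keyword idea described above, here is a minimal sketch written in Python rather than Processing. It is not our project code and it does not call Twitter at all: the `Tweet` class, the `most_recent_match` function, and the sample tweets are all hypothetical, made up only to show how "find the most recent tweet for a keyword" can work on a list of tweets that has already been fetched.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class Tweet:
    """Hypothetical stand-in for a tweet: just its text and a timestamp."""
    text: str
    created_at: datetime


def most_recent_match(tweets: List[Tweet], keyword: str) -> Optional[Tweet]:
    """Return the newest tweet whose text contains the keyword, or None."""
    needle = keyword.lower()
    # Case-insensitive substring match on the tweet text.
    matches = [t for t in tweets if needle in t.text.lower()]
    # Pick the match with the latest timestamp; None if nothing matched.
    return max(matches, key=lambda t: t.created_at, default=None)


# Made-up sample data (assumption: real tweets would come from the API).
sample = [
    Tweet("Breaking news: rain expected this weekend", datetime(2019, 5, 1, 9, 0)),
    Tweet("Lunch was great today", datetime(2019, 5, 1, 12, 0)),
    Tweet("Local NEWS roundup for Tuesday", datetime(2019, 5, 1, 11, 30)),
    Tweet("Climate march draws thousands", datetime(2019, 5, 1, 10, 0)),
]

latest = most_recent_match(sample, "news")
print(latest.text if latest else "no tweet found")
```

Changing the keyword from `"news"` to `"climate"` is the same kind of one-word tweak that would turn the project into a climate-focused one.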

Physical Project-

We were originally going to laser cut the physical box, but the map we used was far too large for the laser cutter. Because of this, we decided to use cardboard, which meant that our original plan of making the project something you could step on would not work. Cardboard still proved to be a better option, because the project would last longer through testing and presenting. After we added supports to the bottom, the project was very sturdy. The only real problem was integrating the buttons and LEDs. A lot of hot glue was necessary.

Fabrication-

Our original fabrication plan was to print a compass and have it spin with a servo motor. We had the compass printed, but when we came back for it after finishing the rest of the project, it was nowhere to be found. In a mild state of panic, we decided to use the old printed parts from our midterm project to create another compass. While we were disappointed to have lost the original compass, our makeshift replacement did the job.

User Testing

Our physical project did not change very much as a result of user testing. The buttons for Australia and the world did not work, so we had to fix that. The main change came in the interface that the viewer saw in Processing. We originally had the webcam running, and the tweet would pop up next to the user’s face. The idea behind this was to highlight the inclusivity of Twitter, where everyday people are able to voice their opinions. This was not received as well as we had hoped during user testing. We loved the feedback we received, and it definitely moved our project to a higher level. It was suggested that we change the interface to resemble the physical map and have the tweets pop up over the continent that the user pressed, and we decided to do so. This was to give the project more cohesion, and I think it paid off.

Conclusions

I really enjoyed making this project. The interaction between the user and the project was interesting because it took the familiar idea of Twitter and put it into a new kind of interaction. Our final project received very good feedback. People were interested in continuing to interact with it because of the constant updates of information. If I continued this project, I would make the physical display more like our original idea of a carpet. I would also like to keep working with the Twitter API, to see what kinds of projects can be made with it and what other ways we can spread news.