https://ml5js.github.io/ml5-examples/p5js/Sentiment/

The sentiment analysis demo uses a fairly simple model. You type in words or sentences, and it rates the sentiment of what you wrote on a scale from 0 (unhappy) to 1 (happy). It works as expected if you play along and write plainly sad or happy words. However, if you challenge the model and push its boundaries, you can see that it struggles with context.

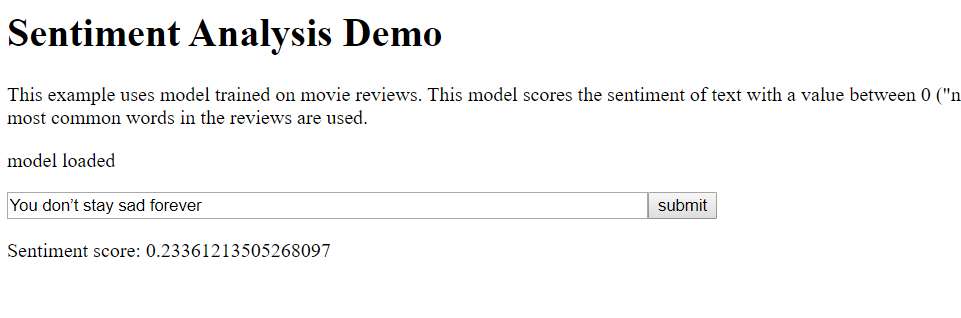

I first tried the sentence “You don’t stay sad forever”, which scored 0.0682068020105362. In other words, the system judged the sentence to be unhappy. However, anyone who reads it will recognize that it actually carries a positive sentiment.

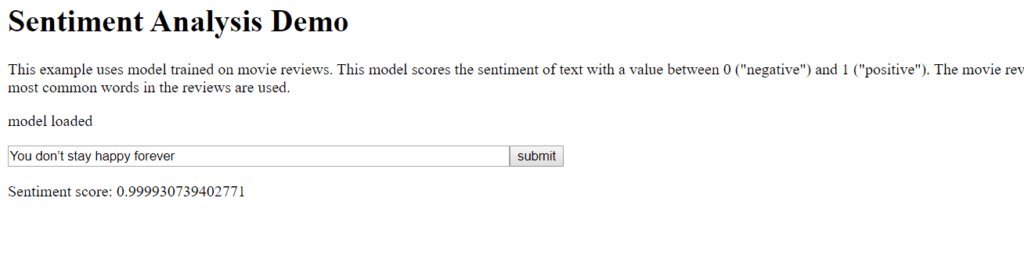

Then I thought the system must be picking up on individual words that it considers happy or sad, so I changed the word “sad” to “happy”. This resulted in a sentiment score of 0.9993866682052612. Judging by the score alone, “You don’t stay happy forever” would be one of the happiest things you can say to a person. However, we all know that it is a pretty sad quote.
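To make that hunch concrete, here is a minimal Python sketch of a scorer that only looks at individual words. It is not how the ml5 demo works internally (the demo uses a trained model); the word list, the weights, and the `toy_sentiment` function are all made up for illustration. It just shows how a word-by-word approach would produce the same kind of mistake.

```python
import math
import re

# Hypothetical word weights, invented for this illustration only.
# Negative values pull the score toward 0 (unhappy), positive toward 1 (happy).
WORD_WEIGHTS = {"sad": -3.0, "happy": 3.0}


def toy_sentiment(text: str) -> float:
    """Score text from 0 (unhappy) to 1 (happy) by summing per-word weights."""
    words = re.findall(r"[a-z']+", text.lower())
    # Words that are not in the list, including "don't", contribute nothing,
    # so negation never changes the result.
    total = sum(WORD_WEIGHTS.get(word, 0.0) for word in words)
    # Squash the sum into the 0..1 range with a logistic function.
    return 1.0 / (1.0 + math.exp(-total))


sad_score = toy_sentiment("You don't stay sad forever")
happy_score = toy_sentiment("You don't stay happy forever")
print(sad_score, happy_score)
```

With these made-up weights, the “sad” sentence lands below 0.5 and the “happy” sentence lands above it, even though a human would read them the other way around. Because the scorer treats “don't” as a neutral word, it has no way to notice that the meaning has been flipped.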

I think models like these give us a glimpse of what the future of assistants like Bixby and Siri might look like. Things like sarcasm and context are very difficult to program; even some humans have trouble understanding them. If we can work out how people interact, and in what context, we could possibly transfer that knowledge to our machines and create AI companions for fields like healthcare, customer service and so on.