GitHub: https://github.com/luunamjil/AI-ARTS-midterm

For the midterm, I created an interactive sound visualization experiment using posenet. I downloaded a library called “Simple Tones”, which can play simple sounds at a range of pitches. The user chooses which sound to play by moving their left wrist along the x-axis. The project was inspired by programs such as Reason and FL Studio, as I like to create music in my spare time.

I originally planned to create a framework for webVR on A-Frame using posenet, but the process turned out to be too difficult for my current understanding of coding. The idea itself is relatively doable compared to my initial proposal, but I still needed more time to understand how A-Frame works and the specific coding that goes into a 3D environment.

Methodology

I used the professor’s week 3 posenet example 1 as the basis for my project. It already contained the code that lets the user paint circles with their nose. I wanted to incorporate music into the project, so I looked online and came across an open-source library of simple sounds called “Simple Tones”.

I wanted the position of my hand in the posenet framework to play sounds. Therefore, I decided that the x-coordinate of my left wrist would determine the pitch.

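    if (partname == "leftWrist") {
      if (score > 0.8) {
        // Play a tone whose pitch follows the wrist's x position.
        playSound(square, x * 3, 0.5);

        // Pick a random position on the canvas for the circle
        // (randomNumber is a helper that is not shown here).
        let randomX = Math.floor(randomNumber(0, windowWidth));
        let randomY = Math.floor(randomNumber(0, windowHeight));
        console.log("x" + randomX);
        console.log("y" + randomY);

        // Draw a circle whose diameter scales with the wrist's x position.
        graphic.noStroke();
        graphic.fill(180, 120, 10);
        graphic.ellipse(randomX, randomY, x / 7, x / 7);
      }
    }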

The “playSound” function and its arguments come from the Simple Tones library. Because the raw x-coordinate might not be high enough to reach certain pitches, I decided to multiply it by 3. The left side gives a high pitch, while the right side gives a low pitch.
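The pitch mapping described above can be sketched as a small helper. This is only an illustration, not the project's actual code: the name `xToFrequency` and the clamp limits of 20 Hz and 2000 Hz are assumptions, and only the multiplier of 3 comes from the snippet above.

```javascript
// Hypothetical helper: turns a wrist x-coordinate (in pixels) into a frequency (in Hz).
// The multiplier of 3 matches the project code; minHz and maxHz are assumed limits
// that keep the result inside a comfortable audible range.
function xToFrequency(x, multiplier = 3, minHz = 20, maxHz = 2000) {
  const raw = x * multiplier;
  // Clamp so that very small or very large x values still give a usable pitch.
  return Math.min(maxHz, Math.max(minHz, raw));
}
```

With a helper like this, the call in the snippet could become `playSound(square, xToFrequency(x), 0.5)`, which keeps the scaling rule in one place if the multiplier needs tuning later.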

I ran it by itself and it seemed to work perfectly.

After some experimentation, I also wanted some visual feedback to represent what is being heard. I altered the graphic.ellipse call so that the circle's size follows the x-coordinate of the left wrist: the higher the pitch (the further left on the axis), the bigger the circle.

The end result is something like this. The color and sounds that it produces give off the impression of an old movie.

Experience and difficulties

I really wanted to add a fading effect to the circles, but for some reason the sketch would always crash whenever I wrote a “for” loop. I looked into different ways to produce the fading effect, but I wasn’t able to include any of them in the code.

I would also like to improve the visual appearance of the UI. It looks basic and could use further adjustment. However, this is currently as much as my coding skills can provide.
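One possible way to get a fading effect is to store each circle with an opacity value and lower it a little on every frame. The sketch below is a minimal illustration under that assumption; the function `fadeCircles`, the circle object shape, and the step size are all hypothetical and are not part of the project.

```javascript
// Hypothetical sketch: each circle is a plain object { x, y, d, alpha },
// where alpha runs from 255 (fully opaque) down to 0 (invisible).
function fadeCircles(circles, step = 5) {
  return circles
    // Lower every circle's opacity by one step.
    .map((c) => ({ ...c, alpha: c.alpha - step }))
    // Drop circles that have fully faded so the list does not grow forever.
    .filter((c) => c.alpha > 0);
}

// Assumed usage inside a p5.js draw() function:
//   circles = fadeCircles(circles);
//   graphic.clear();
//   circles.forEach((c) => {
//     graphic.fill(180, 120, 10, c.alpha); // fourth argument is the opacity
//     graphic.ellipse(c.x, c.y, c.d, c.d);
//   });
```

Because the work is spread across frames, each call only touches the circles that are still visible, which may avoid the heavy loops that can stall a sketch.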

This idea and concept did seem to be a very doable task at first, but it required a lot more skill than I expected. However, I did enjoy the process, especially the breakthrough moment when I could hear the sounds reacting to my movement.

Overall, I have now learned how to use the position of a body part to trigger an action. Going further, I do want to work on the webVR project, and this experience should help me understand and implement it.

Social Impact:

In the process of my midterm, I worked on two different projects. The first was pairing WebVR with posenet as a way to control the VR experience without the equipment that is normally required. The second was the one I presented in class: a theremin-inspired posenet project. Although I only managed to complete one of them, I believe that both projects have a lot of potential for social impact.

First, let’s talk about the WebVR project. The initial idea was to make VR more inclusive by letting people who cannot afford the equipment experience it. The HTC Vive and other well-known headsets all cost over 3,000 RMB. By allowing posenet to be used inside WebVR, we can let anyone with an internet connection experience VR. Obviously, the experience won’t be exactly the same, but it should be similar enough.

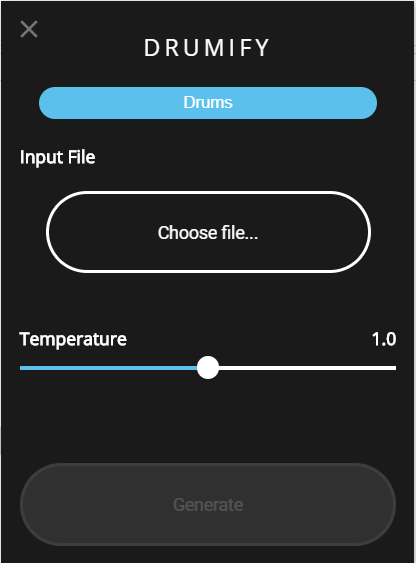

Secondly, the theremin-inspired project. I found out about the instrument a while back and thought to myself, “What an interesting instrument!” While the social impact of this project isn’t as important or serious as that of the previous one, I can see people using it to get a feel for, or an understanding of, the instrument. The theremin differs from traditional instruments in that it is more approachable for children, or anyone for that matter. It is easy to create sounds with the theremin, but it has a very steep learning curve. A project like this lets people of any background experience music and sound without buying the instrument.

Future Development:

For the first project, I can see it developing into an add-on that works with every WebVR project. For this to become a reality, one needs an extensive understanding of the A-Frame framework. With that understanding, one could develop the tools needed to integrate the external machine learning program. The machine learning algorithm also needs to be more accurate so that as many functions as possible can be used.

For the second project, I can see music classes using it to explain the concepts of frequency and velocity to younger children or to those with beginner knowledge of music production. It gives these people a visual and interactive experience. In the future, it would be possible to add velocity and volume values for each point along the x- and y-axes to make the sounds more quantifiable for the person using it. The types of sounds that can be played could also be placed in a sidebar for the user to pick from.
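One possible reading of the volume idea is that the wrist's y-coordinate would control how loud the sound is. The sketch below illustrates that mapping only; the function name `yToVolume` is hypothetical, and the source does not confirm whether Simple Tones exposes a volume control, so connecting the result to the sound is left open.

```javascript
// Hypothetical helper: maps a wrist y-coordinate (in pixels) to a volume from 0 to 1.
// Assumes y = 0 is the top of the canvas, so raising the hand makes the sound louder.
function yToVolume(y, height) {
  if (height <= 0) return 0; // guard against an uninitialised canvas size
  // Keep y inside the canvas before scaling.
  const clamped = Math.min(height, Math.max(0, y));
  return 1 - clamped / height;
}
```

Displaying the returned value next to the frequency would give the user the kind of numeric feedback described above.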