Link to video: https://youtu.be/X-HujM0LWVg

Background:

Without a doubt, the entertainment industry is a big part of everyone’s lives. As we become more and more connected to the larger digital landscape, media such as movies and music will stimulate our imagination and inspire us even more. Many people relate their everyday lives to pop culture, and I’m no different. As a fan of the sci-fi genre, I have always imagined Shanghai at night to be incredibly breathtaking. When I listen to synthwave and look out my window, I imagine Shanghai as a city from a popular movie such as TRON or Blade Runner.

One reason is that gentrification has become a topic of discussion among the city’s residents. Parts of the city have been gentrified to make room for shopping malls and high-rise buildings, and the Chinese government has started to experiment with facial recognition technology and social credit scores. Although technological development is extremely important for society as a whole, these changes are also reminiscent of dystopian science fiction such as 1984 by George Orwell and Blade Runner. Synthwave and the cyberpunk genre show what the future of society could look like if technological development continues without regard for its impact on humanity. In a way, the scenery and aesthetics of the genre are extremely beautiful, but only on the surface. If one were to look past the eerie beauty, one would find that it is not as perfect as it seems.

Motivation:

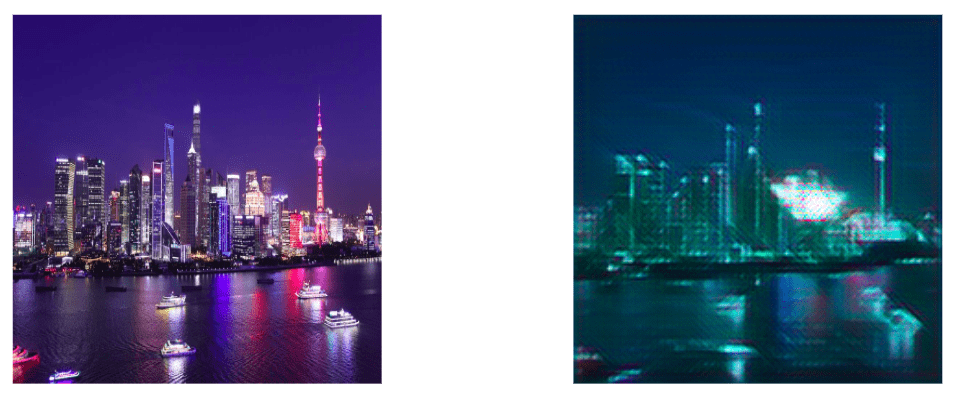

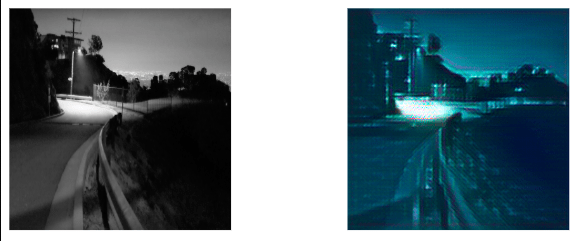

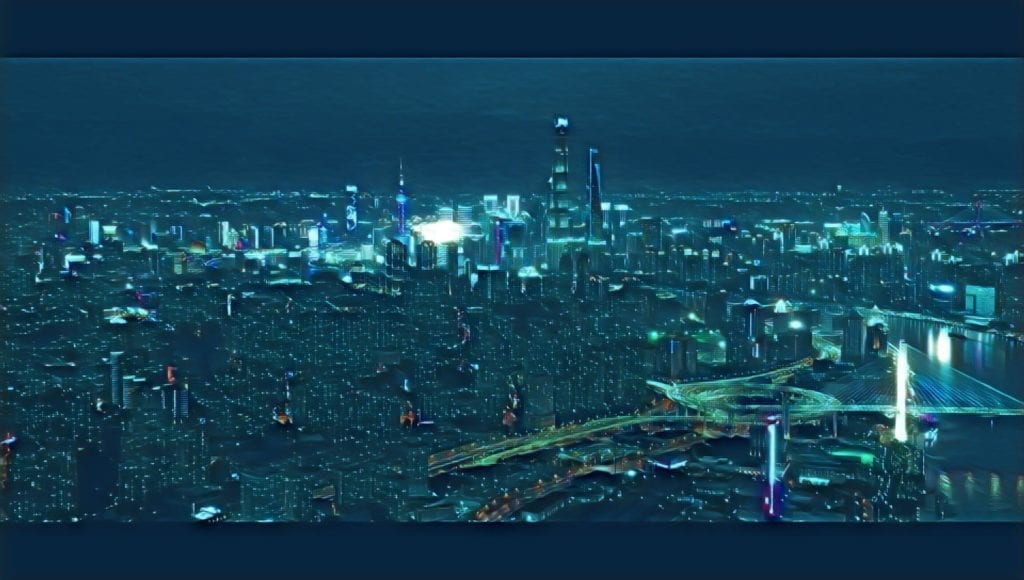

I wanted to create a video project using the style transfer model that was given to us, training it on a series of different images. The images themselves were cityscapes reimagined to be futuristic. An example is the first image above, a frame pulled from one of the videos I incorporated into my project. Its style was transferred from a TRON landscape that I pulled from the internet. We spent a portion of the class on style transfer, and it really interested me. Is it truly art if you are just imitating the style of something and applying it to something else? If not, then what could its purpose be?

My motivation was to explore our newfound ability to reproduce the styles of popular media through machine learning, and to produce a video that would show, sonically and visually, what I see in Shanghai’s nighttime. Furthermore, I wanted to explore how style transfer can interact with media and help shape it. In this case: “Can style transfer be used for a music video to evoke the same feelings or perceptions as the style that is being transferred?”

Methodology:

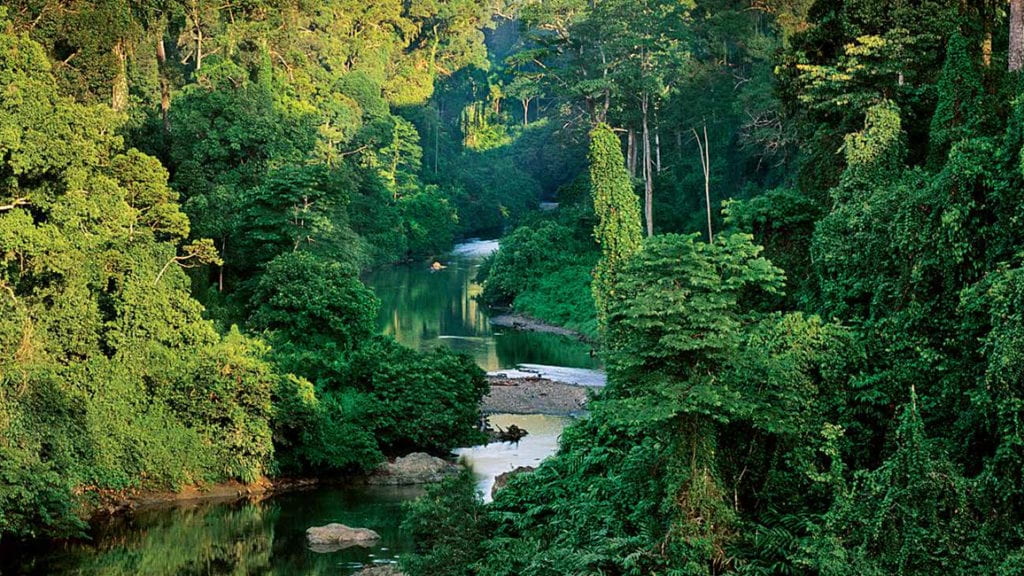

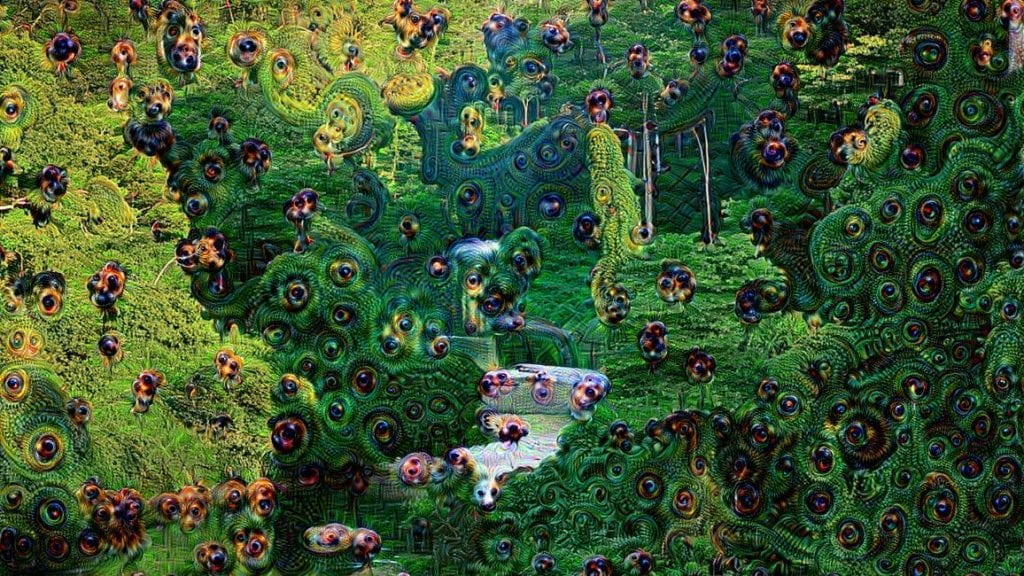

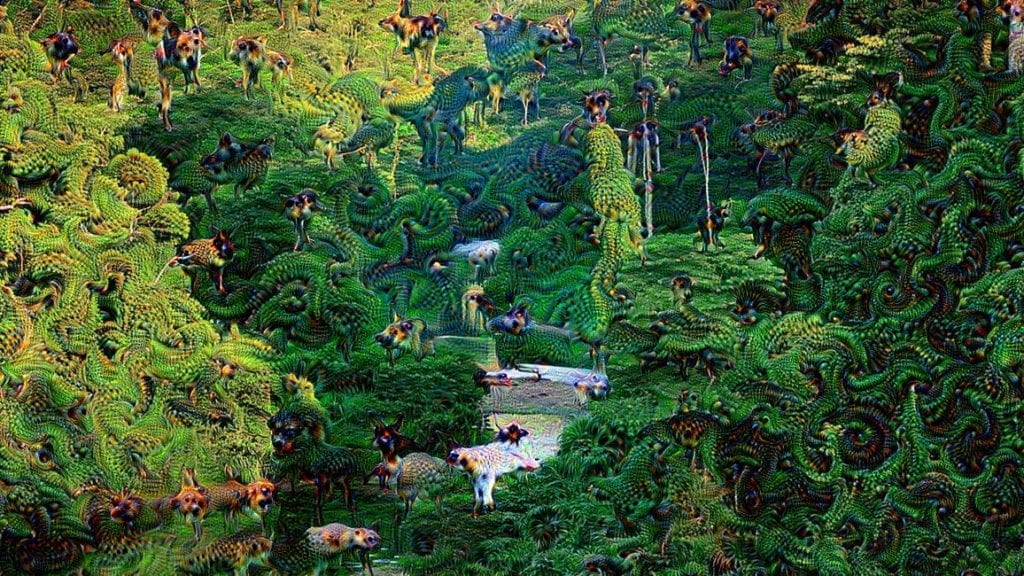

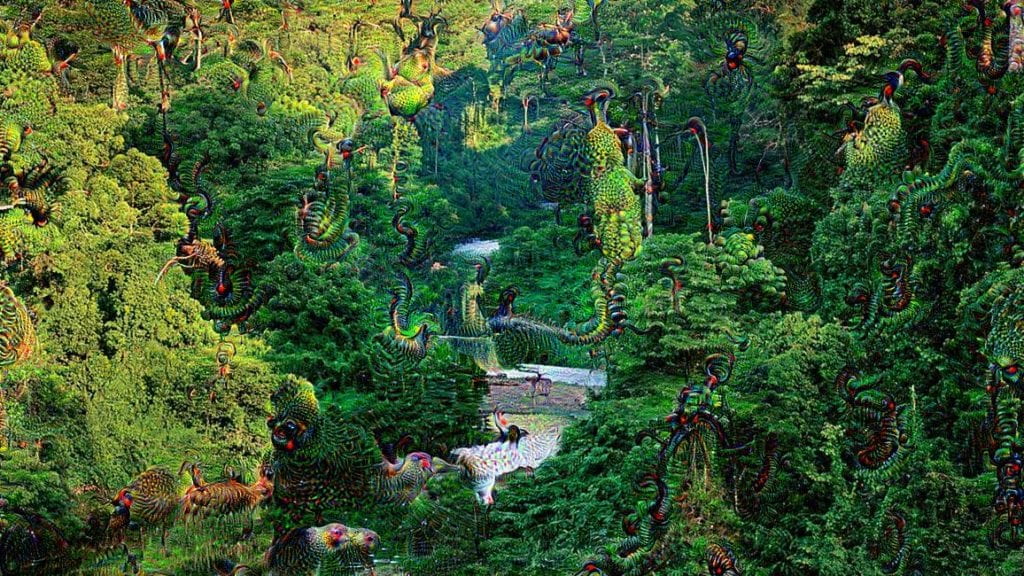

At its core, the methodology for the project is style transfer. The important part is training the model with pictures that are most representative of the cyberpunk genre. The images look something like this:

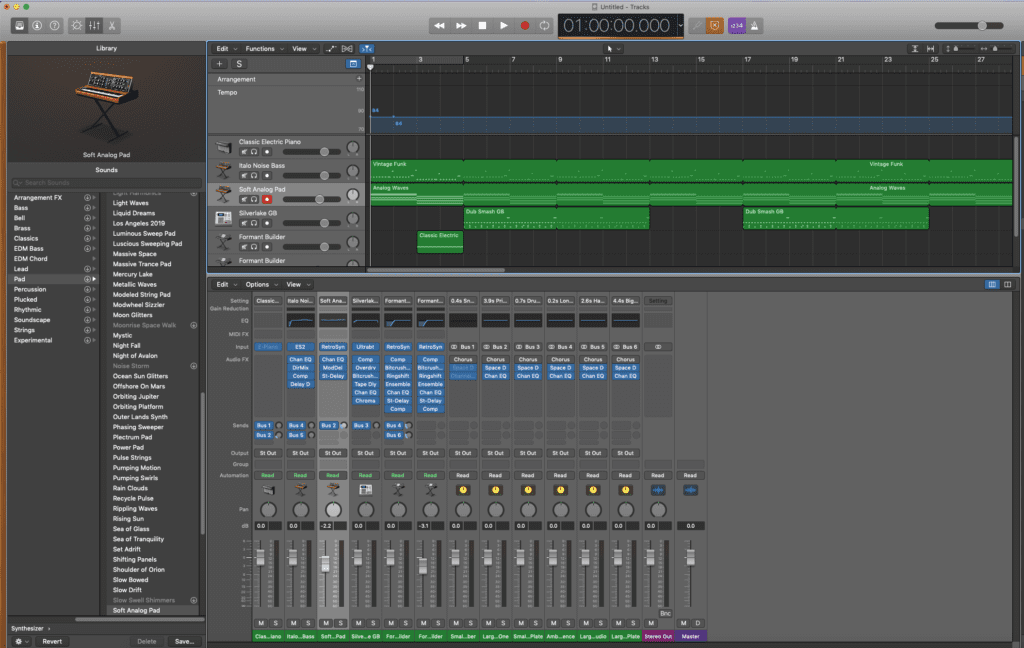

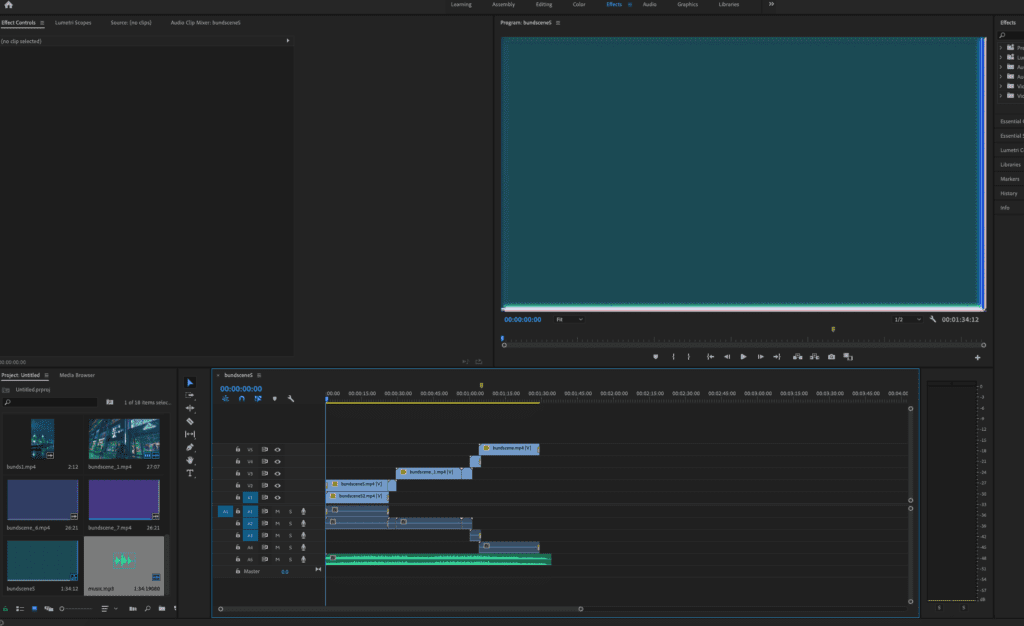

These images were all pulled off the internet by searching “cyberpunk cityscape”. But just transferring images was not enough; I wanted to create videos indicative of my vision for Shanghai. So Adobe Premiere, VLC, Logic Pro (for the music), and Adobe After Effects were all involved in the process of making the video. Devcloud also played an important role by allowing me to complete most of my training and conversion in a manageable amount of time.

Experiments:

First Iteration:

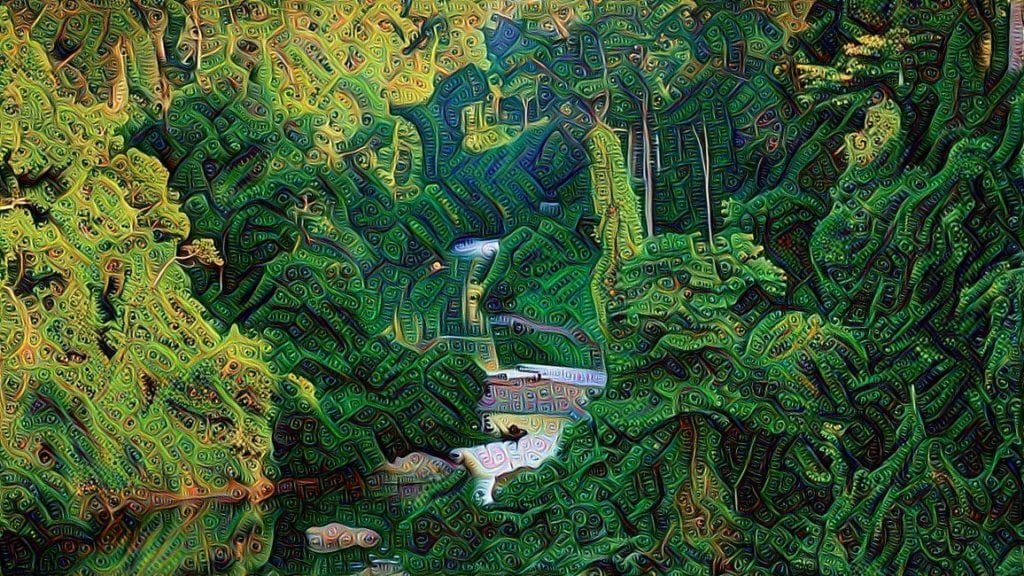

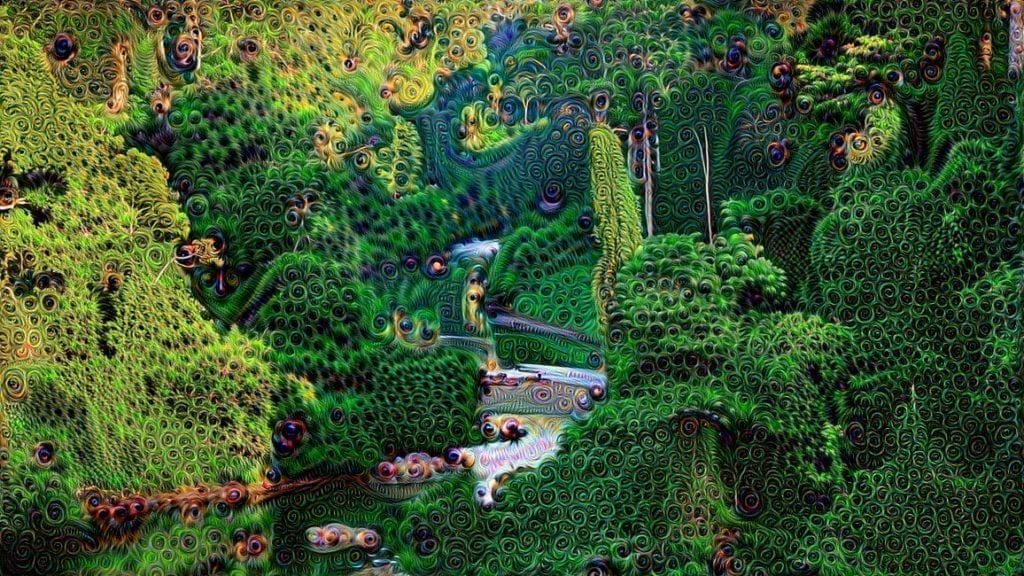

For my very first iteration of the project, I ended up creating a lo-fi video. I trained the models with the original three photos that I added to this post. The results were decent, as you can see from the example below:

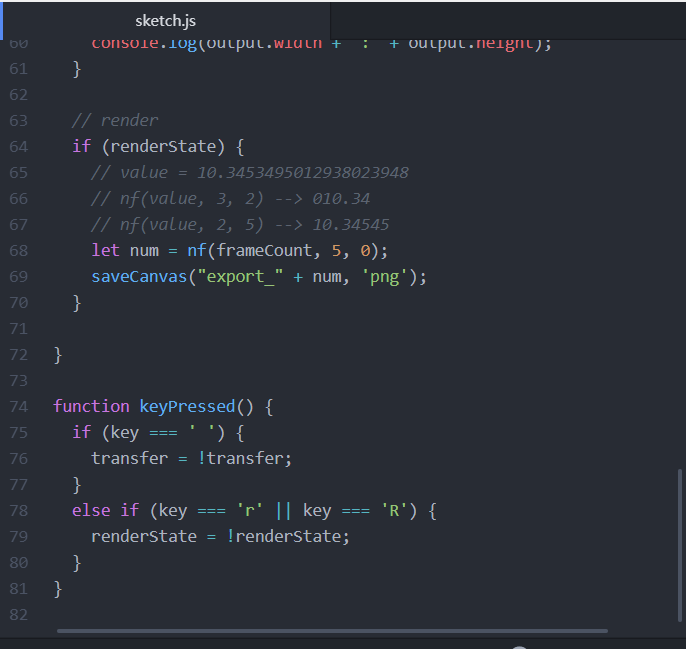

For the first time, I was actually converting an image into multiple different styles that I had trained myself. I thought it was amazing! However, it was now time to find a way to convert the videos that I had into individual frames that would ideally result in a high fps video. After consulting with the IMA faculty present at the time, I ended up going with the “saveCanvas” function.

This allowed me to save the frames that were being played from the transferred video set. The issue with this technique was that it resulted in a very low fps video that looked and felt extremely glitchy. While the frames were saving, it also saved the frames that were stuck in the process of transferring style, so for some videos I ended up with more than 500 frames of just static imagery. The quality of the style transfer itself didn’t help much either. I later added the frames into Adobe Premiere and turned them into a whole video. I also wanted the video to evoke the feelings of the cyberpunk genre, so I downloaded a song from the internet called “Low earth orbit” by Mike Noise. The end result was a video that fundamentally did what I wanted it to, but at a much lower quality than I needed.
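One way to deal with runs of static frames like these could be to drop any frame that is identical to the one kept just before it, before importing the folder into an editor. The following is a minimal Python sketch of that idea rather than the process used for this project. The function name `unique_frame_paths`, the zero-padded file names, and the assumption that stuck frames are saved as byte-for-byte identical files are all hypothetical.

```python
import hashlib
from pathlib import Path


def unique_frame_paths(folder, pattern="*.png"):
    """Return frame paths in name order, skipping repeats of the previous kept frame.

    Assumes zero-padded names (frame_000.png, frame_001.png, ...) so that
    sorting by name matches playback order, and assumes a "stuck" frame is
    saved as a byte-identical copy of the frame before it.
    """
    kept = []
    last_digest = None
    for path in sorted(Path(folder).glob(pattern)):
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        if digest != last_digest:
            # Content changed since the last kept frame, so keep this one.
            kept.append(path)
            last_digest = digest
    return kept
```

Only consecutive repeats are dropped, so a shot that legitimately returns to an earlier image later in the video is left alone. Frames that differ by even one pixel would not be caught by this check.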

Second Iteration:

For the second iteration, I took the advice the critics gave me and incorporated it into my project. More than that, though, I really wanted to increase the quality of the videos that I put together.

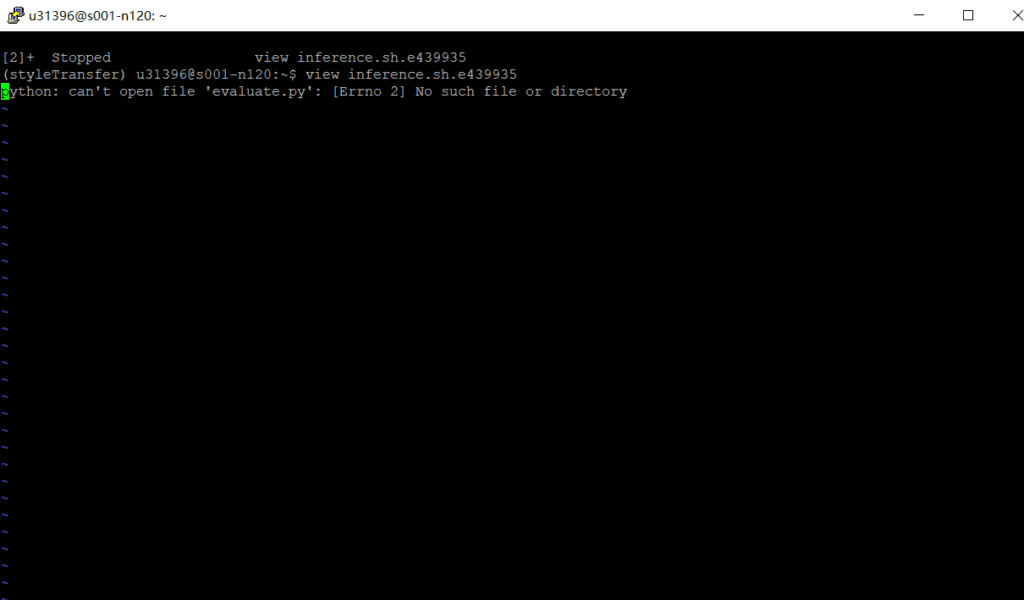

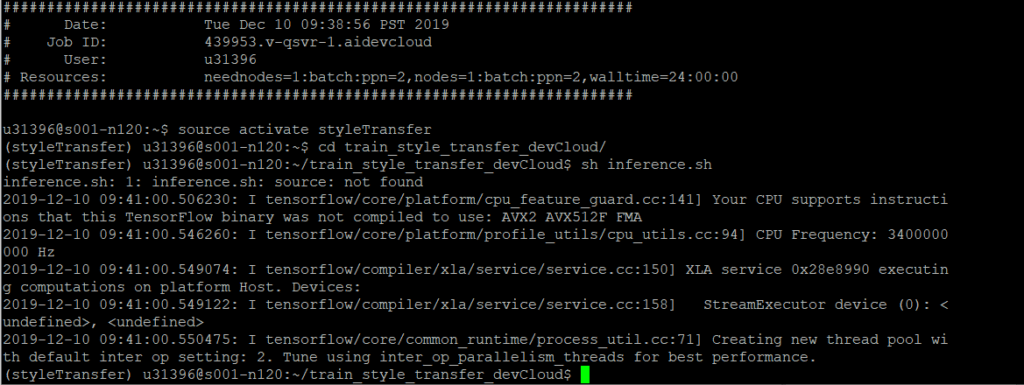

This time I started by experimenting with the inference.py file found in our devcloud folder for style transfer.

The first time I tried, I didn’t quite understand the code that needed to be written. I understood that I had to direct it somewhere to get it to work, but I didn’t realize what exactly I needed to input. So I failed quite a few times and got quite disheartened. But after a couple more rounds of failure I cracked it, and I ended up with ABSOLUTELY STUNNING results.

Results:

The quality exceeded my expectations and provided me with a way to produce breathtaking results. More importantly, I was able to keep the resolution of the original file without the model squashing it into something that was not ideal.

Later on, I decided I needed to transfer the frames efficiently so that my transferred video could reproduce the high frame rate of the original video.

I used VLC and played around with the Scene Filter option, which allowed me to automatically save frames to a designated folder. I ended up saving over 500 frames per video, with some reaching 1200 frames. The important part of the process was to collect these frames, upload them onto devcloud, and run the style transfer inference.py on them. This way, I was able to produce 3+ styles for every single video that I had. Safe to say that this process took most of my time, as I uploaded, transferred, and downloaded the frames while devcloud’s connection was only pulling a low 20kbps at times.
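To give a sense of how the work multiplies, the bookkeeping for this step can be sketched as a list of jobs, one per frame and style. This is only an illustration under assumptions: the function `plan_jobs`, the style names, and the output folder layout are hypothetical, and the actual call to inference.py is left out because its arguments are not shown in this post.

```python
from pathlib import Path


def plan_jobs(frame_names, styles, out_root="styled"):
    """List one (frame, style, output path) job per frame per style.

    The output layout <out_root>/<style>/<frame> is an assumed convention
    that keeps each style's frames in their own folder.
    """
    jobs = []
    for style in styles:
        for name in frame_names:
            out_path = Path(out_root) / style / name
            jobs.append((name, style, out_path.as_posix()))
    return jobs


# Example: two frames and two hypothetical styles give four jobs.
jobs = plan_jobs(["scene00001.png", "scene00002.png"], ["tron", "neon"])
print(len(jobs))
```

With 1200 frames and three styles, the same function would list 3600 jobs for a single video, which is why the upload and download time added up so quickly.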

After downloading all the style-transferred frames, I brought them into Adobe After Effects and imported all the frames from one style and video as an image sequence. This automates the process of creating a high-res video with the images in the correct order, at a frame rate matching the original video. I did this for all 28 folders. I ended up choosing only certain styles and videos for the final product, as some of the styles didn’t look different enough while others were too far from my intended cyberpunk theme.
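An image sequence depends on two things: the frames being in the right order, and the frame rate used to play them back. The sketch below shows both in Python under stated assumptions. The helper names `natural_key` and `clip_seconds` are hypothetical, and the 30 fps in the usage lines is an assumed value, since the frame rate of the source videos is not stated here.

```python
import re


def natural_key(name):
    """Sort key that compares the digit runs in a file name as numbers.

    With plain string sorting, "frame10.png" comes before "frame2.png";
    this key puts frame 2 first.
    """
    parts = re.split(r"(\d+)", name)
    return [int(part) if part.isdigit() else part.lower() for part in parts]


def clip_seconds(frame_count, fps):
    """Length of a clip in seconds when frame_count frames play at fps."""
    return frame_count / fps


frames = sorted(["frame10.png", "frame2.png", "frame1.png"], key=natural_key)
print(frames)
print(clip_seconds(1200, 30))  # 30 fps is an assumed frame rate
```

If the frames are already zero-padded, as in `scene00001.png`, ordinary sorting gives the same order and the natural key is only a safeguard.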

I also added footage from a YouTube video with a drone’s perspective of the Pearl Tower, an iconic piece of architecture indicative of Shanghai. I chose the frames from that part of the video (800 frames) and added them to my video. This piece had a neat detail: text from the original video, which said “The greatest city of the far east”, was style transferred along with it. I thought that was extremely cool, so I left it in the final cut.

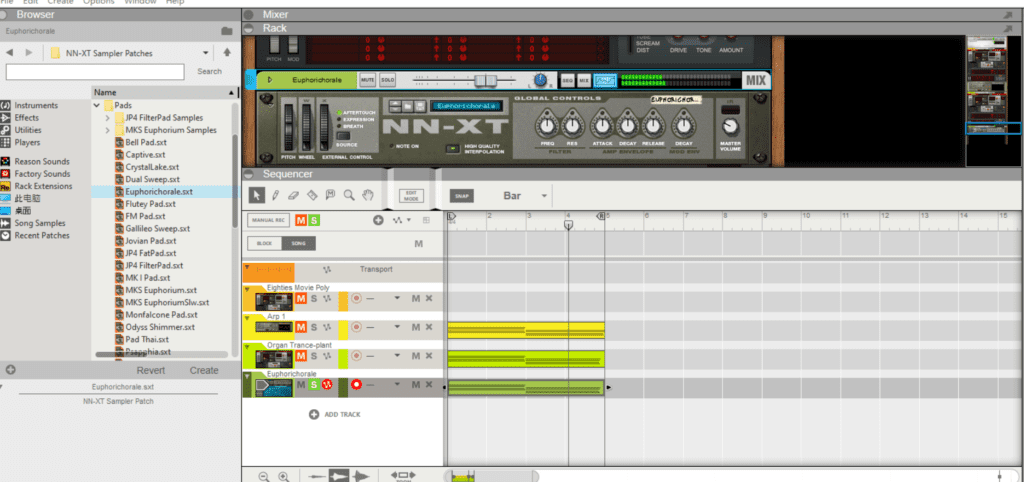

I wanted to add music, so I opened my own copy of Reason and tried different ideas.

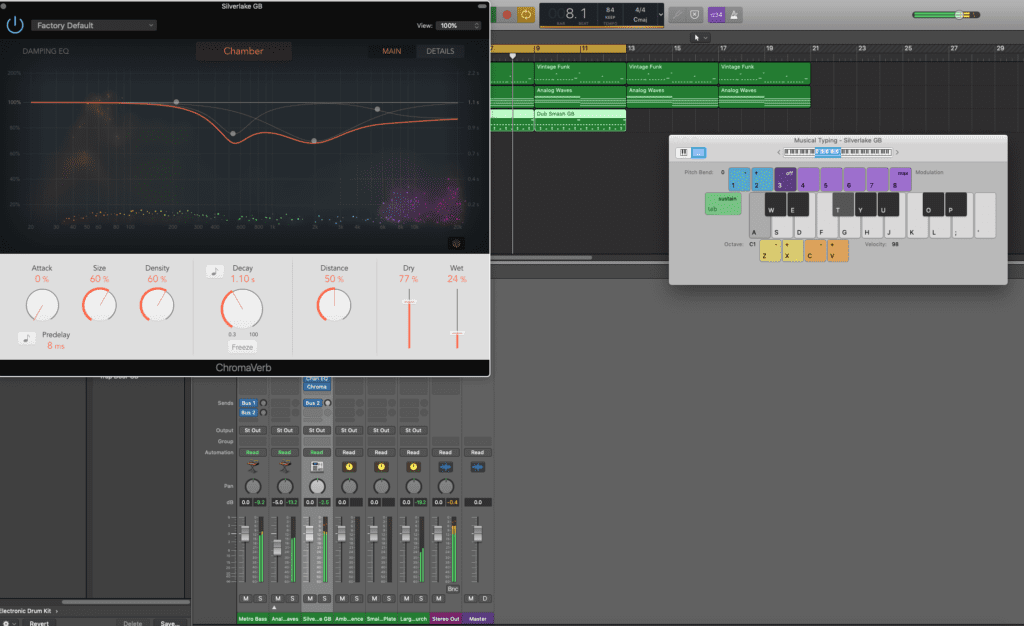

I tried different sound presets and got close to what I wanted, but not exactly. I then moved to Logic Pro on the school’s computers to use its presets, which resulted in the music that you hear in the final piece. I added reverb and an EQ to cut out some of the lower frequencies that clashed with the dominant bass.

After that, it was just a matter of bringing everything into Adobe Premiere to turn it into a whole video. I added multiple transitions and effects to make the cuts from video to video smoother.

I also layered differently styled versions of the same video on top of each other and changed the opacity so that it would seamlessly change styles, from the all-blue TRON look to a more colorful, neon-esque hue from a different style.
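The opacity change described above amounts to a weighted average of the two layers, assuming a normal blend mode: at opacity 0 only the bottom style shows, at opacity 1 only the top one. Here is a small Python sketch of that arithmetic on single RGB pixels. The function names and the pixel values are made up for illustration and do not come from the editing software.

```python
def blend_pixel(bottom, top, opacity):
    """Mix two RGB pixels, where opacity is the weight of the top layer (0 to 1)."""
    return tuple(
        round((1 - opacity) * b + opacity * t) for b, t in zip(bottom, top)
    )


def opacity_ramp(n_frames):
    """Evenly spaced opacity values from 0.0 to 1.0 across n_frames frames."""
    if n_frames < 2:
        return [1.0] * n_frames
    return [i / (n_frames - 1) for i in range(n_frames)]


# A blue pixel fading toward a pink one over five frames (made-up colors).
blue, pink = (0, 0, 200), (100, 50, 200)
for opacity in opacity_ramp(5):
    print(opacity, blend_pixel(blue, pink, opacity))
```

Spreading the ramp over more frames makes the change between styles slower and less noticeable.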

The music also lasts a bit longer, and at the end I made the instruments stop playing one by one until only one was left, which then fades out.

The resulting video was what I had hoped for and left me very proud of the work that I did.

Social Impact:

The social impact of this video is not that significant in my opinion. The video was created as a way for me to show others how my imagination thinks of Shanghai. Therefore, this video is a bit more personal than my midterm project.

Nevertheless, the project fundamentally challenges what it means to be artistic. I think that the usage of style transfer is extremely important. What is real art when style transfer is involved? Is my video considered art when I incorporated someone else’s style into my project? Artistically, I think the machine learning model can produce some amazing results. However, I believe it also raises some very important questions about the usage of style transfer, such as the question of originality and plagiarism.

This model does show a lot of promise if used in the correct way, such as providing an infinite source of inspiration for artists who train the model on their own work. An example is Roman Lipski’s Unfinished. I can also see someone creating their own set of images to train the model on, and then expressing their vision of the world around them through style-transferred videos. Artistically, I also think that it can help with the creation of movies and music videos.

Further development:

Some further development for this project could potentially include further refinement of the model. We can try to make the model produce something as close to the original image as possible with minimal loss. Further development could also include incorporating acting and more shots of humans and how they interact with the style transfer model. More aerial shots can also help with the video and produce something much more focused on the cityscape.

I mostly used night shots of the city in my video. Style transferring daytime images of the city produced very lackluster results: it mostly turned the screen green or blue, which wasn’t very satisfying. I believe this has something to do with the style image that the model was trained on. The original that I used to train the model was very dark in overall tonality, so it might have helped that I used night pictures of the city. Looking further into daytime shots and transferring them into nighttime shots could also help in the future.

References:

Drone video of Pearl Tower https://www.youtube.com/watch?v=NOO8ba58Fps

Drone video of Shanghai https://www.youtube.com/watch?v=4nIxR_k1l30