Group Mates: Karen Zhang, Sharon Xu, Alison Frank

Project Partner: Kenneth Wang

Sharon Xu:

The Project

Sharon’s final project is based on the traditional mask-changing performance that originated in Sichuan opera. The title is still undecided, so I will refer to the project as “Bian Lian” (变脸, the Chinese name for the performance) throughout this documentation. To my understanding, Sharon intends to create a project that works much like the face filters in Snapchat and Snow. Like those applications, her software will recognize human faces and cover them with different masks drawn from the history of 变脸. Using OpenCV, she will let the user choose among these masks through a sensor that responds to hand movements. Users will be able to record videos of their performances, and Sharon will also provide wardrobe pieces for them to personalize and wear in their 变脸 videos. The videos can then be set to background music and sent to the user by AirDrop or email. The purpose of the project, as Sharon explains, is to introduce more people to Chinese culture and to the many beautiful traditions that Sichuan and its performances can show the rest of the world, raising awareness across countries and identities, an aim I truly admire.

The Feedback

Karen, Alison, and I all enjoyed Sharon’s idea of crossing borders and the learning aspect of her project. We thought the project would work even better if it put more emphasis on teaching the 变脸 dance and its culture. Our main suggestion was for Sharon to display text alongside each mask, giving the user additional information about its cultural background. We also suggested making the point of interaction more intuitive and creative by placing the sensor next to the camera. At the very end, though the idea may be difficult to realize, we left her with the question of whether she could bring other cultures into the project, and we asked whether she could add different forms of interaction. Overall, Sharon’s project conforms to her own definition of interaction: it must enable users to create art and also reflect on the relationship between humans and machines. This definition is not necessarily different from my own, but it does provide a new angle on an ambiguous concept.

Karen Zhang:

The Project

Karen’s project is the “Pickmon,” a play on the words “Pokémon” and “pick,” which is exactly what the project entails. Karen and her partner will create software that assigns the user one of four Pokémon based on their heartbeat and their answers to a set of questions. It is meant to evoke childhood memories of Pokémon and of the Sorting Hat from Harry Potter. The final result will be presented to the user in 3D-printed poke boxes, a detail I find adorable and one that shows a good understanding of visual appeal. From my perspective, it will give the user something truly personalized, which I admire. The project did, however, lack interactivity, and it did not give me much encouragement to keep using it.

The Feedback

In terms of feedback, we all pitched ideas that we thought would help Karen’s project draw more interest and compel other students to keep providing input, which is an aspect of my own definition of interaction. My main suggestion was that Karen could turn the project into a kind of personalized game in which two players go through various “tests” that determine their Pokémon. The tests would include the original heartbeat sensor as well as movement, button-pressing agility, and more. Once the results are determined from this input, the two Pokémon assigned to the users would “battle,” as in an episode of the Pokémon show. This would encourage users to keep playing in order to change their Pokémon and battle their friends again. Karen’s main definition of interaction is that the process must result in a reward for the user, which I believe she and her partner have achieved through Pickmon. Like Sharon’s definition, it gives me new insight into the meaning of interaction without contradicting my own.

Alison Frank:

The Project

Alison’s project, which has yet to be named, will revolve around audio. It will be a video game modeled on popular storytelling formats, in which the user’s decisions and actions are the main input and the focus of the story. She will follow the story written by her partner and use her coding skills to build the project, whose purpose is to test the relationship between interaction and exploration, and the connection between our senses and ourselves. The main point of interaction will be distance sensors that detect the user’s hand movements, letting the user continue the story with ease. The project aims to create a meaningful experience by placing users in the driver’s seat, giving them something to govern and control and freeing them from what one usually finds in a video game. To me, Alison and her partner seem to have everything organized and ready to build. I’ve always enjoyed user-driven storytelling games like Detroit: Become Human, and I am therefore excited to see the outcome of their project.

The Feedback

For the most part, Alison’s project received few suggestions from the group. We all enjoyed her idea of storytelling through audio, and rather than only making suggestions, we asked her more about the project so that she could clarify it for the group as well as for herself. We asked how she would implement the audio, where she would source it, and whether it would be copyrighted. We also suggested new forms of interaction that would further entice the user to keep playing through the story. Although the distance sensors seem interesting, I do not think they will be enough on their own to carry the project and meet its requirements. Similar to Karen, Alison emphasizes art and natural interaction in her definition of interaction. These are both aspects of interaction I had not previously considered but now agree with after this recitation.

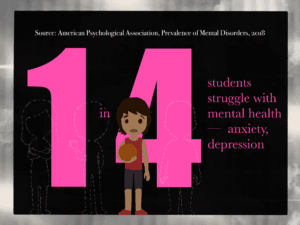

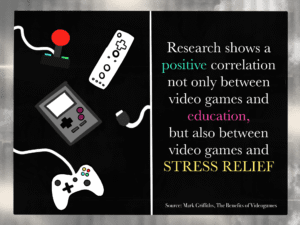

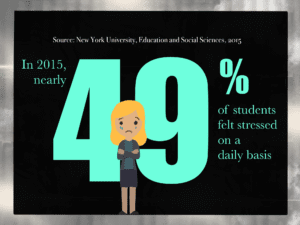

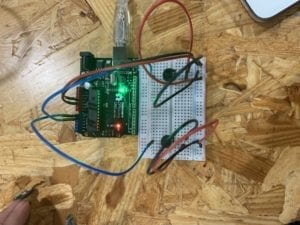

My Own Project: Feedback

The suggestions and advice I received from my tablemates were quite helpful to my overall project. For the most part, they liked the project’s focus on stress and anxiety and enjoyed the use of escape room mechanisms. They wanted me to watch the balance in difficulty: if users cannot succeed because the interface is too difficult, they will choose not to continue playing, but if the game is too easy and simple, they will also choose not to play. This balance will be difficult to achieve. They also suggested a joystick as the main point of interaction in place of the 2×2 button pad, advice that I will relay to my partner as soon as possible. They then suggested that there be multiple rooms for the user to escape from, which would create a sense of continuity and achievement. I know this will be difficult to achieve with Processing and Arduino, but Kenneth and I will consider it for our final proposal. According to my group members, the most successful aspect of my first proposal is the research I have done on NYU and the stress that students suffer from on a daily basis. They suggested that I add a page, shown after the user has successfully escaped the room, that explains why I created the project and presents this research so that the user can understand more about the subject. The weakest part was the point of interaction, because at the time Kenneth and I were still researching and exploring the forms of interaction we would use, which now include potentiometers, buttons, joysticks, a live camera feed, and more. For the most part, I wholeheartedly agree with the suggestions my group mates have provided, because they will allow me to create the project through a combination of all of our definitions of interaction, which is something I find quite beautiful.