Title: The Abyss

Group Members: Gabriel Chi, Kyle Brueggemann, and Celine Yu

Project Abstract: The Abyss is an audiovisual project that tests the limits of Lumia production using water and light. It builds a mysterious atmosphere that questions the stereotypes we hold about both the concrete and the abstract perceptions of water.

Project Description:

When the three of us began researching ideas for our midterm project, we knew we wanted to revisit our studies of the light art pioneer Thomas Wilfred. After rewatching his many works made with the Clavilux, we agreed that they somewhat resembled the imagery of water. From there, we unanimously decided that water would become the centerpiece of our project.

We paired water with a mysterious and unsettling atmosphere that challenges the status quo of both the connotations and the denotation of water. When people picture water, they most likely think of a serene and tranquil ambiance. In this project, we wanted chaotic renditions of chanting monks and ominous melodies to intrigue audience members as they watch water in motion. We wanted the contrast between our visuals and audio to challenge what most people associate with water in the real world, while letting the water signify change and the passage of time.

Perspective and Context:

Though our project is rooted in the concrete subject of water, the outcomes we create from that input neither resemble nor attempt to reference reality outside the screen. “The Abyss” is our take on abstract film and visual music: a non-narrative Lumia performance in which auditory and visual information are related through mutual entailment, so that neither is articulated without the other, similar to the effect of synesthesia.

Our concept for this project was heavily influenced by readings and documentaries about the work of Thomas Wilfred and his famous Clavilux Lumia machines. Wanting to follow in his footsteps, Gabriel, Kyle and I also designed a self-contained light art machine focused on the three most important elements of the “8th Fine Art” as Wilfred himself described them: form, color, and motion. To him, in any form of light art, “motion is a necessary dimension” (Orgeman 21), because it plays a crucial role in showcasing the true nature of light itself. While form and color were already part of our plan, motion had not been implemented in our project until after our research into Wilfred’s work. To address this, we introduced movement through the water itself by building a physical Lumia box containing the liquid, which we could manipulate in various directions and with varying force.

Development and Technical Implementation:

The development and technical setup of our project span three main areas: the audio, the physical Lumia box, and the visuals.

Audio

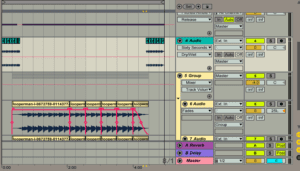

Because I had little experience creating and manipulating audio in Ableton and Logic Pro X, my teammates Gabriel and Kyle volunteered to create the background track as well as the live filler manipulations. In Ableton, Gabriel created the background file that served as the basis for the entire project and was crucial in setting its overall theme. He sampled several layers of chanting monks and combined them with tracks that resembled the movement of liquid (water). He then merged this combination with an ambient synthesizer to deepen the atmosphere, and finally added reverb and delay to complete the base layer: a track that evokes both ethereal and peculiar feelings and imagery.

While the unchanging background music was created in Ableton, the audio files we intended to manipulate during the live performance were developed in Logic Pro X. Kyle downloaded all of the provided audio files and sifted through every piece to find the tracks that best fit our intended direction. He identified sounds that seemed straight out of sci-fi horror films, meditation rooms, and zen-like atmospheres. Once we narrowed the selection to at most five tracks, Kyle used the in-application keyboard to create short melodies and snippets that we could use more easily throughout the performance. These audio files were recorded and saved for later use in our Max patch.

Physical Machine

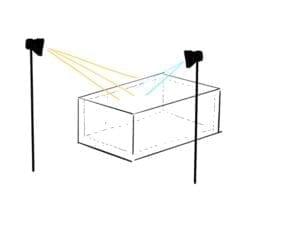

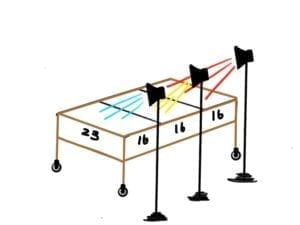

As mentioned earlier, the physical lightbox we created for this midterm project was heavily inspired by the inventions of Thomas Wilfred. Wilfred’s self-contained Lumia machines were revolutionary, and as a group we knew we wanted to create a product with similar yet distinct features. Just as Wilfred built many versions of the Clavilux, Gabriel, Kyle and I went through a significant amount of trial and error to arrive at our final design of a self-contained “Lumia” box. The images that follow are the diagrams and drawings that led up to the current version. We modeled the dimensions and the walls, and even incorporated handles and wheels into the physical box to better integrate it with the project as a whole. The functionality and mechanics of the lightbox are discussed below.

Attempts 1, 2, 3:

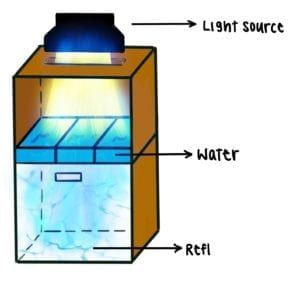

Final Design:

As this detailed diagram shows, four components are crucial to creating and recording the wave-like shadows we wanted: a light source, a water source, a surface for the light to project onto, and a live video camera. The box itself comes in two separate parts, a bottom and a top, with a removable middle layer in between. The light source sits on top of the box and shines downward through a long, narrow horizontal slit we made with the laser cutter. The idea of limiting the light entering the otherwise fully enclosed box came up while we were still prototyping. We realized then that the light we were shining on the cases of water was too bright for any shadows or reflections to form. We therefore created a restrictive opening that limited the amount of light reaching the water below.

Inside the box, on top of the transparent middle layer, sit three identically proportioned plastic cases filled to the brim with tap water. They lie side by side, covering the entire area of the middle section. As our main source of visual variety, the waves and movement of the enclosed water are projected down onto the base of the lightbox by the light source mounted on top.

The remaining section of the lightbox (underneath the water/middle layer) is lined with a white backdrop we obtained from the IMA resource center. We initially ran our experiments with the light shining down through the water onto the original wooden base of the machine, but the results were mediocre. As a team, we reasoned that a white background would make the shadows and highlights of the reflections more visible and defined, hence the white walls that form the base layer.

To maximize the stability of our source footage, Gabriel, Kyle and I decided it would be best to secure the video camera to the lightbox itself. For this attachment, we cut another slit in the back wall of the base layer. It is large enough to fit the camera’s cables through and balance the camera across, but small enough not to stand out to the audience. The live camera, which sits within the base of the box, is tilted downward to film the wave-like light projections on the bottom layer and is taped down to secure its position.

The camera then sends live footage of the reflections, created as we physically manipulate the lightbox, to the computer running our Max patch, where it is coordinated with our audio and visuals.

Patchwork (Audio + Visual)

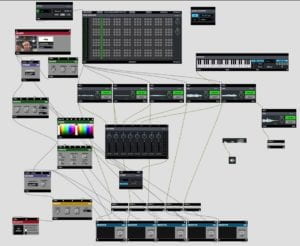

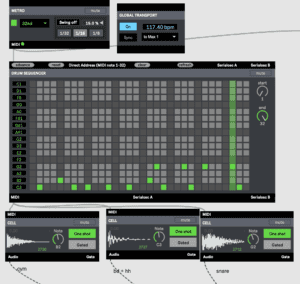

To incorporate the audio files we had created outside of Max, we loaded them into cell-to-MIDI components. Each track we intended to use during the live performance was placed in its own section and connected to the main drum sequencer at the top. We then sent each track through a pan mixer before routing them all to a stereo output. To let the audio interact with our VIZZIE creations, we converted the output of each cell-to-MIDI component through an AUDIO2VIZZIE module. Each converted signal then went to a separate SMOOTHR module, which helped ease the BEAP information into the VIZZIE section.
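To make the audio-to-visual step above more concrete, here is a minimal Python sketch of the general idea. It measures the loudness of each block of audio, clamps it to a 0 to 1 control range, and eases it with a one-pole (exponential) smoother so visual parameters glide instead of jumping. This is not Max code. How AUDIO2VIZZIE and SMOOTHR behave internally is assumed here, not quoted. The names `block_rms`, `OnePoleSmoother`, `audio_to_control`, and the `alpha` parameter are hypothetical.

```python
import math


def block_rms(samples):
    """Root-mean-square level of one block of audio samples (nominally in -1..1)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


class OnePoleSmoother:
    """Exponential (one-pole low-pass) smoother for a control signal.

    alpha near 0 gives a slow, heavily eased response; alpha = 1 disables smoothing.
    """

    def __init__(self, alpha=0.2, initial=0.0):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value = initial

    def step(self, target):
        # Move a fraction `alpha` of the remaining distance toward the target.
        self.value += self.alpha * (target - self.value)
        return self.value


def audio_to_control(blocks, alpha=0.2):
    """Turn a sequence of audio blocks into smoothed control values in 0..1."""
    smoother = OnePoleSmoother(alpha)
    controls = []
    for block in blocks:
        level = min(1.0, block_rms(block))  # clamp loud blocks to the control range
        controls.append(smoother.step(level))
    return controls
```

For example, `audio_to_control([[0.0] * 4, [1.0, -1.0, 1.0, -1.0], [1.0, -1.0, 1.0, -1.0]], alpha=0.5)` returns `[0.0, 0.5, 0.75]`. A sudden loud passage pulls the control only halfway toward full scale on each step. A smaller `alpha` gives a gentler ease at the cost of slower response.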

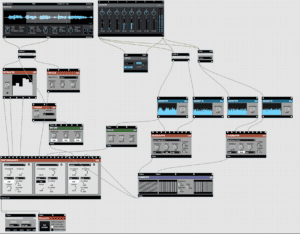

For the visuals, the three of us explored how the VIZZIE components could respond to the audio through data conversions. We also agreed to draw inspiration from our in-class assignments as the basis for our real-time effects.

With the GRABBR module in place and connected to our live camera, we began working on the visual manipulation. Physical movement was already covered, so we focused on color and form, following Wilfred’s standards. We first used an OPER8R to combine two input videos: the original feed and a delayed version produced with a DELAYR. This output is then sent through an effect module called ZAMPLR, which alters the color input as well as the horizontal and vertical gain of the incoming video. The result then passes through another effect module, HUSALIR, which adjusts the hue, saturation, and lightness of our video. Parameters on both the ZAMPLR and the HUSALIR were controlled by our converted audio, letting the sound manipulate the hues and gains of this first visual input.
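The first visual chain can be approximated in a few lines of Python, treating a frame as a list of (r, g, b) pixels with channels in 0 to 1. This is a hedged sketch under stated assumptions, not the VIZZIE implementation. `FrameDelay` stands in for DELAYR. A simple average stands in for OPER8R, which offers several combine operations, so averaging is an assumption. `adjust_hls` approximates a HUSALIR-style hue/saturation/lightness adjustment using Python’s standard `colorsys` module. ZAMPLR is left out because its internals are not described here. All function names are hypothetical.

```python
import colorsys
from collections import deque


class FrameDelay:
    """Stand-in for a frame delay: push a frame, get back the frame from `delay` pushes ago."""

    def __init__(self, delay):
        if delay < 0:
            raise ValueError("delay must be non-negative")
        self.buffer = deque(maxlen=delay + 1)

    def push(self, frame):
        self.buffer.append(frame)
        # Until the buffer fills up, the oldest frame seen so far is returned.
        return self.buffer[0]


def blend_average(a, b):
    """Combine two frames by averaging them pixel by pixel (assumed combine mode)."""
    return [tuple((x + y) / 2 for x, y in zip(pa, pb)) for pa, pb in zip(a, b)]


def adjust_hls(frame, hue_shift=0.0, light_scale=1.0, sat_scale=1.0):
    """Rotate hue by `hue_shift` (a fraction of a full turn) and scale lightness/saturation."""
    out = []
    for r, g, b in frame:
        hue, light, sat = colorsys.rgb_to_hls(r, g, b)
        hue = (hue + hue_shift) % 1.0
        light = min(1.0, light * light_scale)
        sat = min(1.0, sat * sat_scale)
        out.append(colorsys.hls_to_rgb(hue, light, sat))
    return out


def process_stream(frames, hue_controls, delay=2):
    """Original + delayed frame -> average blend -> control-driven hue rotation."""
    delayer = FrameDelay(delay)
    processed = []
    for frame, control in zip(frames, hue_controls):
        mixed = blend_average(frame, delayer.push(frame))
        processed.append(adjust_hls(mixed, hue_shift=control))
    return processed
```

In `process_stream`, each entry of `hue_controls` plays the role of an audio-derived control value, such as the output of a smoothed level follower. Louder passages therefore rotate the hue further.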

This chain is then combined with a second, color-heavy visual input from a 2TONR module using a MIXFADR. For the 2TONR, we chose blue and green as the dominant colors, since we felt they best matched our focus on water.
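Here is a rough Python sketch of this mixing stage, using the same frame representation (lists of (r, g, b) pixels in 0 to 1). It assumes that 2TONR behaves like a two-color gradient map driven by pixel luminance, and that MIXFADR performs a linear crossfade. Neither module’s exact formula has been confirmed. The blue and green values are illustrative placeholders, not the colors used in the performance, and the function names are hypothetical.

```python
# Illustrative placeholder colors (r, g, b in 0..1), not the performance's actual settings.
DEEP_BLUE = (0.0, 0.3, 0.9)
SEA_GREEN = (0.1, 0.9, 0.4)


def luminance(pixel):
    """Relative luminance using Rec. 709 weights."""
    r, g, b = pixel
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def two_tone(frame, dark=DEEP_BLUE, light=SEA_GREEN):
    """Assumed 2TONR-like map: interpolate from `dark` to `light` by each pixel's luminance."""
    out = []
    for pixel in frame:
        y = luminance(pixel)
        out.append(tuple(d + (lt - d) * y for d, lt in zip(dark, light)))
    return out


def crossfade(a, b, t):
    """Linear crossfade between two frames: t = 0 gives `a`, t = 1 gives `b`."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be in [0, 1]")
    return [tuple((1 - t) * x + t * y for x, y in zip(pa, pb)) for pa, pb in zip(a, b)]
```

With `t = 0` the output is purely the first chain. With `t = 1` it is purely the two-tone layer, so a single fader can sweep between the two.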

To complete the video output, we sent the resulting visuals through a ROTATR module (set to wrap) to add digital movement on top of our physical movement of the Lumia/water box. Finally, the video was sent out through a PROJECTR module, which allowed us to view and showcase the audiovisual performance in real time.
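To illustrate what rotating with wrapping means, here is a nearest-neighbor sketch in Python that rotates a 2D grid about its center. It assumes that ROTATR’s wrap option sends any pixel that would sample from outside the image to the opposite edge, like tiling. This approximates the idea, not the module’s code, and `rotate_wrap` is a hypothetical name.

```python
import math


def rotate_wrap(grid, angle):
    """Rotate a 2D grid about its centre by `angle` radians.

    Nearest-neighbour sampling; source coordinates that fall outside the
    grid wrap around to the opposite edge instead of being left blank.
    """
    h = len(grid)
    w = len(grid[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dx, dy = x - cx, y - cy
            # Inverse mapping: rotate the output coordinate by -angle
            # to find which source pixel lands here.
            sx = cos_a * dx + sin_a * dy + cx
            sy = -sin_a * dx + cos_a * dy + cy
            # Modulo wraps out-of-range coordinates to the opposite edge.
            row.append(grid[round(sy) % h][round(sx) % w])
        out.append(row)
    return out
```

Every output pixel is looked up by inverse-rotating its own coordinate, so the result never has gaps. The modulo wrap fills the corners with content from the opposite side instead of leaving them black.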

We then mapped the gain, hue, saturation, offset, rotation, and other parameters to our MIDI board for use during the performance. As we had learned in class, we created a presentation mode. It showed only the parameters mapped to the board and the drum sequencer we intended to change during the performance. This quickly cleaned up the entire patch and made the most urgent controls much easier to work with.

GitHub Link: https://gist.github.com/kcb403/43ff09f15f59c622571d287b8c1f22ee

Performance:

For our live performance in the auditorium, we needed to split our roles fairly evenly, both in time and in significance to the project outcome. We identified three main elements that needed manipulation during the presentation: audio, video, and movement. Kyle took responsibility for the live audio, while Gabriel and I switched between video and movement during the five-minute performance. While I manipulated the live visuals, Gabriel physically manipulated the self-contained lightbox in real time. At the 2:30 mark, we would switch positions and, with them, our roles. We had rehearsed the performance several times beforehand and were satisfied with the results. However, we ran into a major complication during the actual event.

Right as we were setting up for our live presentation, the Max patch we had prepared ahead of time unexpectedly crashed. As we raced to relaunch the application and set up the components in time, all three of us were anxious about the time remaining and how this unanticipated setback would affect the project. The audio still worked perfectly, but our visuals took a turn for the worse. Our color scheme, which had once spanned a large ROYGBIV range with the 2TONR VIZZIE module, was now restricted to neon blue and neon green. We did our best to salvage the visuals but had to give up as time ran out, and we decided to go on with the show despite the setback.

During the performance itself, almost everything went as planned. The opening went smoothly, with a fade-in we tied to the gain controller. We wanted the same effect for our closing but could not achieve it because of the unforeseen complications.

Overall, the performance was enjoyable, but my teammates and I felt it was a pity that we could not present the final product we had intended to showcase. We believe we could have prevented the situation by practicing more on our own time and by rehearsing the sequence in the auditorium before the actual class.

Conclusion:

Overall, I believe that Gabriel, Kyle and I worked very well together as a team on this midterm project. Each of us practiced new audiovisual skills while drawing on the knowledge we were already confident in. Together, we brainstormed various ideas and settled on our concepts faster than in any other team project I have been part of. Our research into Thomas Wilfred was thorough, but in the future I hope we can draw inspiration from a wider range of artists who have made their mark on audiovisual art. In terms of creation, our group could have benefited from developing our visual components further. That would have made the piece more cohesive and let us experiment with more effects that bring us closer to Wilfred’s three crucial elements of Lumia art. Finally, regarding our execution during the performance, I think we did the best we could under the circumstances. As mentioned in the previous section, more practice could have minimized the problem and would help prevent similar complications in the future.

On an individual level, I learned that I need more practice with audio composition as well as more hands-on exploration of the VIZZIE modules and components in Max. I hope to improve these skills in the future, starting with the upcoming project.

To conclude, this project was a great lesson for me, both in teamwork and in the visually stimulating perspectives it offered throughout the process. I look forward to working with my partners again.

Works Cited:

Orgeman, Keely. “A Radiant Manifestation in Space: Wilfred, Lumia.” Lumia: Thomas Wilfred and the Art of Light, Yale University Press, 2017, pp. 21–47.
