partner: Lana Henrich

CONCEPTION AND DESIGN:

Initially, my partner and I wanted to create a guessing game in which a player raced against the clock to identify a distorted image. We realized that this project would not involve any meaningful interaction, so we decided to change our entire concept. Feedback from sharing our final project idea with the class also helped us acknowledge how hard it would be to create a working, interactive game. We were inspired by Mick Ebeling’s “The EyeWriter,” which was shown to us at the beginning of this course. “The EyeWriter” is a tool, originally created for a paralyzed graffiti artist, that tracks an individual’s eye movement to create art. We ended up creating an interactive art piece aimed at helping disabled and injured kids practice using their hand muscles. Since technology is so prominent today, this is a fun way to encourage kids to move their arms. Because it is intended to be a physical therapy tool for kids, we had to keep that audience in mind, and we knew it had to be simple enough for kids to use.

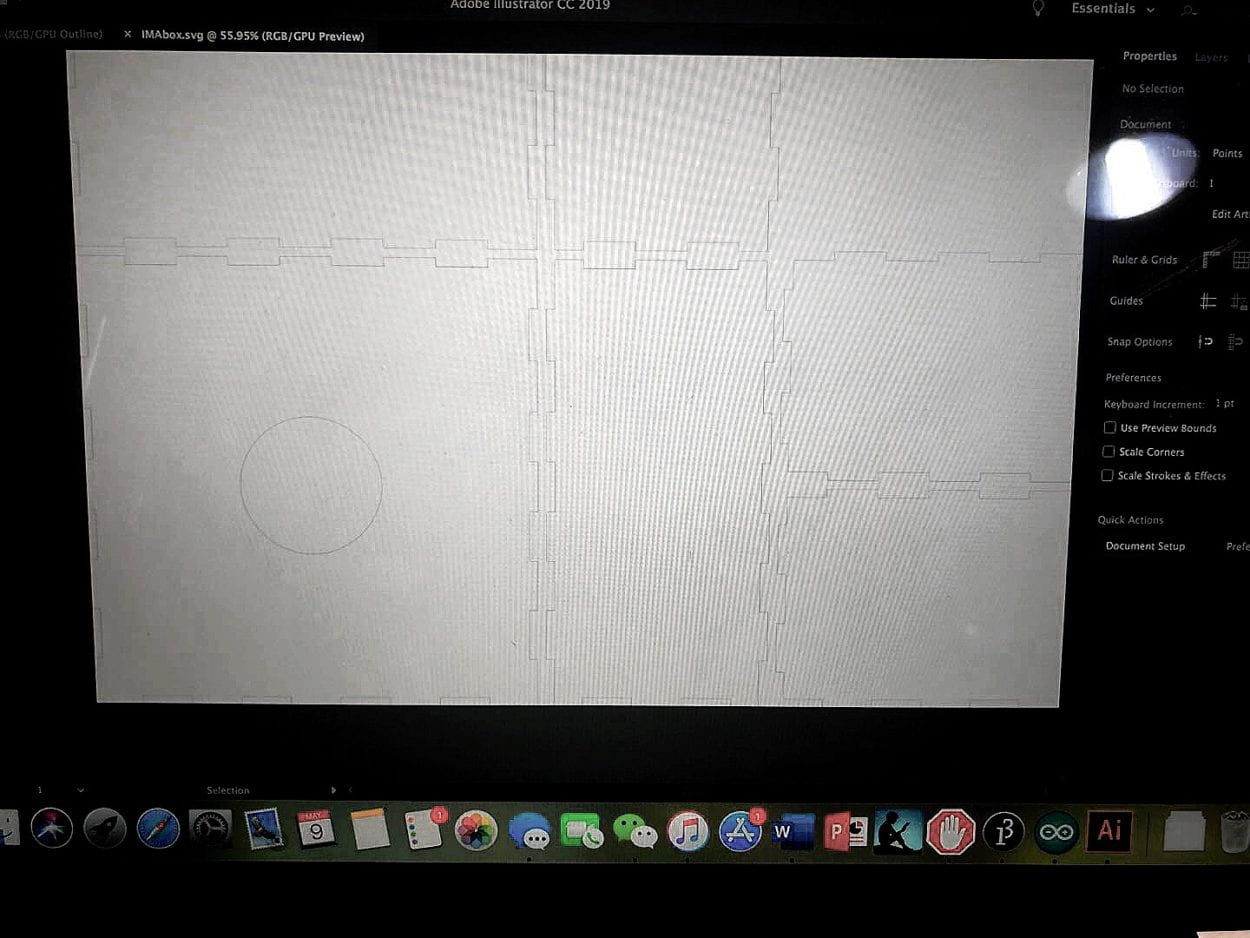

Our project consisted of a Leap Motion sensor and a laser-cut box with a button. We used a laser-cut wooden box because we didn’t want any wires showing. We wanted the user to feel comfortable using the device without being confused or intimidated. The box is also compact, so the tool is portable. We used a big red button with a built-in LED because it is part of the experience. Since our project is space themed and meant to be used in the dark, the LED makes the button visible. Because it is both big and red, kids will be intrigued and want to push it. The box reads “PUSH to shoot to a new galaxy,” which we included because it added a nice touch to our space theme.

Initially, we wanted to use the computer’s built-in camera to follow motion, but a fellow advised us to use a Leap Motion sensor instead. We settled on the Leap Motion because it supported the interaction part of our project. We wanted individuals to feel like they were virtually touching the stars, and we believed the Leap Motion would work best since it is used for augmented-reality-based virtual experiences. We also added calming, futuristic music to reinforce the space theme and engage another human sense. In addition, we had an idea to laser-cut a clear casing so that people would not be inclined to touch the Leap Motion. We ended up rejecting this because we realized the light from the Leap Motion might reflect off the clear casing and keep the sensor from accurately reading a user’s hand placement.

FABRICATION AND PRODUCTION:

For the particles, we used existing code as a template (https://www.openprocessing.org/sketch/529835). Because this code was written in another programming language, I converted it into a language that Processing could read, which is Java. I then altered it so that the particles would change to a random color when the button was pressed. We also altered it so that instead of the mouse moving the particles, they followed the movement of the hand as captured by the Leap Motion. For this we downloaded the Leap Motion example code within Processing.

The shooting stars were not hard to program, as it was just a matter of creating a separate class and having ellipses start from the center and randomly come forward. Within this class we used pushStyle() and popStyle() to change the drawing settings and then return to the original ones.
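To make the shooting-star idea concrete, here is a minimal sketch of such a class written in plain Java so it can run outside Processing. It is not our actual sketch code: the class name `ShootingStar`, the speed range, and the growth rate are all assumptions chosen for illustration. It only models the motion (start at the center, travel outward in a random direction, restart once off screen). In a Processing sketch, the drawing step would sit between pushStyle() and popStyle() so that the star's fill and stroke do not leak into the rest of the scene.

```java
import java.util.Random;

// Hypothetical stand-in for a shooting-star class; names and constants are illustrative.
class ShootingStar {
    final float centerX, centerY; // every star starts at the screen center
    float x, y;                   // current position
    float dx, dy;                 // velocity in pixels per frame
    float size;                   // grows each frame so the star seems to come forward
    private final Random rng;

    ShootingStar(float width, float height, Random rng) {
        this.centerX = width / 2f;
        this.centerY = height / 2f;
        this.rng = rng;
        respawn();
    }

    // Put the star back at the center with a new random direction and speed.
    void respawn() {
        double angle = rng.nextDouble() * 2 * Math.PI;
        float speed = 2f + rng.nextFloat() * 4f; // assumed range: 2 to 6 px per frame
        x = centerX;
        y = centerY;
        dx = (float) Math.cos(angle) * speed;
        dy = (float) Math.sin(angle) * speed;
        size = 1f;
    }

    // Advance one frame; restart from the center once the star leaves the screen.
    void update(float width, float height) {
        x += dx;
        y += dy;
        size += 0.05f;
        if (x < 0 || x > width || y < 0 || y > height) {
            respawn();
        }
    }

    public static void main(String[] args) {
        ShootingStar star = new ShootingStar(800f, 600f, new Random());
        for (int frame = 0; frame < 5; frame++) {
            star.update(800f, 600f);
            System.out.println("frame " + frame + ": x=" + star.x + " y=" + star.y);
        }
    }
}
```

Keeping the motion in update() and the styling in a separate draw step is what makes pushStyle() and popStyle() useful: each star can set its own look without affecting the particles or planets drawn afterwards.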

For the background image, we used a photo from the free stock-photo website Pexels (https://www.pexels.com/photo/starry-sky-998641/). The background music came from Bensound (bensound.com).

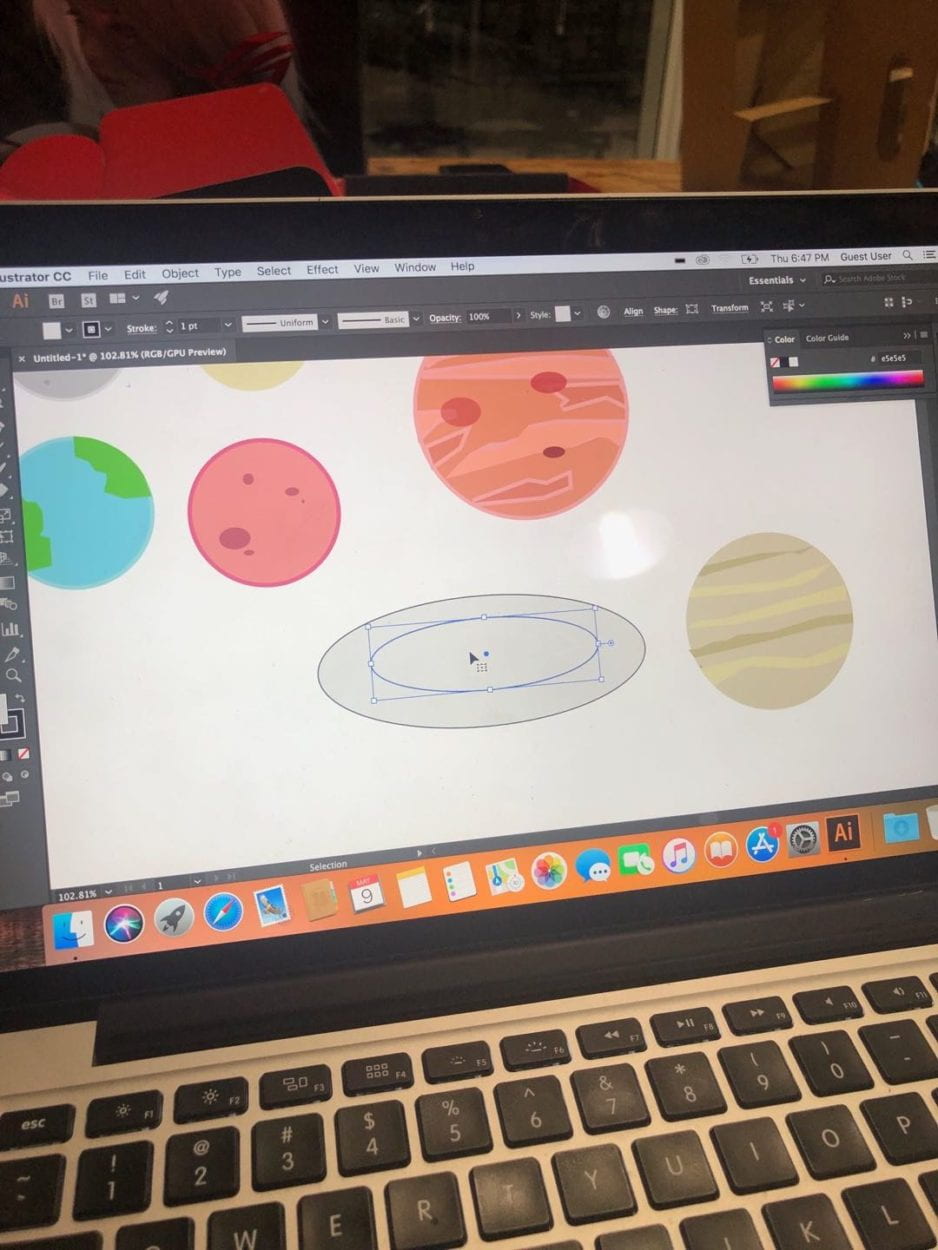

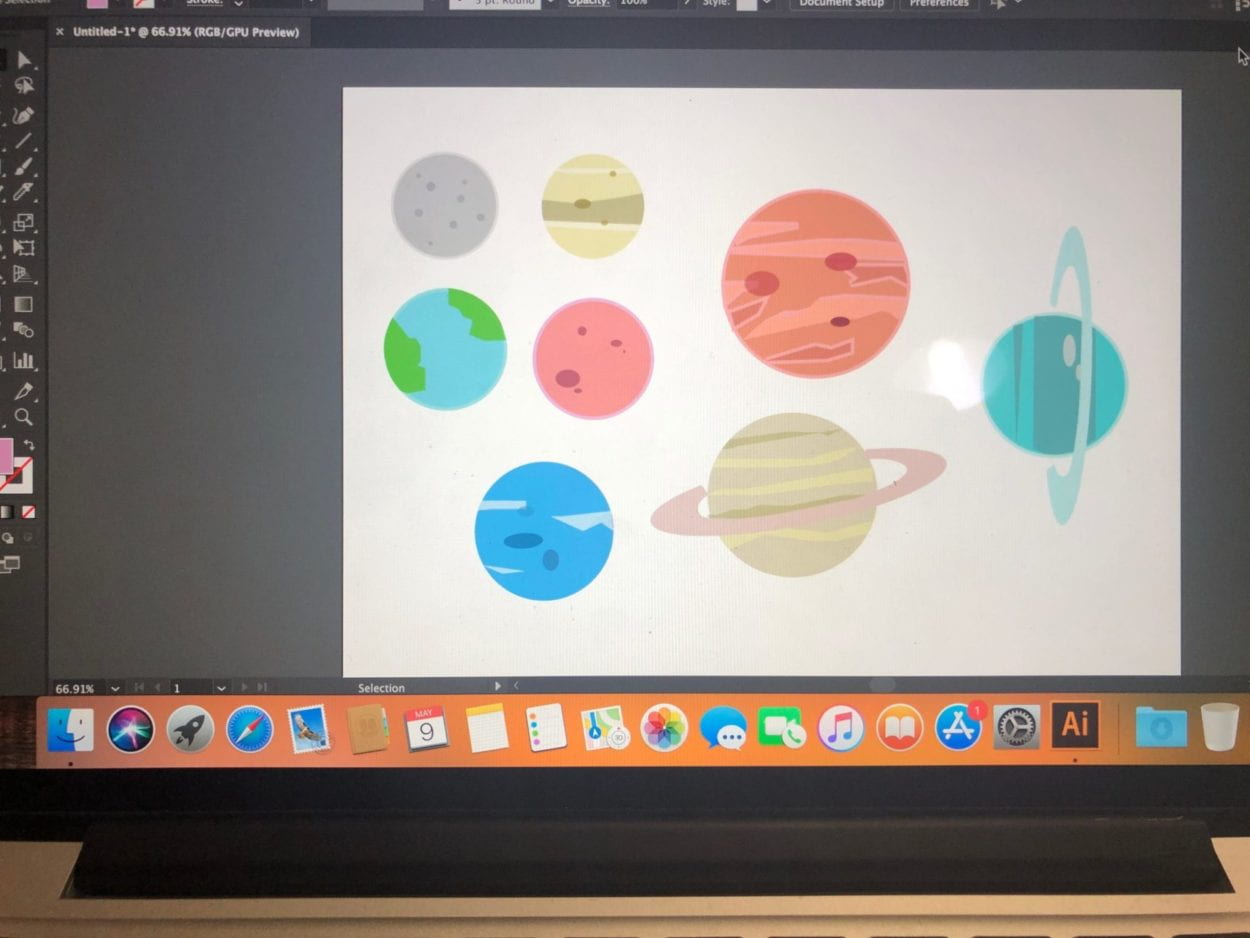

The most challenging part for my partner and me was setting up the code for the planets. Since we drew all the planets in Adobe Illustrator, we loaded them as individual images. Because of this, we had to create an array and a separate class for the planets. The hardest part of all was getting the planets to reset to a new position every time the button was pressed. This was extremely difficult for us because we had to fit it into code that was already written. With the help of a fellow who advised us to use a boolean, we were finally able to get it working.
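The snippet below is a minimal sketch of one common way a boolean can solve this kind of problem, written in plain Java so it runs without Processing. It is an assumption about the approach, not a copy of our sketch: the class name `PlanetField`, the method `onFrame`, and the field names are hypothetical. The boolean remembers whether the button was already down on the previous frame, so the planets move to new random positions once per press instead of on every frame while the button is held.

```java
import java.util.Random;

// Hypothetical planet container; names are illustrative, not taken from the sketch.
class PlanetField {
    final float[] xs, ys;               // one position per planet image
    private final float width, height;
    private final Random rng;
    private boolean wasPressed = false; // button state seen on the previous frame
    int resets = 0;                     // how many times the planets were repositioned

    PlanetField(int count, float width, float height, Random rng) {
        this.xs = new float[count];
        this.ys = new float[count];
        this.width = width;
        this.height = height;
        this.rng = rng;
        randomize();
    }

    // Give every planet a new random position on the screen.
    private void randomize() {
        for (int i = 0; i < xs.length; i++) {
            xs[i] = rng.nextFloat() * width;
            ys[i] = rng.nextFloat() * height;
        }
    }

    // Call once per frame with the current button reading.
    // Returns true only on the frame where the button goes from up to down.
    boolean onFrame(boolean pressed) {
        boolean justPressed = pressed && !wasPressed;
        wasPressed = pressed;
        if (justPressed) {
            randomize();
            resets++;
        }
        return justPressed;
    }

    public static void main(String[] args) {
        PlanetField field = new PlanetField(3, 800f, 600f, new Random());
        boolean[] readings = {false, true, true, false, true};
        for (boolean pressed : readings) {
            System.out.println("pressed=" + pressed + " reset=" + field.onFrame(pressed));
        }
    }
}
```

In a sketch, onFrame would be called from draw() with whatever value the button sends, and each planet object would then be drawn at its stored position.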

During user testing, we got a lot of feedback, and we incorporated most of it into our project. For instance, we were told that our initial animation was too simple and that we should add animated shooting stars as a background. We followed this advice because we realized that what we had did not look 3D. We were also told that we should find a way to store all the wires, so we laser-cut a wooden box to hold them. One suggestion we didn’t adopt was repositioning the Leap Motion so that, instead of lying flat on the table, it would face the individual and signal that it should not be touched. We didn’t go ahead with this because we felt it would be a distraction, and people wouldn’t understand what to do with a Leap Motion facing directly at them and a screen in front of them. A lot of people enjoyed our project and found it very soothing and relaxing. The changes we made benefited the overall project: they created a better experience by making the visuals more compelling and realistic.

CONCLUSIONS:

The intended goal of our project was to create an interactive art piece that doubles as a physical therapy tool for kids. Our results aligned with my definition of interaction because as an individual moves their hands a certain way, the “stars” on the screen move with their hand. There is a reciprocal action taking place in which different actors, the hand and the “stars” on the screen, engage with one another. In my definition of interaction I also noted that an interactive piece incorporates our different senses and lets them interact with one another. This is what our project does: the human senses of hearing, touch, and sight all work together. One aspect that doesn’t align with my definition is that our interaction can be argued to be too simple. One might ask, “An individual is just moving their arms, so how does that interaction fit with a space theme?” The answer is that part of a virtual artwork is the use of imagination, and through imagination the individual encounters many different types and levels of interaction.

For the most part, individuals reacted positively to our project. They enjoyed the calmness that came with interacting with it. I have experience working with kids with disabilities such as cerebral palsy, and I think developing fun tools for disabled kids to interact with allows them to have fun and not think of their disability as a constraint on their lives. If I had more time and resources, I would test this with the disability community and see how they interact with it. I would also like to move beyond a single screen, perhaps turning it into a dome so that the experience feels more realistic.

With this project, we ran into a lot of failures. Having never used the Leap Motion, we had to learn how to use it on our own, and we also had to familiarize ourselves with using classes in our code. Although it was challenging at first, we were able to push through and in the end achieved our intended goal. From these setbacks, I learned that as a designer you should not have a set blueprint that you follow from beginning to end. Instead, you should be open to change and know that everything might not work the way you intended. It’s about learning to find different solutions to a single problem so that you have options. Design, fabrication, and production are not a linear process. With our Processing code, for example, we were initially set on just having stars on a black background, but after feedback and re-analyzing our project, we had to come up with a different design better suited to our intended goal.

This project matters because kids who go to physical therapy for arm and hand injuries or muscular injuries may dread going. Our project puts a fun twist on therapy, which not only encourages kids to move their muscles but also allows their different senses to interact with one another. We should care because the disabled community is often overlooked when it comes to creating and designing mediums of entertainment. A device that is meant for the disabled community, but can also serve as a fun interactive art piece, bridges both worlds.

PROCESSING CODE:

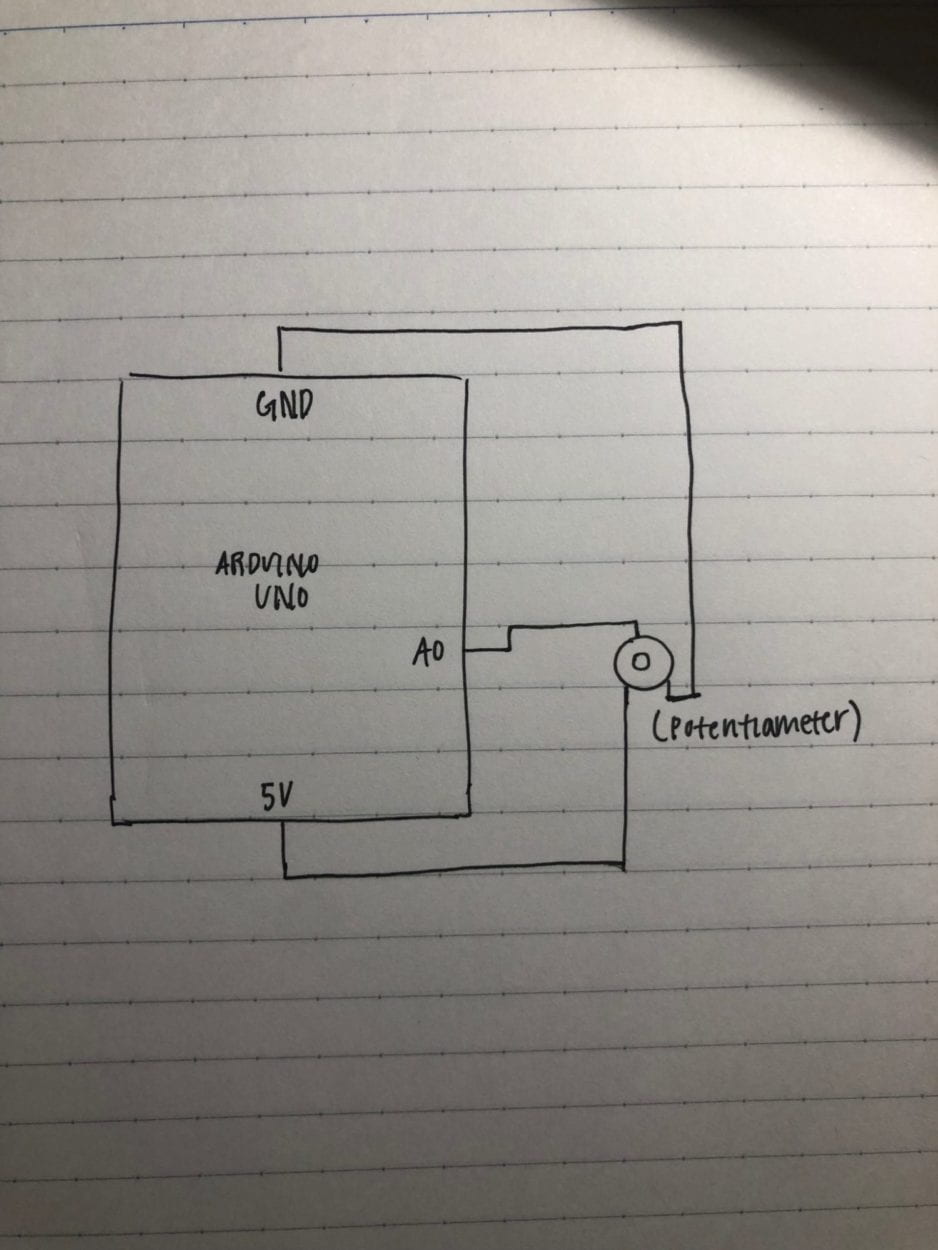

ARDUINO CODE: