For this week’s assignment, I played around with an ml5.js project called BodyPix_Webcam. I was drawn to this particular project because it reminded me a lot of a green screen or the Photo Booth app on MacBooks.

Basically, it detects bodies in your laptop’s webcam feed and outputs a real-time video in which everything except the detected bodies is covered in black. When I tested it alone, with no one else in the frame, it worked pretty well.

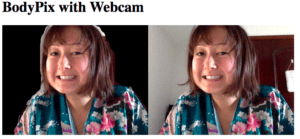
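To make the masking idea concrete, here is a minimal Python sketch of the final compositing step. It assumes a segmentation model has already labeled each pixel as body (1) or background (0). This is not ml5.js code and not how BodyPix_Webcam is actually written: the frame and mask formats (nested lists of RGB tuples and 0/1 values) and the function name `apply_body_mask` are hypothetical stand-ins chosen to keep the example self-contained.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (r, g, b), each 0-255
Frame = List[List[Pixel]]      # rows of pixels
Mask = List[List[int]]         # 1 = body, 0 = background (hypothetical format)

BLACK: Pixel = (0, 0, 0)


def apply_body_mask(frame: Frame, mask: Mask) -> Frame:
    """Hypothetical compositing step: keep pixels labeled as body (1)
    and paint every other pixel black."""
    if len(frame) != len(mask) or any(
        len(frame_row) != len(mask_row) for frame_row, mask_row in zip(frame, mask)
    ):
        raise ValueError("frame and mask must have the same dimensions")
    return [
        [pixel if is_body else BLACK for pixel, is_body in zip(frame_row, mask_row)]
        for frame_row, mask_row in zip(frame, mask)
    ]


if __name__ == "__main__":
    # A tiny 2x2 "frame" and a mask marking two of its pixels as body.
    frame = [[(200, 150, 120), (10, 200, 30)],
             [(90, 90, 90), (220, 180, 160)]]
    mask = [[1, 0],
            [0, 1]]
    print(apply_body_mask(frame, mask))
    # → [[(200, 150, 120), (0, 0, 0)], [(0, 0, 0), (220, 180, 160)]]
```

In a live webcam demo, a step like this would run on every frame, so the quality of the output depends entirely on how accurate the mask is.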

I was curious how well it would work with multiple people, so I asked my friend to test it out with me. It didn’t work as well: parts of our faces were covered in black.

This project was interesting to me because of its potential applications. Detecting bodies in real time could improve a lot of existing green screen systems. In Photo Booth, for example, some effects let you replace the background of your photo. To use them, though, you first have to step out of the camera frame so the app can capture the empty background and then work out where your body is by comparing that background with and without you in it. BodyPix_Webcam eliminates this step. If its approach were applied to Photo Booth, I think it would create a better experience for people who want to use the background effects. It would still need further training, though, so that it could detect multiple people more accurately.
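For comparison, the step-out approach resembles classic background differencing. The sketch below is a minimal Python illustration of that general technique, not Photo Booth’s actual implementation: it compares a new frame against a reference shot of the empty scene and marks pixels that changed by more than a threshold as foreground. The function name `difference_mask` and the default threshold of 30 are hypothetical choices.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (r, g, b), each 0-255
Frame = List[List[Pixel]]      # rows of pixels
Mask = List[List[int]]         # 1 = foreground (changed), 0 = unchanged background


def difference_mask(background: Frame, frame: Frame, threshold: int = 30) -> Mask:
    """Hypothetical background-differencing step: mark a pixel as foreground (1)
    if any color channel differs from the empty-scene reference by more than
    `threshold`, otherwise 0. The default of 30 is an arbitrary illustration."""
    if len(background) != len(frame) or any(
        len(bg_row) != len(row) for bg_row, row in zip(background, frame)
    ):
        raise ValueError("background and frame must have the same dimensions")
    return [
        [
            1 if max(abs(a - b) for a, b in zip(bg_px, px)) > threshold else 0
            for bg_px, px in zip(bg_row, row)
        ]
        for bg_row, row in zip(background, frame)
    ]


if __name__ == "__main__":
    # Reference shot taken after the user steps out of frame.
    empty_scene = [[(100, 100, 100), (100, 100, 100)]]
    # Same scene with a person covering the second pixel; the first pixel
    # only shows small sensor noise.
    with_person = [[(105, 98, 101), (200, 160, 140)]]
    print(difference_mask(empty_scene, with_person))
    # → [[0, 1]]
```

The key limitation is the `background` argument: without a clean shot of the empty scene there is nothing to compare against, which is why you have to step out of frame first. A segmentation model like BodyPix instead predicts the body mask from each frame on its own, so no reference shot is needed.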