Purpose of the Project

The aim of the final is to design a comprehensive experimental project that takes into account the theoretical and practical concepts learned throughout the semester and culminates in a research paper exploring one of the following topics:

- Biology knowledge that inspires, informs and nurtures robotic systems (e.g., swarm behaviors)

- Robotic experimental information that helps to create a new understanding about biology (e.g., intelligence, decisions or behaviors of individuals)

- Hybrid systems that are inspired by biology by design (e.g., new mechanical structures or locomotion systems)

Initial Ideas and Research

In my initial project proposal, I had decided to work individually and focus on investigating swarm behavior and designing a robotic implementation to gain further insights into biological swarms. After more research, I also decided that I wanted to work with ants. However, I soon realized that it would be more valuable to work with a partner, because we could align our research interests and add a layer of creativity to the project by combining our ideas. Our research revealed that ant behavior is very dynamic, with many sophisticated elements involved in ants’ decision-making and behavioral patterns. These insights relate not only to swarming behavior but also to biological understanding.

As outlined in the Gaps in Knowledge section of my research paper, there were a few paths we could take in our investigation. We could investigate the rigidity of the sugar and protein ant categories. Another option was to determine whether more pungent or strong-smelling food particles that appeal to the foraging ant would affect the ant’s speed. Lastly, another interesting topic we came across was the correlation between the accuracy of the ant’s movements and the strength of the scent from the pheromone trail.

Final Project Focus

Kennedy and I finally decided to focus our experiment on the relationship between the strength of a food’s scent and the time it takes for the ant to find and reach it. We would design a robotic model to simulate the ant behavior and conduct the experiment. Once we had decided on our focus, we immediately started working on its development and evaluating different approaches to achieve our goal.

Robot Development Timeline and Process

Initial Planning

There are two parts to this project: the ant and the food that attracts it. Deciding on what approach to take in developing the robot involved a lot of discussion with our professor, peers, and IMA fellows, as well as research to figure out what method best aligned with both our technical capabilities and the purpose of this project. In my initial proposal, I wanted to use Arduino as the basis of the ant robots. My initial model consisted of an Arduino-powered robot with wheels and DC motors for locomotion, fitted with an infrared receiver to detect the signals. This would’ve made the project unnecessarily challenging, because it had been a long time since I programmed with Arduino.

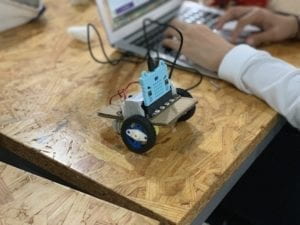

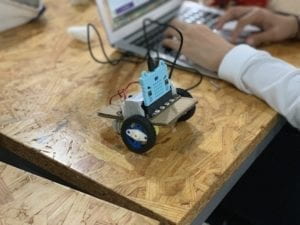

We then figured out that it was possible to build the robot using Microbit, and that the Kittenbot kit already had its own infrared receiver as well as motors and wheels. It made more sense to take this approach, because we could consolidate the programming knowledge we had accumulated all semester and program the whole thing on the same platform. The food part was a pretty simple concept. We decided to use an infrared emitter as a beacon that attracts the ant robot, with the infrared signals representing the scent of the food. The more beacons we placed, the stronger the signal would be, and we hypothesized that the ant robots would then find the food and reach its location faster.

Thus, our final plan was to build our own robot using the Kittenbot. Whilst the concept for the food beacon was simple, when we started on the project we did not have a plan for how to build a free-standing structure fitted with an infrared emitter, so we decided to figure that out as we went along.

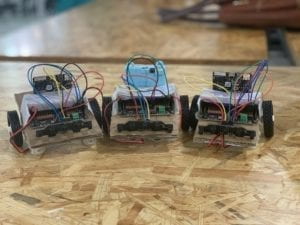

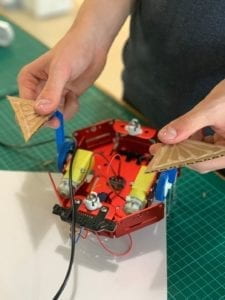

Initial Prototyping and Grasping MakeCode

As soon as we started prototyping, we realized that we did not need the fully assembled Kittenbot for the purpose of the project. We also felt that the Kittenbot chassis was too heavy and clunky, and at this phase of development we only really needed the Microbit connected to the motors. So, we decided to build our own chassis out of cardboard, as this also gave us flexibility in designing the robot, allowing us to create slots and make custom modifications specific to the purpose of our project. We chose cardboard instead of 3D printing a chassis or laser cutting plastic or wood, because it was cheaper and would make the prototyping process faster. If we made a mistake or created too many holes, we could easily build a new chassis that fit all our components better, and we could do so as many times as needed.

Upon fitting the Microbit and motors to the cardboard chassis, we first explored the locomotion of our robot. Kennedy and I were not well-versed in MakeCode, so this was an easy starting point for us to get things working. We flashed a simple program to figure out how the motors worked.

As shown in the video, we immediately ran into our first problem: the robot would only spin around. We had programmed both motors, M1A and M2B, with the value 150. We realized that for the robot to move straight, we had to program M2B with the value -150 instead.
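One way to picture why the sign flip was needed: if the two motors are mounted as mirror images of each other, the same signed value turns the wheels in opposite directions relative to the ground. The following is a minimal sketch in plain TypeScript, not the Robotbit API. The `MOUNT_SIGN` values and the `groundMotion` helper are assumptions made for illustration only.

```typescript
// Illustrative model only: the names and mounting signs are assumptions.
type MotorCommand = { m1a: number; m2b: number };

// Assumed: M2B is mounted mirrored relative to M1A, so a positive value
// on M2B drives its wheel backwards relative to the ground.
const MOUNT_SIGN = { m1a: 1, m2b: -1 };

function groundMotion(cmd: MotorCommand): { forward: number; turn: number } {
    const left = cmd.m1a * MOUNT_SIGN.m1a;
    const right = cmd.m2b * MOUNT_SIGN.m2b;
    return {
        forward: (left + right) / 2, // average wheel speed moves the robot
        turn: (left - right) / 2,    // speed difference rotates it
    };
}

// Same value on both motors: the wheels cancel out and the robot spins.
const spinning = groundMotion({ m1a: 150, m2b: 150 });
// Opposite values: both wheels push the same way and the robot goes straight.
const straight = groundMotion({ m1a: 150, m2b: -150 });
```

Under this assumed mounting, `spinning` has no forward component, while `straight` has no turning component, which matches the behavior we saw.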

We tinkered around further, and I tried to familiarize myself with the mechanisms of MakeCode by looking at other sample projects as well. Now that we had figured out the locomotion and got our robot moving, we had to figure out the sensors, which are the core of our project.

Implementing Infrared Communication

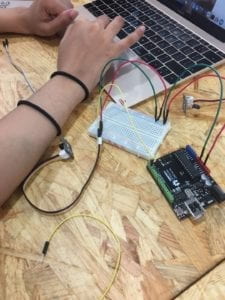

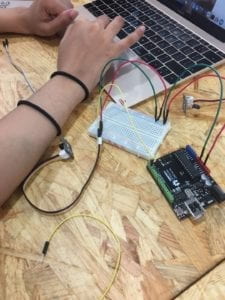

This part of the project involved mounting an infrared receiver on our prototype, connecting it to the Microbit, and then making it detect signals from an infrared-emitting beacon. Since I used the infrared sensors from the Kittenbot kit for my midterm, I thought we could mount one on the front of our robot and use the Robotbit extension. For the beacon, we created a simple circuit with an Arduino infrared emitter and downloaded the code for it online. However, when we tested it, we weren’t getting any results, and it appeared that the infrared receiver could not detect any signals from the emitter, even though we placed them very close to each other.

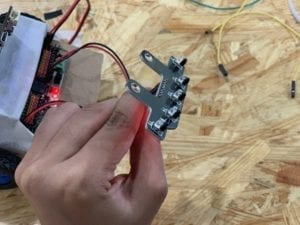

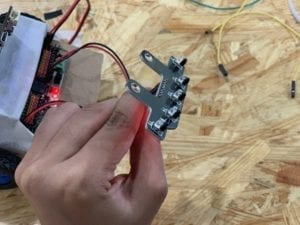

We sought help from Rudi and Tristan, and discovered that the Robotbit infrared receiver was also an infrared emitter; it would shoot infrared signals as we turned it on and wash out any of the signals coming from the Arduino emitter.

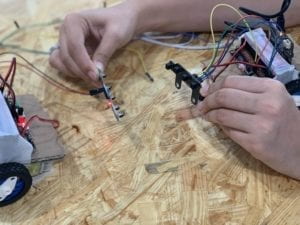

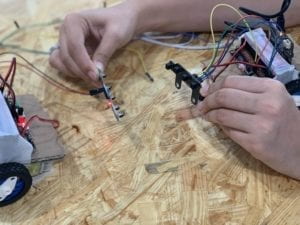

So, we performed a little robot surgery and cut out the white bulbs on the Robotbit infrared sensor, because they were the emitters. We were then left with only the infrared receivers, so Kennedy cut slots in the cardboard and mounted the sensor. The ant portion of the infrared communication was done; now we needed to figure out the beacon.

We had saved the infrared emitting bulbs that we cut out, so Tristan and Rudi suggested that we use them as our infrared-emitting beacons. We tested this by using an infrared sensor that we had not cut, to see if any signals were actually detected.

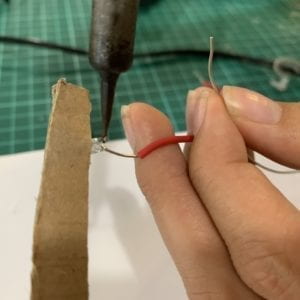

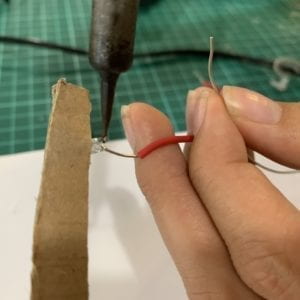

Fortunately, we got clear values right away, so it was definitely the right direction to take for the project. To construct our beacon, we soldered each bulb to two wires, which we plugged into a solderless breadboard and connected to Arduino as a power supply.

We then tested it to see what kind of values we were getting, so that we could establish a threshold when we started coding the entire robotic simulation that would be the setup for our experiment. With that, we had finally established the infrared communication.
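A threshold like this can be derived from two sets of test readings, one taken with no beacon nearby and one taken close to a beacon. The sketch below is one simple way to do that, not the method we used. The sample readings are made up, and it assumes that a larger reading means a stronger infrared signal, which depends on the sensor.

```typescript
// Hypothetical helper: picks a threshold halfway between the average
// "no beacon" reading and the average "near beacon" reading.
function midpointThreshold(noBeacon: number[], nearBeacon: number[]): number {
    const mean = (xs: number[]) => xs.reduce((a, b) => a + b, 0) / xs.length;
    return (mean(noBeacon) + mean(nearBeacon)) / 2;
}

// Assumption: a larger reading means a stronger infrared signal.
function beaconDetected(reading: number, threshold: number): boolean {
    return reading >= threshold;
}

// Made-up sample readings, for illustration only.
const threshold = midpointThreshold([10, 12, 14], [80, 90, 100]);
const closeToBeacon = beaconDetected(60, threshold);
const farFromBeacon = beaconDetected(20, threshold);
```

In practice the midpoint is only a starting value; it still has to be tuned by testing the robot in the arena.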

Implementing Obstacle Avoidance

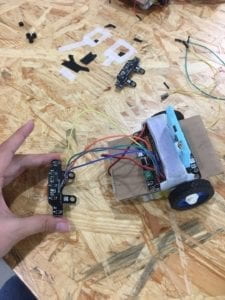

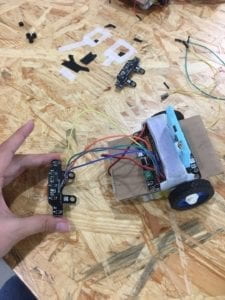

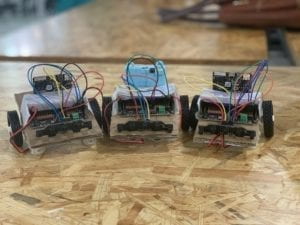

The next part was to implement obstacle avoidance. This is necessary for the robot to move around easily and find the beacon without running into walls or other robots in the area, since we built multiple ant robots to roam the arena together, replicating an ant swarm.

For this part, we opted to use an ultrasonic sensor to detect obstacles, and we figured we could use the Robotbit extension as well. We used an HC-SR04 sensor and initially attached it at the bottom of our robot, under the infrared receiver.

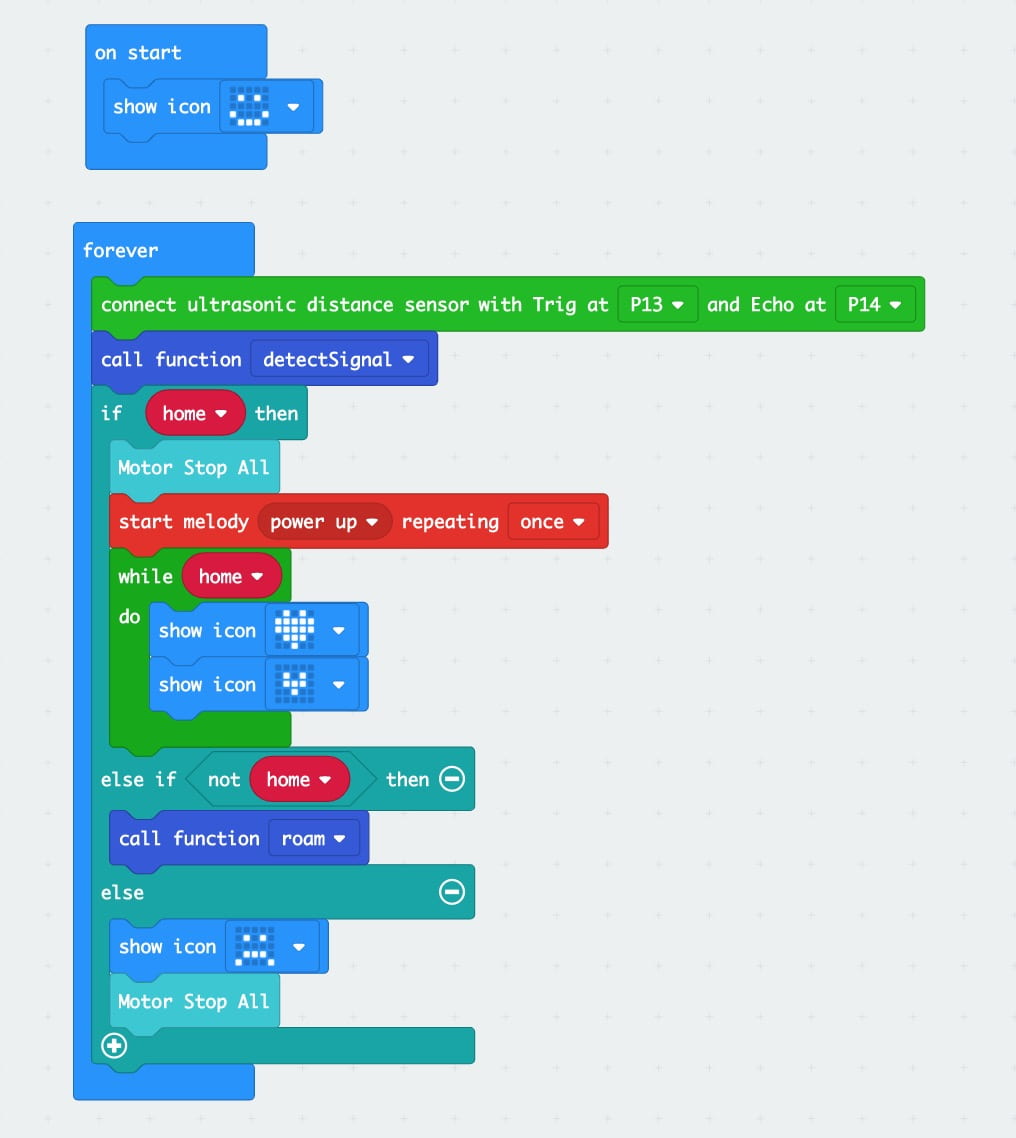

I then wrote a very simple obstacle avoidance program in MakeCode to test the sensor. However, the sensor wouldn’t work. I tried modifying the code, looked through online forums for advice, and even tried switching out the sensors, to no avail. Confused, I sought help from Rudi. As it turned out, I hadn’t realized that the sensor we used was different from the one in the Kittenbot kit, so it couldn’t be programmed with the Robotbit extension in MakeCode. I looked for an ultrasonic extension for the HC-SR04 sensor, and after finding it I was very quickly able to put together a new program that ran smoothly. With the obstacle avoidance feature working, I had all the separate pieces of the robot ready. I was finally able to start writing the final program to integrate all the components and create the setup for our experiment.
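The logic behind a simple obstacle avoidance test can be sketched in plain TypeScript. Ultrasonic rangers of this kind report how long an echo takes to return, and dividing that time in microseconds by roughly 58 gives the distance in centimeters. The function names and the 15 cm trigger distance below are assumptions for illustration; they are not taken from the MakeCode extension or from our final program.

```typescript
// Sound travels about 1 cm in 29 microseconds, so a round trip
// (out to the obstacle and back) takes roughly 58 microseconds per cm.
const MICROSECONDS_PER_CM_ROUND_TRIP = 58;

function echoToCentimeters(echoMicroseconds: number): number {
    return echoMicroseconds / MICROSECONDS_PER_CM_ROUND_TRIP;
}

type Action = "forward" | "turn";

// obstacleCm is a placeholder trigger distance, not our tuned value.
function chooseAction(distanceCm: number, obstacleCm: number): Action {
    // Assumption: a reading of 0 means no echo came back, so the path is clear.
    if (distanceCm <= 0) return "forward";
    return distanceCm < obstacleCm ? "turn" : "forward";
}

const nearWall = chooseAction(echoToCentimeters(580), 15);  // about 10 cm away
const openSpace = chooseAction(echoToCentimeters(2320), 15); // about 40 cm away
```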

Final Program

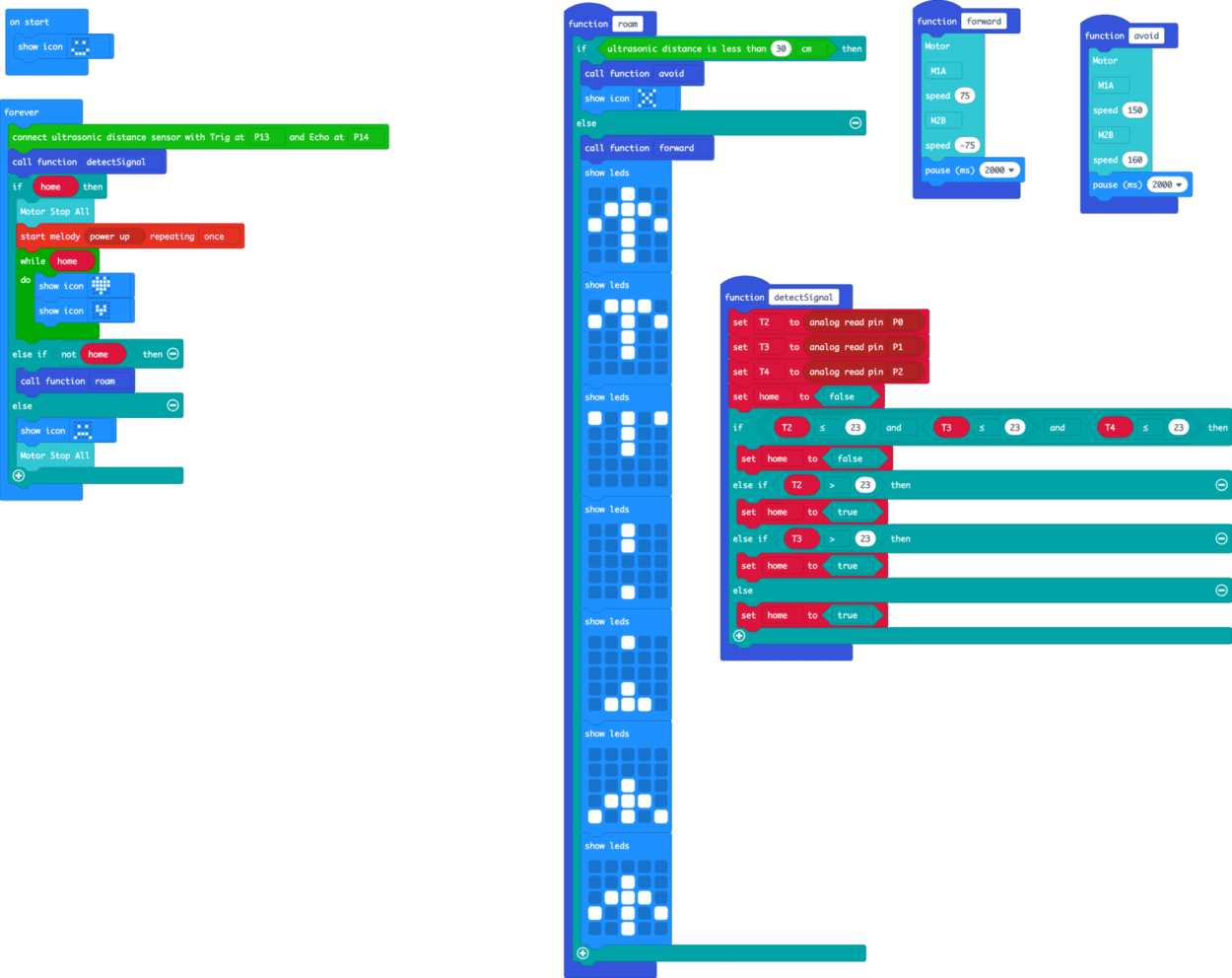

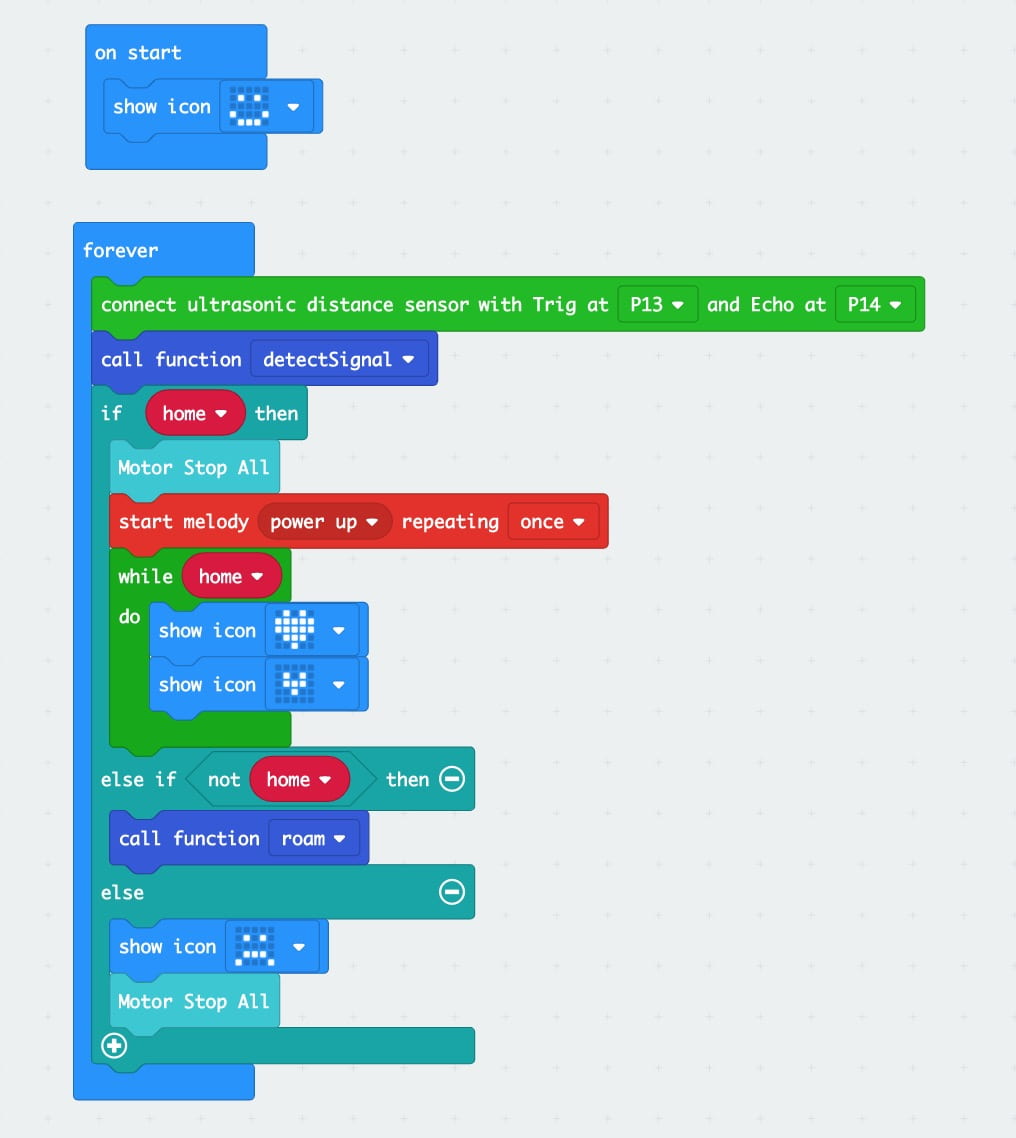

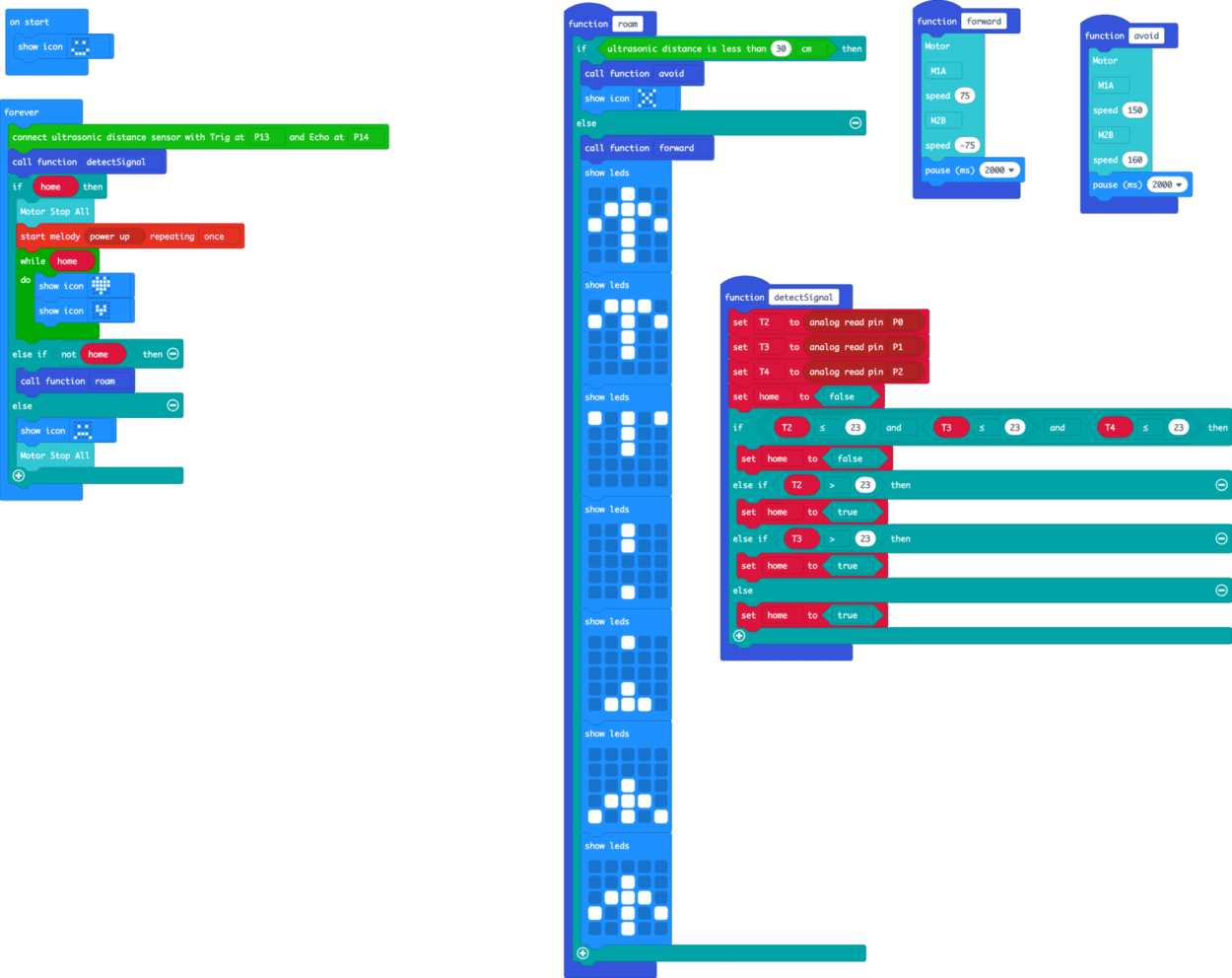

To integrate all the features together and create one dynamic program, I found the Functions blocks very helpful. It would’ve been very tedious and disorderly to create one long program within the Forever block. I found this part of the project to be the most challenging, as we had no blueprint for how to put the pieces together, and there were so many different ways to design the code. In my early iterations I created separate functions for movement, infrared detection, and obstacle avoidance, and then tried to integrate everything in a “do” function. This was ineffective: my robot would only do the first part of the sequence, which was to move forward, and skipped the crucial steps of infrared detection, obstacle avoidance, and finding its way to the beacon. This phase went through multiple iterations of the program, and with each iteration, as I tried to add more elements to improve the whole function, my code got more chaotic without much improvement. I finally abandoned the messy and complicated structure and decided to take a different approach to simplify the whole program, which led to the second phase of coding.

The main goal during this phase was to keep things simple without sacrificing function, so with each iteration I focused on removing redundancies and condensing the code. I first got rid of a lot of unnecessary variables, which optimized the infrared detection and obstacle avoidance features. Next, I eliminated the lengthy “do” function and implemented the obstacle avoidance as part of the movement function.

I also added an LED symbol corresponding to each task in the program, so I could see the states changing and know what the robot was doing as it moved. Finally, I simplified the actions the robot would perform when it reached the beacon. In the first phase, I had tried to program it to spin, play a melody, and then stop. That complicated things and the robot was not able to do it effectively, so in the second phase I programmed the robots to simply stop, play a short melody, and show an LED sequence of a beating heart when they reached the beacon.
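The simplified structure can be thought of as a small state machine: each pass through the loop reads the sensors, picks one state, and shows the matching LED symbol. The sketch below is a rough reconstruction of that idea in plain TypeScript, not the actual program (which is linked under Final Code). The state names, the threshold values, and the "arrow" and "cross" icon names are assumptions; only the heart at arrival comes from our design.

```typescript
type State = "searching" | "avoiding" | "arrived";

interface Readings { infrared: number; distanceCm: number; }
interface Thresholds { arrivedInfrared: number; obstacleCm: number; }

// One LED symbol per state; "arrow" and "cross" are placeholder names.
const ICON: Record<State, string> = {
    searching: "arrow",
    avoiding: "cross",
    arrived: "heart",
};

// Arrival is checked first so that the beacon itself is not treated as an obstacle.
function nextState(r: Readings, t: Thresholds): State {
    if (r.infrared >= t.arrivedInfrared) return "arrived";
    if (r.distanceCm > 0 && r.distanceCm < t.obstacleCm) return "avoiding";
    return "searching";
}

// Placeholder thresholds, not our tuned values.
const limits: Thresholds = { arrivedInfrared: 50, obstacleCm: 15 };

const atBeacon = nextState({ infrared: 90, distanceCm: 5 }, limits);
const atWall = nextState({ infrared: 10, distanceCm: 5 }, limits);
const roaming = nextState({ infrared: 10, distanceCm: 80 }, limits);
```

Keeping the decision in one small function like this is what replaced the long “do” function: every loop iteration ends in exactly one state, so no step can be skipped.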

Our robot finally started showing signs of working, and it was moving around whilst avoiding obstacles. However, it wasn’t quite perfect. It did not appear to detect the infrared signals well or respond to them accordingly. In addition, whilst the obstacle avoidance part worked fairly well, having the ultrasonic sensor at the bottom of the robot created drag, which hindered effective movement.

This led to the final phase of programming, which mostly involved simple debugging and optimizing the features. First, we moved the ultrasonic sensor and rearranged its wiring, placing it behind the infrared receiver but positioned just above it to keep its path clear. Next, we had to tinker with the threshold values for both the ultrasonic and infrared sensors, aiming to get the robot to function as effectively as possible. Although the most challenging part was over, this part was definitely the most painstaking and tedious, because it was very repetitive: we had to change a value in the code and then test the robot, over and over again.

It took several iterations to finally get the right values to serve as the threshold for determining whether the robot had arrived at the food beacon. It took more iterations to also establish the values for the ultrasonic sensor. In total, from the start of writing the final code to finally getting everything working right, we wrote 16 different iterations of our program.

Experiment

Conducting the experiment was very easy because we had the whole setup working perfectly, so all we needed to do was add the right number of beacons, position and turn on the robots, and then record the data.

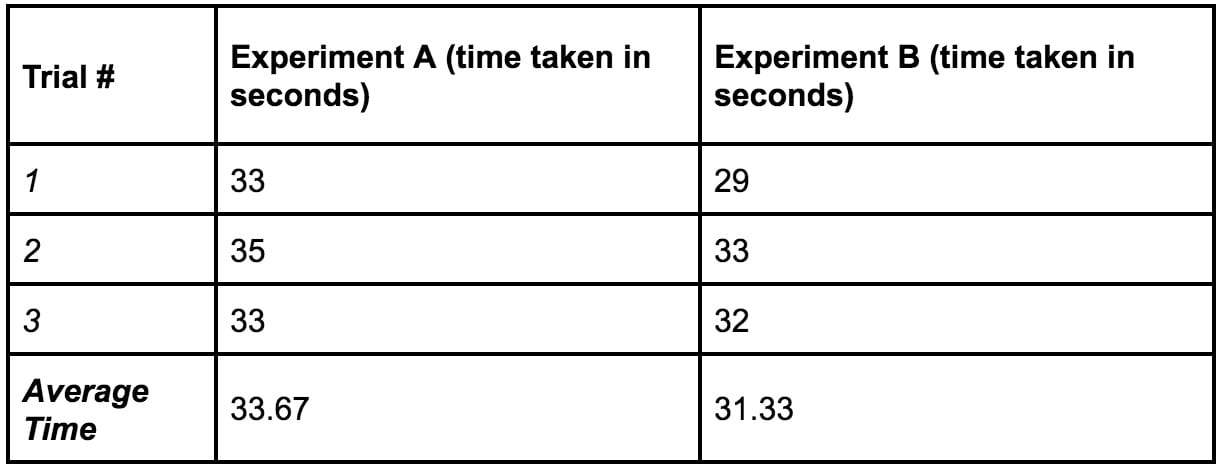

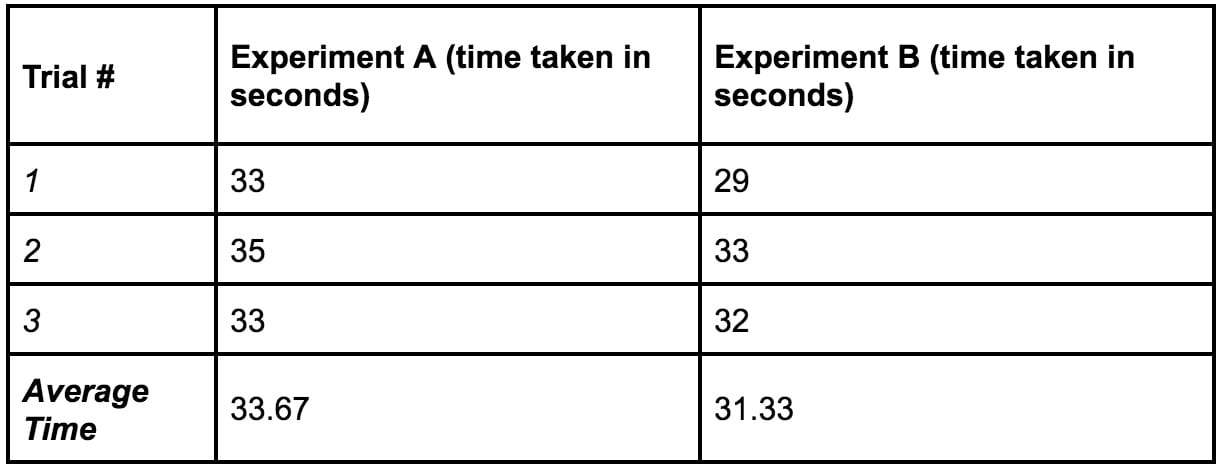

Afterwards, I used the chi-squared test to analyze the significance of our data, which indicated that there was no significant relationship between the strength of the infrared signal and the time it takes for all the ants to arrive at the food beacon zone. The full details of this experiment can be found in my final research paper.
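For readers unfamiliar with the test, the chi-squared statistic compares observed counts against the counts expected if the two variables were unrelated. The sketch below shows the calculation for a table of counts. The counts are made up and are not our experimental data, and it assumes the arrival times were grouped into categories (for example, fast and slow); the exact setup of the test is described in the research paper, not here.

```typescript
// Pearson chi-squared statistic for a table of observed counts.
function chiSquared(observed: number[][]): { statistic: number; dof: number } {
    const rowTotals = observed.map(row => row.reduce((a, b) => a + b, 0));
    const colTotals = observed[0].map((_, j) =>
        observed.reduce((sum, row) => sum + row[j], 0));
    const total = rowTotals.reduce((a, b) => a + b, 0);

    let statistic = 0;
    observed.forEach((row, i) => {
        row.forEach((count, j) => {
            // Count expected in this cell if rows and columns were independent.
            const expected = (rowTotals[i] * colTotals[j]) / total;
            const diff = count - expected;
            statistic += (diff * diff) / expected;
        });
    });
    const dof = (observed.length - 1) * (observed[0].length - 1);
    return { statistic, dof };
}

// Made-up counts, NOT the experiment's data.
// Rows: 1, 2, and 3 beacons. Columns: fast arrivals, slow arrivals.
const exampleCounts = [
    [10, 10],
    [12, 8],
    [11, 9],
];
const result = chiSquared(exampleCounts);

// Standard critical value for 2 degrees of freedom at the 0.05 level.
const CRITICAL_DF2_P05 = 5.991;
const significant = result.statistic > CRITICAL_DF2_P05;
```

A statistic below the critical value, as with these placeholder counts, means the differences between rows are small enough to be explained by chance.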

Final Code

Microsoft MakeCode

JavaScript

https://github.com/bishchand/BIRSFinal/blob/master/BIRSFinal.js