Task:

Melody Project: Design and implement an interactive exploration of melody. Record a 20-second composition using it.

Final Result:

Inspiration and concept:

I built this project on top of the melody sequencer I made previously. My initial concept was a melody sequencer with an option to generate a random melody to inspire new ideas. However, without Markov chains or neural nets, the generated melodies did not sound very good. I spent a lot of time building common music-theory knowledge into the generation algorithm to make it less random, for example giving the next note a higher chance of landing close to the previous note and a higher chance of landing on a comfortable interval of the scale. The result was acceptable, but I decided to shift the focus of this project from generating melodies to recognizing them, using CREPE, a pitch-detection model available in ml5.js.
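The weighting idea above can be sketched as a roulette-wheel choice over the 12 rows. This is a minimal sketch, not the original algorithm: it assumes the rows are consecutive semitones, and the weights (1 / (1 + leap), doubled for a hypothetical set of "comfortable" intervals) and every name here are illustrative. The random source is a parameter so the choice can be tested deterministically.

```typescript
// Hypothetical: the 12 grid rows are treated as consecutive semitones.
const NOTE_COUNT = 12;

// Hypothetical "comfortable" intervals in semitones:
// minor 3rd, major 3rd, perfect 4th, perfect 5th.
const COMFORTABLE_INTERVALS: number[] = [3, 4, 5, 7];

// Weight for moving from `prev` to `next`: smaller leaps get more weight,
// and comfortable intervals get doubled (both rules are assumptions).
function noteWeight(prev: number, next: number): number {
  const leap = Math.abs(next - prev);
  const closeness = 1 / (1 + leap);
  const bonus = COMFORTABLE_INTERVALS.indexOf(leap) !== -1 ? 2 : 1;
  return closeness * bonus;
}

// Roulette-wheel selection: each row is chosen with probability
// proportional to its weight relative to the previous note.
function nextNote(prev: number, rand: () => number = Math.random): number {
  const weights: number[] = [];
  for (let n = 0; n < NOTE_COUNT; n++) weights.push(noteWeight(prev, n));
  const total = weights.reduce((sum, w) => sum + w, 0);
  let r = rand() * total;
  for (let n = 0; n < NOTE_COUNT; n++) {
    r -= weights[n];
    if (r < 0) return n;
  }
  return NOTE_COUNT - 1; // guard against floating-point rounding
}

// Generate `count` notes, starting from row `start`.
function randomMelody(count: number, start: number, rand: () => number = Math.random): number[] {
  if (count <= 0) return [];
  const melody: number[] = [start];
  while (melody.length < count) {
    melody.push(nextNote(melody[melody.length - 1], rand));
  }
  return melody;
}
```

Calling `randomMelody(8, 5)` returns eight row indices in 0 to 11, where small steps and the boosted intervals are favored but larger leaps remain possible.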

Description of your main music and design decisions:

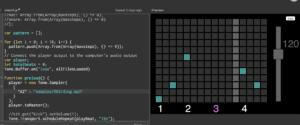

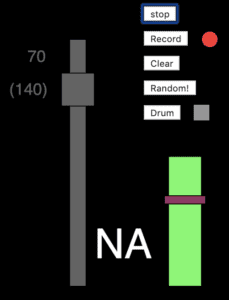

Most of the design decisions in this project carry over from the melody sequencer sketch from the previous assignment: a clean, simple look with a dark background and light blue blocks. Improvements over that sequencer include more buttons and features, plus minor design tweaks such as showing the halftime-equivalent bpm (double the current value) in parentheses when the current bpm is under 100. This one is purely personal preference: I like to program in halftime, so I think of a track like that as 140 bpm rather than 70 bpm, for example.
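A minimal sketch of the tempo label, assuming the value in parentheses is simply double the current bpm (as in the 70 versus 140 example); `formatBpm` and `HALFTIME_THRESHOLD` are hypothetical names.

```typescript
// Below this tempo, the doubled (halftime-feel) tempo is shown as well.
const HALFTIME_THRESHOLD = 100;

// Build the tempo label shown in the interface.
function formatBpm(bpm: number): string {
  return bpm < HALFTIME_THRESHOLD ? `${bpm} (${bpm * 2})` : `${bpm}`;
}
```

With this rule, `formatBpm(70)` returns `"70 (140)"` and `formatBpm(140)` returns `"140"`.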

Overview of how it works:

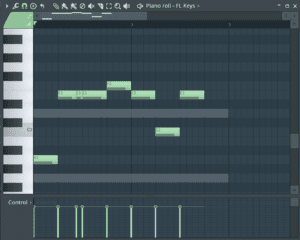

The melody sequencer itself works like the sequencer you would expect in a DAW: I created a two-dimensional array with one dimension for time and the other for note pitch. The grid is 12 × 32, that is, 12 available notes across a 32-beat sequence.
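The 12 × 32 grid can be represented as a two-dimensional boolean array. In this sketch the outer index is the beat and the inner index is the note row; that layout, the helper names, and the wrap-around playhead are assumptions for illustration rather than the original code.

```typescript
const SEQ_ROWS = 12;  // available notes (pitch dimension)
const SEQ_STEPS = 32; // beats in the sequence (time dimension)

// Assumed layout: grid[step][row] is true when that note is on at that beat.
type Grid = boolean[][];

function createGrid(): Grid {
  const grid: Grid = [];
  for (let step = 0; step < SEQ_STEPS; step++) {
    const column: boolean[] = [];
    for (let row = 0; row < SEQ_ROWS; row++) column.push(false);
    grid.push(column);
  }
  return grid;
}

// Flip one cell on or off, e.g. when the user clicks a block.
function toggleCell(grid: Grid, step: number, row: number): void {
  grid[step][row] = !grid[step][row];
}

// Rows to trigger at a given step; the playhead wraps after the last beat.
function activeRows(grid: Grid, step: number): number[] {
  const column = grid[step % SEQ_STEPS];
  const rows: number[] = [];
  for (let row = 0; row < SEQ_ROWS; row++) {
    if (column[row]) rows.push(row);
  }
  return rows;
}
```

Storing one column per beat makes playback a single array lookup per step.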

For pitch recognition I used CREPE, a model available in ml5.js that returns the estimated frequency of the microphone input. I originally planned to use this model to draw directly on the melody sequencer, much like an audio-to-MIDI conversion program, but cleaning up the raw output into a working interface took a lot more work than I expected. I used multiple “filters” to clean up this information so that the program runs stably.

Avoiding jumps in frequency:

The frequency values picked up by a mic can be unstable even when one sound dominates the input: picking up a single stray tone can put an outlier into an otherwise steady sequence of frequencies.

For example, these are the detected pitch values (in Hz) when an A is played on the piano:

440, 440, 439, 440, 700, 440, 440

A very different number can appear in the sequence, either because another sound was picked up or because the model detected a harmonic of the note being played.

I used a counter that checks whether the current frequency matches the previous one and increments when they match. A note is only registered on the sequencer once the counter reaches a set value.
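A minimal sketch of the counter filter, fed the example readings above. It assumes a 10 Hz tolerance between consecutive readings (so 439 counts as the same as 440), that a mismatch resets the counter, and a threshold of three matches; the class and constant names are hypothetical, and the write-up does not state the actual values.

```typescript
// Hypothetical settings; the actual values are not given in the write-up.
const MATCH_TOLERANCE_HZ = 10; // consecutive readings this close count as "the same"
const REQUIRED_MATCHES = 3;    // matches needed before a note is registered

class StablePitchFilter {
  private previous: number | null = null;
  private count = 0;

  // Feed one pitch reading; returns the frequency to register, or null.
  push(freq: number): number | null {
    if (this.previous !== null && Math.abs(freq - this.previous) <= MATCH_TOLERANCE_HZ) {
      this.count++;
    } else {
      this.count = 0; // assumption: a mismatch restarts the count
    }
    this.previous = freq;
    // Register once per stable run, exactly when the counter hits the threshold.
    return this.count === REQUIRED_MATCHES ? freq : null;
  }
}
```

With these settings, the readings 440, 440, 439, 440, 700, 440, 440 register 440 Hz on the fourth reading, and the 700 Hz outlier is never registered.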

Ignoring frequencies that fall outside the 12 notes available in my interface also improves performance.
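A sketch of the range limit, assuming (hypothetically) that the 12 rows are the chromatic notes C4 to B4 in equal temperament with A4 = 440 Hz; the actual notes in the interface are not stated. Each row's expected frequency is 440 · 2^((m - 69) / 12) for MIDI note m, and readings outside the lowest and highest row, plus a small hypothetical slack, are dropped before any further processing.

```typescript
// Hypothetical: rows are MIDI notes 60-71 (C4..B4), 12-TET with A4 = 440 Hz.
const LOWEST_MIDI = 60;
const ROW_COUNT = 12;
const RANGE_SLACK_HZ = 10; // hypothetical allowance past the outermost notes

// Equal-temperament frequency of a MIDI note number.
function midiToFreq(midi: number): number {
  return 440 * Math.pow(2, (midi - 69) / 12);
}

// Expected frequency for each row, lowest note first.
const ROW_FREQS: number[] = [];
for (let i = 0; i < ROW_COUNT; i++) ROW_FREQS.push(midiToFreq(LOWEST_MIDI + i));

// True if a detected frequency could belong to one of the 12 rows.
function inRange(freq: number): boolean {
  return freq >= ROW_FREQS[0] - RANGE_SLACK_HZ &&
         freq <= ROW_FREQS[ROW_COUNT - 1] + RANGE_SLACK_HZ;
}
```

Under these assumptions B4 is about 493.88 Hz, so the 700 Hz outlier from the earlier example is discarded here as well.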

Avoiding fluctuations in pitch:

I also gave the pitch matching some flexibility, so an instrument can be recorded accurately even if it is slightly out of tune.

I achieved this with the modulo operator, taking the remainder of the detected frequency divided by the expected frequency:

443 (detected frequency) % 440 (expected frequency) = 3

As long as this remainder is within 10 Hz, I accept the input as the same note.
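The remainder check can be sketched as follows, using the 10 Hz tolerance from the text. The remainder as written only catches sharp input: a slightly flat 437 Hz gives 437 % 440 = 437. The second function is a variant, not necessarily the original code, that also measures how far the remainder falls short of the expected frequency so that flat input within 10 Hz is accepted too. `matchRow` and the other names are hypothetical.

```typescript
const PITCH_TOLERANCE_HZ = 10; // tolerance described in the text

// Remainder check as described: 443 % 440 = 3, which is within tolerance.
function matchesBySharpRemainder(detected: number, expected: number): boolean {
  return detected % expected <= PITCH_TOLERANCE_HZ;
}

// Variant that also accepts slightly flat input by checking how close the
// remainder is to the next multiple of the expected frequency.
function matchesByRemainder(detected: number, expected: number): boolean {
  const r = detected % expected;
  return r <= PITCH_TOLERANCE_HZ || expected - r <= PITCH_TOLERANCE_HZ;
}

// Index of the first expected frequency the reading matches, or -1 if none.
function matchRow(detected: number, expectedFreqs: number[]): number {
  for (let i = 0; i < expectedFreqs.length; i++) {
    if (matchesByRemainder(detected, expectedFreqs[i])) return i;
  }
  return -1;
}
```

With expected frequencies `[415.3, 440, 466.16]` (G#4, A4, A#4), both 443 Hz and 437 Hz map to index 1, while 428 Hz matches nothing. Because any exact multiple also leaves a remainder of 0 (880 % 440 = 0), this check relies on the range limit to reject other octaves. Around C4, adjacent semitones are only about 15.6 Hz apart, so ±10 Hz windows can overlap there; `matchRow` simply takes the first match.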

Setting the input level:

Another improvement was an amplitude threshold: frequencies are only taken into consideration when the sound is louder than the threshold I set. That way recording only starts when an instrument is played, and soft sounds that also reach the mic do not affect the result. I also created a visual for this that shows the threshold and the current loudness, with a fill whose color changes from blue to green to red.
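A sketch of the input gate and meter color, assuming a normalized 0 to 1 input level (for example the value returned by p5.sound's `getLevel()`). The threshold value, the blue-green-red breakpoints, and all names are hypothetical.

```typescript
// Hypothetical threshold on a normalized 0-1 input level.
const LEVEL_THRESHOLD = 0.05;

// Pass a pitch reading through only when the input is louder than the threshold.
function gatePitch(freq: number, level: number): number | null {
  return level > LEVEL_THRESHOLD ? freq : null;
}

type RGB = [number, number, number];

// Linear interpolation between two colors, t in [0, 1].
function mixColor(a: RGB, b: RGB, t: number): RGB {
  return [
    Math.round(a[0] + (b[0] - a[0]) * t),
    Math.round(a[1] + (b[1] - a[1]) * t),
    Math.round(a[2] + (b[2] - a[2]) * t),
  ];
}

const METER_BLUE: RGB = [0, 0, 255];
const METER_GREEN: RGB = [0, 255, 0];
const METER_RED: RGB = [255, 0, 0];

// Meter fill: blue at silence, green at half scale, red at full scale.
function meterColor(level: number): RGB {
  const t = Math.min(Math.max(level, 0), 1); // clamp to [0, 1]
  return t < 0.5
    ? mixColor(METER_BLUE, METER_GREEN, t * 2)
    : mixColor(METER_GREEN, METER_RED, (t - 0.5) * 2);
}
```

Keeping the gate separate from the pitch filters means quiet input never reaches the counter at all.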

Challenges + future work:

This is probably one of my least polished projects so far, mainly because of how hard it is to accurately track sounds and interpret them as MIDI. More enhancements are needed before the program is functional enough for actual use rather than a tech demo. After that, I would also like to explore combining this with more machine-learning interaction. I envision a program similar to the duet shown in class, but instead of keyboard input, the program would take humming via pitch detection.