The thoughts that Yoko Ono shared on her creative process certainly made me rethink the way we source inspiration for practically any sort of project. I found it very intriguing that she was able to transform and transcribe the environmental variables around her into her own musical notes. In essence, she was able to take the reality around her and transform it into something of her own: her own interpretation of her reality. This also brought up another point about artistic interpretation. While one may look at a particular art piece and give their own critique and comments about it, the piece may not have conveyed the message that the artist wished to convey to their audience. This may be due to the passing of time and the subsequent decline in the number of people who actually knew what the piece meant, or simply because the piece itself was not executed properly.

Another important point from this dialogue was the idea that one must not constrain oneself to the regular, three-dimensional space that one is used to, and must feel free to think about how different elements may interact or fuse in higher dimensions. As Yoko said, “You can mix two paintings together in your mind- though physically that’s impossible to do.”

She brings up the idea of “mixing” the wind and a building, and while that sounds physically preposterous, her creativity and potential do not need to be tied down to the constraints of physical reality. Rather, she would think of how they would interact in higher dimensions, and bring them down to some form that is interpretable in our familiar space. I think this point was particularly important to me because it was essentially talking about a concept-driven approach versus a practical, implementation-oriented approach. As a computer scientist and programmer, I often neglect the concept and focus more on the actual implementation and technology, so this dialogue helped me rethink the way I approach a creative project.

Week 06 : Response to Homecoming – Abdullah Zameek

Listening to the first episode of Homecoming wasn’t my first experience with podcasts. In the past, I have listened to a bunch of tech-related podcasts on Spotify, and it was simply something I used to do as a way of passing time while working out, etc. I never thought of podcasts as particularly immersive, but this ~30-minute clip was quite captivating in many senses. First of all, the background. I think most of the immersion can be credited to the smart use of background sounds. For example, the sound of sirens, the opening and closing of doors, the setting up of a microphone, and so on, helped conjure a mental picture of what the setting looked like. One particularly interesting “auditory” scene was when Heidi was talking to the officer outside of her workplace and her boss opened the back door to tell Heidi that her break time was up. The sound effects accompanying that moment really conveyed what the scene must have looked like. Additionally, the background sounds helped set the mood of the different scenes, along with the different vocal expressions that each of the characters used.

However, one thing that I had trouble keeping up with was the chronological flow of time in the story. Although I did get it at the end, I was confused during the course of the audio and would stop and ponder exactly what part of the timeline I was listening to.

My final comment on the audio is a more general statement on the use of audio as a medium of storytelling. We often interpret audio stories by trying to pictorially visualize what is being told, but what does it mean to visualize the audio itself? Would that even be possible? Even though audio is a strong medium for the delivery of a story, it could very well be substituted with a video with subtitles, so that raises the question as to which forms of stories are best able to capitalize on the medium itself.

Week 05 – CIFAR-10 Training – Abdullah Zameek

For this assignment, it came down to a question of which hyperparameters I was going to tweak and experiment with. Since I’m still uncertain as to what num_classes is (and still reading up on it), I decided to just tweak batch_size and epochs. I set the number of epochs to 3, and adjusted the batch size starting from 16 all the way up to 2048.

I chose three epochs because it allowed me to obtain results in a relatively short period of time. Three epochs are nowhere near sufficient to obtain a good result, but since I’m measuring how the loss changes relative to the batch size, I think it doesn’t matter as much.

The results have been summarised below.

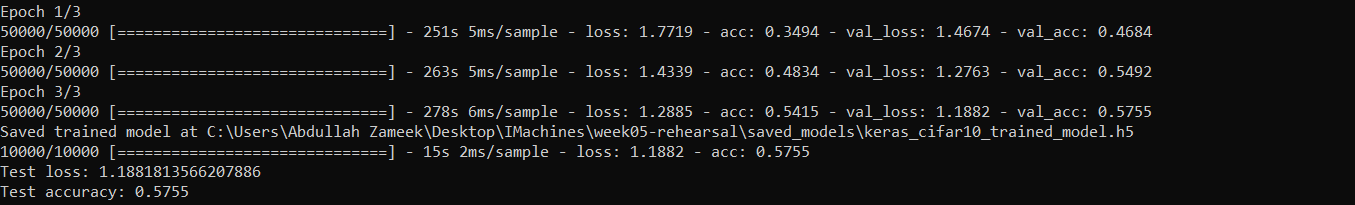

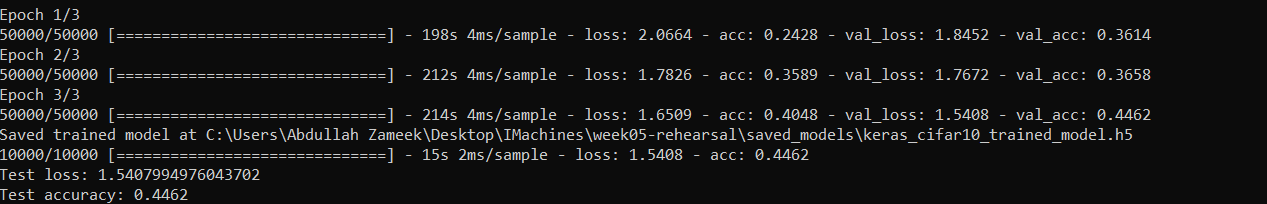

Batch Size of 16

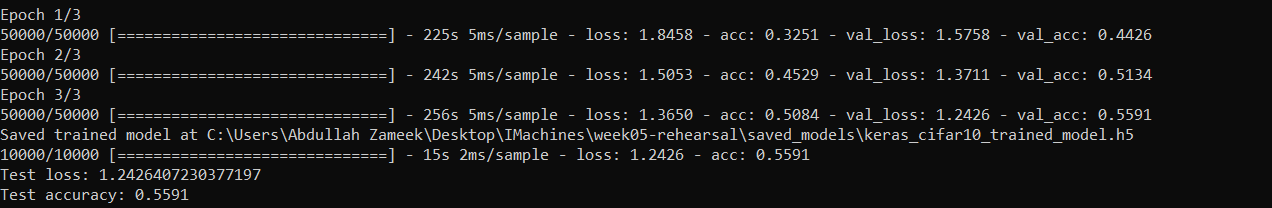

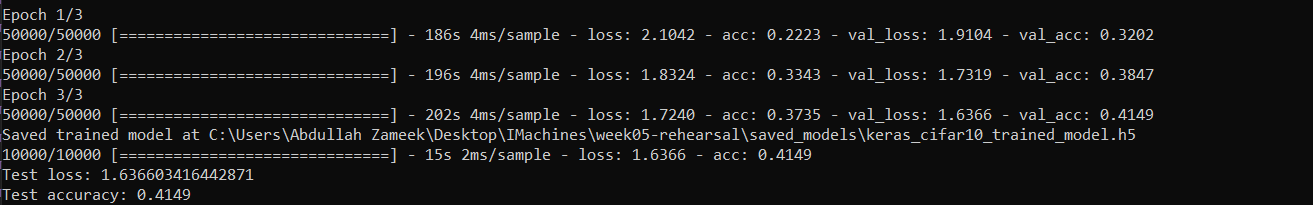

Batch Size of 32

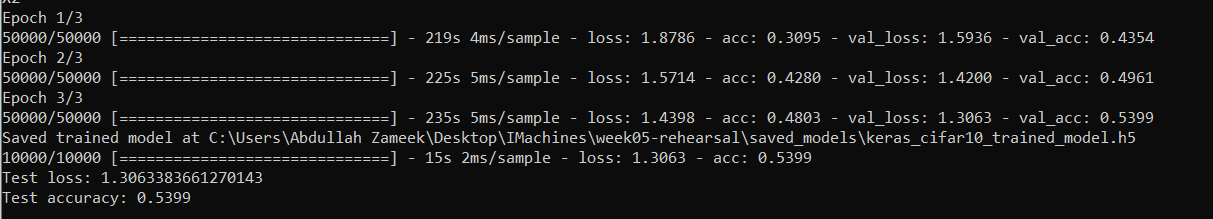

Batch Size of 64

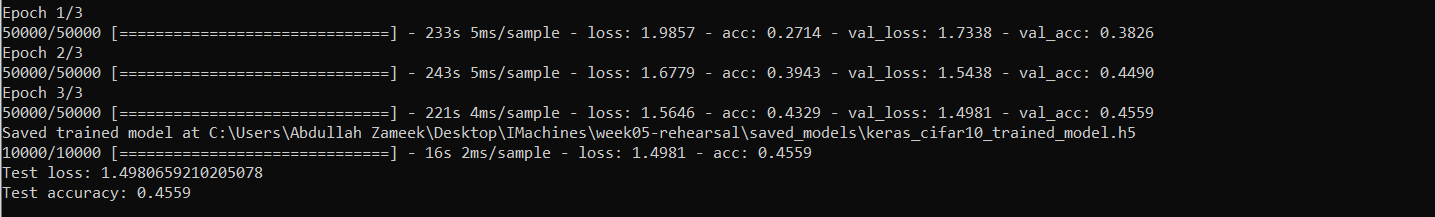

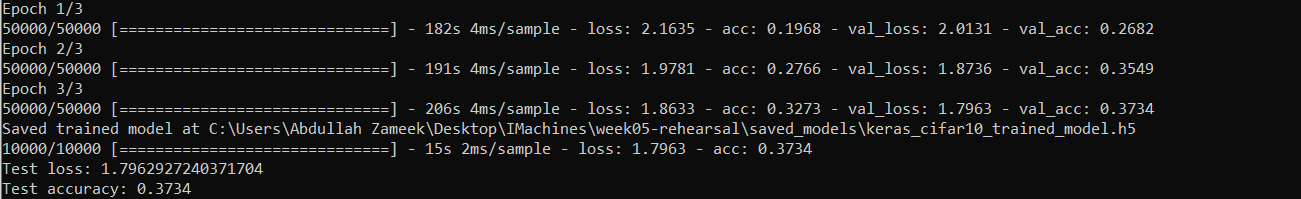

Batch Size of 128

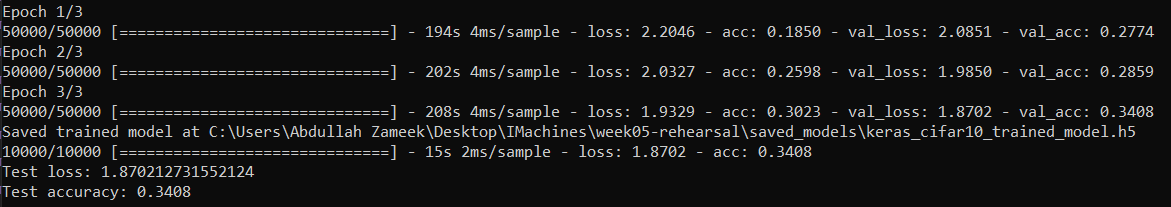

Batch Size of 256

Batch Size of 512

Batch Size of 1024

Batch Size of 2048

| Batch Size | Test Accuracy | Test Loss |
| --- | --- | --- |
| 16 | 0.5755 | 1.1882 |
| 32 | 0.5591 | 1.2426 |
| 64 | 0.5399 | 1.3063 |
| 128 | 0.4559 | 1.4981 |
| 256 | 0.4462 | 1.5408 |
| 512 | 0.4149 | 1.6366 |
| 1024 | 0.3734 | 1.7963 |
| 2048 | 0.3408 | 1.8702 |

As can be seen from the table above, accuracy falls steadily as the batch size grows. A batch size of 16 gave the best result of those tested, with 32 close behind.

I’m still not sure about the technical rationale as to why the test accuracy drops as the batch size increases, but I think it is because of the following. The training set stays the same size, so a larger batch size means fewer batches per epoch and therefore fewer weight updates. Since we are only iterating over the data three times, a model trained with large batches does not get enough updates to learn the classes well, which makes it less accurate. With a smaller batch size, such as 32, the model is updated many more times over the same three epochs, which leads to a better result.
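The number of weight updates behind each row of the table can be worked out directly. The sketch below is illustrative only: it assumes the standard CIFAR-10 training split of 50,000 images (the training-set size is not stated above), and `updatesPerRun` is a name made up for this example.

```javascript
// Assumption: the standard CIFAR-10 training split of 50,000 images.
const TRAIN_SAMPLES = 50000;
// The number of epochs used in the experiment above.
const EPOCHS = 3;

// Number of weight updates performed over a whole training run.
function updatesPerRun(batchSize, samples = TRAIN_SAMPLES, epochs = EPOCHS) {
  // The last batch of an epoch may be partial, hence Math.ceil.
  return Math.ceil(samples / batchSize) * epochs;
}

for (const batchSize of [16, 32, 64, 128, 256, 512, 1024, 2048]) {
  console.log(batchSize, updatesPerRun(batchSize));
}
```

Under these assumptions, a batch size of 16 gets 9,375 updates over three epochs, while a batch size of 2048 gets only 75.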

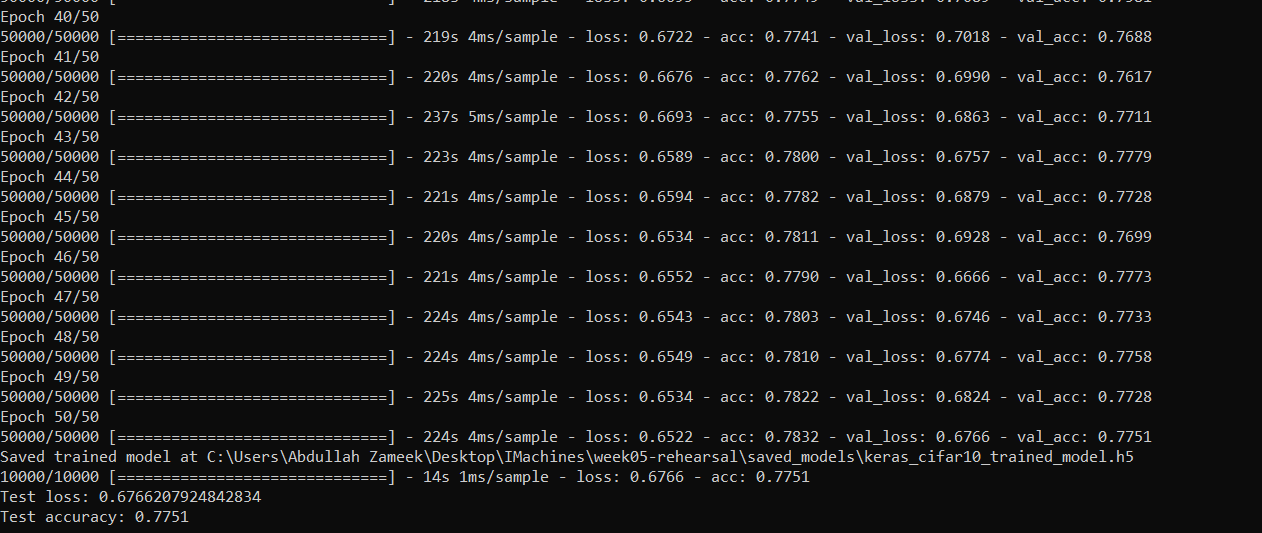

I decided to increase the number of epochs for a batch size of 32, so I set it to 50 and let it run overnight. I got the following result.

The reported test loss was 0.67667 and the test accuracy was 0.7751. Great!

It seems to have greatly improved since the 3-epoch run, and I expect that the accuracy might converge to around 0.95+ after 300 epochs or so. Maybe this is something I would like to test once I know how to use the Intel Server.

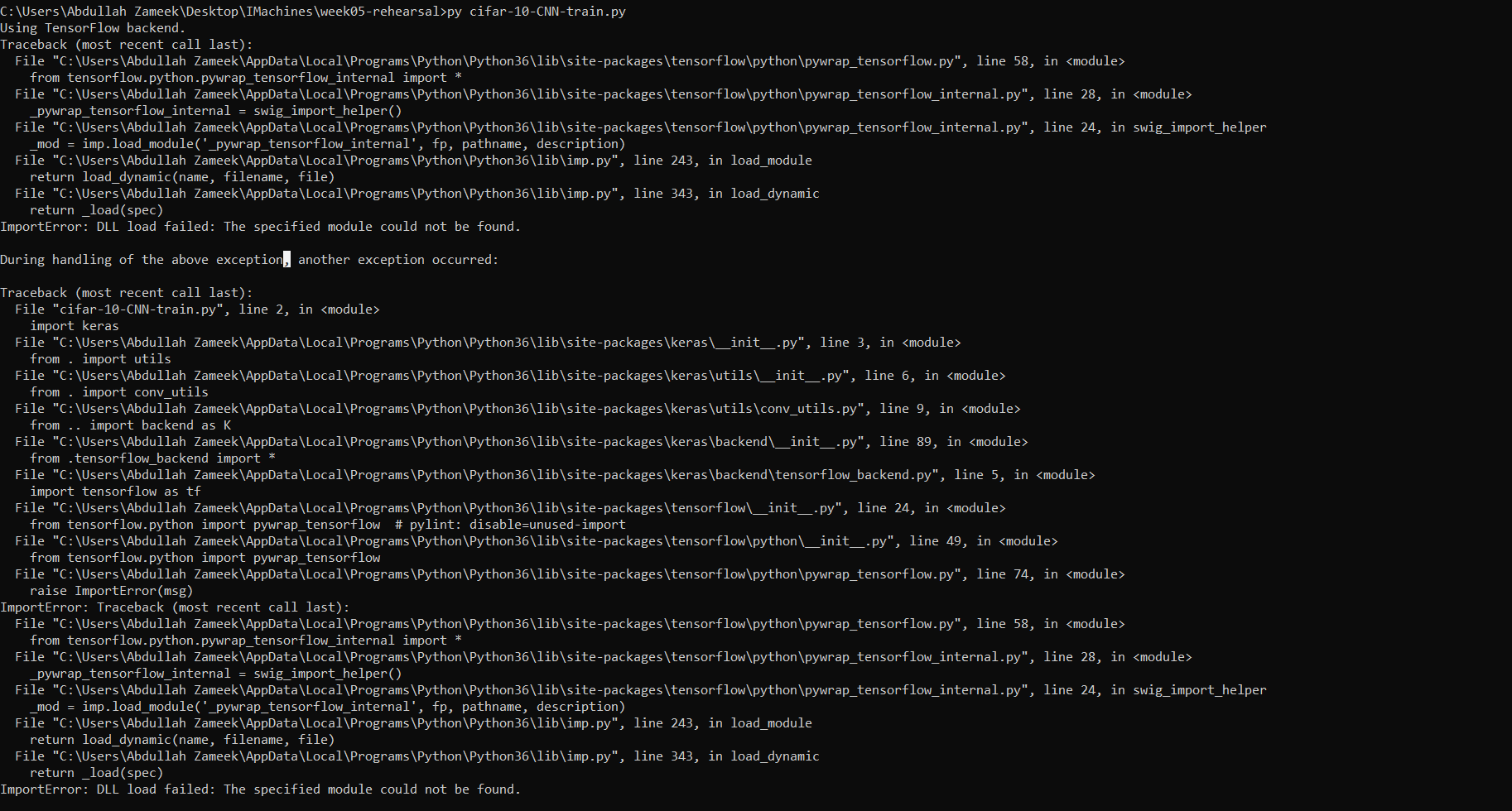

When attempting the Data Augmentation part, I got the following error straight off the bat

Interactive Comic Project Documentation : Abdullah Zameek

The comic can be viewed here

[Disclaimer : The JavaScript used in this project uses the fetch function to retrieve a few resources that are hosted on separate, remote servers. Modern browsers enforce the same-origin policy and block such reads unless the remote server explicitly allows them, which causes a CORS (Cross-Origin Resource Sharing) error. To circumvent this, there are extensions for Chrome, Firefox and the like that enable CORS, which allows all the text to be loaded and displayed normally. I will be looking into a method that allows me to load the text in a much more stable, predictable manner, such as through a JSON file, but for now, this method seemed to be the most convenient.]

Concept :

The comic itself is heavily inspired by the “Zero Escape” visual novel trilogy, especially the last installment in the series, “Zero Time Dilemma”. Initially, we were going for a comic-book style layout, but ended up opting for a visual novel style format since that form is able to convey the storyline a lot better than a comic from our perspective.

The protagonist (you) wakes up in his car in an abandoned parking lot. He is confused as to how he got there, but even before he begins to think about it, he sees a silhouette in front of him, and he passes out…

When he wakes up, he finds himself in a sort of dark dungeon. But he isn’t alone: there seem to be others with him. A young teenage girl. A little boy and what looks like his granddad. A young delinquent with tattoos. A nervous-looking teenage boy. And a meek young woman. You do not recognize any of these people, and they don’t seem to know each other either. For what it’s worth, you and your newfound group start to explore the dungeon in an attempt to escape. You make your way to a hall where there seems to be a message painted in blood on the wall. “Trust No One”, it seems to say. With this, a seed of distrust has immediately been sown in your group.

From this point on, you make your way through different parts of the dungeon, where you will make some startling discoveries and unravel certain secrets (both in the game and out), some of which will be completely life-changing (to your character, not you).

Implementation :

The layout is simple – a background image with a character sprite image overlaid on top, with the dialogue beneath it. The user clicks a button to move to the next scene, and will eventually be presented with three choices. Each of the three choices leads to a certain ending, and the user has to unlock the fourth, final, good ending themselves. This was easy to do with a bit of code, and I believe this really taps into the potential of the medium, where the user is put through a new level of immersion in which they have to interact with the mechanics of the game itself. This also seems to break the fourth wall in some sense, since the character that you play as (or whose eyes you see the world through) seems to be reliving the same events again and again, until you finally reach the true ending.
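One way the unlocking could work is sketched below. This is not the comic's actual code: the ending ids are made up, and the rule that the fourth ending opens up only after all three regular endings have been seen is an assumption made for illustration.

```javascript
// Hypothetical ending ids; the real project may name them differently.
const REGULAR_ENDINGS = ["ending-1", "ending-2", "ending-3"];

// Record a reached ending and return the updated set of seen endings.
function recordEnding(seen, endingId) {
  const next = new Set(seen);
  next.add(endingId);
  return next;
}

// Assumption: the fourth ending unlocks once all three regular endings are seen.
function isTrueEndingUnlocked(seen) {
  return REGULAR_ENDINGS.every((id) => seen.has(id));
}
```

Keeping the seen endings in a `Set` means that replaying the same route several times does not count twice.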

HTML & CSS-

The entire comic runs solely on a single html page (index.html) and a single style sheet (style.css). Because of the way the comic is structured, it made sense to have just a single page and swap out the different elements as the story progresses.

The implementation on the HTML+CSS end was fairly straightforward. I used basic elements such as divs, p tags and so on, and got the elements onto the right spots using translate with percentages. However, I did encounter some trouble after gathering all the assets at the last moment. The divs were all out of place, and the background images and character sprites wouldn’t overlap. After playing around with the position property of each element and adding z-indices, I was able to fix it somewhat. I also included audio on the page using the audio tag. My initial plan was to use JavaScript for the audio, but it got too obfuscated through promises and other ES6 jargon. Shoutout to Kimmy for giving some direction on getting the audio up and running!

Javascript-

This is where most of the ‘magic’ happens. And, this is also where I ran into all the problems during this project.

First of all, the script dialogue itself. It would be horribly cumbersome and inelegant to include the dialogue in the JS file itself as an array or whatever, so I decided to dump all the text into a text file and make my script read from that file. However, I soon came to realise that doing so in JS is quite a pain because, by default, JS running in the browser does not have a method to read txt files directly. After a quick Google search, I found multiple workarounds. The first was the ‘fs’ module, but that meant introducing Node.js, npm, node_modules and all the clutter that comes with a Node application, so it was immediately crossed out. The next option was an XMLHttpRequest. It seemed fairly reasonable, so I tried it out, and it worked. However, I ran into trouble when I tried to use the loaded text outside of the function, and later learnt that this was because the request is asynchronous: the rest of the script keeps running without waiting for the file to finish loading. The solution was to pass the code that needs the text in as a callback, but that soon proved to be too cumbersome and not very reliable. I ended up using the Fetch API to “fetch” the data from a remote source (in this case, I just uploaded the text file onto the IMANAS server and pointed to that location). The function returned a Promise from which I was able to extract the text and use it well outside of the function scope.
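The Fetch approach described above can be sketched roughly as follows. This is a minimal illustration rather than the project's actual code: the file name `script.txt`, the function names, and the assumption that each line of the file holds one line of dialogue are all made up for the example.

```javascript
// Split raw script text into non-empty dialogue lines.
// Assumption: one line of dialogue per line of the text file.
function parseScript(text) {
  return text
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line.length > 0);
}

// Fetch a text file and resolve to its dialogue lines.
// `fetchImpl` defaults to the global fetch and can be swapped for testing.
async function loadScript(url, fetchImpl = fetch) {
  const response = await fetchImpl(url);
  if (!response.ok) {
    throw new Error(`Failed to load ${url}: ${response.status}`);
  }
  return parseScript(await response.text());
}

// Usage: anything that needs the text runs after the Promise resolves.
// loadScript("script.txt").then((lines) => console.log(lines[0]));
```

The key point is that the text is only available inside `.then(...)` (or after `await`), which is why reading it from a plain variable right after starting the request does not work.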

Next up, I wanted to include some BGM to make the experience more “thrilling”. My initial idea was to do most of it through JavaScript, but apparently modern browsers (Chrome, etc.) do not allow .play() to start audio automatically before the user has interacted with the page; instead, the call returns a Promise that is rejected when playback is blocked. After spending a sizeable amount of time dealing with broken code (straight out of Google’s documentation), I noticed Kimmy had sound on her website, and it turns out she used an iframe for the sound. I didn’t use an iframe in the end, just the audio HTML tag with an id, and that seemed to work just fine.
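For reference, handling the Promise returned by `.play()` can look roughly like the sketch below. The helper name `tryPlay` and the element ids in the usage comment are placeholders, not names from the project.

```javascript
// Try to start playback; resolves to true if it started, false if it was blocked.
function tryPlay(audio) {
  const result = audio.play();
  // Older browsers return undefined instead of a Promise.
  if (result === undefined) {
    return Promise.resolve(true);
  }
  return result.then(
    () => true,
    () => false // e.g. autoplay blocked until the user interacts with the page
  );
}

// Usage in a page (the ids "next" and "bgm" are placeholders):
// document.getElementById("next").addEventListener("click", () => {
//   tryPlay(document.getElementById("bgm"));
// });
```

Starting the music from inside a click handler, as in the usage comment, is usually enough to satisfy the browser's autoplay rules.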

The background and character sprite images were once again controlled through an array that was preloaded from a text file hosted on a remote server, read using the Fetch API, and then decoded from the Promise that was returned. The sound was added at the last moment, so I did not have time to write a clean, elegant block of code to control it, and I just hardcoded the points at which the sound should change.
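A tidier alternative to hardcoding the sound changes could be a small cue table keyed by scene number, sketched below. The scene indices and the function name are invented for illustration and do not come from the project.

```javascript
// Hypothetical cue table: scene index -> action for the background music.
const SOUND_CUES = new Map([
  [0, "play"],
  [12, "pause"],
  [13, "play"],
]);

// Apply the cue for this scene, if any; returns the action taken or null.
function applySoundCue(sceneIndex, audio, cues = SOUND_CUES) {
  const action = cues.get(sceneIndex) ?? null;
  if (action === "play") {
    const started = audio.play();
    // Swallow a rejected play() Promise so a blocked autoplay does not surface as an error.
    if (started !== undefined) started.catch(() => {});
  }
  if (action === "pause") audio.pause();
  return action;
}
```

Calling `applySoundCue(sceneIndex, audioElement)` each time the scene changes keeps all the timing decisions in one place.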

Final Thoughts :

This project took me much more time than I expected, mostly because of unexpected circumstances that I had to deal with. I did enjoy coding up the JavaScript components though, since it taught me very valuable lessons about JS’s asynchronous workflow, which became very apparent from the beginning. I would have loved to add more choices and give the characters more backstory and context, but that would have only increased the runtime of the entire story. All in all, I am quite pleased with the overall outcome, even though it does have a few bugs and inconsistent design elements that may or may not be entirely apparent at first glance. Talking about the comic/game/visual novel itself, I think it could be thought of as a sort of short teaser to a bigger comic/visual novel/game, since there were so many loose threads at the end.

With that being said, all the background music and character sprites are the intellectual property of Chunsoft Games and of the artists who produced them, namely Kinu Nishimura and Rui Tomono for the character designs, Shinji Hosoe for the background music, and Kotaro Uchikoshi for bringing the world of Zero Escape to life.

Week 4 : Guess Color JS Exercise – Abdullah Zameek

This exercise was fairly straightforward and a nice introduction to if() statements in JS. I didn’t have any problems in getting the base code to run, so I proceeded to add an extra feature to the code. I wanted the image to change at random each time you hover over a particular section, and that was pretty straightforward and took 4 extra lines of code.
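A random picker of this kind can be as small as the sketch below. The image file names and the elements in the usage comment are placeholders, not the ones used in the exercise.

```javascript
// Pick a random element; `random` can be swapped out for testing.
function pickRandom(items, random = Math.random) {
  return items[Math.floor(random() * items.length)];
}

// Hypothetical file names.
const IMAGES = ["red.png", "green.png", "blue.png"];

// Usage in a page (`section` and `img` are placeholder elements):
// section.addEventListener("mouseover", () => {
//   img.src = pickRandom(IMAGES);
// });
```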

You can try out my modified Guess Color with random image picker over here:

http://imanas.shanghai.nyu.edu/~arz268/communications-lab/Week4/