Project Concept:

Sam came up with the idea of creating a fun, thought-provoking documentary in which we go around campus and record people’s responses to a particular question. The question we posed, “When you fall asleep, where do you go?”, was inspired by Billie Eilish’s popular album “When We All Fall Asleep, Where Do We Go?”

Some of the respondents linked it immediately to Billie, while others were quite perplexed by such a random, strange question. The respondents first gave their initial thoughts on the question and then tried to recall an interesting dream of theirs, if they remembered any.

The link to the project can be found here and the code can be found in this repository. (Note: the assets are not available in the repo.)

Implementation:

Sam, Kyra and I were able to divide the work among the three of us reasonably evenly. Initially, all three of us went around campus to collect the audio and video for the project. Afterwards, Kyra and I did some sound editing in Audacity to remove the background noise from the audio clips before synchronising them with the video. The initial results were reasonably satisfactory, so we went along with them. However, after the initial feedback, Kyra shot more video and put together a second rough cut, while Sam worked on the first rough cut.

Deciding on the interaction was quite a challenge, and the three of us finally settled on a simple concept: we present two videos, a regular one and one with a “dream” filter, and the user can swap between them in real time by clicking a button. The dream filter was meant to evoke what it feels like to be in an actual dream. A common response was that people often forgot their dreams, or that the dreams were very hazy and they couldn’t really distinguish the faces and voices in them, and that is precisely the effect Sam implemented in the Premiere Pro filter.

Since Sam was already well-acquainted with Adobe Premiere Pro, she handled the bulk of the final edits and effects, while Kyra focused on creating the rough cut storyboard that Sam would then polish. Meanwhile, I gathered a couple of image assets to be used in the final video.

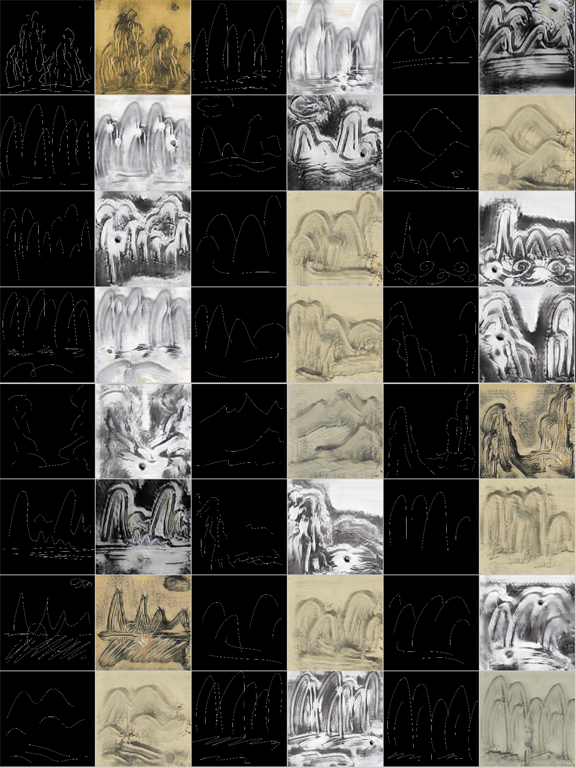

In addition, since the concept revolved around dreams and the ethereal, I created a short intro clip using DeepDream, a machine learning technique developed by a researcher at Google. It essentially runs an image through a computer vision model (a convolutional neural network) and amplifies the features the model detects in it, depending on the parameters that were set.

Here’s a clip that shows the raw, unedited DeepDream video:

Sam and I put together a simple website for the rough cut: I provided a basic HTML skeleton to work with, and Sam improved the visual side of the site by styling it with CSS. Afterwards, I worked on the JavaScript functionality for the toggle between the reality and dream modes.
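To give a rough idea of how a toggle like this can work, here is a minimal sketch in TypeScript. It is not the code from our repository: the names `VideoLike` and `ModeToggle` are made up for illustration, and it assumes the two cuts are separate video elements of the same length, so that swapping only requires copying the playback position from one to the other.

```typescript
// Hypothetical sketch, not the project's actual code.
// VideoLike lists only the parts of a browser <video> element used here,
// so the logic can also be exercised outside a browser.
interface VideoLike {
  currentTime: number;
  readonly paused: boolean;
  hidden: boolean;
  play(): void;
  pause(): void;
}

type Mode = "reality" | "dream";

class ModeToggle {
  private mode: Mode = "reality";
  private reality: VideoLike;
  private dream: VideoLike;

  constructor(reality: VideoLike, dream: VideoLike) {
    this.reality = reality;
    this.dream = dream;
    // Start in reality mode: only the regular cut is visible.
    this.reality.hidden = false;
    this.dream.hidden = true;
  }

  get current(): Mode {
    return this.mode;
  }

  // Swap the visible video, keeping the playback position and play state.
  toggle(): Mode {
    const from = this.mode === "reality" ? this.reality : this.dream;
    const to = this.mode === "reality" ? this.dream : this.reality;

    const wasPlaying = !from.paused;
    to.currentTime = from.currentTime; // resume at the same moment
    from.pause();
    from.hidden = true;
    to.hidden = false;
    if (wasPlaying) {
      to.play();
    }

    this.mode = this.mode === "reality" ? "dream" : "reality";
    return this.mode;
  }
}
```

In a browser, the two `<video>` elements could be passed straight into `ModeToggle`, with `toggle()` called from the button's click handler. Copying `currentTime` is what makes the swap feel like it happens in real time rather than restarting the clip.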

An additional feature I wanted to add was a basic p5.js particle sketch that would run in the background of the video in dream mode. However, because of a few last-minute technical issues, I removed the sketch, since it was interfering with the functionality of the rest of the site.

Here’s a quick demo of what the sketch would have looked like:

The actual website, however, was quite straightforward. We went with a very simple layout to make it as intuitive and as easy as possible to navigate while retaining the video as the center of attention.

Final Thoughts:

We took a bit of time to decide on a concept and means of execution for this project, since we changed our project idea completely from the initial proposal. The question itself sparked a myriad of responses, which was great because we wanted to see how people would react to such an “odd” question.

Once we decided on the concept, we were able to get through most of the technical tasks reasonably quickly. However, interactivity remained a huge question, and we couldn’t come up with more ways to make the video interactive without distorting the overarching theme of the project. I feel that if we had had more time to think about how to approach the question in a more engaging manner, we might have done things differently. But given the time frame and the scope we were working with, I’m reasonably satisfied with the outcome.