I come from Lima, Peru, a city where traffic accidents are the norm in some areas. In 2017, it was reported that in 70% of all traffic accidents in Lima, a pedestrian was either at fault or injured. I therefore proposed to Jackson that we tweak a pedestrian traffic light so that it acts not only as a warning but also as a deterrent for people wanting to cross on a red light. The idea was that, during the red-light period, the traffic light would detect whether someone was attempting to cross and sound an alarm. We looked at similar concepts already implemented in other countries to figure out how to make this work while keeping it interactive. In our case, the user interacts with the project by following the traffic light’s cues (red means stop and green means go), and not following them makes the traffic light sound an alarm. We watched a video showing the different ways cities in China have tried to deter jaywalkers, many of them too complicated given our programming skill level, the supplies available, and our time. After watching it, we decided to build a laser barrier that would activate a speaker if ‘broken’. The video we watched is embedded below:

We decided to aim a laser at an LDR (Light Dependent Resistor), which would measure the intensity of the laser and act like a tripwire: once it stopped detecting the laser, the value it read would surpass a threshold and activate a speaker. Here we ran into our first problem. We would have had to put the LDR on the other side of the table using a very long cable, which could be stepped on and would be unstable. Our professor (Rudi) therefore gave us an acrylic mirror, which we used to reflect the laser back into the LDR. This proved stable and let us aim the laser into the LDR while keeping both in the same spot. We tested this at distances of up to 1.30 meters (the distance we estimated there would be between tables during the user testing session) with favorable results: the laser was reflected into the LDR, which gave consistent values. We then added a speaker and programmed it to play a tune whenever the laser was interrupted.
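The tripwire logic described above comes down to a threshold comparison. Below is a minimal sketch in plain C++ so it can be tested off the board. The threshold of 600 is a hypothetical value on the 0 to 1023 scale of the Uno’s `analogRead()` and would need calibrating for the room and the laser; the code also assumes the LDR is wired so that the reading rises when the beam is blocked, as described above. The optional debounce (requiring several consecutive high readings) is an addition for illustration, not part of the original project.

```cpp
#include <cassert>

// Hypothetical threshold; the real value must be calibrated on site.
constexpr int kTripThreshold = 600;

// Assumes the LDR divider is wired so the reading RISES when the laser is
// blocked. analogRead() on an Uno returns 0..1023 (10-bit ADC).
bool laserTripped(int ldrReading, int threshold = kTripThreshold) {
  assert(ldrReading >= 0 && ldrReading <= 1023);
  return ldrReading > threshold;
}

// Optional debounce (not in the original project): only report a trip after
// `required` consecutive readings above the threshold, so one noisy sample
// does not set off the alarm.
class TripDetector {
 public:
  explicit TripDetector(int required) : required_(required) {
    assert(required > 0);
  }

  bool update(int ldrReading) {
    if (!laserTripped(ldrReading)) {
      streak_ = 0;  // beam seen again: reset the count
    } else if (streak_ < required_) {
      ++streak_;    // capped so the counter never overflows
    }
    return streak_ >= required_;
  }

 private:
  int required_;
  int streak_ = 0;
};
```

On the board, `laserTripped(analogRead(ldrPin))` would be called inside `loop()`, where `ldrPin` is a placeholder for whichever analog pin the LDR divider is connected to.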

We first tested it by aiming the laser directly at the LDR to make sure the program did what we wanted (it did), and then set it up again with the mirror. The speaker would play an RTTTL (Ring Tone Text Transfer Language) tune, since that format occupied the least memory. Our professor showed us a webpage with a list of tunes and the code to make the Arduino play them, which we used. The list included tunes such as the Star Wars and Top Gun theme songs, which did not resemble alarms (they were all snippets from popular movie theme songs), so we picked the first 5 notes from “The Good, the Bad and the Ugly”, which resembled an alarm the most. We tested the full ‘tripwire’ assembly with the mirror, again at full distance, and got the results we wanted, so I started working on the traffic light while Jackson made some final adjustments.
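An RTTTL tune is a header such as `name:d=4,o=5,b=63:` (default duration, default octave, tempo in beats per minute) followed by comma-separated notes like `8a#5.`. The sketch below shows how one such note could be turned into a frequency and a length in milliseconds, which is what the Arduino’s `tone()` function needs. It is not the code from the webpage our professor shared; the type and function names are hypothetical, and it assumes equal temperament with A4 = 440 Hz and that one beat is a quarter note.

```cpp
#include <cassert>
#include <cctype>
#include <cmath>
#include <cstddef>
#include <string>

// Hypothetical result type: what tone() would need for one note.
struct RtttlNote {
  double frequencyHz;  // 0.0 means a pause ("p")
  int durationMs;
};

// Semitones above C for each RTTTL note letter; -1 for a pause.
int semitoneFromC(char letter) {
  switch (letter) {
    case 'c': return 0;
    case 'd': return 2;
    case 'e': return 4;
    case 'f': return 5;
    case 'g': return 7;
    case 'a': return 9;
    case 'b':
    case 'h': return 11;  // some tunes write B as "h"
    default:  return -1;  // "p" (pause)
  }
}

bool isDigitAt(const std::string& s, std::size_t i) {
  return i < s.size() && std::isdigit(static_cast<unsigned char>(s[i]));
}

// Parses one note token such as "8a#5." using the defaults from the tune's
// header (d=, o=, b=). Accepts the dot before or after the octave digit.
RtttlNote parseRtttlNote(const std::string& token, int defDuration,
                         int defOctave, int bpm) {
  assert(defDuration > 0 && bpm > 0);
  std::size_t i = 0;

  int duration = 0;  // optional leading duration: 1, 2, 4, 8, 16, 32
  while (isDigitAt(token, i)) duration = duration * 10 + (token[i++] - '0');
  if (duration == 0) duration = defDuration;

  assert(i < token.size());  // a note letter must follow
  char letter = static_cast<char>(
      std::tolower(static_cast<unsigned char>(token[i++])));
  int semitone = semitoneFromC(letter);

  if (i < token.size() && token[i] == '#') {
    if (semitone >= 0) ++semitone;  // sharpen, but never a pause
    ++i;
  }

  bool dotted = false;
  if (i < token.size() && token[i] == '.') { dotted = true; ++i; }

  int octave = defOctave;
  if (isDigitAt(token, i)) octave = token[i++] - '0';

  if (i < token.size() && token[i] == '.') dotted = true;

  // One beat is a quarter note, so a whole note lasts 4 * 60000 / bpm ms.
  double ms = (4.0 * 60000.0 / bpm) / duration;
  if (dotted) ms *= 1.5;  // a dotted note lasts half as long again

  double freq = 0.0;
  if (semitone >= 0) {
    int midi = 12 * (octave + 1) + semitone;  // MIDI note 69 is A4
    freq = 440.0 * std::pow(2.0, (midi - 69) / 12.0);
  }
  return {freq, static_cast<int>(ms)};
}
```

On the board, each parsed note could be passed to `tone(speakerPin, frequency, duration)`, with `speakerPin` standing in for the pin the speaker is attached to.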

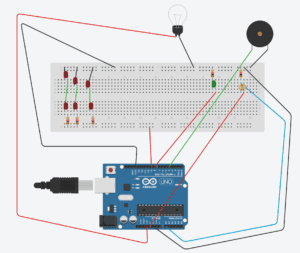

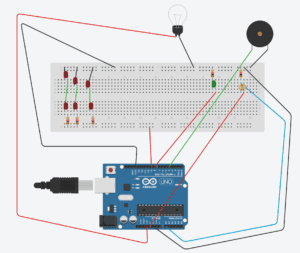

After we finished the tripwire, I started working on the lights. Before that, however, I worked with Leon (an IMA fellow) to design and laser-cut the box that would house our project. It had two large round holes on one side, which we covered with paper to diffuse the light from the LEDs so it looked like a real traffic light; two smaller holes on the side where the laser and LDR would sit; and a USB-sized hole in the back to power the Arduino and connect it to our computer so we could tweak, edit and improve the code in real time.

We decided not to use a yellow light, so we used only red (on top) and green (on the bottom). We first thought about using one LED per color, since we expected the light to be diffused, but it was too dim, so I experimented with 6 LEDs connected in parallel to the same power source, which did the trick. However, Nick (an IMA fellow) warned me that this could draw more current than the Arduino can supply, and recommended that I arrange the LEDs in 3 groups of two LEDs connected in series, each group with a 220 Ohm resistor, all connected to the same power source and ground. With this arrangement, the light was bright enough to be visible when diffused. I tried to do the same with the green light; however, the green LED by itself was too dim, so Tristan (an IMA fellow) gave us a larger, brighter green LED (we had been using 5 mm LEDs up to this point, and kept doing so for the red light), which did the trick when wired like a regular single LED.

I then modified the “Blink” example in the Arduino IDE to alternate the two lights, as a traffic light would. Once the circuit and program were ready for both parts of the project (the tripwire and the traffic light), we moved everything onto one large breadboard and a single Arduino, trying our best to avoid wire spaghetti.

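
As a rough check of Nick’s advice, here is a worked estimate of the current drawn by the red light. The numbers are assumptions, not measurements: a 5 V supply from an Arduino digital pin and a typical red-LED forward voltage $V_f$ of about 2.0 V (real parts range roughly from 1.8 to 2.2 V). With two LEDs in series per branch and three branches:

$$
I_{\text{branch}} = \frac{V_s - 2V_f}{R} \approx \frac{5\,\text{V} - 2(2.0\,\text{V})}{220\,\Omega} \approx 4.5\,\text{mA},
\qquad
I_{\text{total}} = 3\,I_{\text{branch}} \approx 13.6\,\text{mA}
$$

For comparison, six red LEDs in parallel, each with its own 220 Ω resistor (a hypothetical wiring, since the first version is not described in detail), would draw

$$
I_{\text{total}} = 6 \cdot \frac{5\,\text{V} - 2.0\,\text{V}}{220\,\Omega} \approx 6 \times 13.6\,\text{mA} \approx 82\,\text{mA},
$$

well above the roughly 20 mA usually recommended per pin on an Arduino Uno (the ATmega328P’s absolute maximum is 40 mA per I/O pin). The series estimate is sensitive to $V_f$: at 2.2 V per LED, each branch drops to about 2.7 mA.
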
The final circuit looked like this (I couldn’t find a laser or speaker icon on Tinkercad, so the laser is represented by a lightbulb and the speaker by a piezo):

And the box, prior to being assembled, looked like this:

We thought the best way to combine the two programs would be to add an if statement below the function that turned on the red light and paste Jackson’s code beneath it. However, it wasn’t that simple: the speaker wouldn’t sound when we tripped the laser. Tristan told us to use the millis() function (storing the times in long variables) instead of delay(), since delay() stops any other code from running, and to use a while loop instead of an if statement, so that the Arduino kept track of when to start watching for the laser being tripped. After we finished the code, we fit all the components into the box and hot-glued all but three sides and the top, so that we could make adjustments if necessary during user testing. This is how the device ended up looking:
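A likely reason the first if statement failed is that delay() blocks: while the red light waits, nothing else runs, so the laser is barely checked. The sketch below illustrates the millis() idea in plain C++; the current time is passed in instead of read from `millis()`, so it can run off the board. It is a polled state machine rather than the while loop we actually used, and the phase lengths are constructor parameters because the real timings are not recorded here.

```cpp
#include <cassert>

enum class Light { Red, Green };

// Non-blocking traffic light: instead of delay(), it remembers when the
// current phase started (as millis() would report it) and compares the
// elapsed time on every call, so the tripwire can be checked continuously.
class TrafficLight {
 public:
  TrafficLight(unsigned long redMs, unsigned long greenMs, unsigned long nowMs)
      : redMs_(redMs), greenMs_(greenMs), phaseStart_(nowMs) {
    assert(redMs > 0 && greenMs > 0);
  }

  // Call once per loop() iteration with the current time and whether the
  // laser is interrupted. Returns true if the alarm should sound.
  bool update(unsigned long nowMs, bool laserTripped) {
    // Unsigned subtraction stays correct when millis() wraps around.
    unsigned long elapsed = nowMs - phaseStart_;
    unsigned long phaseLength = (light_ == Light::Red) ? redMs_ : greenMs_;
    if (elapsed >= phaseLength) {
      light_ = (light_ == Light::Red) ? Light::Green : Light::Red;
      phaseStart_ = nowMs;
    }
    // Only crossing on red triggers the alarm.
    return light_ == Light::Red && laserTripped;
  }

  Light light() const { return light_; }

 private:
  unsigned long redMs_;
  unsigned long greenMs_;
  unsigned long phaseStart_;
  Light light_ = Light::Red;
};
```

In an Arduino sketch, `loop()` could call `light.update(millis(), analogRead(ldrPin) > threshold)`, set the LED pins with `digitalWrite()` according to `light.light()`, and start the alarm with `tone()` when `update()` returns true; `ldrPin` and `threshold` are placeholders. Storing timestamps as `unsigned long` and subtracting them keeps the timing correct when `millis()` wraps around after about 49.7 days.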

For user testing, we were first given a table where we couldn’t set up the mirror on the other side of a walkway, so we moved to another table where we could, although this time the distance was much longer (I estimate between 2 and 3 meters), so Jackson needed more time to aim the laser at the LDR. During testing, we realized we had to mark a walkway and the direction we wanted users to walk so that they would understand our aim (this would not be needed in a real-life setting: a pedestrian stoplight is always on the other side of the street, so the user walks towards it rather than past it). Users told us that they couldn’t see the laser and should be able to, so that they know not to cross; they said the same about the traffic light, which was only visible from one side. However, the point was that users follow the rules of traffic lights (red is stop and green is go), and that those who do not (i.e. those who attempt to cross on a red light) are deterred by the alarm coming from the speaker. We achieved this, as many of the users who tried to cross on a red light asked what was going on when they heard the alarm.

In the group project, I defined interaction as the exchange of information (input and output) between a human, animal or machine and one or more other humans, animals or machines. Through its lights, the device we made gives information to the user, telling them whether or not it is safe to cross, and the user gives the device information through the action of crossing or not. If the user trips the laser (crosses the road on a red light), the LDR receives an input telling the device that someone is attempting to cross, and the device sounds an alarm as output, which should stop the user from crossing and make them step back. The aim of our project was to show that there are ways to reduce the number of traffic accidents caused by jaywalkers, something that has already been attempted here in China, but also that drastic measures, such as shaming jaywalkers by showing their photos on a screen or spraying them with water, are not necessary: a simple alarm will do, and I believe we accomplished this objective. The suggestions from user testing are hard to implement on our device because we designed it as the prototype of a concept. With more space and resources, we would build it like a proper traffic light, place the sensors on the other side of the street, and use more explicit deterrents, such as playing a “do not cross!” recording instead of an alarm. If implemented well, this could drastically reduce the accidents in which jaywalkers are at fault, partly because the device that shows pedestrians whether it is time to cross now also interacts with them, warning them that they are at risk if they cross on a red light. This gives the pedestrian traffic light a more active role in the pedestrian’s experience and could make the streets safer for cars and pedestrians alike.