Interaction can most simply be thought of as the way in which things work together. It can be seen between humans, between humans and machines, between humans and animals, and between machines. As Chris Crawford might say, interaction occurs on a spectrum of complexity (6). In my opinion, anything that involves actions from two different parties can be considered an interaction. However, this definition can be narrowed to an interaction that involves a response or an inference on the part of the other party. For an interaction to rank "higher" on my idea of the interaction spectrum, the response given by the other actor must be related to the input and must involve some sort of inference. In Crawford's example of a refrigerator light turning on, the refrigerator makes very little inference: when the door opens, the light turns on, and when the door closes, the light turns off.

One of the projects I researched was Daniel Rozin's mechanical mirrors. I feel that his mirrors fit my definition of interaction because an inference must be made from what the sensors read to what the mirror outputs. If something in front of the mirror moves a certain way, the mirror must reflect that movement.

As for projects that do not fit my definition of interaction, I would point to Yayoi Kusama's Infinity Room installations. While her art is definitely stunning, I feel that the interaction with it is one-sided. When viewing these installations, you either walk inside or stick your head in. The only response you get is the reflection produced by the mirrors. The visual effect is striking, but the installation itself makes no interpretation. Unlike more traditional pieces of art, these installations require someone to take an action in order to be experienced, but they do nothing in response. Therefore, I would not consider these installations "interactive," although others may.

As with many new technologies and with code, I feel that interaction often involves a sort of inference made by the other actor. When interacting with something like our project, you, as the main actor, would select what you want to happen, and then the machine (in this case, the Dream Catcher) would infer what it thinks you want and give a result. This type of interaction is also what happens when we converse with one another, and conversation is the phenomenon I would use to best describe interaction.

As for other interactive projects, I feel that Daniel Rozin's mirrors partly fit this definition. The main actor is the person or people standing in front of the mirror, and the "mirror," as the second actor, reacts to what it takes in as input and makes an inference to produce a desired output. While his mirrors do not directly "converse" with the actors, they can still be considered interactive because they produce a response to the user.

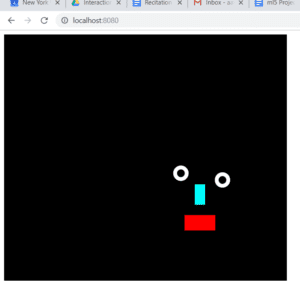

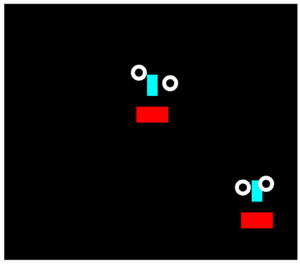

For the project our group made, we wanted to create something with a more complex mode of interaction. We were also interested in how future devices might bring an emotional side to interaction. Therefore, we thought about creating a device that was highly personalized. Because dreams are largely subconscious, we thought they would be a very interesting thing for a device to interact with. In our project, the interaction is mostly not physical. The Dream Catcher works mainly with what goes on in your mind; the only physical interaction you have is selecting what you want the notebook to do with your dream. However, I feel that this type of physical interaction does not necessarily match what I consider interaction to be. While clicking or selecting something from a menu may be viewed as an interaction, I think the main interaction happens when the notebook interprets your dreams.

I feel that this group project fits my definition of interaction because it is not a one-way channel. The user gives the notebook a dream as input, and the notebook interprets the dream and offers the user a variety of choices in return. Then, after the user selects a choice, the notebook executes an action directly related to that choice. Because of this back-and-forth flow of action and response, the notebook goes beyond a basic level of interaction and therefore fits my proposed definition.

Sources used:

Chris Crawford, *The Art of Interactive Design* (pp. 1-6)

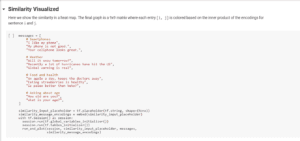

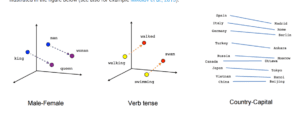

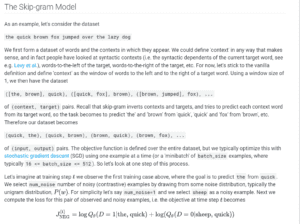

(just one example of how words can be related)

(another way to highlight how data sets can be formed)