For this assignment, the main decision was which hyperparameters to tweak and experiment with. Since I’m still unsure what `num_classes` is (and am still reading up on it), I decided to vary only `batch_size` and `epochs`. I fixed the number of epochs at 3 and doubled the batch size on each run, from 16 all the way up to 2048.

I chose three epochs because that let me get results in a relatively short time. Three epochs are nowhere near enough to train the model well, but since I’m measuring how the loss changes with the batch size rather than trying to reach the best possible accuracy, the trend matters more than the absolute numbers.
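The experiment boils down to a loop that holds `epochs` fixed and changes only `batch_size`. The sketch below assumes a Keras-style workflow; `train_and_evaluate` and `fake_train` are hypothetical stand-ins, not functions from this assignment. A real `train_and_evaluate` would build and compile a new model on every call so that weights never carry over from one batch size to the next.

```python
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical signature: train_and_evaluate(batch_size, epochs) builds a FRESH
# model, trains it, and returns (test_loss, test_accuracy).
TrainFn = Callable[[int, int], Tuple[float, float]]


def batch_size_sweep(
    train_and_evaluate: TrainFn,
    batch_sizes: Sequence[int] = (16, 32, 64, 128, 256, 512, 1024, 2048),
    epochs: int = 3,
) -> Dict[int, Tuple[float, float]]:
    """Run one training job per batch size with the epoch count held fixed."""
    results: Dict[int, Tuple[float, float]] = {}
    for batch_size in batch_sizes:
        # Only batch_size changes between runs; epochs stays constant so the
        # comparison isolates the effect of the batch size.
        results[batch_size] = train_and_evaluate(batch_size, epochs)
    return results


if __name__ == "__main__":
    # Stand-in training function for demonstration only; a real one would
    # compile a new model and call model.fit(..., batch_size=..., epochs=...).
    def fake_train(batch_size: int, epochs: int) -> Tuple[float, float]:
        return 1.0 + batch_size / 4096, 0.6 - batch_size / 8192

    for bs, (loss, acc) in batch_size_sweep(fake_train).items():
        print(f"batch_size={bs:5d}  loss={loss:.4f}  acc={acc:.4f}")
```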

The results have been summarised below.

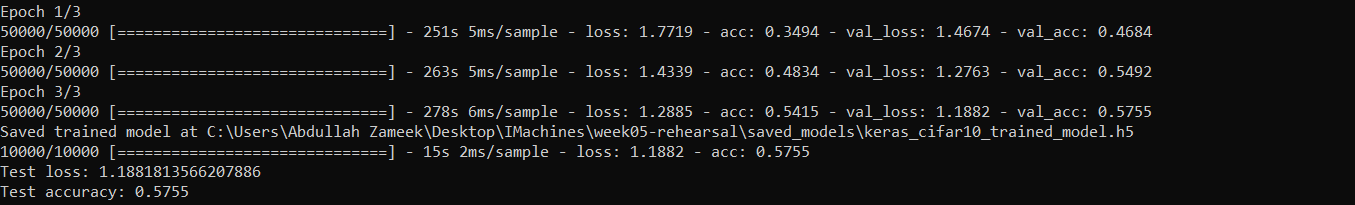

Batch Size of 16

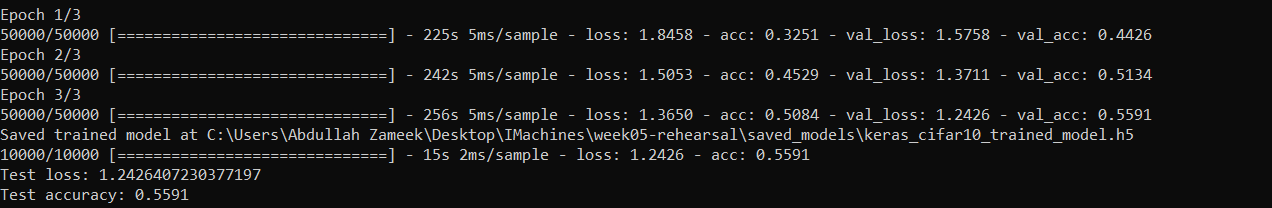

Batch Size of 32

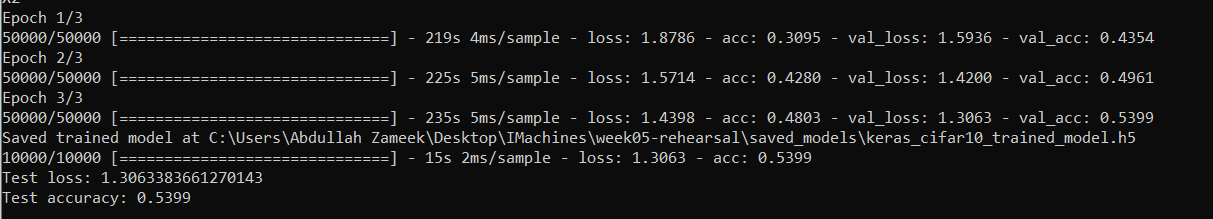

Batch Size of 64

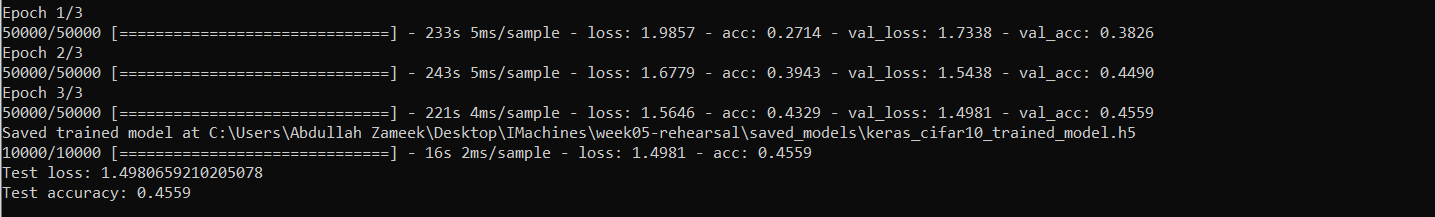

Batch Size of 128

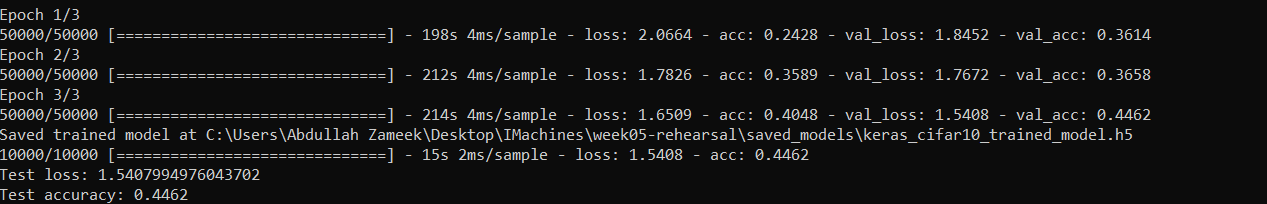

Batch Size of 256

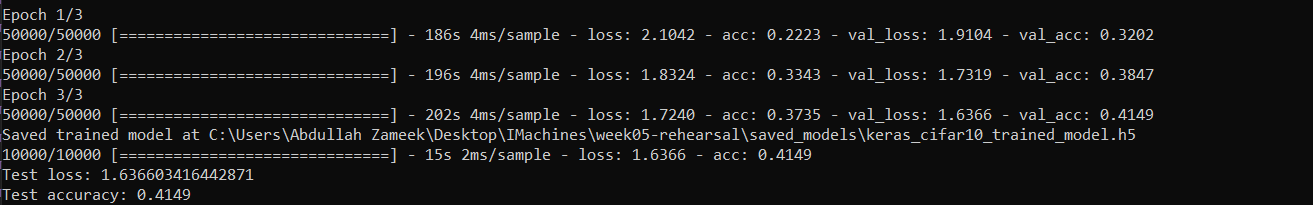

Batch Size of 512

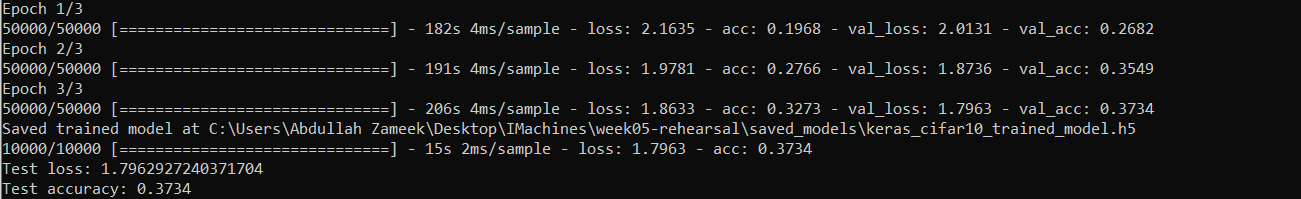

Batch Size of 1024

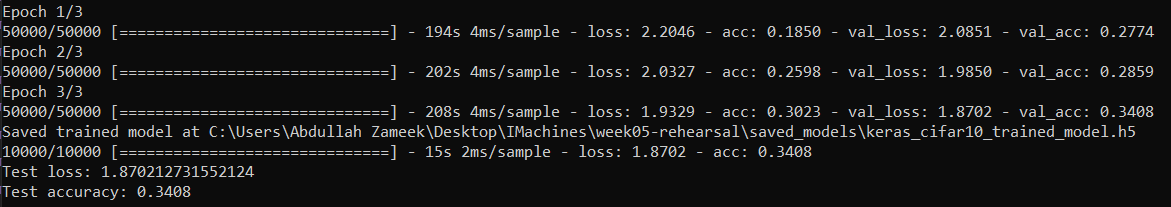

Batch Size of 2048

| Batch Size | Test Accuracy | Test Loss |
|---:|---:|---:|
| 16 | 0.5755 | 1.1882 |
| 32 | 0.5591 | 1.2426 |
| 64 | 0.5399 | 1.3063 |
| 128 | 0.4559 | 1.4981 |
| 256 | 0.4462 | 1.5408 |
| 512 | 0.4149 | 1.6366 |
| 1024 | 0.3734 | 1.7963 |
| 2048 | 0.3408 | 1.8702 |

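As the table above shows, a batch size of 16 gives the highest test accuracy (0.5755) and the lowest test loss (1.1882), with 32 a close second (0.5591 and 1.2426). Since 32 is only marginally worse and needs half as many weight updates per epoch, I treated 32 as the practical optimum.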
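Ranking the rows of the table above programmatically makes the comparison explicit. This is a minimal sketch: the dictionary simply copies the reported numbers, and `best_by_accuracy` / `best_by_loss` are illustrative helper names.

```python
# Test-set results copied from the table: batch_size -> (accuracy, loss)
RESULTS = {
    16: (0.5755, 1.1882),
    32: (0.5591, 1.2426),
    64: (0.5399, 1.3063),
    128: (0.4559, 1.4981),
    256: (0.4462, 1.5408),
    512: (0.4149, 1.6366),
    1024: (0.3734, 1.7963),
    2048: (0.3408, 1.8702),
}


def best_by_accuracy(results):
    """Batch size with the highest test accuracy."""
    return max(results, key=lambda bs: results[bs][0])


def best_by_loss(results):
    """Batch size with the lowest test loss."""
    return min(results, key=lambda bs: results[bs][1])


if __name__ == "__main__":
    print(best_by_accuracy(RESULTS))  # → 16
    print(best_by_loss(RESULTS))      # → 16
```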

I’m still not sure of the full technical rationale for why test accuracy drops as the batch size increases, but I think the main reason is the number of weight updates. One epoch is always one full pass over the training set, whatever the batch size, so a larger batch does not mean more data is seen; it means the same data is split into fewer, larger batches. Since the weights are updated once per batch, a batch size of 2048 makes roughly 128 times fewer updates per epoch than a batch size of 16. With only three epochs, the large-batch runs simply don’t get enough updates to learn much, whereas smaller batches such as 16 or 32 take many more (if noisier) steps in the same number of epochs.
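The update-count argument can be checked on a toy problem. The sketch below is not the assignment’s CNN: it assumes a synthetic linear-regression task (4,096 samples, 5 features) trained with plain mini-batch SGD at a fixed learning rate, and `minibatch_sgd_loss` is a hypothetical helper. With the epoch count fixed at 3, a batch size of 16 makes 768 updates while a batch size of 2048 makes only 6, and the final training error is correspondingly much higher for the large batch.

```python
import numpy as np


def minibatch_sgd_loss(batch_size, epochs=3, n_samples=4096, lr=0.01, seed=0):
    """Fit a toy linear-regression model with mini-batch SGD.

    Returns (final mean-squared error on the training set, number of updates).
    """
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n_samples, 5))
    true_w = rng.standard_normal(5)
    y = X @ true_w + 0.1 * rng.standard_normal(n_samples)

    w = np.zeros(5)
    updates = 0
    for _ in range(epochs):
        order = rng.permutation(n_samples)  # every epoch visits ALL samples
        for start in range(0, n_samples, batch_size):
            idx = order[start:start + batch_size]
            # Gradient of the mean-squared error over this mini-batch only
            grad = 2.0 * X[idx].T @ (X[idx] @ w - y[idx]) / len(idx)
            w -= lr * grad
            updates += 1  # one weight update per mini-batch
    mse = float(np.mean((X @ w - y) ** 2))
    return mse, updates


if __name__ == "__main__":
    for bs in (16, 2048):
        mse, updates = minibatch_sgd_loss(bs)
        print(f"batch_size={bs:5d}  updates={updates:4d}  mse={mse:.4f}")
```

The learning rate is deliberately held constant to mirror the experiment, where only the batch size changed; in practice, larger batches are often paired with a larger learning rate, which narrows the gap.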

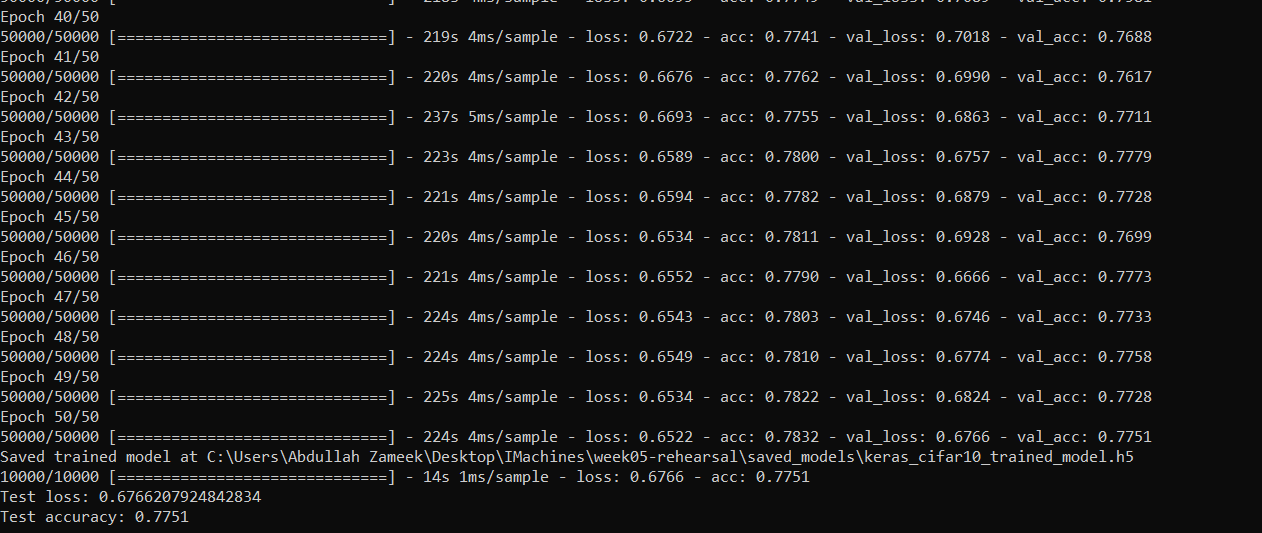

I then increased the number of epochs to 50 for a batch size of 32 and let it run overnight. I got the following result.

The reported test loss was 0.67667 and the test accuracy was 0.7751. Great!

This is a big improvement over the 3-epoch run at the same batch size (test accuracy 0.5591, test loss 1.2426). My guess is that accuracy would keep climbing with more epochs, possibly to around 0.95 or higher after 300 epochs or so, but that is something I would like to test once I know how to use the Intel Server.
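The same arithmetic accounts for much of the jump from 3 to 50 epochs: at a fixed batch size, the number of weight updates grows linearly with the epoch count. The sketch assumes a training set of 50,000 images (the size of the CIFAR-10 training split); the dataset size is not stated in this write-up, so `N_TRAIN` is a placeholder to replace with the real value, and `total_updates` is a hypothetical helper. It also assumes the final, smaller batch of each epoch still counts as one update.

```python
import math


def total_updates(n_train: int, batch_size: int, epochs: int) -> int:
    """Number of weight updates: one per mini-batch, every epoch."""
    # ceil: a final partial batch still triggers one update per epoch
    return math.ceil(n_train / batch_size) * epochs


N_TRAIN = 50_000  # ASSUMPTION: training-set size (e.g. CIFAR-10), not given in the write-up

print(total_updates(N_TRAIN, 32, 3))   # 4689
print(total_updates(N_TRAIN, 32, 50))  # 78150
```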

When attempting the Data Augmentation part, I got the following error straight off the bat: