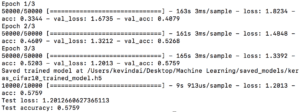

To train the CIFAR-10 CNN, I experimented with the batch size and the number of epochs, starting with a batch size of 32 and 3 epochs. This gave a test accuracy of 0.58 and a loss of 1.2.

Because I am still relatively new to machine learning, the effects of the number of epochs and the batch size are quite unfamiliar to me, so I wanted to see what would happen if I changed them drastically. First, I increased the number of epochs to 10 and kept the batch size at 32.

I ended up with a test accuracy of 0.68 and a loss of 0.9. More than tripling the number of epochs while keeping the batch size the same therefore raised accuracy by about 10 percentage points and lowered the loss: a noticeable improvement, though not a dramatic one.
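To make the two terms concrete, here is a minimal, framework-free sketch of how a mini-batch training loop walks through the data. The function name `iterate_minibatches` and the toy sizes are hypothetical. Libraries such as Keras handle this batching internally when you pass `batch_size` and `epochs`, and the sketch shows only the batching, not the model update itself.

```python
import random


def iterate_minibatches(num_examples, batch_size, epochs, seed=0):
    """Yield (epoch, batch_indices) pairs the way a typical mini-batch loop does.

    Each epoch is one full pass over all `num_examples` training examples,
    visited in a freshly shuffled order. Each batch holds at most
    `batch_size` indices; the last batch of an epoch may be smaller.
    """
    rng = random.Random(seed)
    indices = list(range(num_examples))
    for epoch in range(epochs):
        rng.shuffle(indices)  # new visiting order every epoch
        for start in range(0, num_examples, batch_size):
            # In real training, one weight update is computed from this batch.
            yield epoch, indices[start:start + batch_size]


# Toy example: 10 examples, batch size 4, 2 epochs.
for epoch, batch in iterate_minibatches(10, batch_size=4, epochs=2):
    print(epoch, len(batch))
```

Each epoch here produces batches of 4, 4 and 2 examples. Going from 3 to 10 epochs simply repeats the outer loop more times, so the model sees every image more often and receives proportionally more weight updates.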

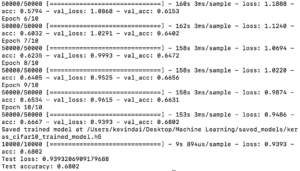

Next, I changed the number of epochs back to 3 and increased the batch size to 2048.

As you can see, the test loss rose to about 1.95, while the accuracy dropped to 0.32. Running a very large batch size for only a few epochs clearly hurt accuracy. I think this is because an epoch is one full pass over the training set, while the batch size is the number of training examples used for each weight update. With CIFAR-10's 50,000 training images, a batch size of 2048 gives only about 25 updates per epoch, compared with roughly 1,560 at a batch size of 32, so in three epochs the model likely had far too few updates to learn much.
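A quick back-of-the-envelope check supports this explanation. The sketch below counts the gradient updates in each of the four runs in this post, assuming all 50,000 CIFAR-10 training images are used for training with no validation split held out. The helper `total_updates` is hypothetical, and it counts updates only; it does not model any other effect of changing the batch size.

```python
import math

# Assumption: all 50,000 CIFAR-10 training images are used for training
# (no validation split held out). A validation split would lower these counts.
NUM_TRAIN = 50_000


def total_updates(batch_size, epochs, num_train=NUM_TRAIN):
    """Number of gradient updates: batches per epoch times epochs."""
    steps_per_epoch = math.ceil(num_train / batch_size)  # a final partial batch still counts
    return steps_per_epoch * epochs


# (batch_size, epochs) for the four runs described in this post.
runs = [(32, 3), (32, 10), (2048, 3), (32, 20)]
for batch_size, epochs in runs:
    print(f"batch={batch_size:>4}, epochs={epochs:>2}: {total_updates(batch_size, epochs)} updates")
```

Under that assumption it prints 4689, 15630, 75 and 31260 updates for the four runs, which lines up with the ordering of the accuracies observed.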

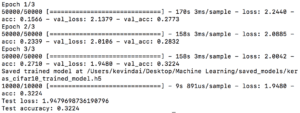

Lastly, I ran it with 20 epochs and a batch size of 32.


This was probably the most stable run of the tests: the accuracy peaked at around 0.75, while the loss went down to 0.74. Together with the earlier runs, this suggests that for this data set a small batch size (32) trained for more epochs works best, likely because that combination gives the model the most weight updates of any configuration I tried.