Project Name: Namaste

Description

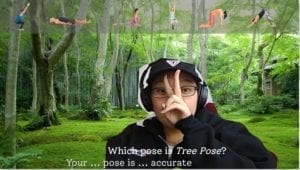

For my final project, I wanted to create an interactive yoga teaching application for people unfamiliar with yoga. Users were shown 6 images of yoga poses and had to guess, and then perform, the pose that matched the background on the screen and the pose name at the bottom. The available poses were cobra, tree, chair, corpse, downward dog, and cat.

The goal of my project was not only to get users to draw the connection between each yoga pose name and the animal or object it was named after, but also to make them feel immersed in the natural environment where these animals and objects are typically found.

Inspiration

I was thinking about games that involve body movement and positions, and remembered one of my favorite childhood games, WarioWare Smooth Moves. The moments that stuck with me the most were the pose imitation levels. The point of the game was to complete simple tasks within 3 seconds, so it easily became stressful and fast-paced. The random “Let’s Pose” breaks, where players simply had to follow the pose on the screen, felt peaceful by comparison. That is where the idea of having people do yoga poses came from.

As I was researching which poses I could incorporate into my project, I found it interesting that all the poses were named after objects, animals, etc. As I looked into it more, I found that one of yoga’s main principles is to really embrace nature and the environment you’re in. This, together with how much I had enjoyed the immersive environment of my midterm project, made me want users to feel immersed in the environment and truly understand the context from which these poses got their names.

Work Process

Beginning my project was rather simple because it was similar to a previous homework assignment in which I used poseNet to change the font size based on how close your face was to the screen. I quickly got images to appear on the screen, but after that I had to consult Professor Moon frequently to smooth out the details and make the overall project more polished. After getting the images to appear, I worked on making them appear more smoothly, which is addressed later in this documentation. Next, I worked on changing the background. I put the background images into an array and picked one at random once KNN reported full confidence in the pose that matched the current background. This was coded with an if/else statement. The next step was implementing bodyPix so that the user was shown on top of the background image, with none of their real-life surroundings visible.
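As a rough illustration of the background-change step described above, the sketch below swaps to a random new background inside an if/else once the classified pose matches the current one with full confidence. All names here (`pickNextIndex`, `updateBackground`, the `state` object) are hypothetical, and choosing a background different from the current one is an added assumption rather than something the project is known to do.

```javascript
// Pose labels taken from the project description; each label stands in
// for one background image in the array.
const labels = ["cobra", "tree", "chair", "corpse", "downward dog", "cat"];

// Pick a random index that differs from the current one (assumption:
// repeating the same background would look like nothing happened).
function pickNextIndex(count, current, random = Math.random) {
  if (count < 2) return current;
  // Choose one of the other count - 1 slots, then step past `current`.
  const offset = 1 + Math.floor(random() * (count - 1));
  return (current + offset) % count;
}

// Change the background only when the classified pose matches the
// current background with full confidence; otherwise keep the state.
function updateBackground(state, label, confidence, random = Math.random) {
  if (label === state.labels[state.index] && confidence >= 1) {
    const next = pickNextIndex(state.labels.length, state.index, random);
    return { ...state, index: next };
  } else {
    return state;
  }
}
```

In a p5 sketch, `updateBackground` would presumably be called from the classification callback, with the returned index selecting which background image to show.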

With those 3 elements, the core functions of my project were done. The last main step was to create the actual database of yoga poses. Until then I had been relying mostly on face positions, since it would have been a waste of time to constantly do yoga just to test my project. Because KNN requires a button click to add examples to the database, I asked friends to press the button for me. Once the database was built, I had my friends test it and realized that I needed to add many more examples, including less accurate positions, since not everyone was physically able to follow the poses precisely.
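To see why adding more, and looser, examples helps, it can be useful to look at how a k-nearest-neighbors classifier arrives at a confidence. The sketch below is a simplified stand-in, not the library the project used: `addExample`, `classify`, and the plain number arrays used as features are all hypothetical. The confidence for a label is taken to be the share of the k closest stored examples that carry that label.

```javascript
// Store one labeled example (in the project, this happened on a button click).
function addExample(dataset, features, label) {
  dataset.push({ features, label });
}

// Squared Euclidean distance between two equal-length feature arrays.
function squaredDistance(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) sum += (a[i] - b[i]) ** 2;
  return sum;
}

// Vote among the k nearest stored examples.
function classify(dataset, features, k = 3) {
  const nearest = dataset
    .map((ex) => ({ label: ex.label, dist: squaredDistance(ex.features, features) }))
    .sort((a, b) => a.dist - b.dist)
    .slice(0, k);
  if (nearest.length === 0) return { label: null, confidences: {} };

  const votes = {};
  for (const n of nearest) votes[n.label] = (votes[n.label] || 0) + 1;

  const confidences = {};
  let best = null;
  for (const label of Object.keys(votes)) {
    confidences[label] = votes[label] / nearest.length;
    if (best === null || votes[label] > votes[best]) best = label;
  }
  return { label: best, confidences };
}
```

Under this scheme a confidence of 1 means every one of the k nearest examples agrees, so a user whose pose is slightly off only reaches full confidence if similarly imperfect examples exist in the database.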

After our project presentations, I asked Professor Moon for feedback and got help on how to make the background changes smoother. With the background transition improved, I was able to add a progress bar to show users how long to hold their poses.

In terms of functionality, my project didn’t change much. I originally planned to add pix2pix, but after researching what it does, I realized it didn’t fit what I wanted for my project at all. I also originally wanted to add sound classification to trigger image captures for my database, since pressing the mouse is impossible while doing yoga. However, Professor Moon told me that sound classification is not very accurate, and even if it had been, I wouldn’t have had time to add it. One of the struggles of my project was getting someone to press the mouse button while I did the poses for the database, so in the future I would look for another way to create the database.

Technical Aspects

- BodyPix

- KNN Classification

- HTML, CSS, p5

A good portion of my project simply used CSS opacity styling and p5 to change the styles of my HTML elements, with the information collected by KNN Classification and the database triggering these styling changes. The pose images appeared on the screen based on the confidence with which KNN classified the user’s pose. If the confidence in a pose was 1 (100%), the image’s opacity was set to full; otherwise it stayed at its default of 0. It was easy to get the images to appear, but they would simply flash on and off the screen, which made the experience unpleasant.

I consulted Professor Moon on how to fix this glitchy appearance, and he taught me about the lerp function. It uses linear interpolation to calculate a number between two values you give it, at a fraction of the distance that you specify. Applied every frame, it made the opacity increase or decrease gradually rather than jump straight from 0 to 1 and vice versa.

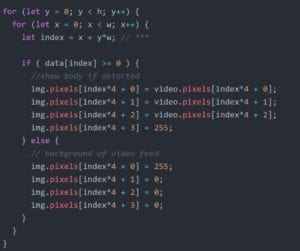
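The fade described above can be sketched in a few lines. This is an illustration under assumptions, not the project's code: the local `lerp` uses the same formula as p5's `lerp(start, stop, amt)` so the example runs without p5, and `stepOpacity` and the `amt` value of 0.1 are hypothetical.

```javascript
// Linear interpolation: move from `start` toward `stop` by the fraction `amt`.
function lerp(start, stop, amt) {
  return start + (stop - start) * amt;
}

// Called once per frame: ease the image opacity toward 1 when the pose is
// classified with full confidence, and back toward 0 otherwise.
function stepOpacity(currentOpacity, confidence, amt = 0.1) {
  const target = confidence >= 1 ? 1 : 0;
  return lerp(currentOpacity, target, amt);
}

// Simulate a few frames of a confidently held pose.
let opacity = 0;
const frames = [];
for (let i = 0; i < 5; i++) {
  opacity = stepOpacity(opacity, 1);
  frames.push(opacity);
}
// `frames` now rises smoothly toward 1 instead of jumping there in one step.
```

Because each frame covers a fixed fraction of the remaining distance, the change is fast at first and slows as it nears the target, which is what removes the flashing. In a p5 sketch the resulting value would then be written to the image element's CSS opacity.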

My project also has an almost “green-screen” effect: the background is an image of an environment, and only the user appears on top of it. This makes the experience feel much more immersive, as if you are part of the image. This was achieved through bodyPix. At first I had difficulty separating the live camera feed from what was displayed on the screen. The solution was that wherever bodyPix detected a body, the pixel information from the live feed was redrawn on the canvas, and anything that wasn’t detected as a body was given an opacity of 0.

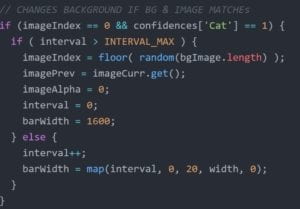
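The masking step can be illustrated independently of bodyPix. The sketch below assumes the segmentation has already been reduced to one value per pixel, where a non-zero value means "body"; the exact output format of bodyPix depends on the library version, so `personMask` and `maskToPerson` are hypothetical names. Pixels are stored as RGBA, four values per pixel, as in a canvas pixel array.

```javascript
// Copy camera pixels that belong to the person; make everything else
// fully transparent so the background image shows through.
function maskToPerson(framePixels, personMask) {
  const out = new Uint8ClampedArray(framePixels.length);
  for (let i = 0; i < personMask.length; i++) {
    const p = i * 4; // start of this pixel's R, G, B, A values
    out[p] = framePixels[p];
    out[p + 1] = framePixels[p + 1];
    out[p + 2] = framePixels[p + 2];
    // Keep the original alpha for body pixels, 0 for everything else.
    out[p + 3] = personMask[i] ? framePixels[p + 3] : 0;
  }
  return out;
}

// Two-pixel example: the first pixel is body, the second is not.
const frame = new Uint8ClampedArray([200, 150, 100, 255, 10, 20, 30, 255]);
const masked = maskToPerson(frame, [1, 0]);
```

Running a loop like this over every pixel of every frame is fairly heavy work, which is one plausible contributor to lag in this kind of effect.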

The final major obstacle of my project was to get the background to change gradually and as smoothly as the yoga poses themselves. I was a bit lost on what would be the best method to fix this issue and Professor Moon suggested that I take the current background image’s opacity and make it decrease as the next background image fades in. The code below shows a bit of that process.
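As a minimal sketch of the crossfade idea (an illustration under assumptions, not the project's original code), the function below lowers the current background's opacity while raising the next one's by the same amount each frame. The name `crossfadeStep` and the step size are hypothetical.

```javascript
// One frame of a crossfade: the current background fades out while the
// next one fades in. Opacities are clamped to the range 0..1.
function crossfadeStep(currentOpacity, nextOpacity, step = 0.05) {
  const current = Math.max(0, currentOpacity - step);
  const next = Math.min(1, nextOpacity + step);
  return { current, next, done: current === 0 && next === 1 };
}

// Run a full transition and count how many frames it takes.
function runCrossfade(step) {
  let state = { current: 1, next: 0, done: false };
  let frames = 0;
  while (!state.done) {
    state = crossfadeStep(state.current, state.next, step);
    frames++;
  }
  return { frames, state };
}
```

Once `done` is true, the next background can become the current one and its opacity bookkeeping can be reset for the following transition.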

I also added a progress bar that showed how much longer you had to hold a pose for the background to change. I got the bar to work with the map function, mapping the number of frames the pose had been held, up to the maximum number of frames I wanted the background change to take, onto the screen width. The width and 0 are reversed because I inverted the camera in the CSS style to mirror the camera feed.
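The reversed mapping can be sketched as follows. The local `map` uses the same formula as p5's `map(value, start1, stop1, start2, stop2)` so the example runs without p5; `progressBarValue`, the 800-pixel width, and the 100-frame maximum are hypothetical values chosen for illustration.

```javascript
// Re-map `value` from the range start1..stop1 to the range start2..stop2.
function map(value, start1, stop1, start2, stop2) {
  return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
}

// Convert the number of frames a pose has been held into a bar value.
// The output range runs from screenWidth down to 0 (reversed) so that
// the bar reads correctly once the page is mirrored with CSS.
function progressBarValue(framesHeld, maxFrames, screenWidth) {
  const clamped = Math.min(Math.max(framesHeld, 0), maxFrames);
  return map(clamped, 0, maxFrames, screenWidth, 0);
}

const atStart = progressBarValue(0, 100, 800);   // pose just detected
const halfway = progressBarValue(50, 100, 800);  // halfway through the hold
const atEnd = progressBarValue(100, 100, 800);   // background is about to change
```

Clamping `framesHeld` before mapping keeps the bar from running past either edge if the counter overshoots.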

Final Thoughts/Comments & Future Work

It was interesting to see how my project evolved over time. Initially I wanted my project to carry a bit more weight and meaning, but after discussing it with friends and peers, a more playful tone seemed better suited to the dynamics and context of my project. As for the coding, I felt that a lot of the core ideas and functions of my project were rather simple. The most difficult part was making these functions run smoothly and efficiently. The obstacles were a mix of my own coding level and the programs and models I was using. For example, if I wanted to improve my project further, I would find another program to replace bodyPix, since it was laggy at detecting a person and segmenting them from the background.

Also, one piece of feedback I heard during our project presentations was to scale the images based on how close the user was to the screen. That would definitely make the project a lot more exciting and “alive”, and it is another aspect I would add to my project in the future.

IMA Show

Regarding the IMA show, because I did not have enough time to fully user-test my project beforehand, the show was really eye-opening for seeing how people interact with it.

If I were to pursue this project further, I would definitely figure out a better setup to showcase it. I noticed that when people first entered the room, most of their attention went towards the projector screen, and there was a disconnect in understanding that the yoga mat and laptop were where the main interaction happened. However, our space and resources were limited, so I don’t think there was much I could have done to fix this at the time.

As for the user interaction, I would add more direction so that people understand how to interact with my project. I noticed that most people were hesitant to approach it, not only because they were embarrassed to do yoga in a crowded room, but also because they were confused that they had to pick from the yoga poses above, perform one, and make it match the screen. I would probably take out guessing the yoga poses, give users the names of the poses instead, and then just have them guess which name matches the background.

Special thanks to everyone who has helped me with my project, but extra special thanks to Professor Moon for literally always being available and eager to help!