My Project:

My final project is called “Dr. Manhattan”. It combines several machine learning techniques into an interactive art project. The idea is to create a post-explosion sense of chaos in the interface. Users see themselves as if they were composed of nothing but hydrogen atoms, with their body parts broken into pieces and floating in disorder. A screenshot is then taken, and users are asked to find a way to rebuild that screenshot image. This proves that they can take control of the chaos and find a way back to the past. The meaning behind this project is to show the fragility of human life, humankind’s willpower to seek control in a constantly changing, chaotic world, and the futility of dwelling on the past. However closely the user’s image matches the screenshot, there will always be a slight difference.

Inspiration:

My inspiration for this project is the superhero character “Dr. Manhattan” from the comic Watchmen. In the comic, the character is caught in a tragic physics accident that scatters his body into atoms. He eventually manages to rebuild himself atom by atom according to the laws of physics, and his experience with atoms gives him the power to see his past, present, and future simultaneously. The character is rather philosophical. He is a man who can see through the chaos of the universe and rebuild himself from atoms by following its rules, yet he still can’t really do anything about the chaos of the world itself. He gave me the idea for a project in which users see themselves in a very chaotic, disordered form. They are then given an almost impossible task: rebuilding a past moment in a constantly changing, chaotic world. This gives them the sense that they might actually control the chaos and return to an organized world. I also named the project after the character.

The Process of Developing with Machine Learning Techniques

To give the interface a post-explosion, chaotic feel that fits the theme of the project, I first used Colfax to train several style transfer models. I tried several different style images for the models to train on and compared the outputs. I eventually chose the one with an interstellar theme, because it makes the webcam image look as if everything is composed of blue dots, like hydrogen atoms. This process was relatively smooth: I uploaded the train.sh file and the style image to Colfax, then downloaded the results. Each training run took about 20 hours.

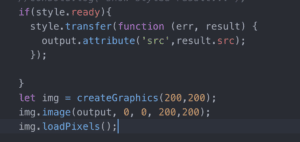

However, when I tried to use BodyPix to manipulate the pixels of the style-transferred images, I ran into a lot of difficulties. First, I found that I could not call “.loadPixels()” on the output of the style transfer model. When I consulted Professor Moon, he told me that the model’s output was actually an HTML element, so I first needed to turn it into a pixel image.

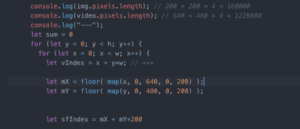

Then I found that even after converting the transferred output into a pixel image, I still couldn’t manipulate it effectively by iterating over its pixels. The segmented parts often appeared repeatedly on the canvas. For instance, when I tried to move the pixels of the left side of my face, the canvas showed four copies of it covering half of the canvas, which was not what I was aiming for. When I asked Professor Moon about this, he pointed out that the pixel array of the style-transferred image and that of the webcam image have different lengths. I therefore needed to find the pixel length of each image first and map the indices accordingly so that I could manipulate them correctly.
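The index mapping Professor Moon described can be sketched as follows. This is a minimal TypeScript illustration, not the project’s code. It assumes both images store pixels row by row as four values (R, G, B, A), as p5.js `pixels` arrays do, and that the style-transferred image is a scaled version of the webcam frame. The sizes (640×480 and 320×240) and the names `rgbaIndex` and `mapIndex` are hypothetical. For the p5.js canvas itself, the length of `pixels` also depends on `pixelDensity()`.

```typescript
// Map a pixel position in the webcam frame to the matching RGBA index in a
// differently sized image. Assumes both arrays store pixels row by row with
// four values (R, G, B, A) per pixel; the sizes below are hypothetical.

interface Size {
  width: number;
  height: number;
}

// Index of the red channel of pixel (x, y) in an RGBA array.
function rgbaIndex(x: number, y: number, width: number): number {
  return 4 * (y * width + x);
}

// Scale (x, y) from one image size to another, then index the target array.
function mapIndex(x: number, y: number, from: Size, to: Size): number {
  const tx = Math.min(to.width - 1, Math.floor((x * to.width) / from.width));
  const ty = Math.min(to.height - 1, Math.floor((y * to.height) / from.height));
  return rgbaIndex(tx, ty, to.width);
}

const webcam: Size = { width: 640, height: 480 };
const styled: Size = { width: 320, height: 240 };

console.log(4 * webcam.width * webcam.height); // 1228800
console.log(4 * styled.width * styled.height); // 307200
// Pixel (100, 50) of the webcam frame corresponds to pixel (50, 25) of the styled image.
console.log(mapIndex(100, 50, webcam, styled)); // 32200
```

An index computed for one array and reused unchanged on the other points at the wrong pixel, or past the end of the smaller array. This is one plausible way segments end up duplicated or misplaced.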

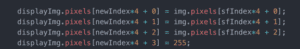

Even after I managed to move the pixels of the segmented body parts away from the body and make them float around the canvas, the original parts still appeared on the body, even when I tried to cover them with different pixels. Professor Moon later told me to create another variable, “displayImg”, to store the pixels of “img”, and to display that variable instead.
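The idea behind “displayImg” can be sketched as reading from one buffer and writing to another. In this hedged TypeScript sketch, `src` stands in for the pixels of “img” and the returned `display` array stands in for “displayImg”. Several details are assumptions for illustration: `partMap` (one BodyPix-style part id per pixel, -1 for background), the function name `scatterPart`, and the black fill used to erase the original part.

```typescript
// Read from `src` (standing in for "img"), write to a separate `display`
// buffer (standing in for "displayImg"). Assumes RGBA pixels, one part id
// per pixel in `partMap` (-1 = background), and equal sizes for both.

function scatterPart(
  src: Uint8ClampedArray,
  partMap: Int32Array,
  width: number,
  height: number,
  partId: number,
  dx: number,
  dy: number,
): Uint8ClampedArray {
  // Start from a copy so the source frame is never modified.
  const display = new Uint8ClampedArray(src);

  // 1) Erase the part at its original position (opaque black here).
  for (let i = 0; i < width * height; i++) {
    if (partMap[i] === partId) {
      display[4 * i] = 0;
      display[4 * i + 1] = 0;
      display[4 * i + 2] = 0;
      display[4 * i + 3] = 255;
    }
  }

  // 2) Copy the part's original pixels from `src` to the shifted position.
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      if (partMap[y * width + x] !== partId) continue;
      const nx = x + dx;
      const ny = y + dy;
      if (nx < 0 || nx >= width || ny < 0 || ny >= height) continue; // off-canvas
      const from = 4 * (y * width + x);
      const to = 4 * (ny * width + nx);
      for (let c = 0; c < 4; c++) display[to + c] = src[from + c];
    }
  }
  return display;
}

// Tiny 3x1 frame: the part with id 5 at x = 0 moves two pixels to the right.
const frame = new Uint8ClampedArray([10, 10, 10, 255, 20, 20, 20, 255, 30, 30, 30, 255]);
const parts = new Int32Array([5, -1, -1]);
const result = scatterPart(frame, parts, 3, 1, 5, 2, 0);
// result: pixel 0 erased to black, pixel 1 unchanged, pixel 2 holds (10, 10, 10, 255).
```

Every moved pixel is read from the untouched `src`. As a result, erasing the part in `display` never corrupts the colors being copied, and the body part no longer shows at its original position.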

After I used BodyPix to segment the body parts, the image on the canvas showed the person’s face cut in half and appearing in various places. The arms, shoulders, and hands were all torn away from the body and showed up in different places as well. I then used KNN to compare the current image with the screenshot image, so that users can tell how well they are rebuilding the screenshot. During the presentation, I used the save() function to capture the current frame as a screenshot, which was then downloaded. However, Professor Moon later taught me that capturing a screenshot and reloading it can be done simply with the get() function. I also added background music to tie the project more closely to the theme of chaos and scattering.
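The KNN comparison can be illustrated with a small k-nearest-neighbors classifier. ml5.js provides a `KNNClassifier` for this task, usually fed with feature vectors from a pretrained network. The TypeScript sketch below reimplements only the core idea on plain number arrays so the logic is visible. The two-dimensional feature vectors, the labels “screenshot” and “other”, and the function names are made up. This is not necessarily how the project computed its score.

```typescript
// Minimal k-nearest-neighbors sketch on plain feature vectors.
// Feature values and labels below are made up for illustration.

interface Example {
  features: number[];
  label: string;
}

function euclidean(a: number[], b: number[]): number {
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return Math.sqrt(sum);
}

// For each label, the fraction of the k nearest stored examples that carry it.
function knnConfidences(examples: Example[], query: number[], k: number): Record<string, number> {
  const nearest = examples
    .map((e) => ({ label: e.label, dist: euclidean(e.features, query) }))
    .sort((p, q) => p.dist - q.dist)
    .slice(0, Math.min(k, examples.length));
  const confidences: Record<string, number> = {};
  for (const n of nearest) {
    confidences[n.label] = (confidences[n.label] || 0) + 1;
  }
  for (const label of Object.keys(confidences)) {
    confidences[label] /= nearest.length;
  }
  return confidences;
}

// Examples captured from the screenshot pose vs. other poses (hypothetical values).
const examples: Example[] = [
  { features: [0.9, 0.1], label: "screenshot" },
  { features: [0.85, 0.15], label: "screenshot" },
  { features: [0.8, 0.2], label: "screenshot" },
  { features: [0.1, 0.9], label: "other" },
  { features: [0.2, 0.8], label: "other" },
];

console.log(knnConfidences(examples, [0.7, 0.3], 3)); // { screenshot: 1 }
```

The share of nearest neighbors labeled “screenshot” acts as a rough “how close am I” score. Raw pixels could serve as features in principle, but they are sensitive to lighting and small shifts, so features from a pretrained model are typically used instead.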

Project Demo

https://drive.google.com/file/d/1yX9cjRdzE9uTAuK2sBTgmlVMSkhRXnAp/view?usp=sharing

Deficiencies & Future Development

As the critics pointed out during the presentation, more ways of manipulating the style-transferred body pixels remain to be explored. Currently, to keep the scattered pieces recognizable as body parts, I detach the whole arm, including the elbow and hand, from the body as a single piece. However, manipulating the pixels this way makes the scattering effect less obvious. In the future, I could separate the arm and the hand as well and handle those segments better, so that they are both recognizable as body parts and more artistic.

In addition, as Tristan pointed out, the way I used KNN in my project didn’t really help convey the idea I wanted to express. Asking users to imitate one screenshot is a rather indirect way of illustrating the idea of struggling in chaos and dwelling on the past. Instead, I should try tracking the actual positions of the users’ body parts. Users could then interact with the images on the canvas to trigger a wider range of outputs, such as rebuilding part of the body or scattering it even more severely.
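The proposed separation of arm and hand could start from a lookup table that gives each group of BodyPix parts its own offset. In this TypeScript sketch, part ids follow the list in the TensorFlow.js BodyPix documentation: 2–3 for the left upper arm, 6–7 for the left lower arm, and 10 for the left hand. Whether the BodyPix wrapper used in this project exposes the same ids is an assumption, and the group names and offset values are hypothetical.

```typescript
// Each group moves as one piece. Splitting the arm into upper arm, lower arm,
// and hand makes the scattering more visible while each piece stays
// recognizable. Ids follow the TensorFlow.js BodyPix part list (assumed);
// offsets are hypothetical values in pixels.

type Offset = { dx: number; dy: number };

interface ScatterGroup {
  name: string;
  partIds: number[];
  offset: Offset;
}

const scatterGroups: ScatterGroup[] = [
  { name: "leftUpperArm", partIds: [2, 3], offset: { dx: -40, dy: 10 } }, // left_upper_arm_front/back
  { name: "leftLowerArm", partIds: [6, 7], offset: { dx: -90, dy: 35 } }, // left_lower_arm_front/back
  { name: "leftHand", partIds: [10], offset: { dx: -150, dy: -20 } }, // left_hand
];

// Offset for a pixel's part id; unlisted parts and background (-1) stay put.
function offsetForPart(partId: number, groups: ScatterGroup[]): Offset {
  for (const group of groups) {
    if (group.partIds.indexOf(partId) !== -1) return group.offset;
  }
  return { dx: 0, dy: 0 };
}

// Where a pixel at (x, y) should be drawn, or null if it would leave the canvas.
function destination(
  x: number,
  y: number,
  partId: number,
  width: number,
  height: number,
  groups: ScatterGroup[],
): { x: number; y: number } | null {
  const { dx, dy } = offsetForPart(partId, groups);
  const nx = x + dx;
  const ny = y + dy;
  if (nx < 0 || nx >= width || ny < 0 || ny >= height) return null;
  return { x: nx, y: ny };
}

console.log(destination(200, 100, 6, 640, 480, scatterGroups)); // { x: 110, y: 135 }
console.log(destination(200, 100, 10, 640, 480, scatterGroups)); // { x: 50, y: 80 }
```

The offsets could also change from frame to frame to keep the pieces drifting. Alternatively, they could shrink as a user’s pose approaches a target, which would fit the interactive rebuilding described above.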