AI-driven style transfer has been around for several years, and its main application is to apply the style of one image to another. Its performance cost has dropped so much that even a modern smartphone can run it, and the same phones can also render high-quality 3D graphics. Is there a similar application of this technology to 3D use cases? The answer is yes, but there are not many.

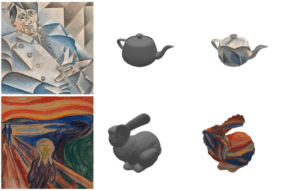

First, consider style transfer for the texture images of 3D models. This is nearly identical to 2D image style transfer, except that it is applied to texture images.

Check out this example from Agisoft:

This feature has been integrated into Agisoft PhotoScan to quickly restyle the look of 3D models. However, it does not modify the mesh itself.

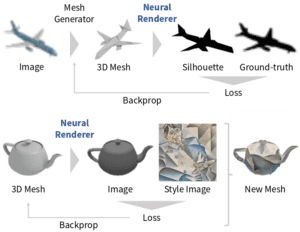
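Since texture style transfer reuses the 2D technique, its core ingredient can be sketched directly. The snippet below shows one common formulation, the Gram-matrix style loss from Gatys et al.'s neural style transfer; it is a minimal sketch, not Agisoft's implementation. It assumes the feature maps have already been extracted from the texture and style images by a pretrained CNN (for example VGG), which is not shown, and the function names are hypothetical. In a full pipeline this loss would be summed over several CNN layers and minimized with respect to the texture's pixels.

```python
import numpy as np

def gram_matrix(features):
    """Gram matrix of a (C, H, W) feature map: channel-by-channel correlations."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)   # one row per channel, one column per pixel
    return flat @ flat.T / (h * w)      # (C, C), normalized by number of positions

def style_loss(texture_features, style_features):
    """Mean squared difference between the Gram matrices of two feature maps.

    Hypothetical helper: both inputs are assumed to be features from the same
    CNN layer, computed on the texture image and on the style image.
    """
    g_tex = gram_matrix(texture_features)
    g_sty = gram_matrix(style_features)
    return float(np.mean((g_tex - g_sty) ** 2))

# Random arrays stand in for real CNN features in this sketch.
rng = np.random.default_rng(0)
texture_feats = rng.standard_normal((4, 8, 8))
style_feats = rng.standard_normal((4, 8, 8))
print(style_loss(texture_feats, style_feats))
```

Because the Gram matrix sums over all spatial positions, it records which features occur together rather than where they occur, which is why it captures style rather than layout.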

Secondly, let’s look at the Neural 3D Mesh Renderer, a more sophisticated approach that also modifies the mesh to some extent.

The same work also tackles 3D mesh reconstruction: given a single 2D image as input, output a corresponding 3D mesh. Mesh style transfer is implemented through the special renderer that the authors built. The renderer takes a mesh, renders 2D images from different viewpoints, computes a style loss on each image, and feeds the result back to the 3D mesh. Because the renderer is differentiable, changes to the contours in the rendered images translate into changes in the positions of the 3D vertices.
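The key idea, that a loss measured on rendered 2D views can move 3D vertices, can be illustrated with a toy sketch. This is not the paper's renderer. It assumes an orthographic camera orbiting the object about the y-axis, "renders" each view by simply projecting vertices to 2D points, and replaces the style loss with a squared error against hypothetical target 2D points; all names are hypothetical. Projecting points is already differentiable, so the gradient is written out by hand here; the Neural 3D Mesh Renderer's actual contribution is an approximate gradient for rasterization, which this toy sidesteps.

```python
import numpy as np

def rotation_y(theta):
    """Rotation about the y-axis (a camera orbiting the object)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])

# Orthographic projection: keep x and y, drop depth z.
PROJ = np.array([[1.0, 0.0, 0.0],
                 [0.0, 1.0, 0.0]])

def render_points(vertices, theta):
    """Toy stand-in for rendering: project (N, 3) vertices to (N, 2) points."""
    return vertices @ rotation_y(theta).T @ PROJ.T

def multiview_loss_and_grad(vertices, targets, thetas):
    """Sum of squared 2D errors over all views, and its gradient w.r.t. vertices."""
    loss = 0.0
    grad = np.zeros_like(vertices)
    for target, theta in zip(targets, thetas):
        m = PROJ @ rotation_y(theta)          # (2, 3): view rotation + projection
        residual = vertices @ m.T - target    # (N, 2) per-view image-space error
        loss += float(np.sum(residual ** 2))
        grad += 2.0 * residual @ m            # chain rule back to the 3D vertices
    return loss, grad

def optimize(vertices, targets, thetas, lr=0.05, steps=200):
    """Plain gradient descent on vertex positions."""
    v = vertices.copy()
    for _ in range(steps):
        _, g = multiview_loss_and_grad(v, targets, thetas)
        v -= lr * g
    return v
```

With two orthogonal views, every vertex coordinate is seen by at least one view, so the vertices converge toward positions that reproduce the targets. With a single view at `theta = 0`, the depth coordinate receives no gradient at all, which is why multiple viewpoints matter.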

These two techniques can be applied creatively in 3D production. In a stylized game, texture style transfer can help artists achieve the desired look efficiently. In an interactive piece, it would be fun to watch 3D sculptures transfer their style in real time.

References:

https://blog.sketchfab.com/style-transfer-for-3d-models-with-agisoft-photoscan/

H. Kato, Y. Ushiku, and T. Harada, "Neural 3D Mesh Renderer," CVPR 2018.