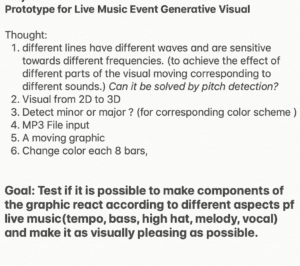

I knew from the start what I wanted to build. Inspired by the ideation presentation at the beginning of the semester, I came up with the idea of improving the live music experience with generative graphics.

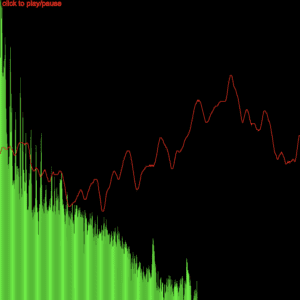

I immediately thought of the machine learning pitch detection model. The graphic we made with it in class was two-dimensional and consisted of two parts: a green element representing the scale and a red wave displaying the sound waveform.

I recognized three challenges. First, I had to change the input from the computer's microphone to an MP3 music file. Second, I had to find several ways of feeding information into the generative graphics so that they would change with the music. Finally, there was the graphic itself. The first issue was easy to address with a simple load function, so I spent most of my time on the last two.

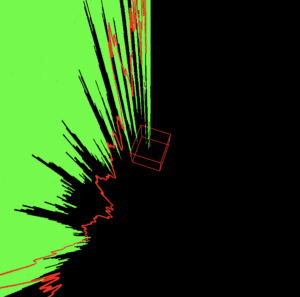

I started by creating the visuals. Professor Moon recommended that I check out WEBGL and the rotating box. After making a simple box and switching the usual 2D p5 canvas to 3D, I was amazed by the results.

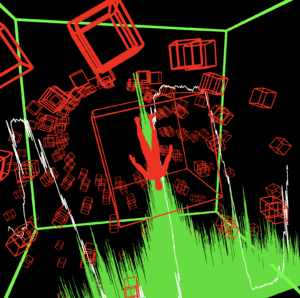

After seeing what 3D could do in p5, I wanted to explore what else I could do with the graphics, so I searched for 3D models I could add to make them look nicer.

After adding a human-shaped model and a sine graphic and making some adjustments, I was quite happy with the results.

Meanwhile, Professor Moon told me to observe the sound waves and look for patterns. I noticed that certain parts of the waves react more to the bass and others react more to the treble. Vocals also make the waves move intensely. Most importantly, the bass peaks are very rhythmic and follow a consistent pattern.

In my second meeting with Professor Moon, we wrote a function that sets a threshold on the value of the region of the sound wave that reacts most strongly to the bass. Seeing the graphics change with the music blew my mind.
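A minimal sketch of the threshold idea follows. It assumes that each frame gives an array of numbers, such as spectrum bins or waveform samples, and that one index range of that array is the bass-reactive region. The names `regionLevel` and `isBassHit`, the region bounds, and the threshold are hypothetical and are not the function we actually wrote.

```typescript
// Hypothetical helper: average the absolute values in one region
// [start, end) of the per-frame array.
function regionLevel(values: number[], start: number, end: number): number {
  const region = values.slice(start, end);
  if (region.length === 0) return 0; // empty region counts as silence
  let sum = 0;
  for (const v of region) sum += Math.abs(v);
  return sum / region.length;
}

// Hypothetical trigger: a "bass hit" is any frame whose region level
// is above a fixed threshold.
function isBassHit(values: number[], start: number, end: number, threshold: number): boolean {
  return regionLevel(values, start, end) > threshold;
}

// Usage with made-up numbers: the first three entries stand in for the bass region.
const frame = [200, 220, 240, 30, 10, 5];
const hit = isBassHit(frame, 0, 3, 180); // average is 220, which is above 180, so hit is true
```

A fixed threshold like this is simple. However, whether it fires depends on how loud the track is overall.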

Although the results were noticeable, this detection method was still not sensitive enough. I then proposed measuring the velocity of the change in the scale, that is, how quickly the value changes from one frame to the next. By setting a threshold on that change, we achieved a better effect, and the method also worked better across different genres of music.
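The change-based version can be sketched as a small detector that remembers the previous frame's level and fires only when the level jumps up by more than a minimum amount. The class name `RiseDetector`, the `minRise` parameter, and the sample numbers are hypothetical. This illustrates the idea and is not the exact code from the meeting.

```typescript
// Hypothetical onset detector: instead of asking "is the level high?",
// it asks "did the level rise quickly since the last frame?".
class RiseDetector {
  private previous: number | null = null;
  private readonly minRise: number;

  constructor(minRise: number) {
    this.minRise = minRise;
  }

  // Call once per frame. Returns true when the level rose by more
  // than minRise compared with the previous frame.
  update(level: number): boolean {
    const rise = this.previous === null ? 0 : level - this.previous;
    this.previous = level;
    return rise > this.minRise;
  }
}

// Usage with made-up levels:
const detector = new RiseDetector(50);
const levels = [100, 110, 190, 200, 120, 220];
const hits = levels.map((l) => detector.update(l));
// hits is [false, false, true, false, false, true]
```

One plausible reason this works across genres is that it reacts to relative jumps rather than absolute loudness. A quiet track with a clear kick and a loud, compressed track can both trigger it.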

Finally, I adjusted the graphics to match the bass sounds without having too much going on.

I was so happy with the results that I showed them to all my friends and my parents, paired with each person's preferred type of music. The response was best for hip hop and EDM, mainly because of the style of the graphics.

These are the notes I made after each meeting with Professor Moon, covering goals, new learnings, and possibilities.

I see myself developing this project further in the future, and I have also given it a name: Project META. It sounds cool, and it comes from the word metamorphosis, which represents change.

Since most of the graphics are currently based on the visuals of the actual sound waves, Professor Moon suggested improving the project by making all the graphics respond to the music itself. I believe this advice would take the project to another level and improve my understanding of both code and sound, so I will definitely pursue this direction. Along with Professor Moon's suggestion, Tristan also gave very good advice about using lerp to smooth out the graphics.
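The following is a rough sketch of how lerp could smooth the graphics. Each frame, the displayed value moves only part of the way toward the new raw value, so sudden jumps turn into quick eases. The `lerp` below uses the same formula as p5's `lerp(start, stop, amt)`. The `smooth` helper, the amount of `0.5`, and the sample values are hypothetical.

```typescript
// Same formula as p5's lerp(start, stop, amt).
function lerp(start: number, stop: number, amt: number): number {
  return start + (stop - start) * amt;
}

// Hypothetical smoothing pass: each frame, move the displayed value
// a fraction `amt` of the way toward that frame's raw target.
function smooth(targets: number[], amt: number, initial = 0): number[] {
  const out: number[] = [];
  let current = initial;
  for (const t of targets) {
    current = lerp(current, t, amt);
    out.push(current);
  }
  return out;
}

// Usage: a raw value that jumps to 100 and drops back to 0.
const raw = [0, 100, 100, 0];
const eased = smooth(raw, 0.5);
// eased is [0, 50, 75, 37.5]
```

A smaller `amt` gives smoother but laggier motion. A larger one stays closer to the beat but looks jumpier.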