Nuraphones

The nuraphone is a fascinating product that adapts to your ears. When you put it on, it analyzes your hearing by measuring the very faint sound your ear returns, and then builds a sound profile for you (ref. https://www.nuraphone.com/pages/how-it-works). It then plays music tuned to that profile, perhaps by adjusting tone, amplitude, and frequency, so that it best suits your hearing.

Future Implications and Scenarios

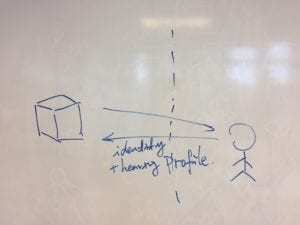

Technology that analyzes ears seems magical, but it can compromise people’s privacy. Each person’s ear profile is unique, so it offers a potential way to identify people, or even locate them. It could also lead to discrimination against people with poor hearing. For instance, in the job market, a hearing profile could become a new standard for entering certain locations. Imagine that one day we invent a device that can analyze people’s sound profiles from a greater distance, such as several meters or more (see the sketch in this section). People could then be easily identified or even located without their knowledge. This is a loss of privacy, and it opens the door to censorship and surveillance.

Possible Solutions

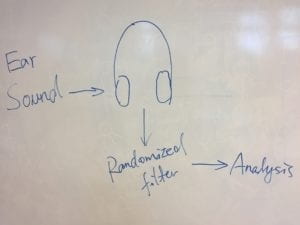

Personally, I think that the problems identified above are relatively hard to solve. There might be two approaches. First, companies such as Nura should make sure that all sound profiles are kept secret. Second, one potential technical approach is to add a sound filter between the sound collector and the algorithm that analyzes the sound (see the sketch in this section). With such a filter, the person’s sound profile will be slightly different each time it is measured, while still retaining the characteristics that matter for sound analysis.
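
To make the filter idea more concrete, here is a minimal Python sketch. It is only an illustration under my own assumptions: I represent a sound profile as a list of numbers (one per frequency band), and the names `obfuscate_profile` and `noise_scale` are hypothetical, not anything Nura actually uses. The filter adds a small, bounded random offset to each band, so every reading differs slightly but stays close to the real profile.

```python
import random

def obfuscate_profile(profile, noise_scale=0.05, rng=None):
    """Return a copy of `profile` with small bounded random noise per band.

    `profile` is a hypothetical list of per-band sensitivity values.
    Each output value stays within `noise_scale` of the original value.
    """
    rng = rng or random.Random()
    return [value + rng.uniform(-noise_scale, noise_scale) for value in profile]

# Hypothetical hearing profile: relative sensitivity in six frequency bands.
original = [0.82, 0.75, 0.91, 0.60, 0.48, 0.33]

# Two readings of the same ear pass through the filter independently.
first = obfuscate_profile(original)
second = obfuscate_profile(original)

print(first)
print(second)
```

The two printed profiles will differ from each other, which makes exact matching harder, but both remain within `noise_scale` of the original, so they should still be usable for tuning the music. One limitation worth noting: because the noise averages out, someone who collects many filtered readings of the same person could still estimate the real profile.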

Reflection

In my opinion, the development of big data and machine learning algorithms always involves a tradeoff between convenience and privacy. Currently, in China, the trend is that people tend to pursue convenience without giving much thought to their privacy. Personally, I think that although this might be a good trend in terms of encouraging the development of AI, it could lead to serious problems one day, when our privacy has been fully compromised by data-collecting companies and government surveillance.