For my Interaction Lab final project, my partner Kyle and I created an interactive audiovisual piece called Spacetime Symphony. The project generated 3D visuals and audio in Processing, and both changed as users moved their hands toward or away from distance sensors connected to an Arduino.

The conception and design of this project were flexible and undecided at first. We knew we wanted several components in our project: we wanted people to collaborate, we wanted people to create something together, and we wanted to include things we were interested in (art and music). We thought it would be interesting to make a collaborative soundscape. Because Processing libraries let us load music and extract its frequencies, and because Processing makes it easy to create visuals, we thought that sending data from Arduino to Processing was the most feasible approach for our project. We created a moodboard to figure out the visuals, and my partner based the sounds on those visuals. We thought that having multiple kinds of sensors might be confusing, and that using the same type of sensor throughout would make the device more focused and easier to understand, since our goal was to make art and music easier to create together.

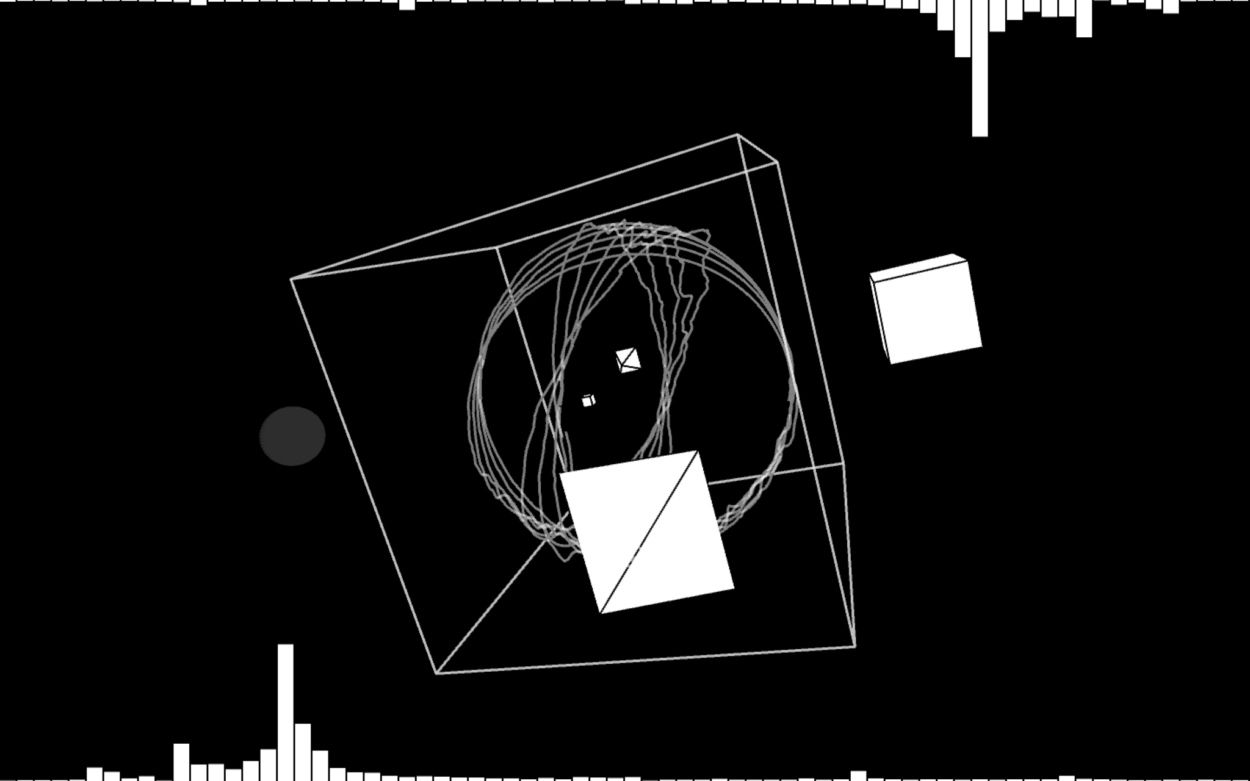

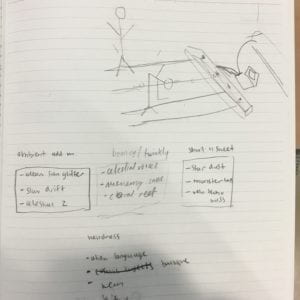

The fabrication and production process was split between my partner and me. He was in charge of the audio assets, Arduino code, and physical component, while I was in charge of the visuals, extracting frequencies from the audio, and connecting his components to my Processing code. For my production process, I first waited until he had finished all the audio assets, which he made in GarageBand. He made the entire soundscape and made sure each audio asset could be split and synced with the tempo. Once he had AirDropped the audio files to me, I used some demo code to incorporate them into Processing. I spent a couple of days crafting the code and creating 2 different demo visuals until I was satisfied with the results. Because the visuals were a big part of what users would see, we needed visuals whose color and size were relatively easy and obvious to manipulate, yet also appealing to the eye. At first the visuals changed in many ways with certain combinations of distances. I then decided to give each sound asset its own shape to manipulate, so one could tell that the visuals were changing with clear intention rather than appearing random.

During the user testing session, we had our sensors facing the user because we wanted users to interact by standing and moving their bodies. However, user testing revealed many issues with our fabricated prototype. Most people who approached our project used their hands instead of walking, and they did not know that there were several audio assets that changed depending on their distance from the sensor. Another issue was that people moved their hands near the sensor so quickly that they couldn’t tell what was changing on the screen. Overall, the design didn’t clearly connect the visuals and audio to what the user was doing.
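To make the idea of distance-dependent audio assets more concrete, here is a minimal sketch in plain Java of how a distance reading could be mapped to a zone, with each zone standing for one audio asset. This is not our actual project code: the class name `DistanceZones`, the method `zoneFor`, and the centimeter thresholds are all hypothetical and only illustrate the idea.

```java
// Hypothetical illustration, not the project's code.
// Maps a distance reading to a zone index; each zone could stand for one audio asset.
class DistanceZones {

    // Returns the index of the first zone whose upper bound is greater than
    // the reading, or -1 if the reading is beyond the last zone.
    // upperBoundsCm must be sorted in ascending order.
    static int zoneFor(double distanceCm, double[] upperBoundsCm) {
        for (int i = 0; i < upperBoundsCm.length; i++) {
            if (distanceCm < upperBoundsCm[i]) {
                return i;
            }
        }
        return -1; // out of range: no asset selected
    }

    public static void main(String[] args) {
        // Assumed thresholds: three zones ending at 10 cm, 20 cm, and 30 cm.
        double[] bounds = {10.0, 20.0, 30.0};
        System.out.println(zoneFor(12.0, bounds)); // prints 1
    }
}
```

With fixed zones like these, a marking on the device can show the user where one zone ends and the next begins.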

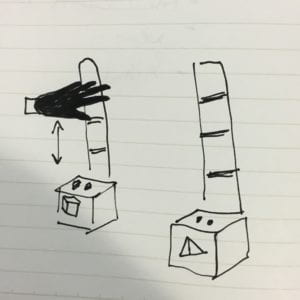

Kyle and I spent the Friday after user testing fixing our fabricated prototype. We separated the boxes to encourage multiple people to interact with the device, and we enclosed our Arduino and wires. We also added an acrylic panel at the back with markings that showed where to put your hand. Additionally, because of the way users had been interacting with the device, we changed the interaction to use hands rather than standing. We also added a label to each box showing what that box changed on the screen, to help users understand what was happening with each distance sensor. In the Processing code, I also changed how often the program checked for changes in hand position to once every 5 frames. The original code checked every 20 frames, which was too slow, but when I removed the check entirely, the drawings would glitch and slow down because the “update” function was being called so many times, which was an issue I ran into.
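The frame-based check described above can be sketched roughly as follows. This is a simplified stand-in written in plain Java, not the code from our sketch: the class `FrameThrottle` and its methods are hypothetical names. In a Processing sketch, the built-in `frameCount` variable would be passed in from `draw()`.

```java
// Hypothetical illustration, not the project's code.
// Runs an update only once every `interval` frames instead of on every frame.
class FrameThrottle {
    private final int interval; // frames between updates
    private int updates = 0;    // how many times the update actually ran

    FrameThrottle(int interval) {
        if (interval <= 0) {
            throw new IllegalArgumentException("interval must be positive");
        }
        this.interval = interval;
    }

    // True when frameCount is a multiple of the interval.
    boolean shouldUpdate(int frameCount) {
        return frameCount % interval == 0;
    }

    // Call once per frame; runs `update` only on frames where shouldUpdate is true.
    boolean tick(int frameCount, Runnable update) {
        if (shouldUpdate(frameCount)) {
            update.run();
            updates++;
            return true;
        }
        return false;
    }

    int updateCount() {
        return updates;
    }

    public static void main(String[] args) {
        FrameThrottle throttle = new FrameThrottle(5);
        // Simulate 60 frames, numbered 1 to 60.
        for (int frame = 1; frame <= 60; frame++) {
            throttle.tick(frame, () -> { /* read sensor, update shapes */ });
        }
        System.out.println(throttle.updateCount()); // prints 12
    }
}
```

The interval is a trade-off: a larger value makes the visuals respond more slowly to hand movement, while a smaller value calls the update more often.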

We used cardboard for our physical component because we wanted to draw the shapes onto the boxes. We also glued the acrylic onto the back of the component instead of making it a part of the box, because we wanted the marks to be changeable in case they were printed wrong, and we wanted a lighter material because we were afraid that the box would fall over. With these changes, we hoped that the intention of our design would be self-explanatory. However, I do think there is an issue with our physical component, which I considered when redesigning it: everyone’s hand size is different, so the top of the hand would bump into the acrylic marker. We decided it was fine since users could move the box. Looking back on that decision, I think it still would have been better to move the acrylic stick to the side of the box.

The goal of my final project was to create a collaborative experience where people can create music and visuals in an easy way. My project results align with my definition of interaction because you can visibly see the interaction between two entities or actors: when you change the distance between your hands and the sensor, the visuals and audio react to the change. However, the audience was still somewhat hesitant to approach the project, there was only some collaboration, and the design was slightly faulty. If I had more time, I would have made the boxes more approachable and spread out (LEDs?) and written instructions to help users understand the intention behind our design. I would also move the acrylic from the back to the side of the box.

I learned a lot from what I accomplished and from my setbacks and failures. With coding, I learned how to use the Minim library, Processing in 3D, and animation in Processing. With design, I learned that designing with purpose, functionality, and friendliness (if I could say) takes a lot of considerations into account. Designing for functionality and purpose makes you think about the audience and how they would feel about and interact with your device. Although our physical component was not very inviting, it made me think: how do designers create objects that make people want to use them? How can you use design to communicate what you want an object to do? How do you bring an invention or foreign object into the world and make it inviting? As for the visuals I created, they make me think about how to make visuals that people would want to look at and would notice. We were only allowed a Mac screen for the IMA show, which made me wonder: if we had shown it as a projection or on a TV, would people have looked at it more?

With this final project, I learned a lot about how to create an interactive experience without touching. Often, interaction involves pressing a button, moving a joystick, or using force or torque. How can we make audiences interact with objects through mere movements around them, rather than through strength? Usually an object is something to hold and be tactile with, not to hover over.

Ultimately, we decided to create a project in which users barely touch anything at all, because we wanted the experience to be seamless and effortless. We wanted to encourage collaboration through art and music, as well as create an improvised moment between people, much like communication itself: unplanned but natural. I think because of this intention, our project created meaningful interactions between people through music and art and gave people a new way to interact with objects without touching them. Why are we compelled to approach certain designed objects more than others? What do we decipher from them when we look at them? Is it comfort? Is it beauty? And why, at least for this project, are we so used to tactile interactions? These questions make me think about how we are used to certain ways of navigating the world and how that bleeds into our approaches to different experiences. When we live our lives, we are following a certain kind of design: the design of a lifestyle, a design for how we approach life. With this class, we are given an opportunity to break those boundaries, inventing how we approach, design, and interact with technology. We are able to propose: what if?