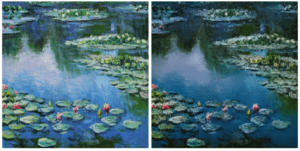

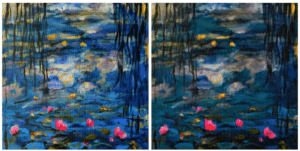

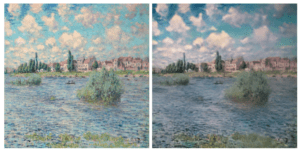

This week I played with CycleGAN to generate some interesting images. However, because of the time limit and because my access to the Intel AI DevCloud had been canceled, I tested CycleGAN using the model we transferred during class. Here are some of the results.

The transferred images look blurred compared with the originals. Their color scheme also shifted slightly toward real-world colors; in other words, the color contrast was reduced. The model performed quite well on the water and the sky, but less well on the shapes of the buildings, the trees, and the bridge in the first image. Two possible reasons might account for this result. First, if the transfer logic is that real-world images have smoother textures and the network blurs the artwork to achieve a similar effect, then the water and the sky are certainly easier to handle. Second, the training set might contain more images of water or sky, which would make the characteristics of those elements easier for the network to extract.
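The "blurred" and "reduced contrast" impressions above are visual judgments, but they could be checked with two simple numbers. The following is a minimal sketch, not something I ran on the actual outputs: it assumes the images are already loaded as NumPy float arrays in `[0, 1]` with shape `H x W x 3`, and the function names (`to_gray`, `rms_contrast`, `laplacian_variance`) are made up for illustration. RMS contrast is the standard deviation of the grayscale intensities, and the variance of a discrete Laplacian is a common rough proxy for sharpness (lower usually means blurrier).

```python
import numpy as np

def to_gray(img):
    """Convert an H x W x 3 float image in [0, 1] to grayscale using luma weights."""
    return img @ np.array([0.299, 0.587, 0.114])

def rms_contrast(gray):
    """RMS contrast: standard deviation of the pixel intensities."""
    return float(gray.std())

def laplacian_variance(gray):
    """Variance of a 4-neighbor discrete Laplacian over interior pixels.

    Lower values suggest a smoother (blurrier) image.
    """
    lap = (
        gray[:-2, 1:-1] + gray[2:, 1:-1]      # pixels above and below
        + gray[1:-1, :-2] + gray[1:-1, 2:]    # pixels left and right
        - 4.0 * gray[1:-1, 1:-1]              # center pixel
    )
    return float(lap.var())

def compare(original, transferred):
    """Return (contrast, sharpness) pairs for two images of the same kind."""
    g0, g1 = to_gray(original), to_gray(transferred)
    return {
        "contrast": (rms_contrast(g0), rms_contrast(g1)),
        "sharpness": (laplacian_variance(g0), laplacian_variance(g1)),
    }
```

If the transferred image really is blurrier and flatter, both numbers in each pair should drop from the first entry to the second. Computing the same metrics on crops of the sky and of the buildings separately would be one way to test the first explanation above.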

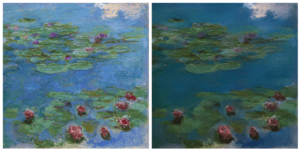

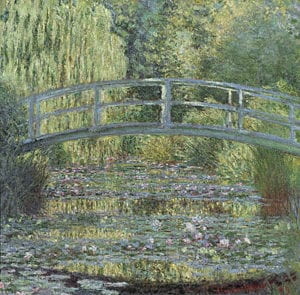

Then I tested another network with a Monet painting. This model is a convolutional network that can transfer the style of one image to another. I found an image on the web of the real spot that Monet depicted in his work. I chose this one because it may be the picture that best represents the light and color in Monet’s painting, so we can compare this network with CycleGAN based on the output. So here is what I got:

The input images; the first one is the real-world photo:

The output:

It looks like the model only tried to combine the two styles instead of transferring one image’s style to the other. This network can also combine two styles, so I gave that a try, but the output was much the same. In this comparison, CycleGAN clearly achieved the better result.
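One possible reason for the "combined" look, assuming this network follows the common Gram-matrix approach to style transfer (an assumption, not something confirmed for the model tested here): such methods balance a content loss against a style loss computed from feature correlations, so the output is a compromise between the two images by design. The sketch below uses random arrays as stand-ins for convolutional feature maps of shape `C x H x W`; the function names are illustrative only.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel correlations of a C x H x W feature map (C x C result)."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (c * h * w)

def style_loss(feat_a, feat_b):
    """Mean squared difference between Gram matrices (ignores spatial layout)."""
    return float(np.mean((gram_matrix(feat_a) - gram_matrix(feat_b)) ** 2))

def content_loss(feat_a, feat_b):
    """Mean squared difference between the feature maps themselves."""
    return float(np.mean((feat_a - feat_b) ** 2))

def total_loss(output, content, style, style_weight=1.0):
    """Weighted sum that a Gram-based method would minimize (hypothetical weighting)."""
    return content_loss(output, content) + style_weight * style_loss(output, style)
```

Because the Gram matrix sums over all spatial positions, the style term only captures which textures and colors occur together, not where they are. CycleGAN, by contrast, learns a mapping between two whole image domains, which may be part of why its results looked more convincing here.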