http://imanas.shanghai.nyu.edu/~ktb300/audio_project%202/

Description

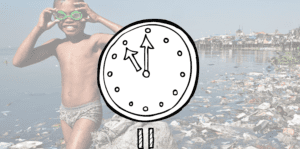

Our intention for this project was to simulate a PSA style that brings awareness to an urgent issue in a creative way. The layout of the website has a clock in the center with various pollution-related photos in the background. Once the play button is pressed, a playful and light underbeat begins, and each time the user presses the clock, it triggers the sound of a new pollutant. The juxtaposition of the visuals with the mood of the sounds is meant to portray the harmful effect of our seemingly inconsequential habits, e.g. driving motor vehicles, which contributes to carbon emissions, and using plastic bags. The clock symbolizes people's fixation on their daily routines, sometimes oblivious to the existential and pressing issues of the outside world. Once the clock hand reaches 12 am, sirens sound off, signaling the final audio recording: a call to action. Users can then click on the clock and be routed to the UN Climate Action website.

Process

We had originally planned for minimal interaction, but we learned from the test run in class last Thursday that it was important to add more interaction and visual elements for a better user experience and general understanding of the topic. I initially thought it would be cool to have minimal visuals, so the user would have to infer the meaning and then come to a surprising understanding at the end. However, that wake-up call ended up benefiting us immensely because our project is much more powerful and comprehensive than before.

We gathered the majority of our audio using the TASCAM. All the sounds heard when the clock is clicked are recordings of things both Selina and I associate with being harmful to the environment. The other tunes heard underneath our recordings come from royalty-free websites. Lastly, we gathered recordings of Donald Trump and of Sir David Attenborough's famous speech at a United Nations conference in December 2018. Our initial plan was to make one holistic audio track, but we instead opted for interactivity: we attached separate audio IDs to the sounds and tied each one to a number of clicks, so the user triggers the audio. For the audio at the end, though, we did use Audacity, which was helpful in manipulating certain sounds.
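A minimal sketch of how tying audio IDs to click counts can work is shown here. It is an illustration under stated assumptions, not the project's actual code: the element IDs (`clock`, `sound-car`, `sound-plastic-bag`, `sound-siren`) and the function name `audioIdForClick` are hypothetical, and the page is assumed to contain one `<audio>` element per ID.

```javascript
// Hypothetical audio element IDs, listed in the order they should play.
// The real project's IDs are not given in this post.
const CLICK_SOUNDS = ["sound-car", "sound-plastic-bag", "sound-siren"];

// Return the audio ID for the nth click (1-based),
// or null when the count is invalid or runs past the end of the list.
function audioIdForClick(clickCount, ids = CLICK_SOUNDS) {
  if (!Number.isInteger(clickCount) || clickCount < 1) return null;
  return clickCount <= ids.length ? ids[clickCount - 1] : null;
}

// Browser wiring, guarded so the snippet also loads outside a web page.
if (typeof document !== "undefined") {
  let clicks = 0;
  const clock = document.getElementById("clock"); // assumed ID for the clock
  if (clock) {
    clock.addEventListener("click", () => {
      clicks += 1;
      const id = audioIdForClick(clicks);
      const audio = id && document.getElementById(id);
      if (audio) audio.play(); // play the <audio> element matched to this click
    });
  }
}
```

Keeping the click-to-ID lookup in its own small function means the order of the sounds lives in one array, so adding or reordering a sound does not require touching the click handler.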

I think the most difficult part of this project was the interactive audio portion. Because we have so many audio IDs and different layers of audio, I really needed to pay close attention to detail for the code to work. Although connecting the audio IDs to JavaScript seems fairly straightforward, it was easy to make a small mistake that would later be difficult to detect.
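One way to catch this kind of small mistake early is to check, when the page loads, that every ID the script expects actually exists. The sketch below is only a suggestion and was not part of the project as described; the helper name `findMissingIds` and the IDs in the list are hypothetical.

```javascript
// Return every expected ID for which the lookup function finds nothing.
// `lookup` is passed in so the check does not depend on a browser.
function findMissingIds(expectedIds, lookup) {
  return expectedIds.filter((id) => !lookup(id));
}

// In the browser, look IDs up in the page and warn about any that are absent.
if (typeof document !== "undefined") {
  const expected = ["underbeat", "sound-car", "sound-plastic-bag"]; // hypothetical IDs
  const missing = findMissingIds(expected, (id) => document.getElementById(id));
  if (missing.length > 0) {
    console.warn("No element found for: " + missing.join(", "));
  }
}
```

A misspelled ID then shows up as a console warning on load instead of as a sound that silently never plays.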

Conclusion

Overall, I am very pleased with the outcome of this project. Both my partner and I were a bit concerned about our project's lack of interaction after the peer feedback we received last Thursday; however, this pushed us to reevaluate how we could improve the user experience, which helped strengthen the communication of this topic.