I am working on my capstone theme, urbicide, as the topic of my final project for this class. The project acts as a virtual gallery that displays my machine learning artworks.

Capstone Project: Synthetic Dystopia presentation slides: https://www.canva.com/design/DAE_YYsMXqE/zlcBhkZ1VstKkZyectYr-A/view?utm_content=DAE_YYsMXqE&utm_campaign=designshare&utm_medium=link2&utm_source=sharebutton

Act I of Synthetic Dystopia is a Decay Converter that literally converts an interior space into its decayed form. Given an image of the space before decay, the converter can produce the ongoing, intermediate stages of decay as well as the projected destruction in the future, and it can also work in reverse. Ideally, the visual output of this converter would be a data sculpture or data painting, presented in a digital frame hung inside the very room whose decay it generates. It is meant to impart a sense of distress and imminence to the audience by prophesying the fate of our surroundings in a state of urban decay. I decided to use particle systems to stylize the generated interpolation video because I think particles are a good metaphor for machine learning art. Since a generated image is represented in the ML model by a 512-dimensional latent vector, each pixel can be read as a piece of data. Just like the particles, the data is constantly transforming, twisting, turning, and pushing into infinity.

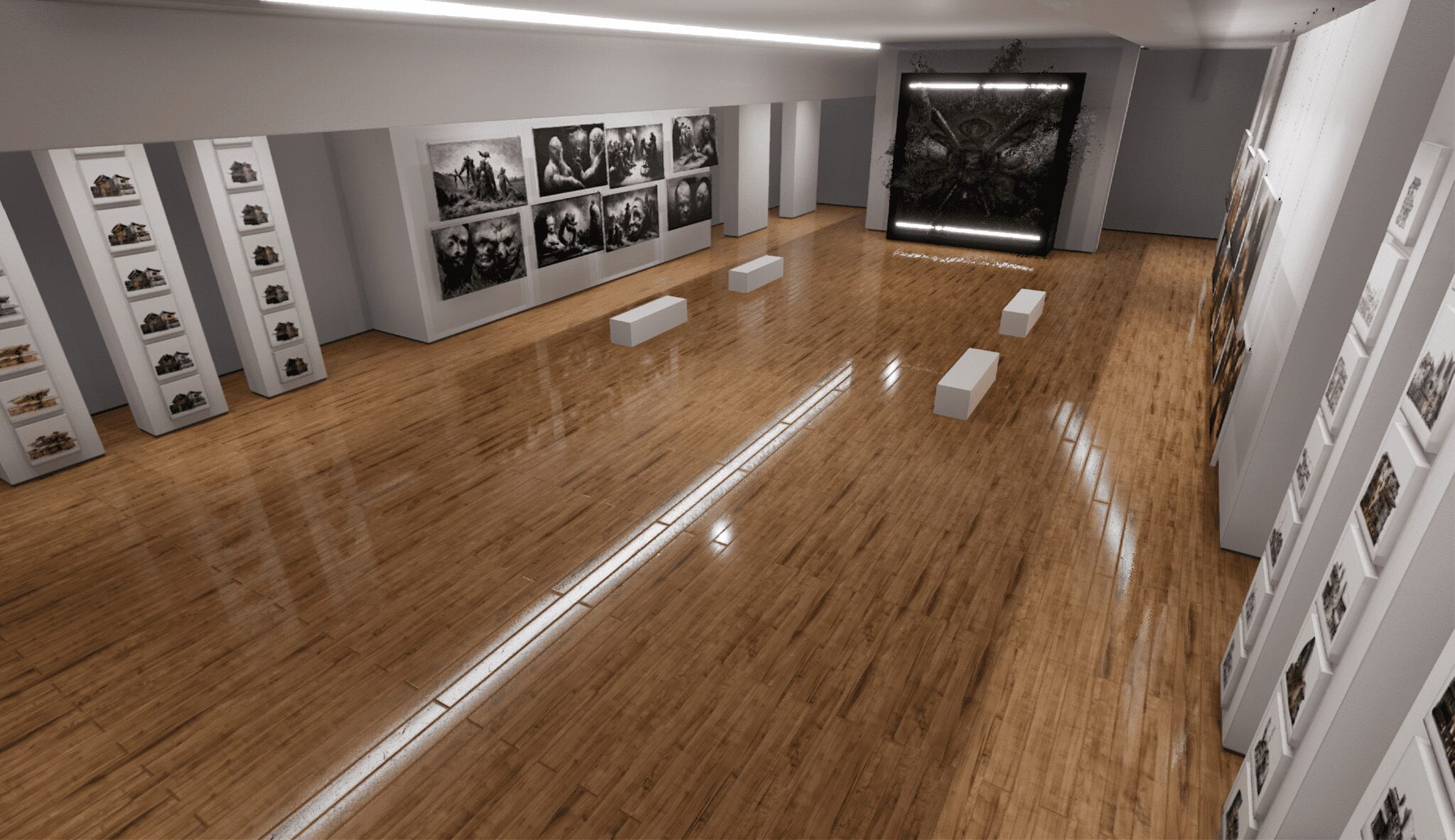
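The interpolation video behind the Decay Converter can be illustrated with a minimal sketch. This is not necessarily the pipeline used for the project: it assumes a StyleGAN-style generator with a 512-dimensional latent space and only shows how a path of latent vectors between a pre-decay image and its decayed form could be computed with spherical linear interpolation (slerp). The names `slerp`, `interpolation_path`, `z_predecay`, and `z_decayed` are hypothetical, the two latents are random stand-ins for real images, and the generator that would turn each vector into a video frame is left out.

```python
import math
import random

LATENT_DIM = 512  # assumed latent size of a StyleGAN-style generator


def slerp(a, b, t):
    """Spherical linear interpolation between latent vectors a and b, t in [0, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to guard against rounding errors pushing the cosine outside [-1, 1]
    cos_omega = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    omega = math.acos(cos_omega)
    if omega < 1e-6:
        # Nearly parallel vectors: fall back to plain linear interpolation
        return [(1.0 - t) * x + t * y for x, y in zip(a, b)]
    sin_omega = math.sin(omega)
    w_a = math.sin((1.0 - t) * omega) / sin_omega
    w_b = math.sin(t * omega) / sin_omega
    return [w_a * x + w_b * y for x, y in zip(a, b)]


def interpolation_path(z_start, z_end, n_frames):
    """Return n_frames latent vectors running from z_start to z_end (inclusive)."""
    return [slerp(z_start, z_end, i / (n_frames - 1)) for i in range(n_frames)]


# Hypothetical stand-ins for the latents of a pre-decay and a decayed image
rng = random.Random(0)
z_predecay = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
z_decayed = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]

frames = interpolation_path(z_predecay, z_decayed, 60)
print(len(frames), len(frames[0]))  # 60 512
```

Swapping the arguments, as in `interpolation_path(z_decayed, z_predecay, 60)`, gives the "vice versa" direction from decayed back to intact. Slerp is used here instead of a straight line because Gaussian latents lie close to a sphere, and a linear path would pass through lower-norm vectors that the generator rarely sees.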

My original intention was to turn this Decay Converter into an immersive experience by porting my Unity project to a Looking Glass display. However, Looking Glass currently doesn't support VFX/particle systems or the High Definition Render Pipeline. Due to limited time and resources, I am also unable to build the digital frame physically. Instead, the immersive project is a virtual simulation of how the data sculpture would be displayed in an interior space. For Acts II and III of Synthetic Dystopia, I have created numerous ML image artworks and animations, which gave me the idea of turning the virtual space into a gallery that displays all of my artworks.

I chose Unreal Engine for two reasons. First, since I am a bad coder, I prefer working with Blueprints rather than scripts. More importantly, I want the virtual experience to be as realistic as possible, which is where Unreal has the advantage over Unity. However, I do agree with Oliver's suggestion to build the project in Unity so that it can be displayed on VRChat. I think this could be an excellent way to deliver the message of urbicide to a general audience.

The narrative and fictional context of the project aren't fully conveyed through these illustrations, so the themes of urbicide and desensitization to violence do not come through clearly. If I had more time, I would spend it exploring other forms of output. The idea I have in mind now is a realistic VR game environment in which the data sculpture could be displayed. The ideal output would be an immersive video art piece or a projection-mapping installation similar to the work of Ouchhh Studio.

In response to the question I posed during the presentation, "Can machines dream?", I was simply looking for others' opinions on this controversial subject. Here is a link to an article that briefly explains this subject. I know machine learning art is currently in a grey area where authorship and copyright remain ambiguous and problematic. As a student, I am simply using AI as a means of experimentation to create art, while also using AI's symbolic meaning to build a narrative context for my topic. AI technology and machine learning tools have been developing at an astonishing speed in recent years, so the techniques and programs I used for this project will likely be outdated within several months. The series of visual experiments in my capstone project is simply my learning process and my exploration of this cutting-edge technology.