1. Point Clouds

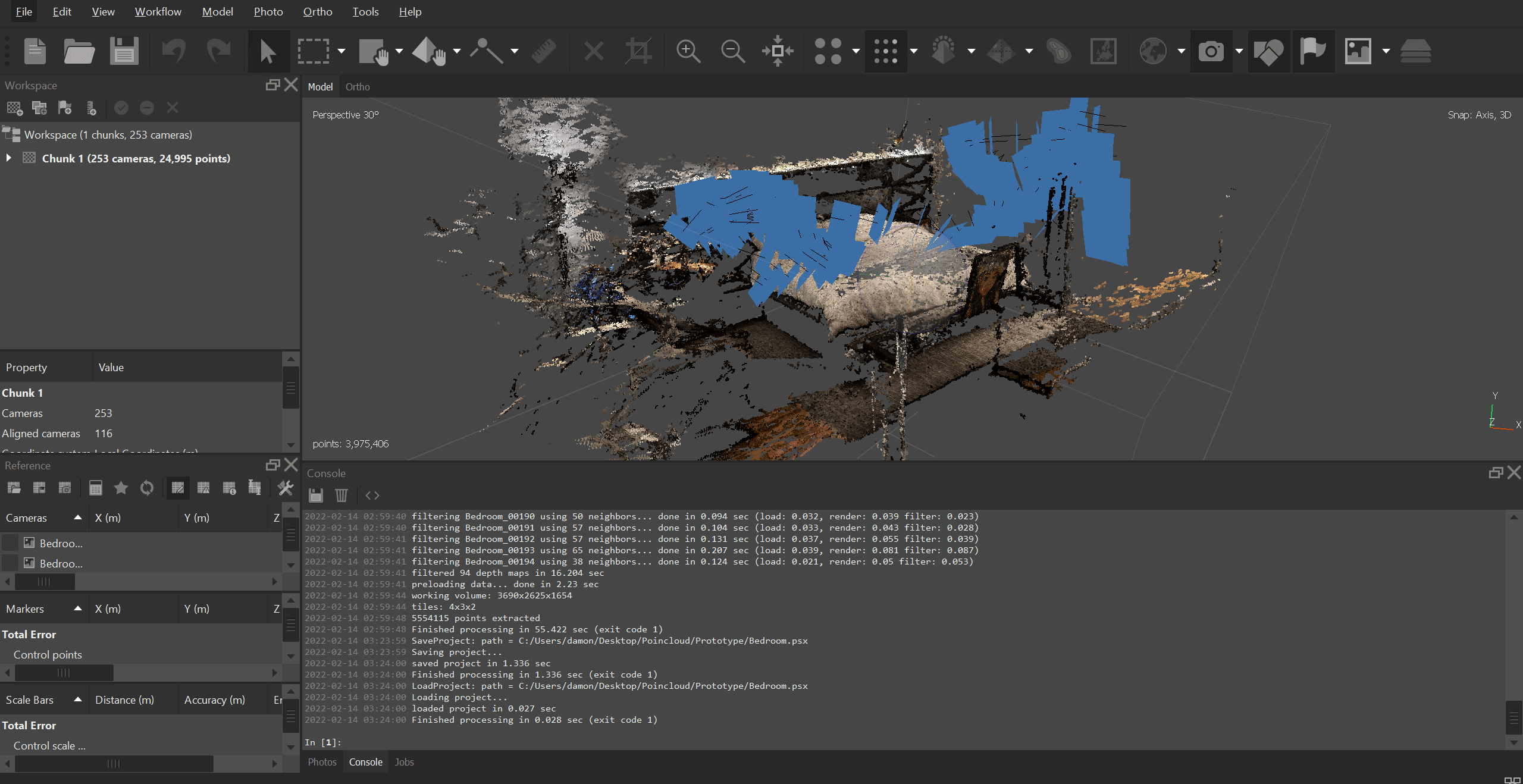

Photogrammetry using Agisoft Metashape

For my previous projects, I used a LiDAR scanner app on my iPad to capture point clouds. The scanned models are quite accurate, but the points are relatively sparse. Also, most apps use cloud processing to generate meshes instead of point cloud models. Canvas, the app I used before, charges an extra fee per scan and takes quite a long time to generate a model. SiteScape is able to generate point clouds, but only at low density. I need to try out more apps on my iPad and possibly connect an external scanner. More experimentation and research are also needed before I can decide whether LiDAR or photogrammetry gives the best result.

I did some research and found that most artists and professionals (including Rubenfro) use Agisoft Metashape for photogrammetry, so I decided to follow some tutorials and experiment with the software.

My first experimental scan was of my own apartment. Photogrammetry requires detailed photos of the object or scene from all directions and angles to generate an accurate model. Following the tutorial, I shot several 4K 60 fps videos of my living room and bedroom with my iPhone. I then exported them from After Effects as 2 fps PNG sequences, because according to the tutorial, importing thousands of photos would make the software take forever to process. However, the resulting point clouds looked sparse and broken, missing a lot of detail and accuracy.
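Exporting 60 fps footage at 2 fps keeps one frame out of every 30. The sketch below only illustrates that arithmetic; `framesToKeep` is a hypothetical helper, not how After Effects performs the export internally.

```javascript
// Hypothetical helper: which source frames survive when a clip shot at
// sourceFps is exported as an image sequence at targetFps.
function framesToKeep(durationSeconds, sourceFps, targetFps) {
  const totalFrames = Math.floor(durationSeconds * sourceFps);
  const step = sourceFps / targetFps; // 60 / 2 = every 30th frame
  const kept = [];
  for (let i = 0; i * step < totalFrames; i++) {
    // floor keeps every index strictly below totalFrames
    kept.push(Math.floor(i * step));
  }
  return kept;
}

// A 10-second clip at 60 fps exported at 2 fps gives 20 images.
const frames = framesToKeep(10, 60, 2);
console.log(frames.length, frames.slice(0, 4));
```

Lowering the export rate shrinks the image count linearly, but it also widens the gap between neighbouring views, which matters for how many images the software can align.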

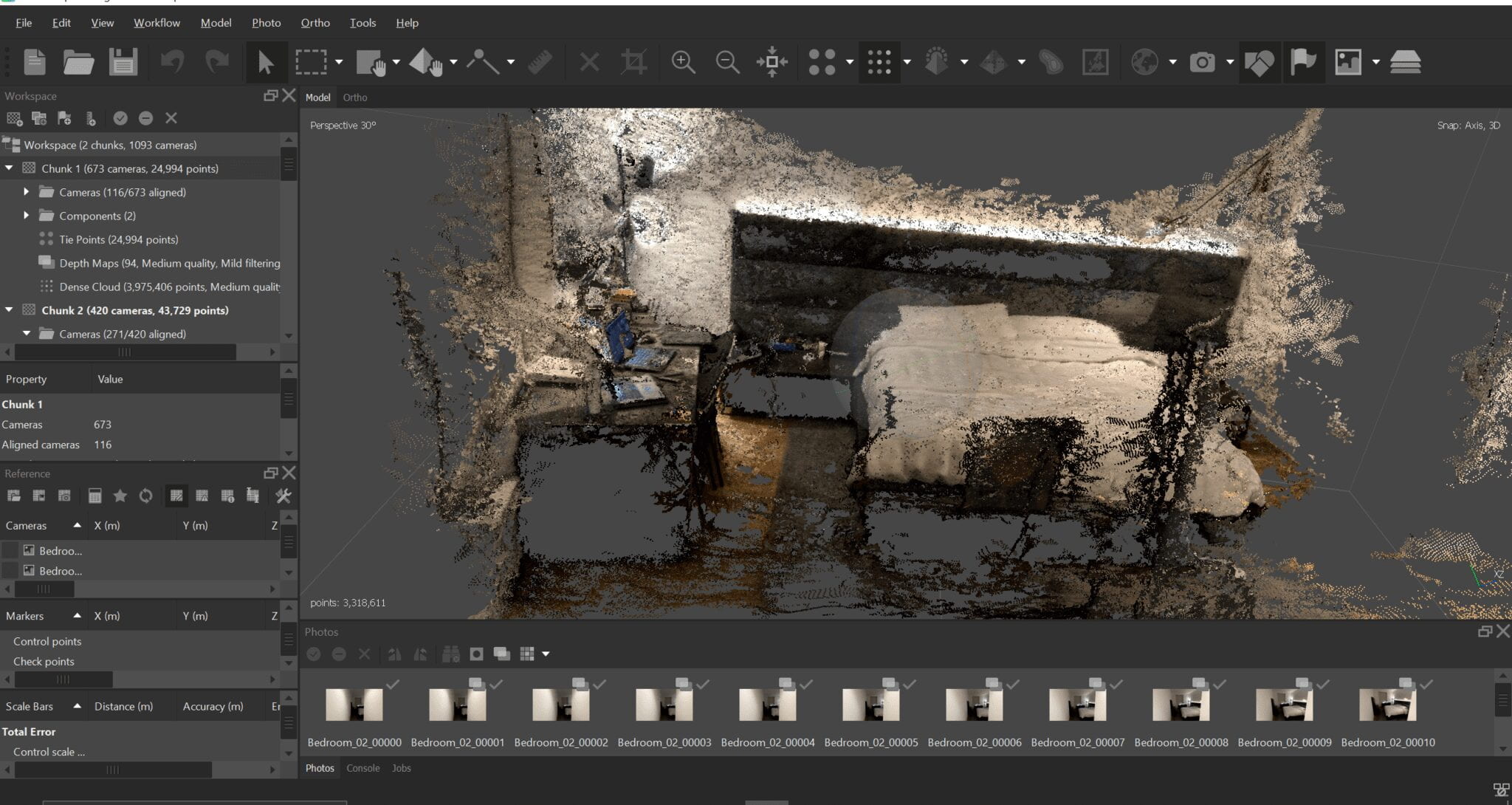

First Scan:

- only 116/673 cameras are aligned

- most images are unusable

- walls are not generated

- objects are broken

(The blue line is the predicted trajectory of all the “cameras”)

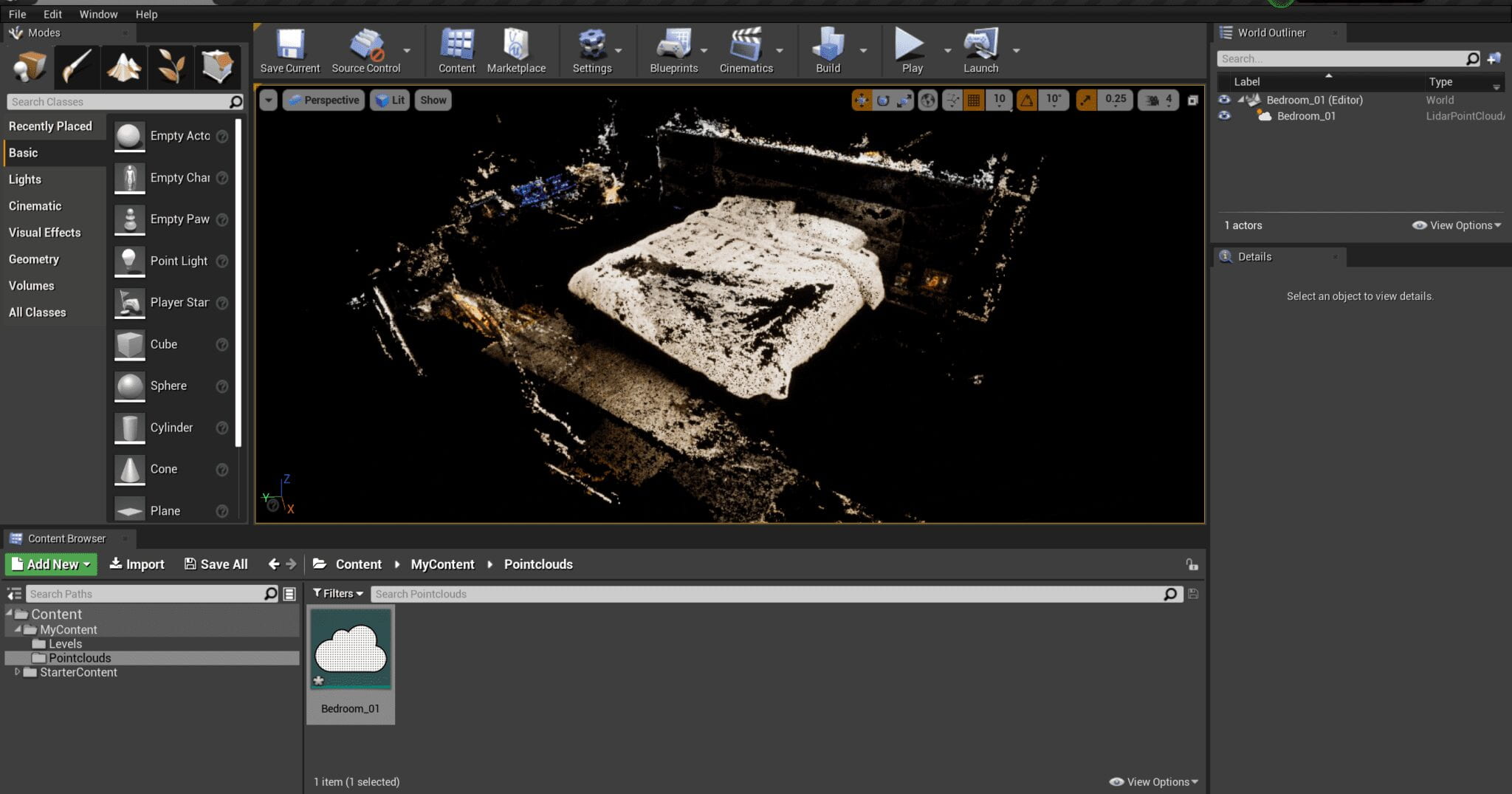

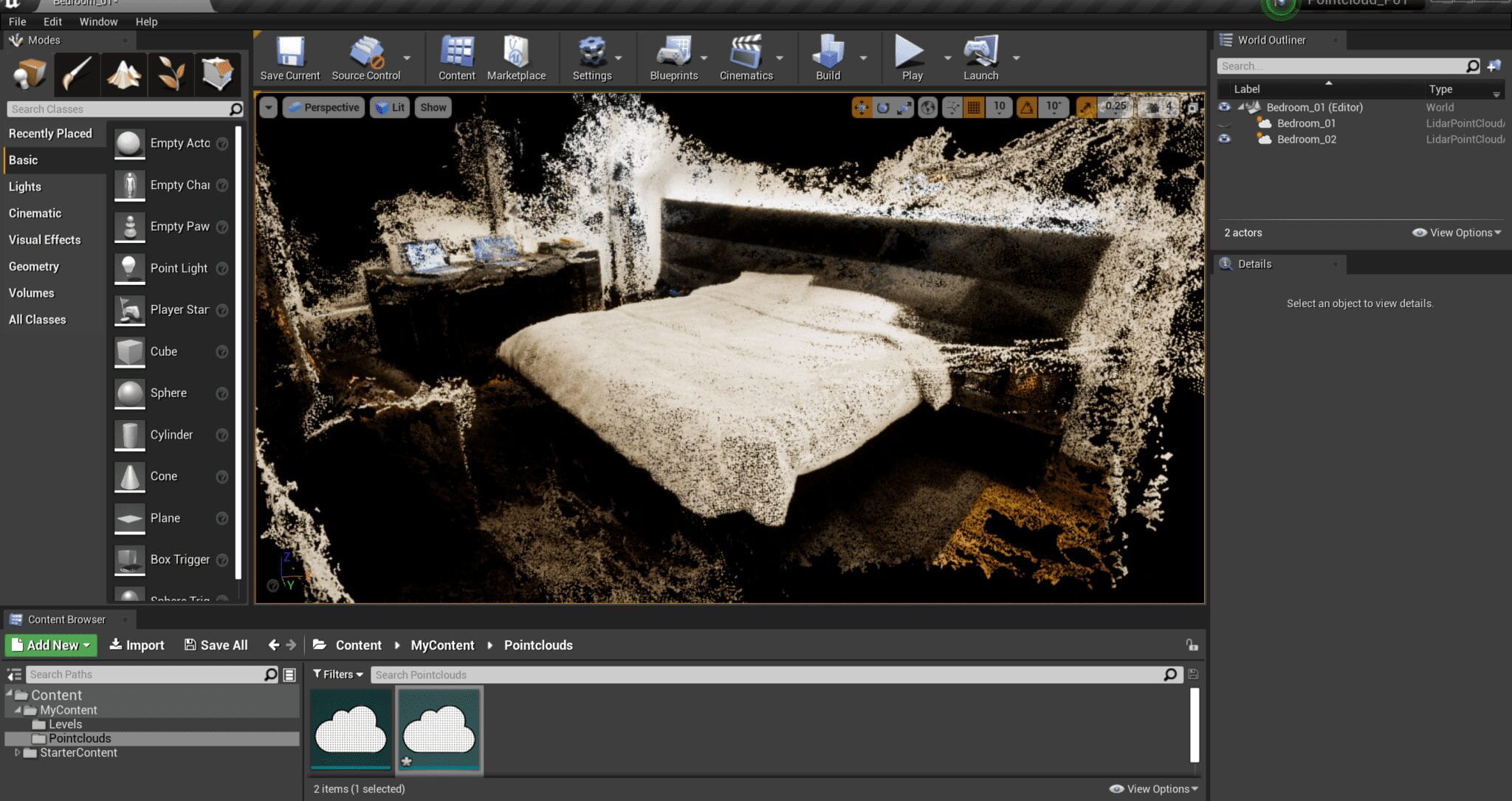

(I installed the point cloud plugin in Unreal Engine; this is the resulting model.)

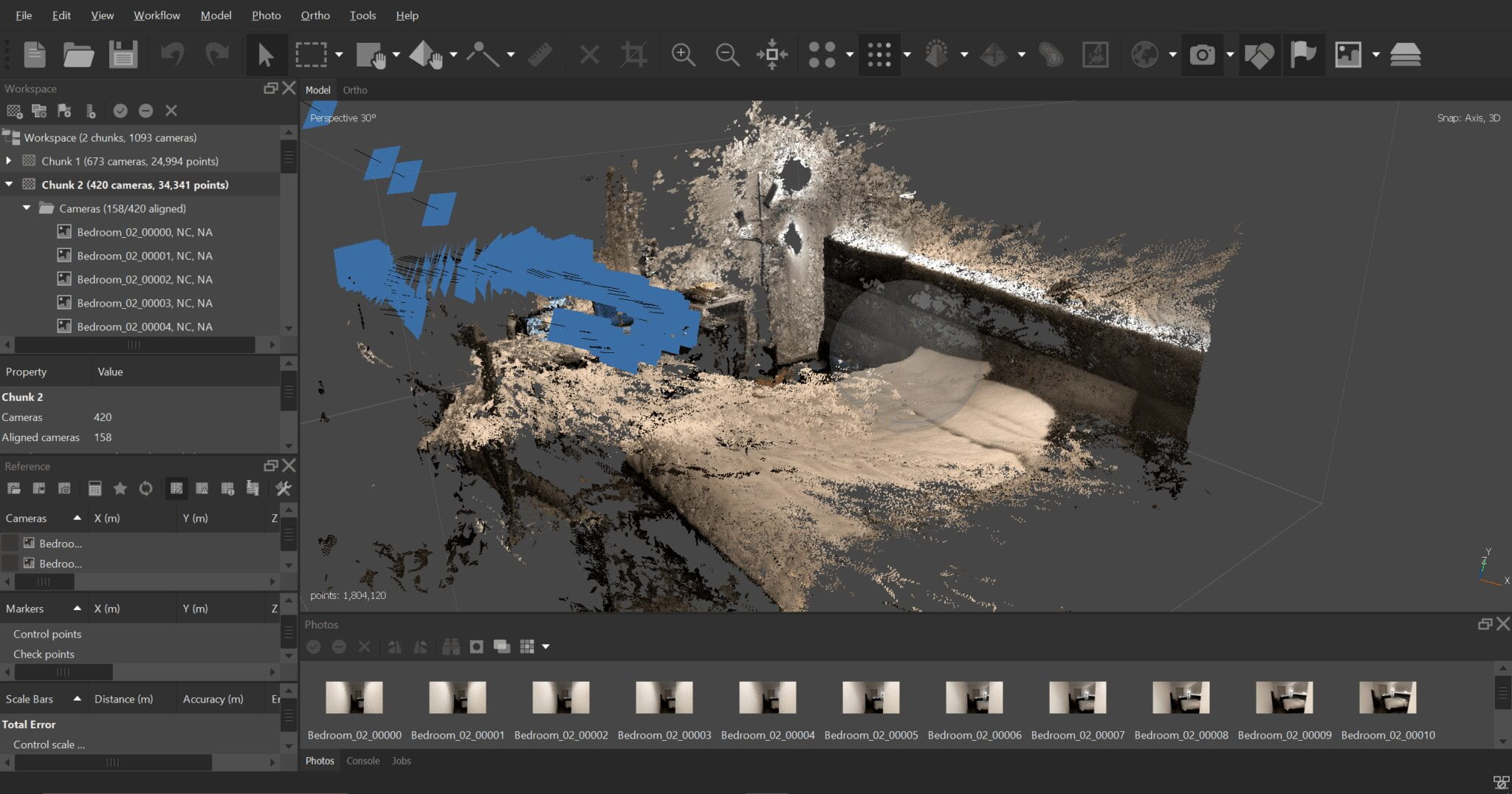

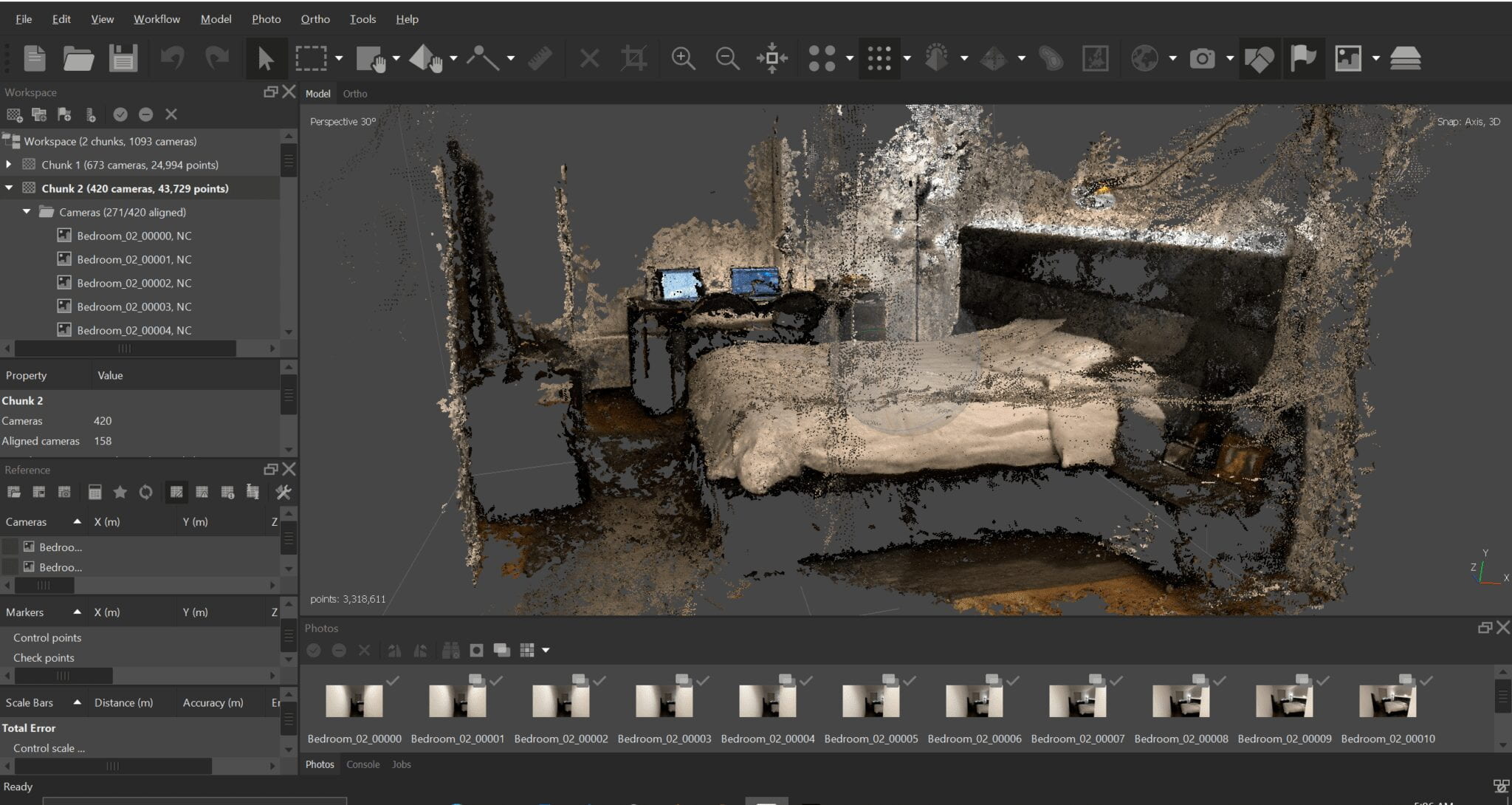

Second Scan:

I decided to reshoot the source video with slower camera rotation and the ultra-wide lens (0.5x zoom) to capture more information.

- 158/420 aligned

- half of the room is missing

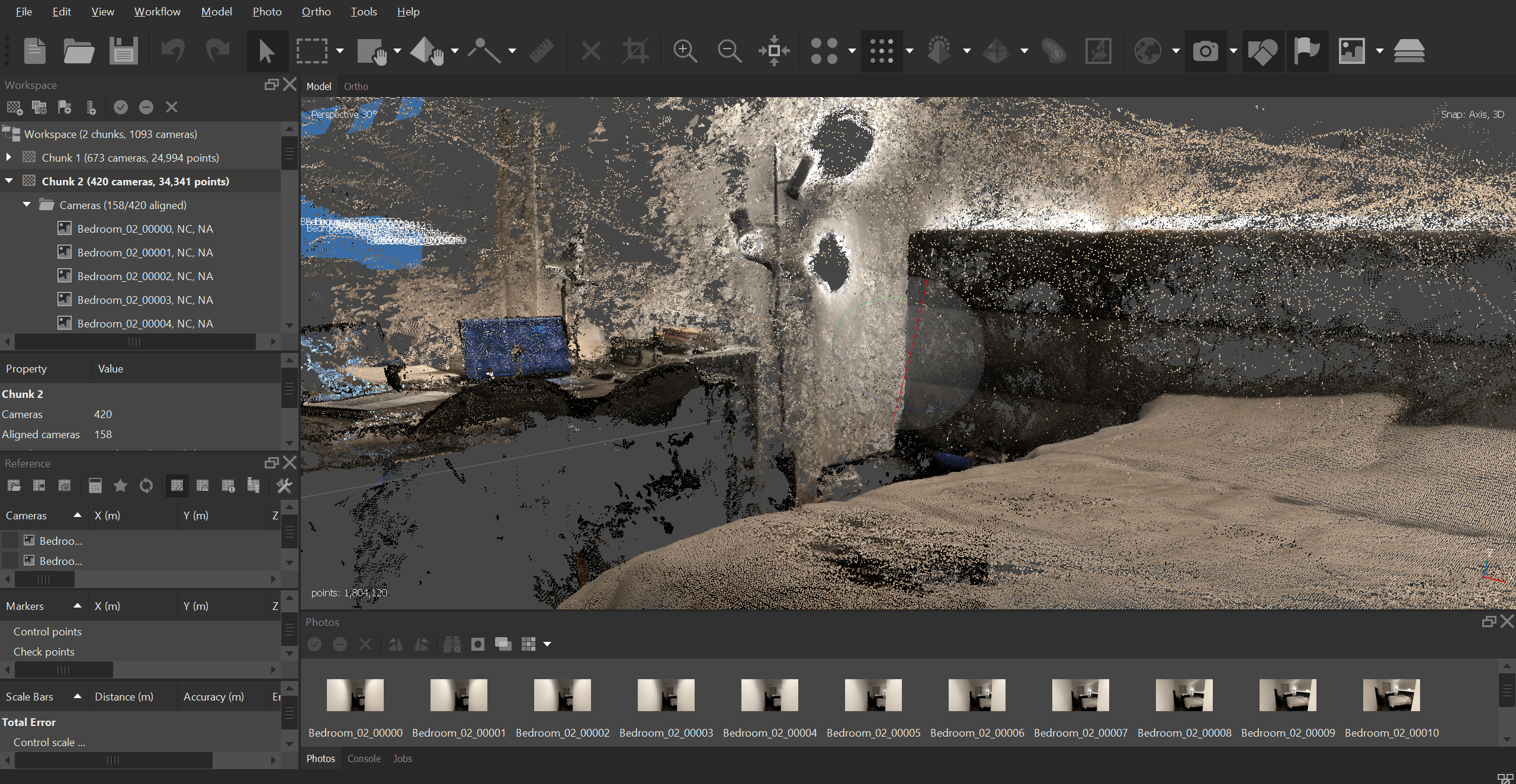

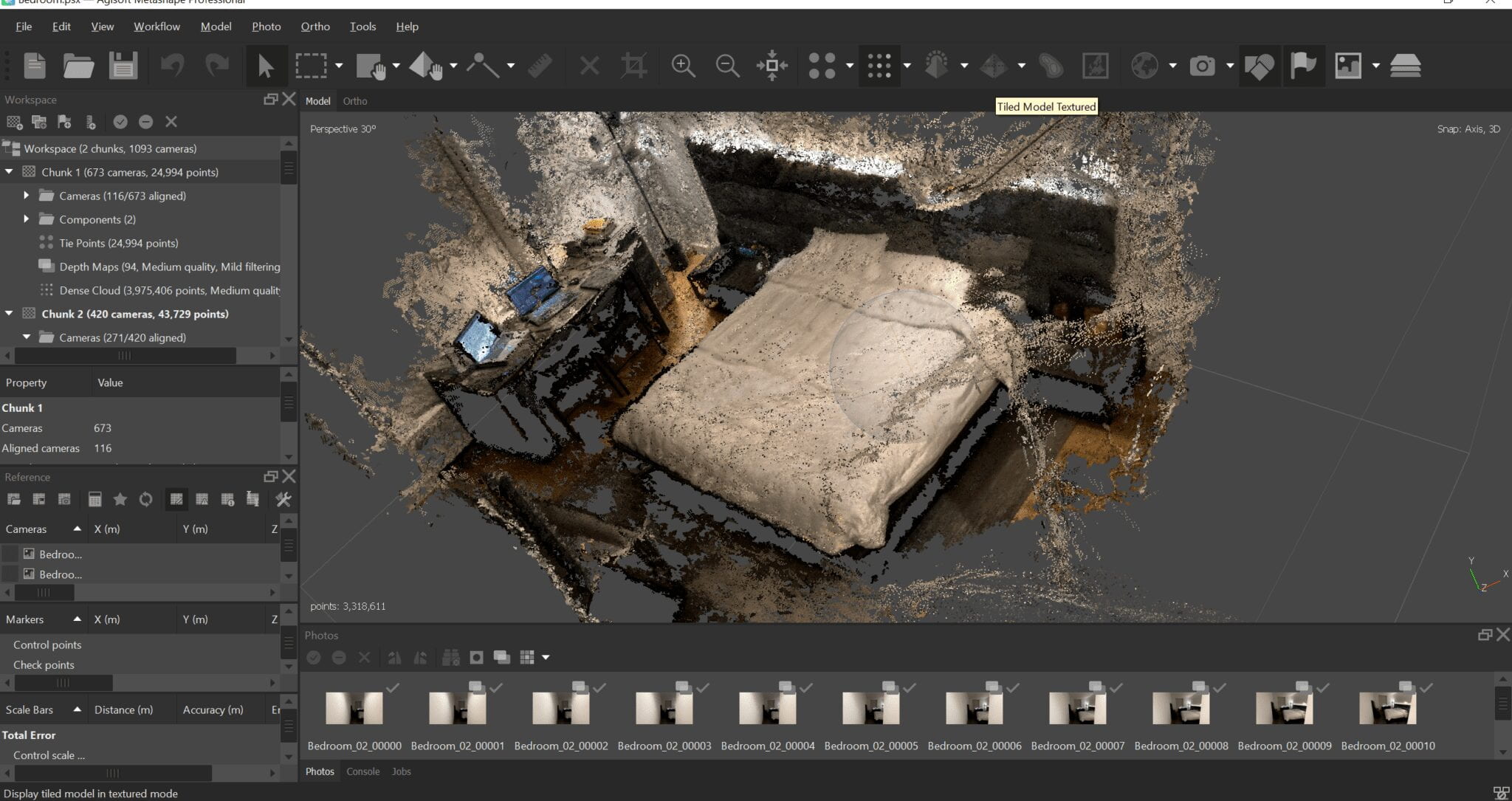

Third Scan:

Same input footage, adjusted settings:

- Align Photos: generic preselection, guided image matching, and adaptive camera model fitting checked; key point limit per Mpx: 40,000; tie point limit: 4,000

- Build Dense Cloud: calculate point confidence and calculate point colors checked; quality: medium

- 271/420 aligned
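Putting the reported counts side by side makes the progress easier to compare. This small sketch only reuses the numbers listed for the three scans above; nothing else is measured.

```javascript
// Aligned-camera counts reported for the three scans above.
const scans = [
  { name: "First scan", aligned: 116, total: 673 },
  { name: "Second scan", aligned: 158, total: 420 },
  { name: "Third scan", aligned: 271, total: 420 },
];

// Percentage of input images that Metashape managed to align.
function alignmentRate(scan) {
  return (100 * scan.aligned) / scan.total;
}

for (const scan of scans) {
  console.log(`${scan.name}: ${alignmentRate(scan).toFixed(1)}% aligned`);
}
// First scan: 17.2% aligned
// Second scan: 37.6% aligned
// Third scan: 64.5% aligned
```

Since the second and third scans share the same footage, the jump from 37.6% to 64.5% comes from the changed alignment settings alone.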

Visual Effects:

- Unity

  - Pcx plugin to render point clouds in Unity

  - Pcx separates the point cloud into position and color maps for VFX

  - Custom shader

- Unreal Engine

  - LiDAR point cloud plugin to render point clouds in Unreal

  - Niagara? FluidNinja?

  - Houdini point cloud plugin in Unreal: import the model into Houdini, apply effects, then transfer the cache to Unreal's Niagara
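The idea behind position and color maps is that every point becomes one texel in two textures, which a GPU particle system can then sample. The sketch below is only an illustration of that layout, not Pcx's code (Pcx bakes its maps inside Unity); the function name, the square texture size, and colors as 0 to 1 floats are assumptions.

```javascript
// Illustration only (not Pcx's implementation): pack a point cloud into two
// flat RGBA float buffers, one texel per point, like a "position map" and a
// "color map" that a VFX system could sample.
function bakePointCloud(points) {
  // Roughly square texture, large enough to hold every point.
  const width = Math.max(1, Math.ceil(Math.sqrt(points.length)));
  const height = Math.max(1, Math.ceil(points.length / width));
  const positionMap = new Float32Array(width * height * 4);
  const colorMap = new Float32Array(width * height * 4);

  points.forEach((p, i) => {
    const o = i * 4; // offset of texel i
    positionMap.set([p.x, p.y, p.z, 1], o); // alpha 1 marks a valid point
    colorMap.set([p.r, p.g, p.b, 1], o);
  });
  // Unused texels stay 0, so their alpha of 0 marks them as empty.
  return { width, height, positionMap, colorMap };
}

const baked = bakePointCloud([
  { x: 0, y: 1, z: 2, r: 1, g: 0, b: 0 },
  { x: 3, y: 4, z: 5, r: 0, g: 1, b: 0 },
  { x: 6, y: 7, z: 8, r: 0, g: 0, b: 1 },
]);
console.log(baked.width, baked.height); // 2 2
```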

2. Machine Learning Art

Ideas: stylized architecture generation using AI, and its various applications in digital art

RunwayML

StyleGAN Latent Space Walk Video:

I tried training my own StyleGAN model to produce generative abstract artworks or distinctive textures. I trained it on a dataset of 100 JPEG images of ink-in-milk textures.
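A latent space walk video is made by moving smoothly between latent vectors and rendering one generator output per step. The sketch below shows only the latent side (the generator call is left out, and it is not RunwayML's code); the 512-dimensional latent size is StyleGAN's usual default, and spherical interpolation (slerp) is one common choice rather than the only one.

```javascript
// Standard normal sample via the Box-Muller transform.
function randn() {
  const u = 1 - Math.random(); // in (0, 1], avoids log(0)
  const v = Math.random();
  return Math.sqrt(-2 * Math.log(u)) * Math.cos(2 * Math.PI * v);
}

// Random latent vector; 512 is the usual StyleGAN z size.
function randomLatent(size = 512) {
  return Array.from({ length: size }, randn);
}

// Spherical linear interpolation between latents a and b at t in [0, 1].
function slerp(a, b, t) {
  const norm = (v) => Math.sqrt(v.reduce((s, x) => s + x * x, 0));
  const dot = a.reduce((s, x, i) => s + x * b[i], 0);
  const cos = Math.min(1, Math.max(-1, dot / (norm(a) * norm(b))));
  const omega = Math.acos(cos);
  if (omega < 1e-6) return a.map((x, i) => x + t * (b[i] - x)); // nearly parallel: lerp
  const s = Math.sin(omega);
  const wa = Math.sin((1 - t) * omega) / s;
  const wb = Math.sin(t * omega) / s;
  return a.map((x, i) => wa * x + wb * b[i]);
}

// Looping walk through keyframe latents, framesPerStep frames between each pair.
function latentWalk(keyframes, framesPerStep) {
  const frames = [];
  for (let k = 0; k < keyframes.length; k++) {
    const a = keyframes[k];
    const b = keyframes[(k + 1) % keyframes.length]; // wrap back to the start
    for (let f = 0; f < framesPerStep; f++) {
      frames.push(slerp(a, b, f / framesPerStep));
    }
  }
  return frames;
}

// Three keyframes, 60 frames between each: 180 latents, one per video frame.
const walk = latentWalk([randomLatent(), randomLatent(), randomLatent()], 60);
console.log(walk.length); // 180
```

Slerp is often preferred over straight-line interpolation because Gaussian latents in high dimensions sit near a sphere, and a straight line between two of them passes through lower-norm vectors the generator rarely saw during training.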

Other Pretrained Models:

Google Colab

Process:

- Preparing datasets

  - Using Anaconda to manage the Python environment

  - Using instagram-scraper and flickr-scraper for dataset collection

  - Using dataset-tools for dataset normalization

- Training the model

- Testing the model
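Normalization makes every image the same shape before training. StyleGAN2-ADA's dataset tool, for example, expects square images with power-of-two sides. The sketch below computes only the geometry of a center crop and a target size; the helper names are hypothetical and not part of dataset-tools, and the pixel resampling itself would be done by an image library.

```javascript
// Center-crop rectangle that turns a width x height image into a square.
function centerSquareCrop(width, height) {
  const side = Math.min(width, height);
  return {
    x: Math.floor((width - side) / 2),
    y: Math.floor((height - side) / 2),
    size: side,
  };
}

// Largest power of two that fits in `side`, capped at maxSize.
function powerOfTwoSize(side, maxSize = 1024) {
  let size = 1;
  while (size * 2 <= Math.min(side, maxSize)) size *= 2;
  return size;
}

const crop = centerSquareCrop(4032, 3024); // e.g. a landscape phone photo
console.log(crop, powerOfTwoSize(crop.size, 512));
// { x: 504, y: 0, size: 3024 } 512
```

Cropping from the center keeps the middle of each texture photo; a different crop position would suit subjects that are not centered.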

Tutorials followed:

- Train your own model with Google Colab & Runway ML

  https://www.youtube.com/playlist?list=PLWuCzxqIpJs9E1XQ8x5ys2mcPApqy6zxs

- ML art with Google Colab

  https://www.youtube.com/playlist?list=PLWuCzxqIpJs8PN_bY5FON5mFqZ0I9QoSG

- Advanced StyleGAN

  https://www.youtube.com/watch?v=AMDPvOd3hIE&ab_channel=ArtificialImages

- Experimental Film with Machine Learning, Python, and p5.js

  https://www.youtube.com/watch?v=lN_dSl4V1BI&ab_channel=ArtificialImages

Inspirations:

Cyberfish by AvantContra

AvantStyleGan+CLIP

Possible Tools:

- StyleGAN3

  - StyleGAN3 style transfer based on Wombo Dream

  - StyleGAN3 visualizer and OSC control

    - Real-time control via PoseNet

    - Real-time control from external apps, such as Blender or custom phone apps

    - Audio reactivity

- Holography

  - https://github.com/nosy-b/holography

  - WebXR and deep learning using TensorFlow to create holograms on the fly. It works on Android with the latest Chrome.

- t-SNE map

- Generated images from StyleGAN to point clouds

  - https://vergazova.com/en/floating-utopia-act-2-new/

- Generated image to video

  - 3D Photography using Context-aware Layered Depth Inpainting

  - https://shihmengli.github.io/3D-Photo-Inpainting/
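Turning a generated image into a point cloud needs a depth value per pixel. Given one, each pixel can be back-projected with a pinhole camera model. This is a sketch under stated assumptions: the depth map is assumed to come from elsewhere (for example a monocular depth-estimation model), the focal length is a guess because a generated image has no real camera, and the principal point is taken as the image center.

```javascript
// Back-project an RGB image plus a per-pixel depth map into colored 3D points
// using a pinhole camera. rgb is a flat [r, g, b, r, g, b, ...] array and
// depth a flat array of width * height values (image y axis points down).
function imageToPointCloud(width, height, rgb, depth, focalPx) {
  const cx = width / 2; // assumed principal point: image center
  const cy = height / 2;
  const points = [];
  for (let v = 0; v < height; v++) {
    for (let u = 0; u < width; u++) {
      const i = v * width + u;
      const z = depth[i];
      if (!(z > 0)) continue; // skip pixels without a valid depth
      points.push({
        x: ((u - cx) * z) / focalPx,
        y: ((v - cy) * z) / focalPx,
        z,
        r: rgb[i * 3],
        g: rgb[i * 3 + 1],
        b: rgb[i * 3 + 2],
      });
    }
  }
  return points;
}

// 2x2 test image; the last pixel has depth 0 and is dropped.
const points = imageToPointCloud(
  2, 2,
  [255, 0, 0, 0, 255, 0, 0, 0, 255, 255, 255, 255],
  [1, 1, 2, 0],
  1
);
console.log(points.length); // 3
```

The resulting array has the same position-plus-color shape as a photogrammetry point cloud, so it could feed the same rendering plugins listed in the point cloud section.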

3. Interactive Real-Time Gesture for StyleGAN

Handpose Model Experimentation:

https://editor.p5js.org/hnc247/sketches/pEnrjusYC

I turned the handpose example into a regression model. Inspired by the color pose regression tutorial, I trained it to output a background color. I added two more inputs (the x and y coordinates of the middle finger) so that each of the three fingers controls one channel of the background color: the middle finger controls green, the index finger blue, and the thumb red.
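The six regression inputs are the x and y positions of three fingertips. A minimal sketch of extracting them, assuming the prediction format of ml5's handpose model (v0.x), where `annotations.thumb`, `annotations.indexFinger`, and `annotations.middleFinger` each hold four `[x, y, z]` points with the fingertip last. The input order is my own choice, and the mock prediction is made up for testing.

```javascript
// Six regression inputs from one handpose prediction:
// [thumbX, thumbY, indexX, indexY, middleX, middleY].
// Assumes annotations[finger][3] is the fingertip [x, y, z] point.
function fingertipInputs(prediction) {
  const tip = (finger) => prediction.annotations[finger][3];
  const [thumbX, thumbY] = tip("thumb");
  const [indexX, indexY] = tip("indexFinger");
  const [middleX, middleY] = tip("middleFinger");
  return [thumbX, thumbY, indexX, indexY, middleX, middleY];
}

// Made-up prediction containing only the fields used above.
const tipAt = (x, y) => [[0, 0, 0], [0, 0, 0], [0, 0, 0], [x, y, 0]];
const mockPrediction = {
  annotations: {
    thumb: tipAt(100, 200),
    indexFinger: tipAt(150, 120),
    middleFinger: tipAt(180, 110),
  },
};
console.log(fingertipInputs(mockPrediction)); // [100, 200, 150, 120, 180, 110]
```

During data collection, an array like this would be paired with the current target color, for example through ml5's `addData(inputs, target)`.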

I first trained the system by moving all three fingers simultaneously while changing all three RGB values together, to check whether the model worked at all. The result seemed fairly accurate: the background lit up when my fingers were spread apart and dimmed when they were close together.

Then I recorded and saved training data while moving each finger separately, so that the middle finger controls green, the index finger blue, and the thumb red. To cover all colors, I recorded every combination of finger positions, which took quite a while.
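Recording every combination takes a long time because the count grows with the cube of the number of levels per channel. In this sketch the number of levels is an assumption for illustration, and `colorScenarios` is a hypothetical helper.

```javascript
// Every (r, g, b) target when each channel takes `levels` evenly spaced
// values from 0 to 255; levels ** 3 combinations in total.
function colorScenarios(levels) {
  if (levels < 2) throw new RangeError("need at least 2 levels per channel");
  const values = Array.from({ length: levels }, (_, i) =>
    Math.round((255 * i) / (levels - 1))
  );
  const scenarios = [];
  for (const r of values) {
    for (const g of values) {
      for (const b of values) {
        scenarios.push({ r, g, b });
      }
    }
  }
  return scenarios;
}

console.log(colorScenarios(3).length); // 27
console.log(colorScenarios(5).length); // 125
```

Going from three levels per channel to five multiplies the recording work by more than four, which is why a coarse grid is a reasonable starting point.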

It worked before I saved the data and the model, but after I added the model-loading function, the system stopped working entirely. I suspect something is wrong with my brainLoaded function.
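One common cause of this symptom, offered as an assumption since the sketch code is not shown here, is calling `predict()` before the asynchronous load has finished, or passing `brainLoaded()` (the result of calling it) instead of `brainLoaded` as the callback. The sketch below guards predictions with a ready flag. The `load()` options object follows the three files ml5's neural network `save()` writes, but the `model/` folder path is an assumption, and a stub stands in for ml5 so the pattern runs on its own.

```javascript
// Set to true only once the trained model has finished loading.
let modelReady = false;

// Callback passed to load(); runs after the files are loaded.
function brainLoaded() {
  console.log("model loaded");
  modelReady = true;
}

function loadBrain(brain) {
  // Assumed paths to the files saved by the training sketch.
  brain.load(
    {
      model: "model/model.json",
      metadata: "model/model_meta.json",
      weights: "model/model.weights.bin",
    },
    brainLoaded // pass the function itself, not brainLoaded()
  );
}

// Called every frame (e.g. from draw()); does nothing until the model is ready.
function maybePredict(brain, inputs, gotResults) {
  if (!modelReady) return false;
  brain.predict(inputs, gotResults);
  return true;
}

// Stub standing in for the ml5 neural network so this file runs by itself.
// Unlike the real load(), it calls back immediately.
const stubBrain = {
  load(files, callback) { callback(); },
  predict(inputs, callback) { callback(null, []); },
};
```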

Training a handpose regression model:

Basically, the idea is to control the R, G, and B values of the background color with three fingers. Although I got it working, last week I ran into an issue: the model could not be deployed after saving and loading it. So this week, I recollected all the data, retrained the model, and split the process into three sketches:

Data Collection & Saving: https://editor.p5js.org/hnc247/sketches/ssdVrvWJk

Training Data & Saving the Trained Model: https://editor.p5js.org/hnc247/sketches/qF_S-lqtm

Loading and Deploying the Trained Model: https://editor.p5js.org/hnc247/sketches/3t0iLdnIt

I redid the whole process and the same issue came up: the editor stopped responding and crashed.

Fixed! The fingertip coordinate variables are now initialized to 0:

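```javascript
// Fingertip coordinates, initialized to 0 so they hold numbers
// before the first hand is detected.
let thumbX = 0;
let thumbY = 0;
let indexX = 0;
let indexY = 0;
let middleX = 0;
let middleY = 0;
```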
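A variable declared without a value is `undefined`, and passing undefined inputs to the model is a plausible (assumed, not confirmed) way for prediction to break; starting the coordinates at 0 avoids that. As an extra safeguard, a small check like this hypothetical `inputsAreValid` helper could run before each prediction.

```javascript
// True only when there are exactly six inputs and all are finite numbers.
function inputsAreValid(inputs) {
  return inputs.length === 6 && inputs.every((v) => Number.isFinite(v));
}

let unsetX; // declared without a value, so it is undefined
console.log(inputsAreValid([unsetX, 0, 0, 0, 0, 0])); // false
console.log(inputsAreValid([0, 0, 0, 0, 0, 0])); // true
```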