Model Training

Collect your own image or text dataset and train a generative model in RunwayML. Render a latent walk video of the output (for images) or produce text output samples (for text):

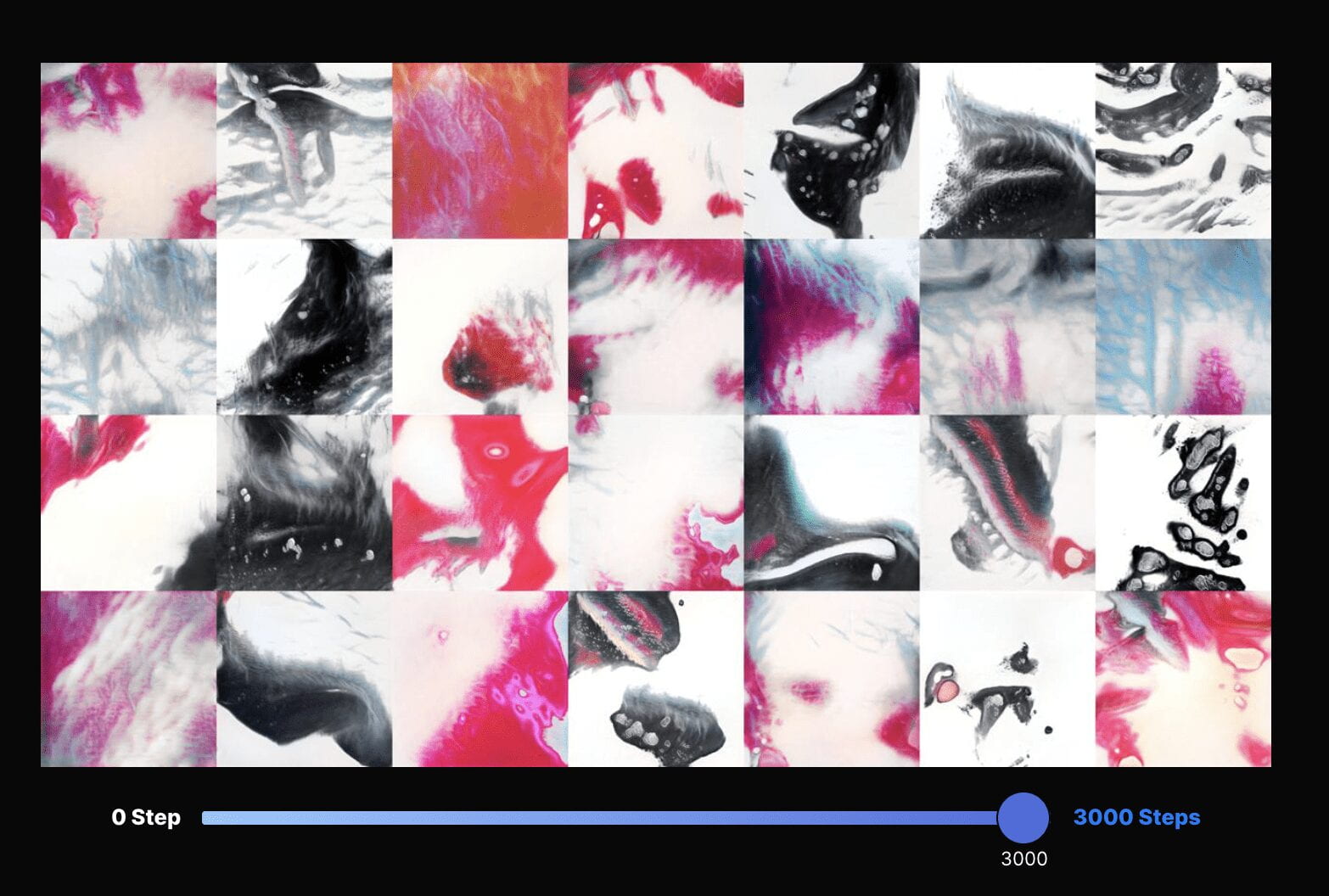

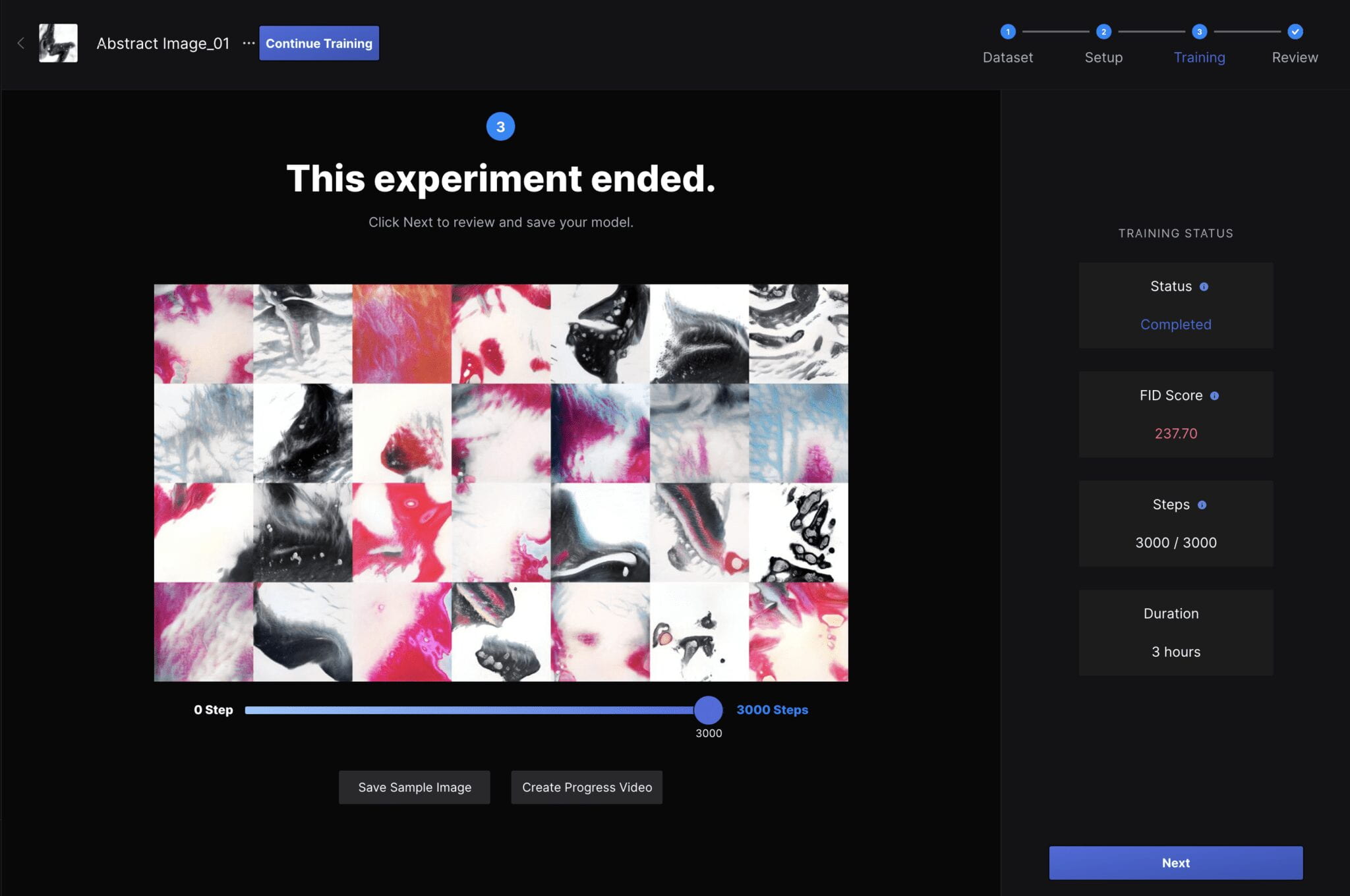

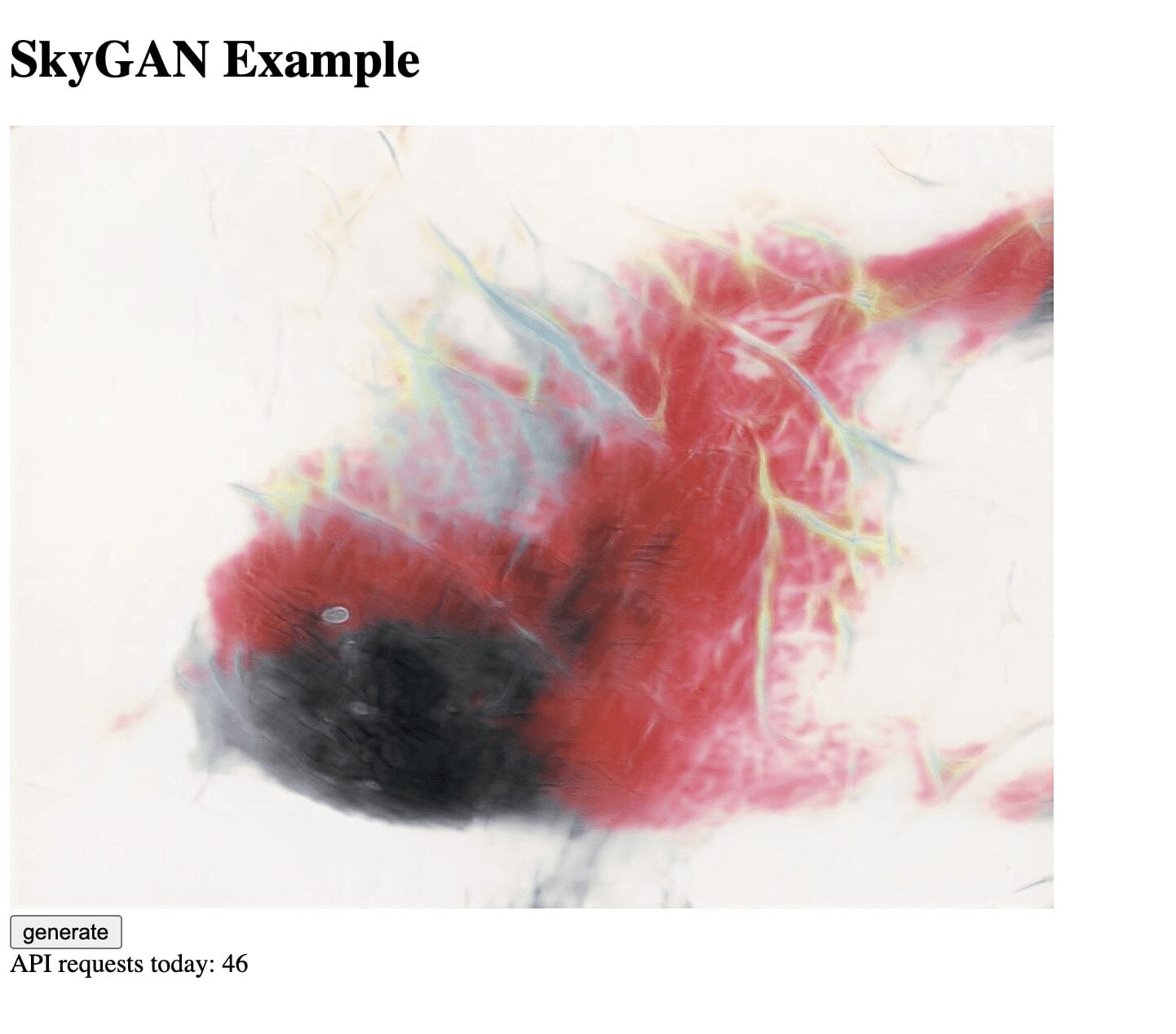

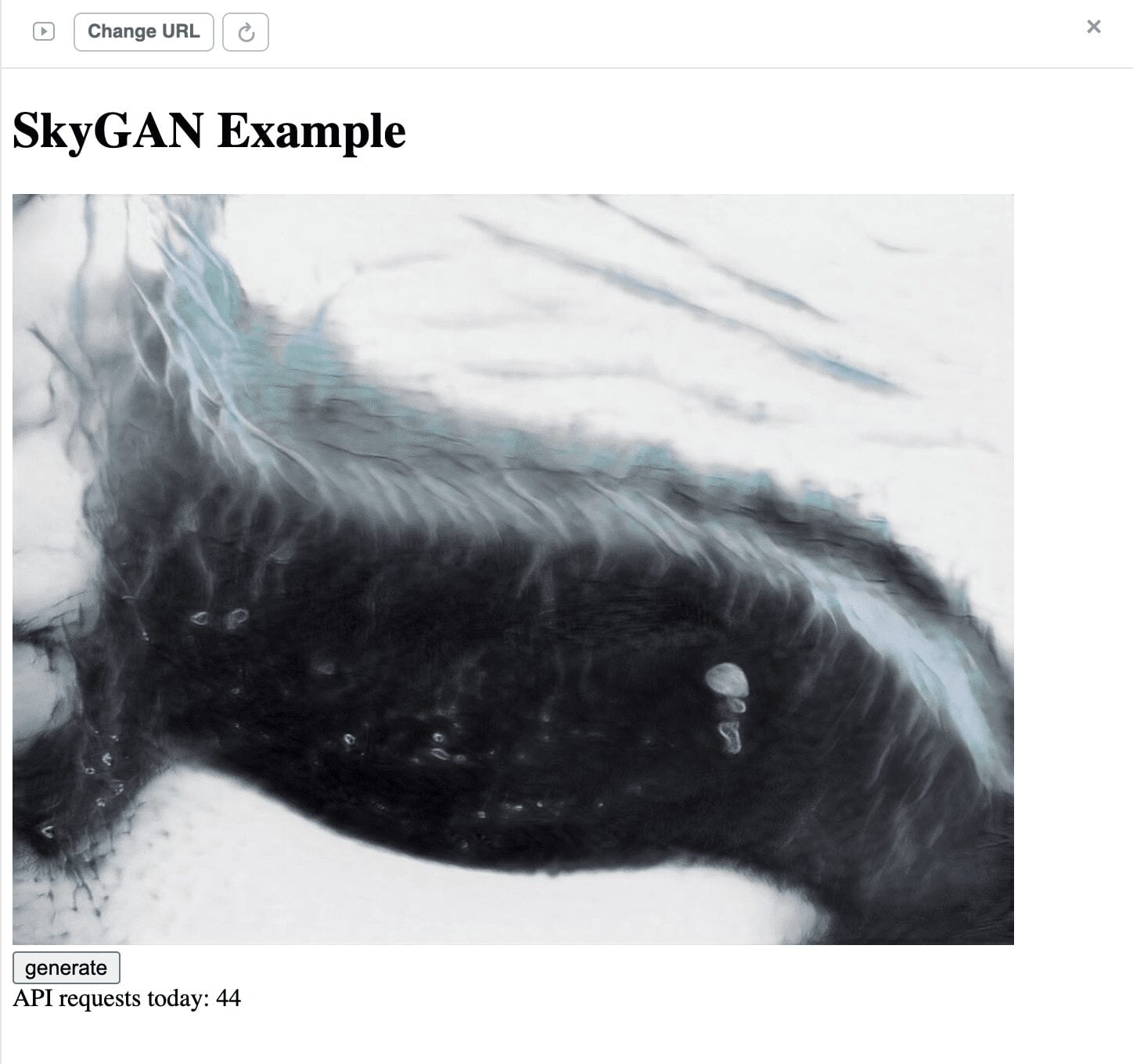

I set out to train my own StyleGAN model to generate abstract artworks or distinctive textures. The training dataset consists of 100 JPEG images of ink-in-milk textures.

Since the model requires square images, I selected the center crop option for preprocessing. I didn't select the random crop option because the images are already distinct from one another. As the starting point, I selected the Illustration pre-trained model. I assume picking a different pre-trained model wouldn't make much difference.
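A center crop keeps the largest square centered in each image and trims the excess from the longer side. The sketch below computes that square in plain Python. It is an assumption about what RunwayML's option does, since its internals aren't documented here.

The returned tuple uses the (left, upper, right, lower) order that Pillow's `Image.crop` accepts. The helper names are hypothetical. For contrast, `random_crop_box` picks a random square of a given side instead.

```python
import random
from typing import Tuple

# Hypothetical alias: (left, upper, right, lower) pixel coordinates.
Box = Tuple[int, int, int, int]


def center_crop_box(width: int, height: int) -> Box:
    """Return the largest square centered in a width x height image."""
    side = min(width, height)
    left = (width - side) // 2   # equal trim on the left and right
    top = (height - side) // 2   # equal trim on the top and bottom
    return (left, top, left + side, top + side)


def random_crop_box(width: int, height: int, side: int, rng: random.Random) -> Box:
    """Return a square of the given side at a random position inside the image."""
    if side > min(width, height):
        raise ValueError("crop side is larger than the image")
    # randint is inclusive on both ends, so the crop always stays in bounds.
    left = rng.randint(0, width - side)
    top = rng.randint(0, height - side)
    return (left, top, left + side, top + side)
```

For a 1920×1080 photo, `center_crop_box(1920, 1080)` returns `(420, 0, 1500, 1080)`, trimming 420 pixels from each side.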

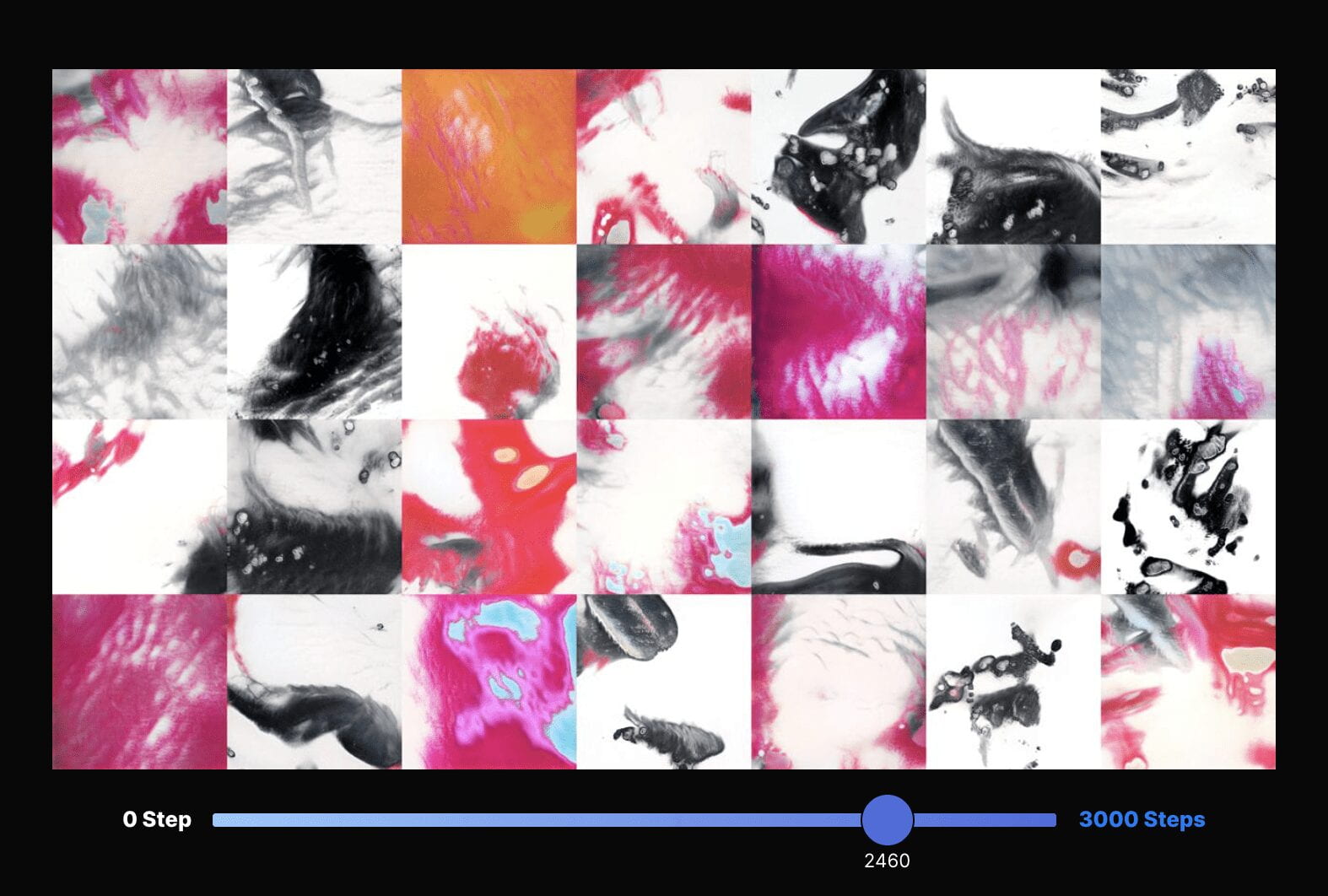

I set the number of training steps to 3000, which I believe is the most my account's credits allowed.

I noticed that the FID (Fréchet Inception Distance) score stayed extremely high even after training ended. A lower FID means the generated images are statistically closer to the training set, so this suggests the outputs still differ substantially from the original dataset. The score started above 300 and was still 237.7 when training finished. I wonder if that's due to the small number of images I trained the model with. If possible, I would try rotating, mirroring, or cropping different parts of each image to increase the amount of training data.
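For reference, the standard FID fits a Gaussian to Inception-v3 features of two image sets and measures the Fréchet distance between the two Gaussians. The real images have mean $\mu_r$ and covariance $\Sigma_r$, and the generated images have $\mu_g$ and $\Sigma_g$. This assumes RunwayML reports the standard version:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$

Lower is better, and identical feature statistics give 0. FID is also known to be biased upward when estimated from few samples.

If the score is computed against only the 100 training images, the sample covariance $\Sigma_r$ of the 2048-dimensional features has rank at most 99. Part of the high score may therefore reflect the small dataset itself, not only the quality of the generated images.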
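Rotating by multiples of 90 degrees and mirroring gives eight variants of each square image (the symmetries of a square). In principle, that would turn 100 photos into 800 training images. This assumes the textures look plausible in any orientation, which seems reasonable for ink in milk but is worth checking visually.

The sketch below demonstrates the idea on a toy grayscale image stored as nested lists. The `Image` alias and all helper names are hypothetical. A real pipeline would apply the same transforms with an image library such as Pillow before uploading the dataset. `sliding_crops` illustrates the "cropping different parts" idea by cutting squares from a larger image.

```python
from typing import List

# Hypothetical toy representation: a grayscale image as rows of pixel values.
Image = List[List[int]]


def rotate90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def mirror(img: Image) -> Image:
    """Flip the image horizontally."""
    return [row[::-1] for row in img]


def dihedral_variants(img: Image) -> List[Image]:
    """Return the 4 rotations of the image, each with and without a mirror flip."""
    variants = []
    current = img
    for _ in range(4):
        variants.append(current)
        variants.append(mirror(current))
        current = rotate90(current)
    return variants


def sliding_crops(img: Image, side: int, stride: int) -> List[Image]:
    """Cut side x side squares from the image, stepping `stride` pixels each time."""
    height, width = len(img), len(img[0])
    crops = []
    for top in range(0, height - side + 1, stride):
        for left in range(0, width - side + 1, stride):
            crops.append([row[left:left + side] for row in img[top:top + side]])
    return crops
```

Augmented copies are correlated with their originals, so they add less information than new photos would. They do give the model more varied examples to learn from.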

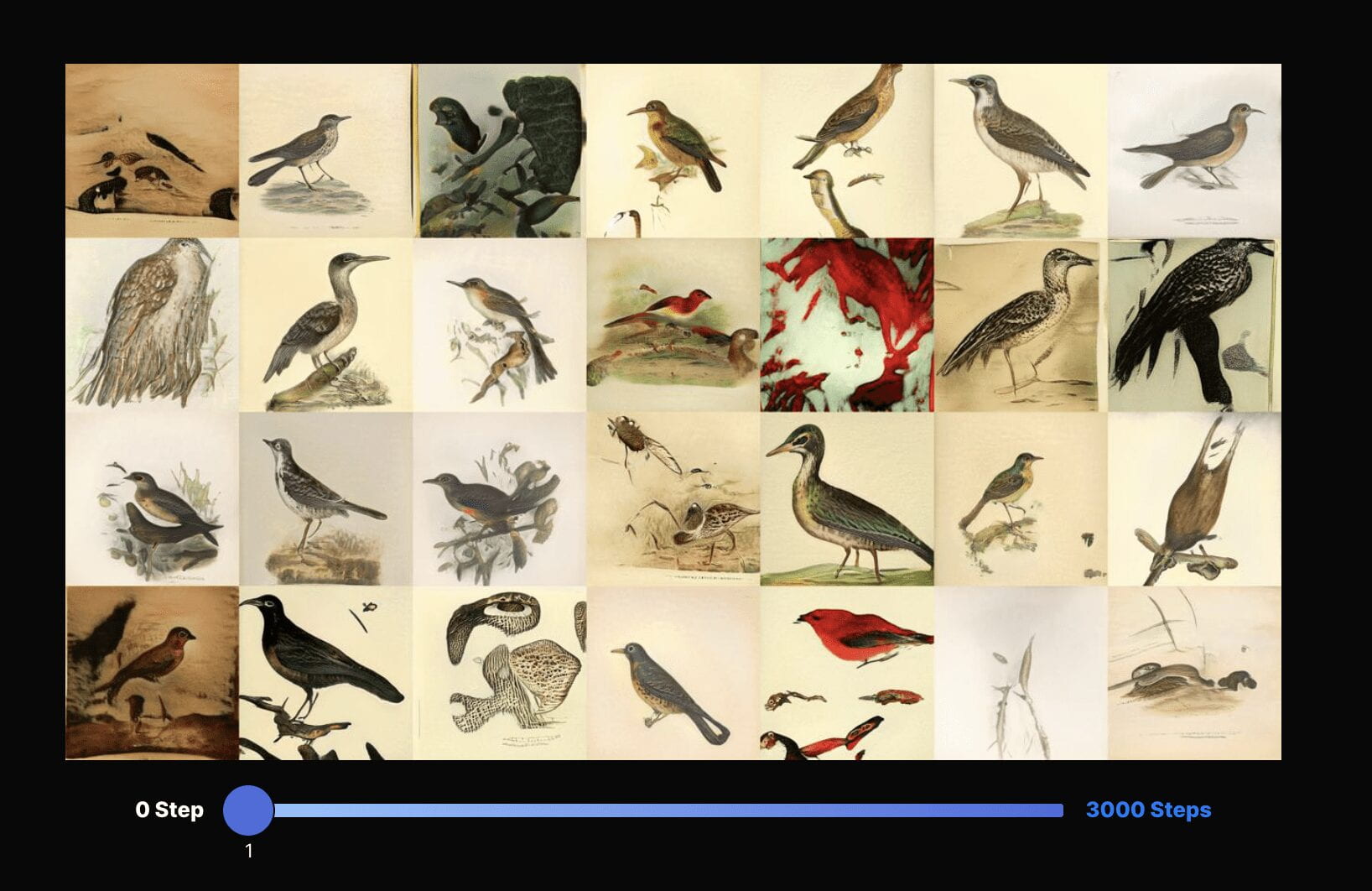

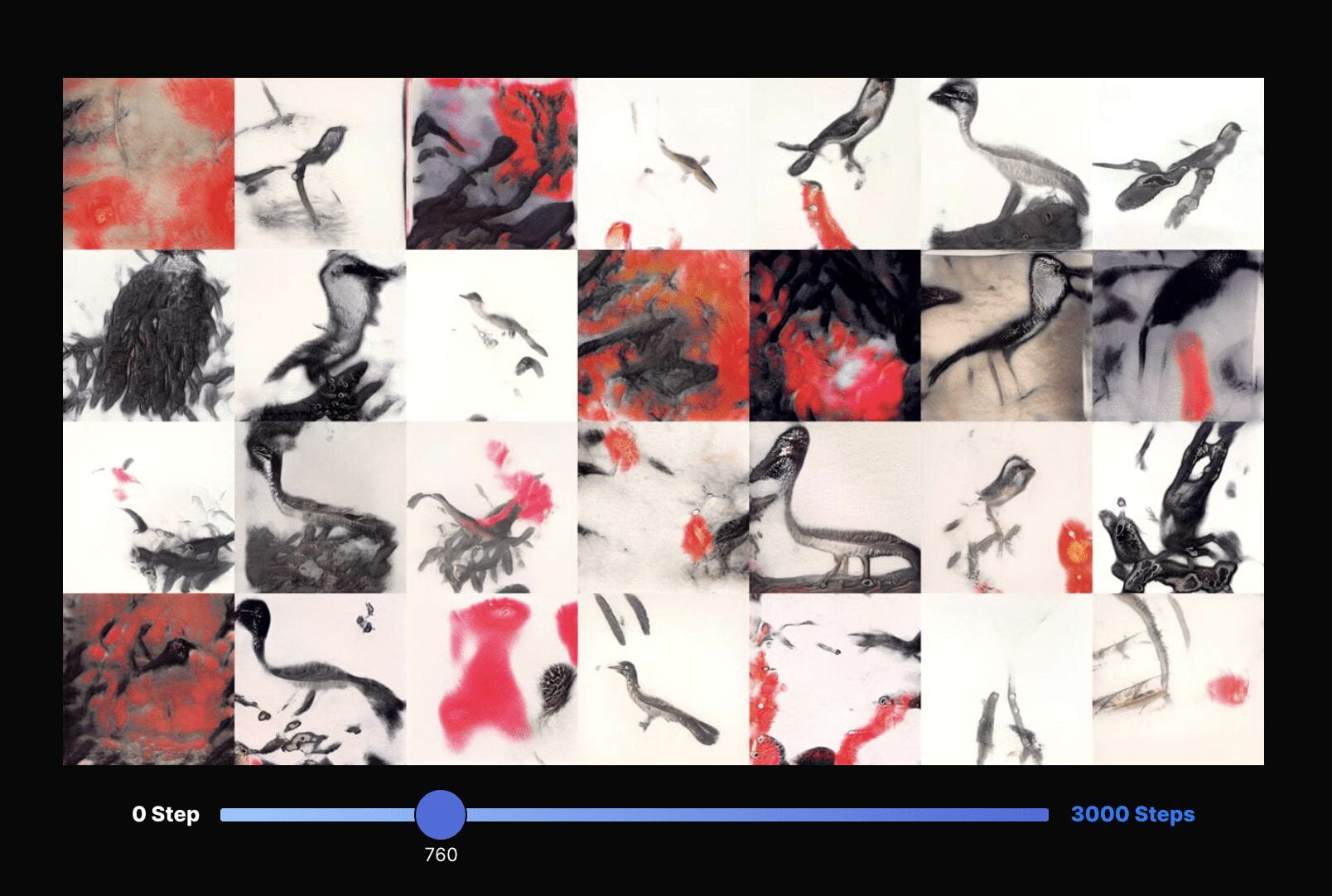

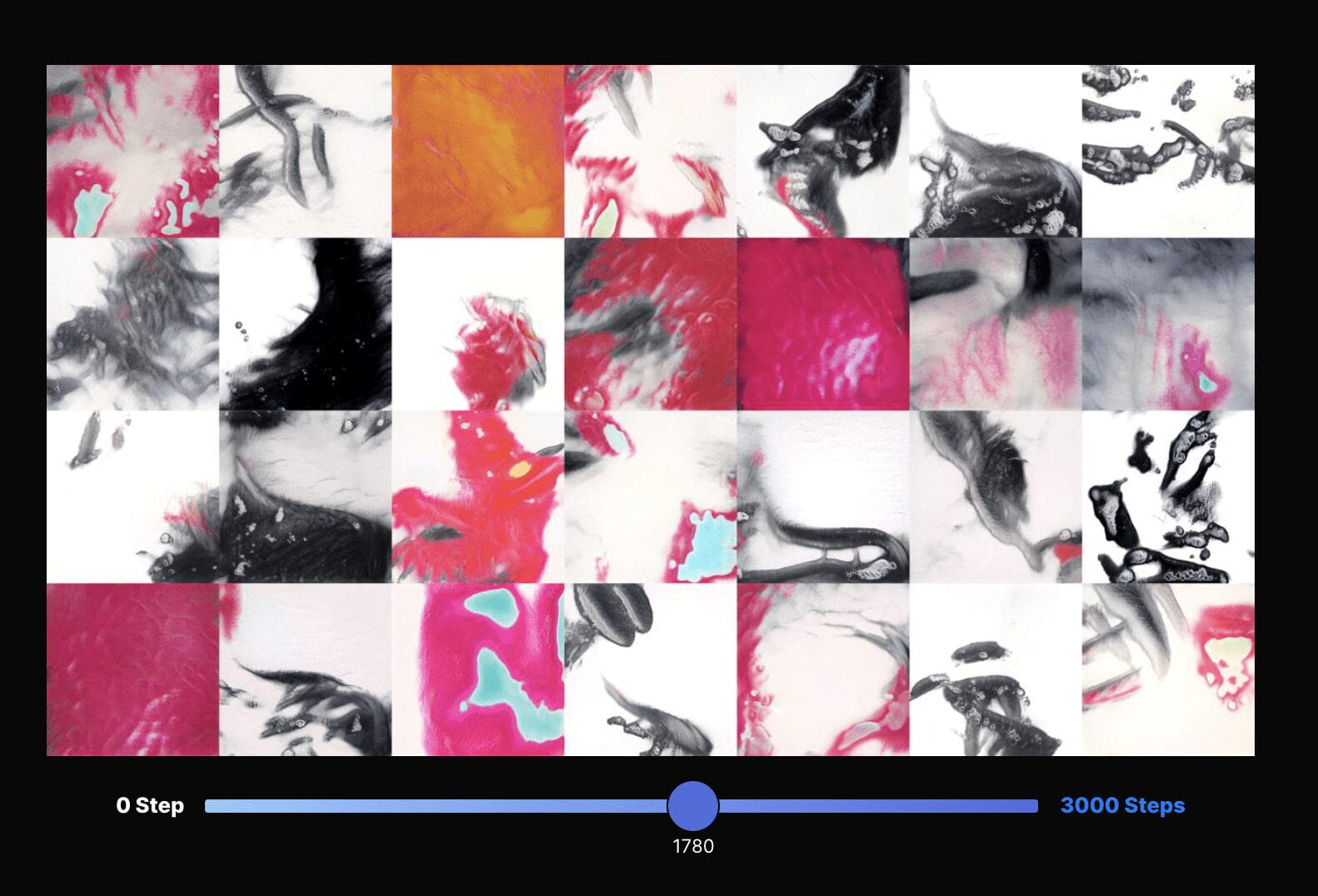

Here’s the latent walk video of the trained model:

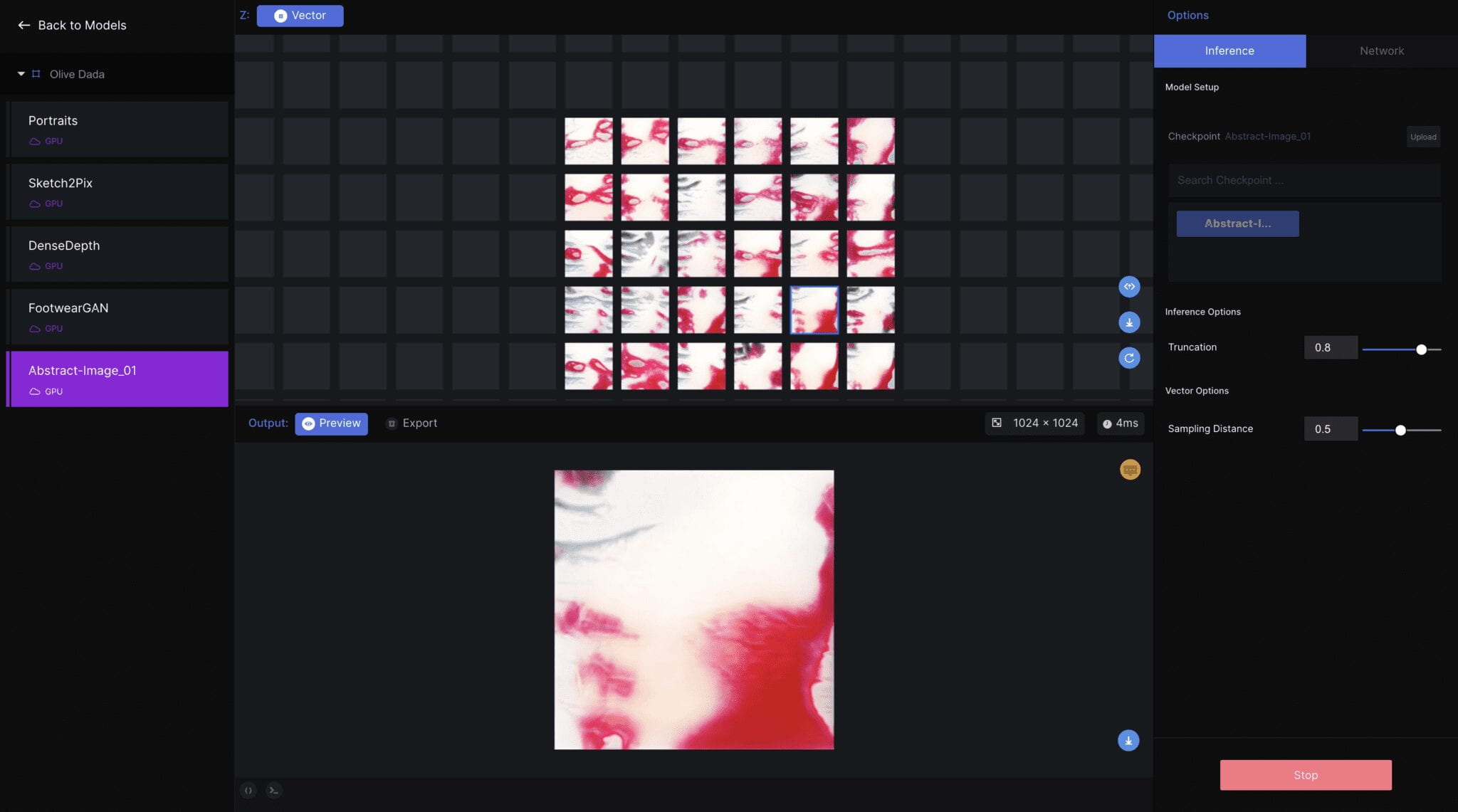
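RunwayML renders the latent walk video itself, so its exact method isn't shown here. A common way to build one is to sample a few random latent vectors as keyframes and interpolate smoothly between them. Each intermediate vector is then passed through the trained generator to produce one video frame.

The sketch below is written under these assumptions:
* Latents are 512-dimensional standard-normal vectors (StyleGAN's default latent size).
* Interpolation is spherical (slerp), a common choice for Gaussian latents.
* All function names are hypothetical.
* The generator call itself is not shown.

```python
import math
import random
from typing import List

Vector = List[float]


def random_latent(dim: int, rng: random.Random) -> Vector:
    """Sample a latent vector from a standard normal distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def slerp(a: Vector, b: Vector, t: float) -> Vector:
    """Spherical interpolation between a and b at fraction t in [0, 1]."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    cos_omega = max(-1.0, min(1.0, cos_omega))  # guard against rounding error
    omega = math.acos(cos_omega)
    s = math.sin(omega)
    if math.isclose(s, 0.0, abs_tol=1e-8):
        # Vectors are (nearly) parallel or opposite: fall back to linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    wa = math.sin((1 - t) * omega) / s
    wb = math.sin(t * omega) / s
    return [wa * x + wb * y for x, y in zip(a, b)]


def latent_walk(num_keyframes: int, frames_per_segment: int,
                dim: int = 512, seed: int = 0) -> List[Vector]:
    """Return one latent vector per video frame, looping back to the first keyframe."""
    rng = random.Random(seed)
    keys = [random_latent(dim, rng) for _ in range(num_keyframes)]
    keys.append(keys[0])  # end where we started so the video loops seamlessly
    frames = []
    for a, b in zip(keys, keys[1:]):
        # t stops short of 1 so each keyframe appears only once.
        for i in range(frames_per_segment):
            frames.append(slerp(a, b, i / frames_per_segment))
    return frames
```

For example, `latent_walk(4, 60)` yields 240 latent vectors. At 30 frames per second, that is an 8-second looping clip once each vector is rendered by the generator.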

These are screenshots and a recording of the model's workspace:

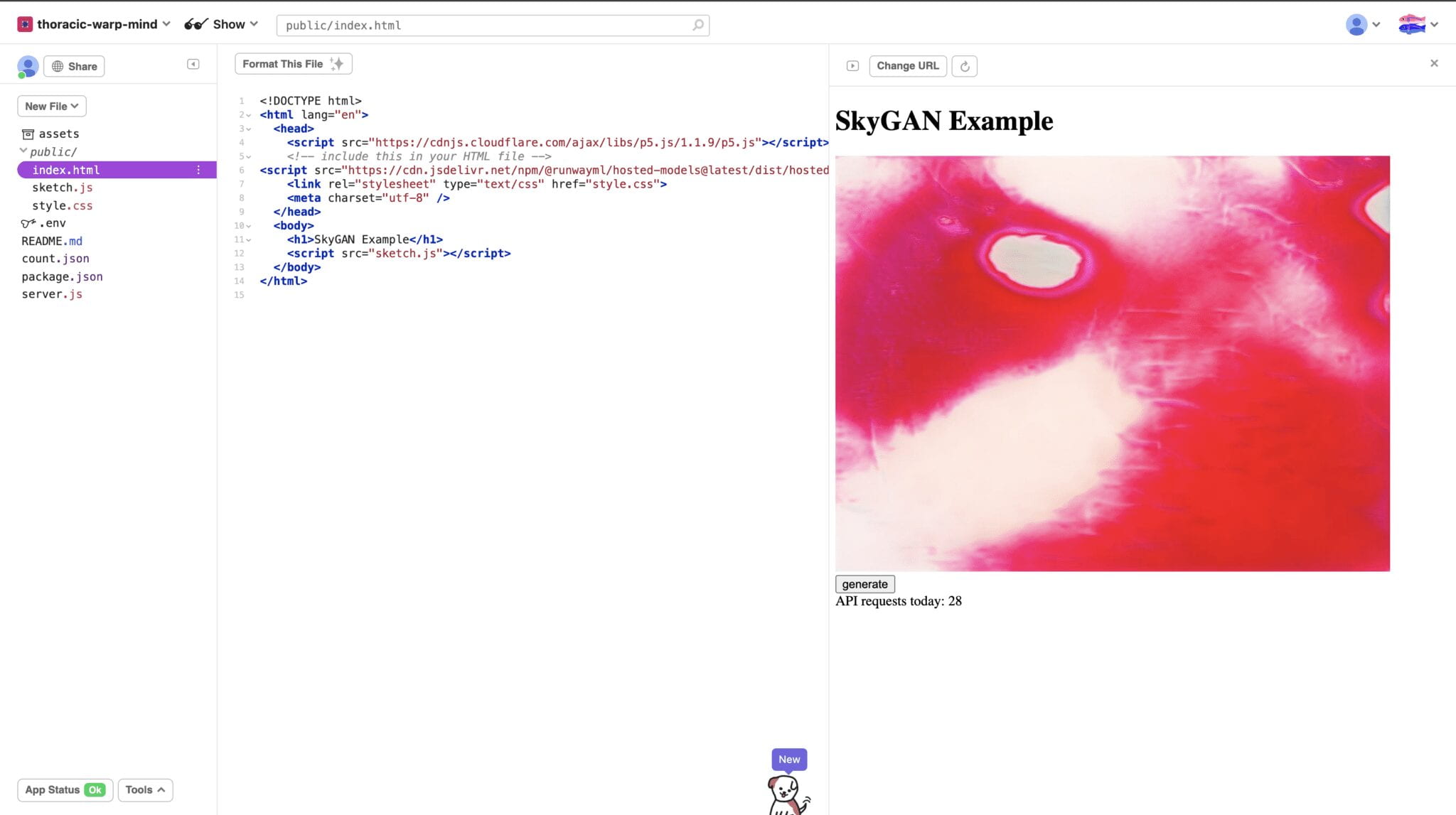

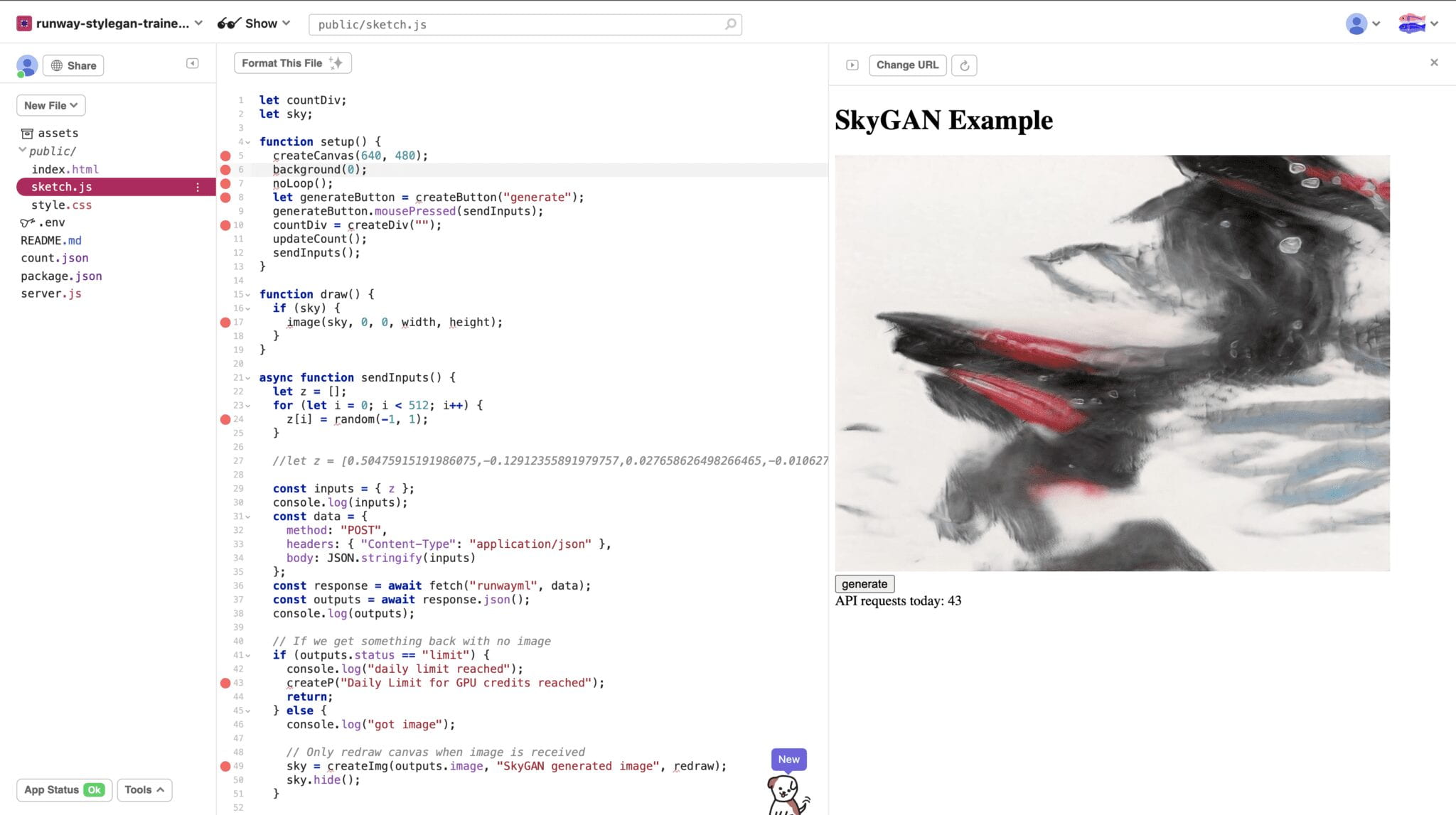

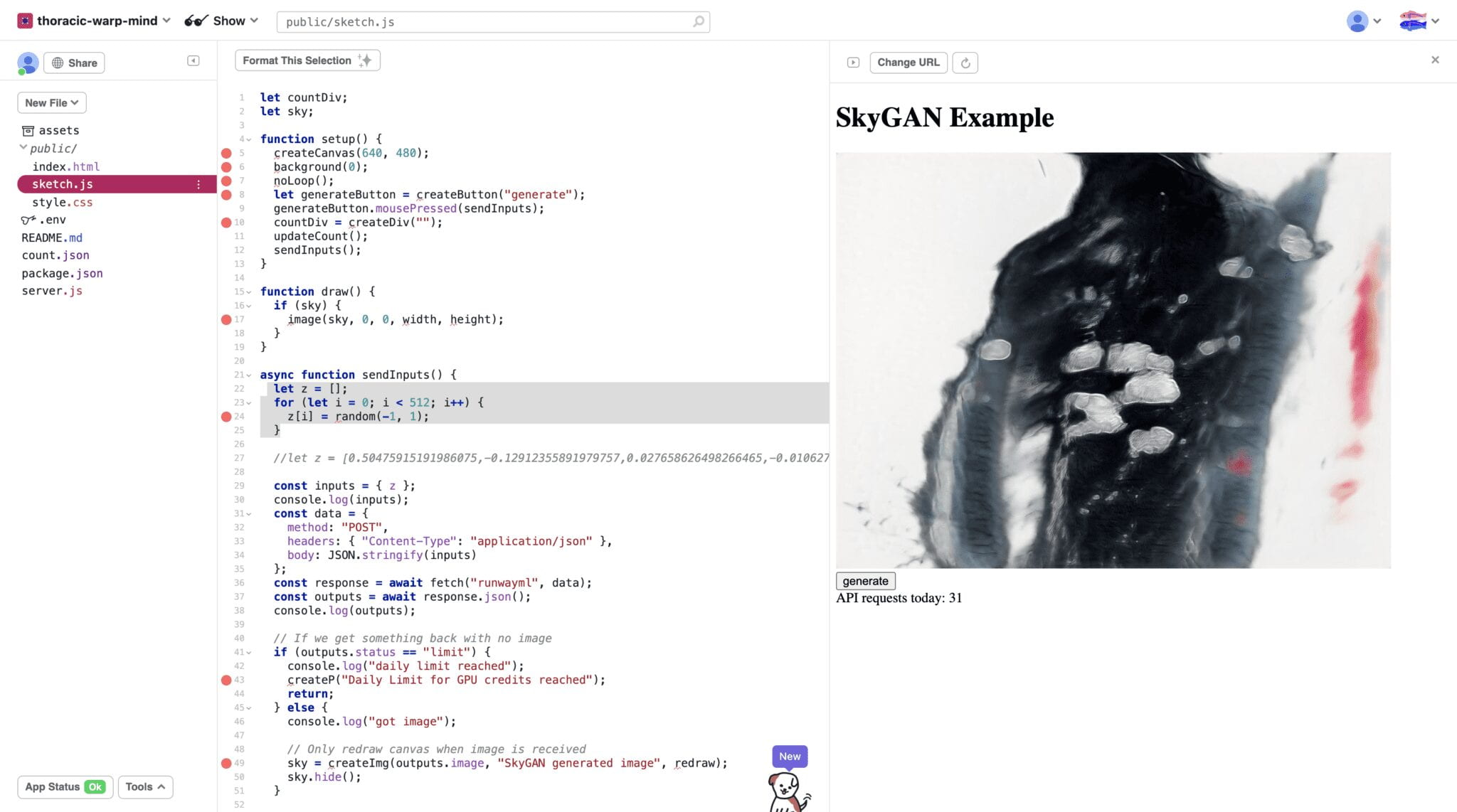

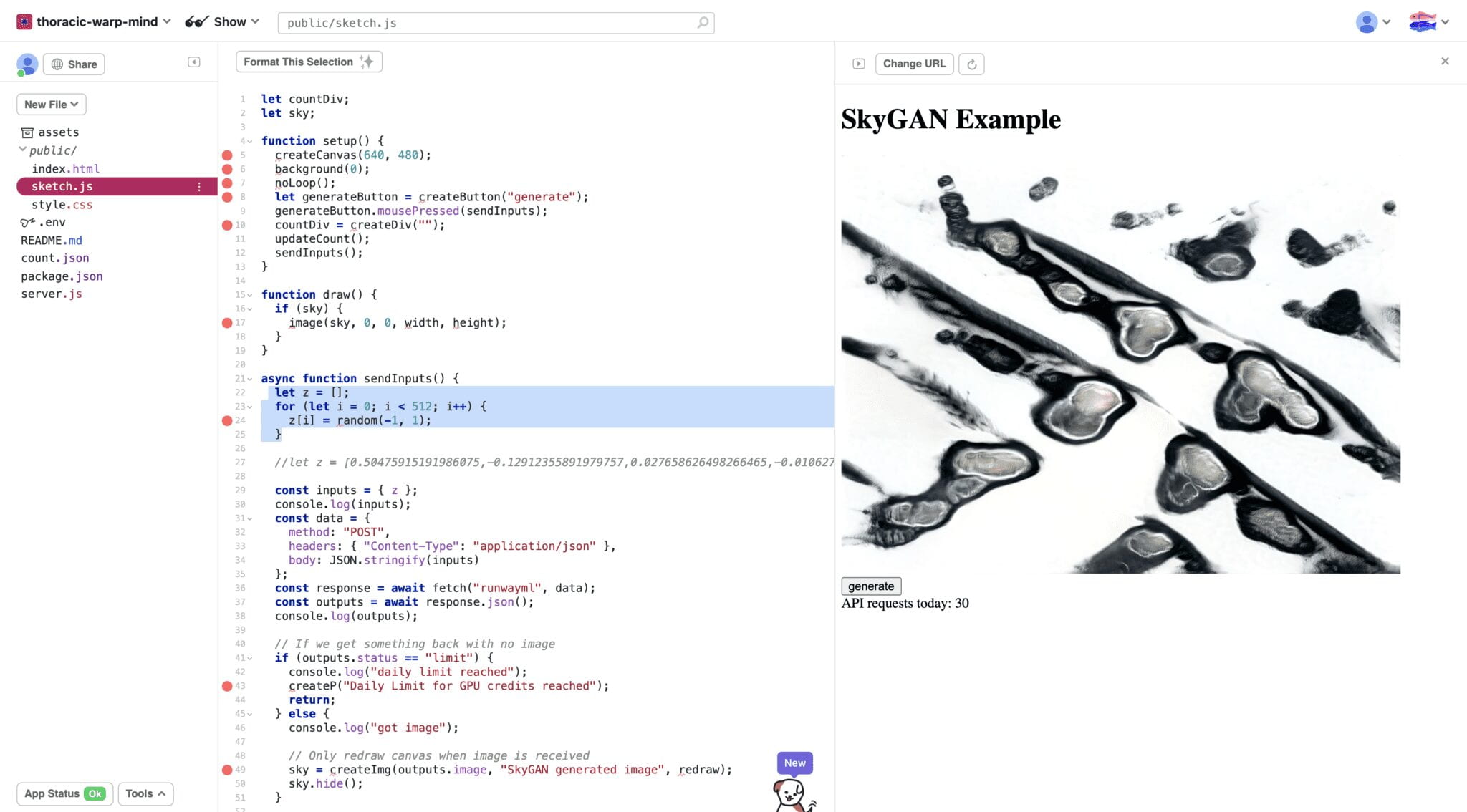

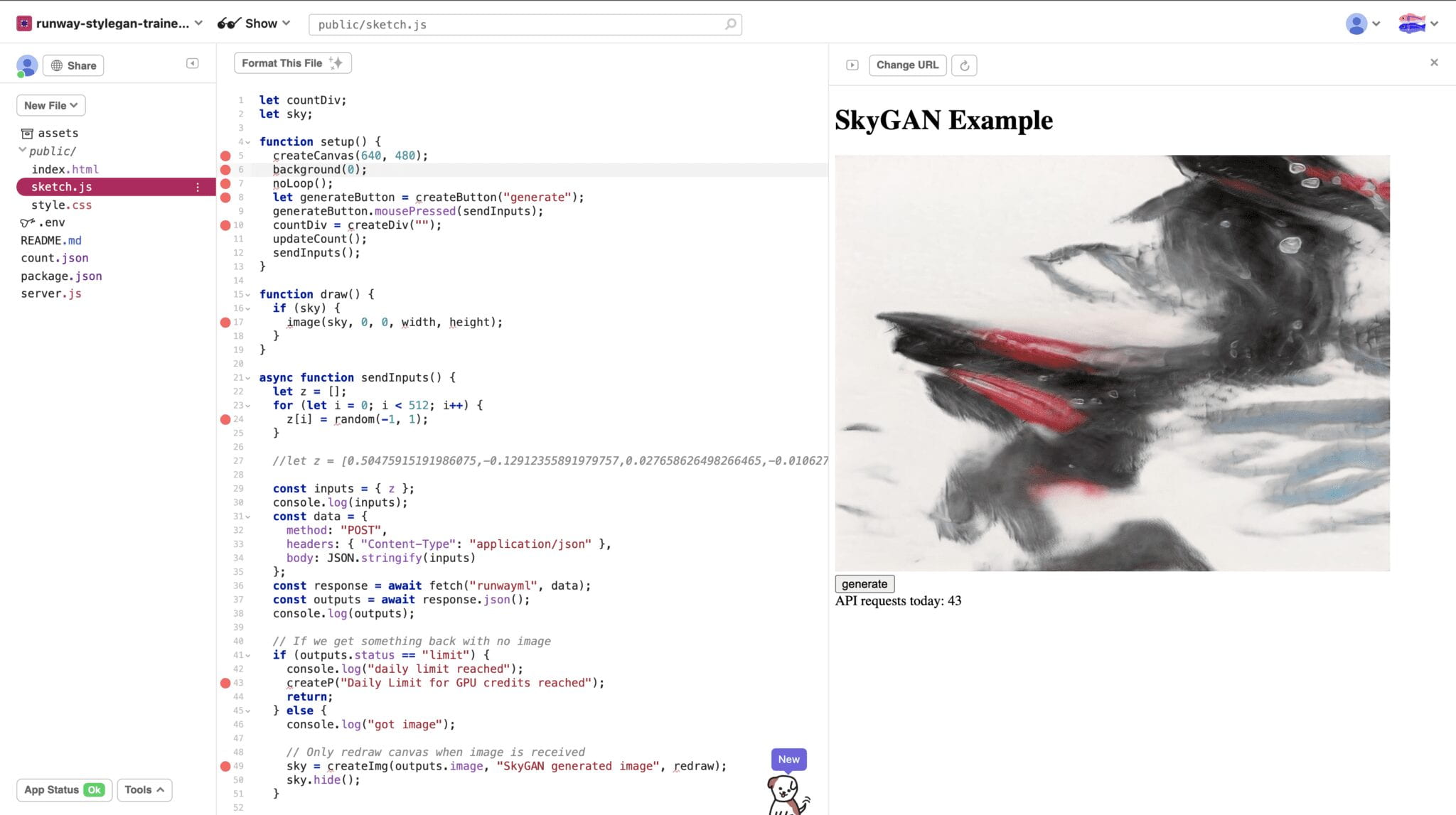

Glitch RunwayML

I worked with the “Glitch! RunwayML template – StyleGAN” template and hosted my own model on it.

https://glitch.com/edit/#!/runway-stylegan-trained-model?path=public%2Fsketch.js%3A6%3A16