A Rainy Night in Spring

Statement and Purpose:

In A Rainy Night in Spring, music is made visible: the metaphors and scenes of an ancient musical piece are reimagined through modern technology. The project aims to redefine the concept of a musical instrument, rethink the way music is made and appreciated, and modernize traditional Chinese music by adding contemporary elements. It also aims to raise awareness of cultural preservation and to inspire pride in our history and culture.

The target audience of our project is anyone who wants to engage more of their senses in appreciating music. Culture lovers, anti-war supporters, advocates of cultural preservation, and people interested in music, digital heritage, and design are all welcome.

Project Plans:

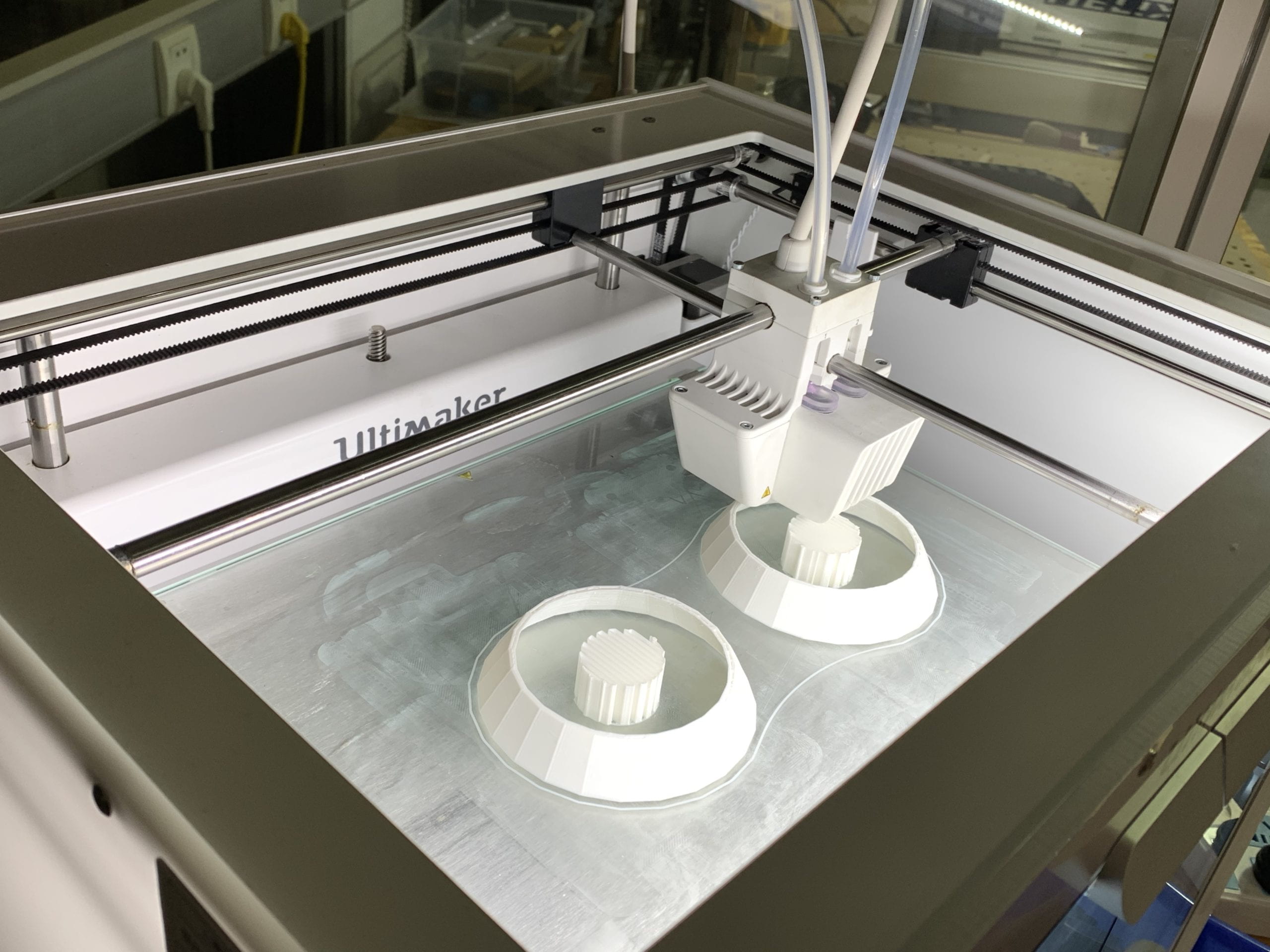

Laser cutting: We plan to laser-cut a wooden panel that represents the pipa and serves as a metaphor for Chinese musical instruments, and more broadly for lost Chinese cultural heritage.

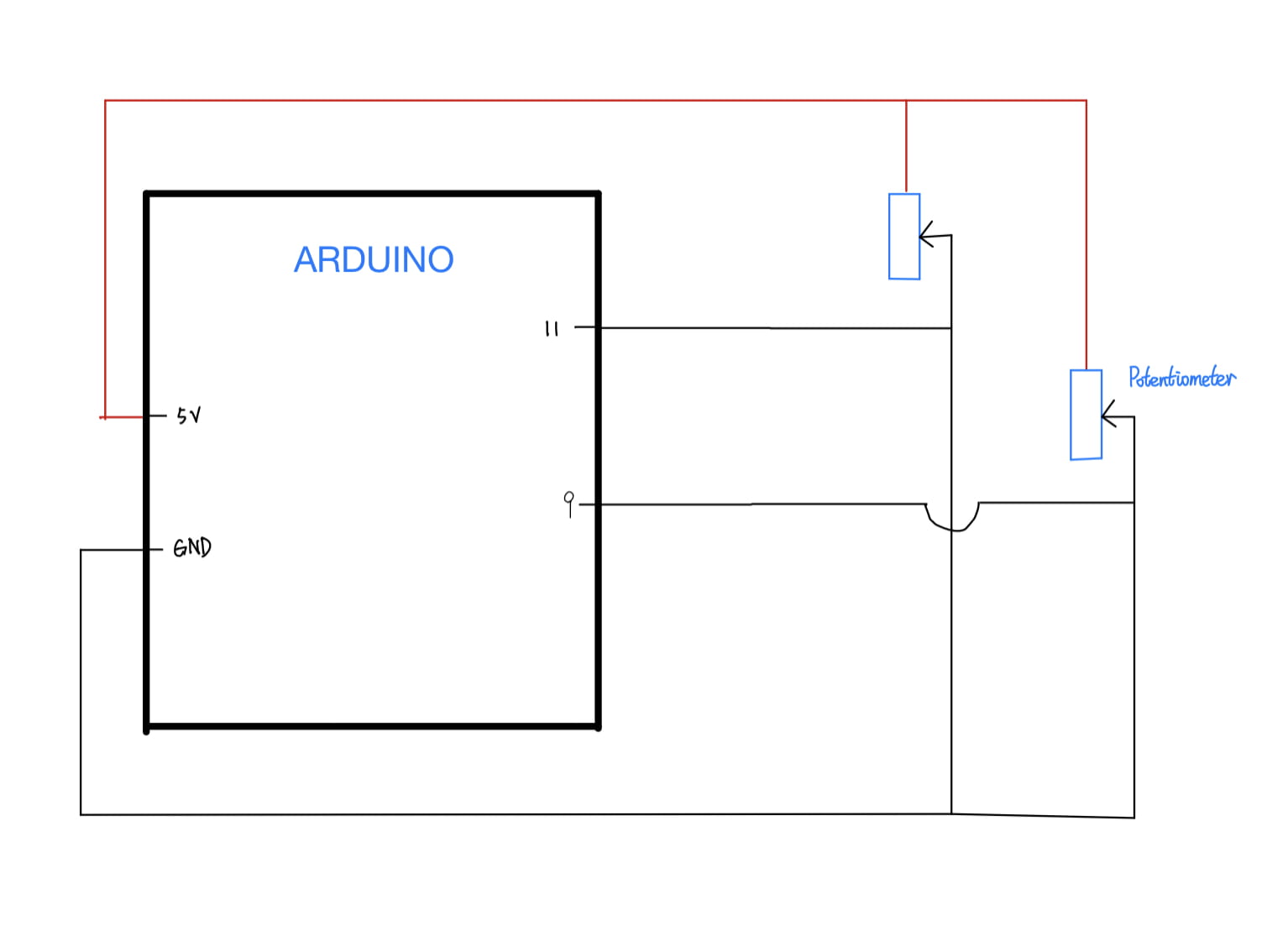

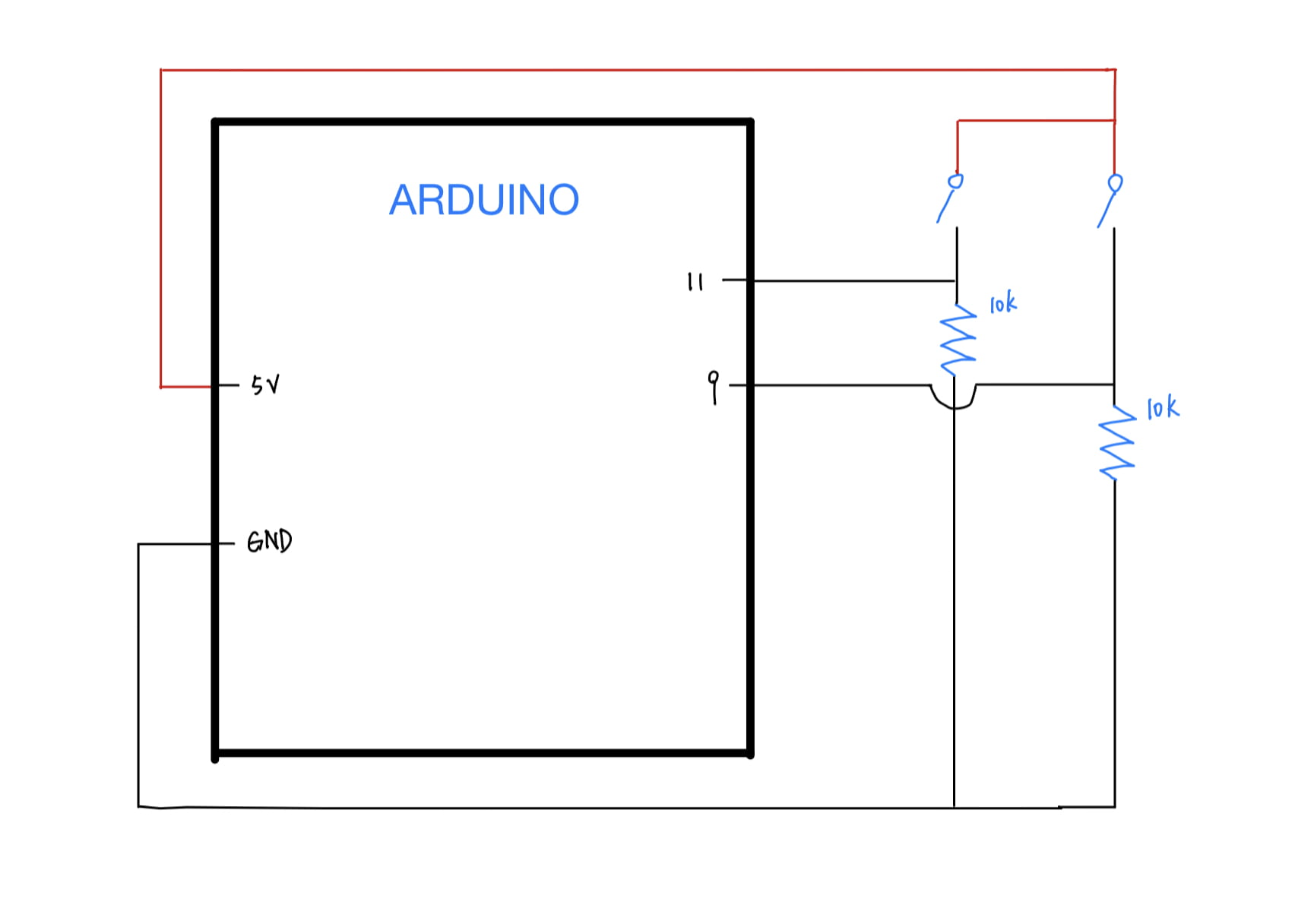

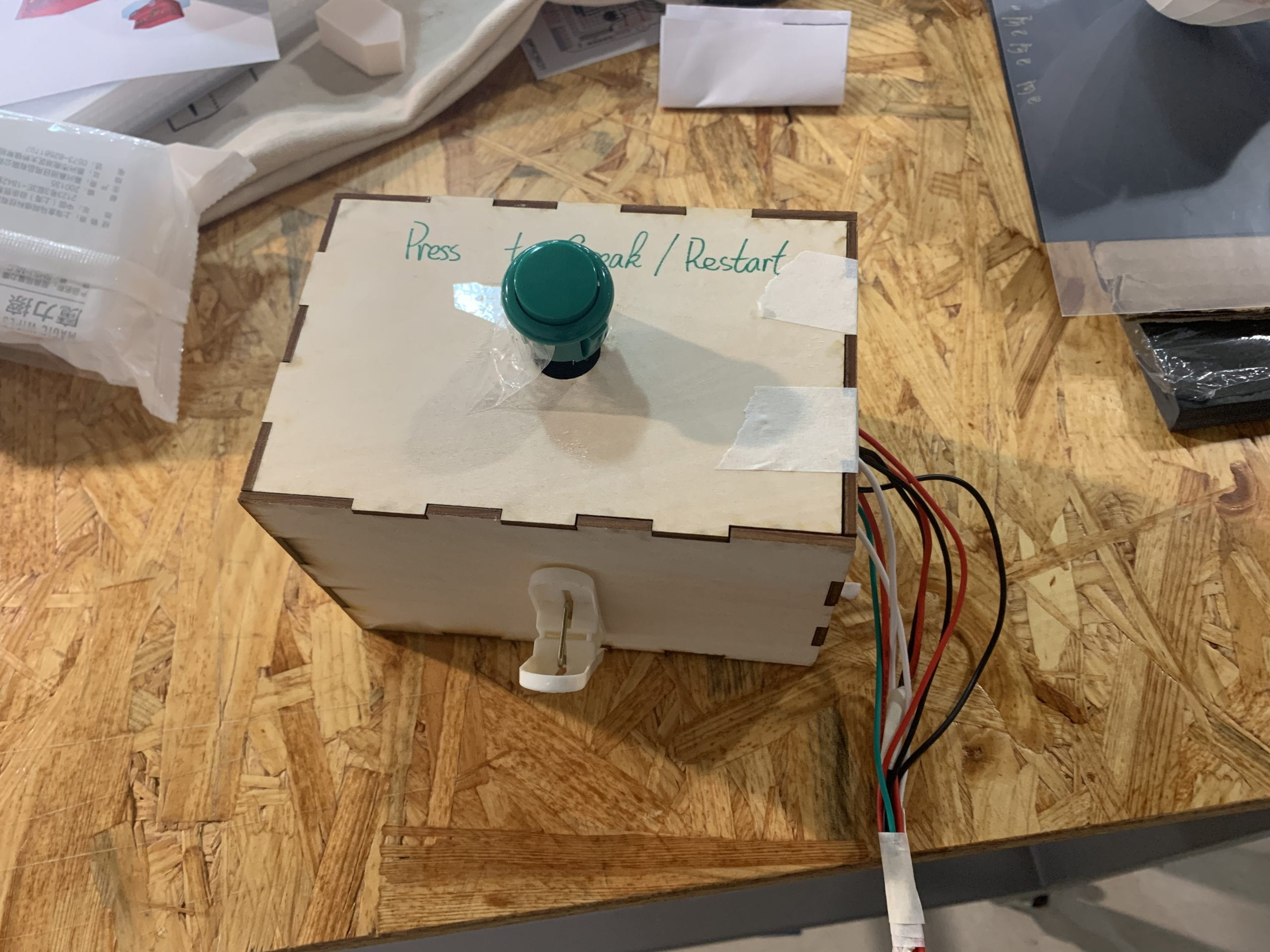

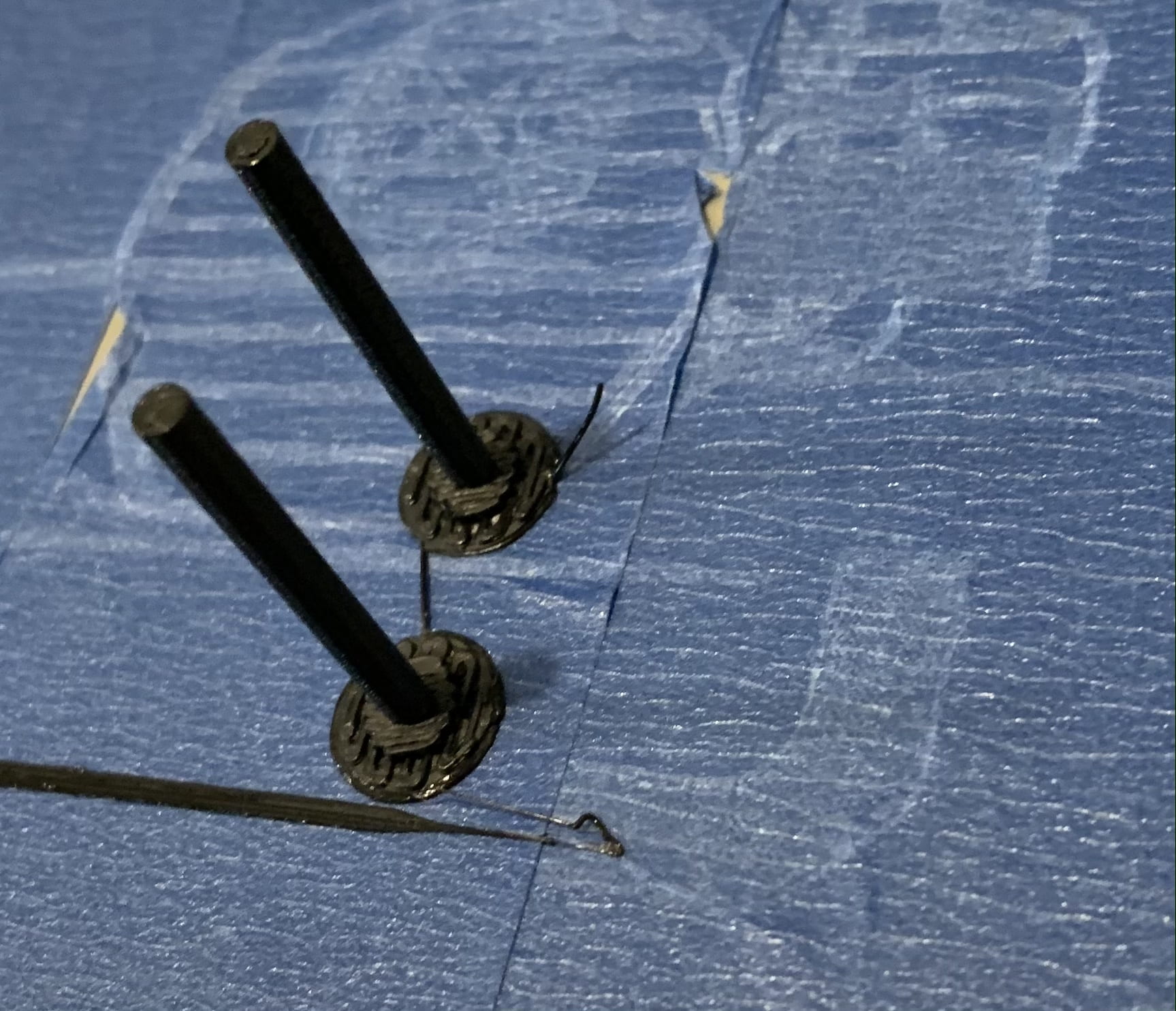

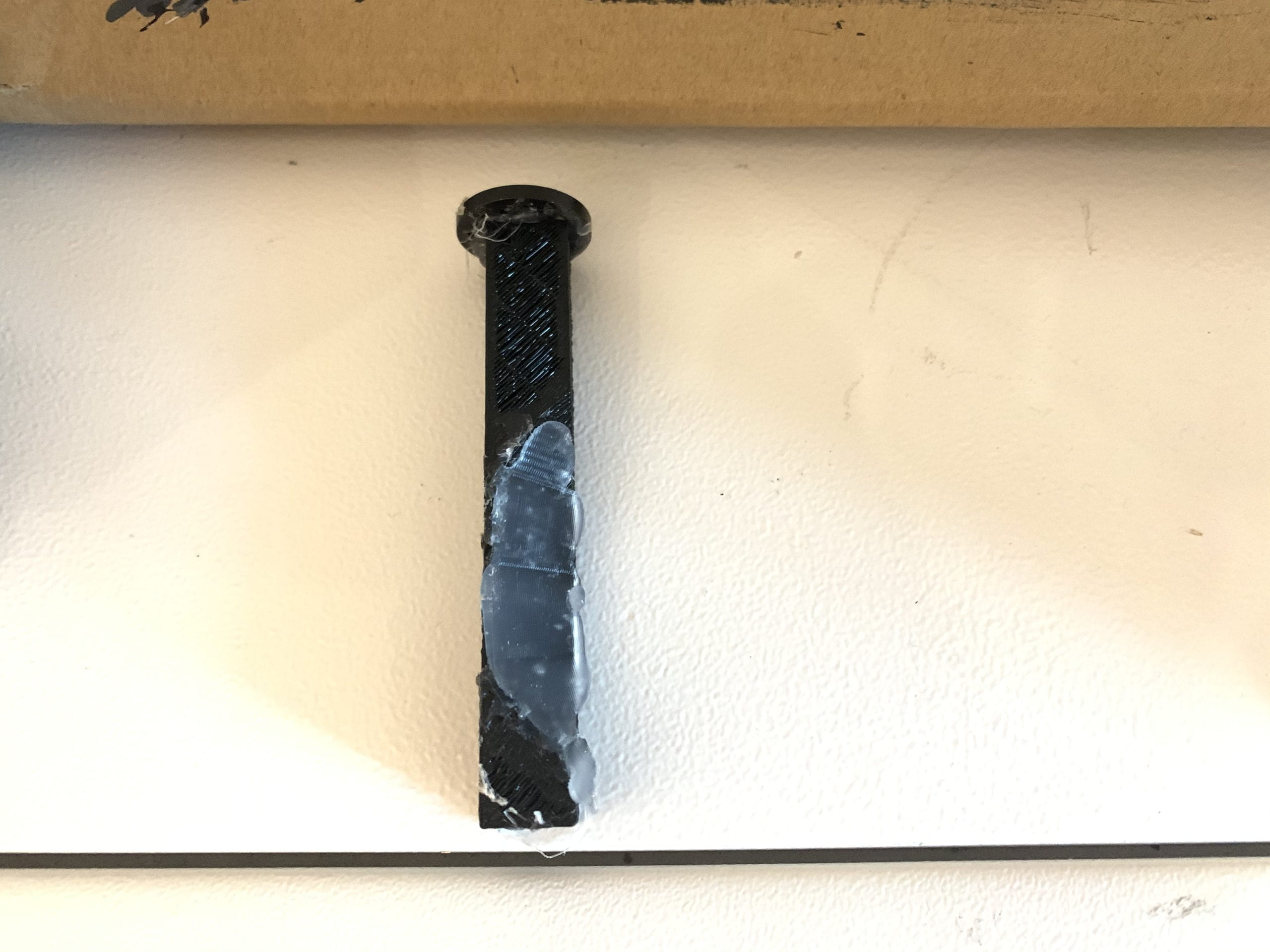

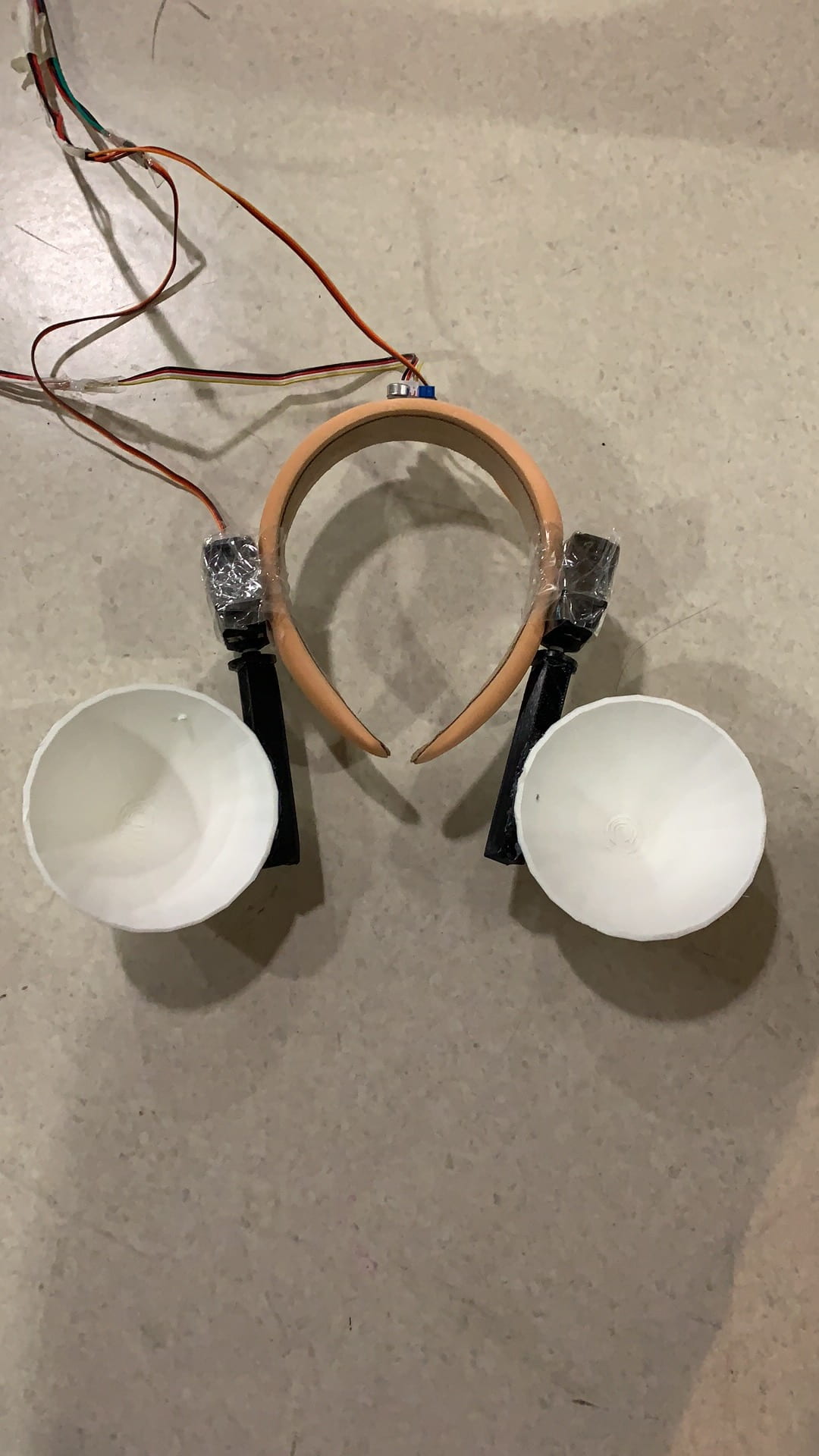

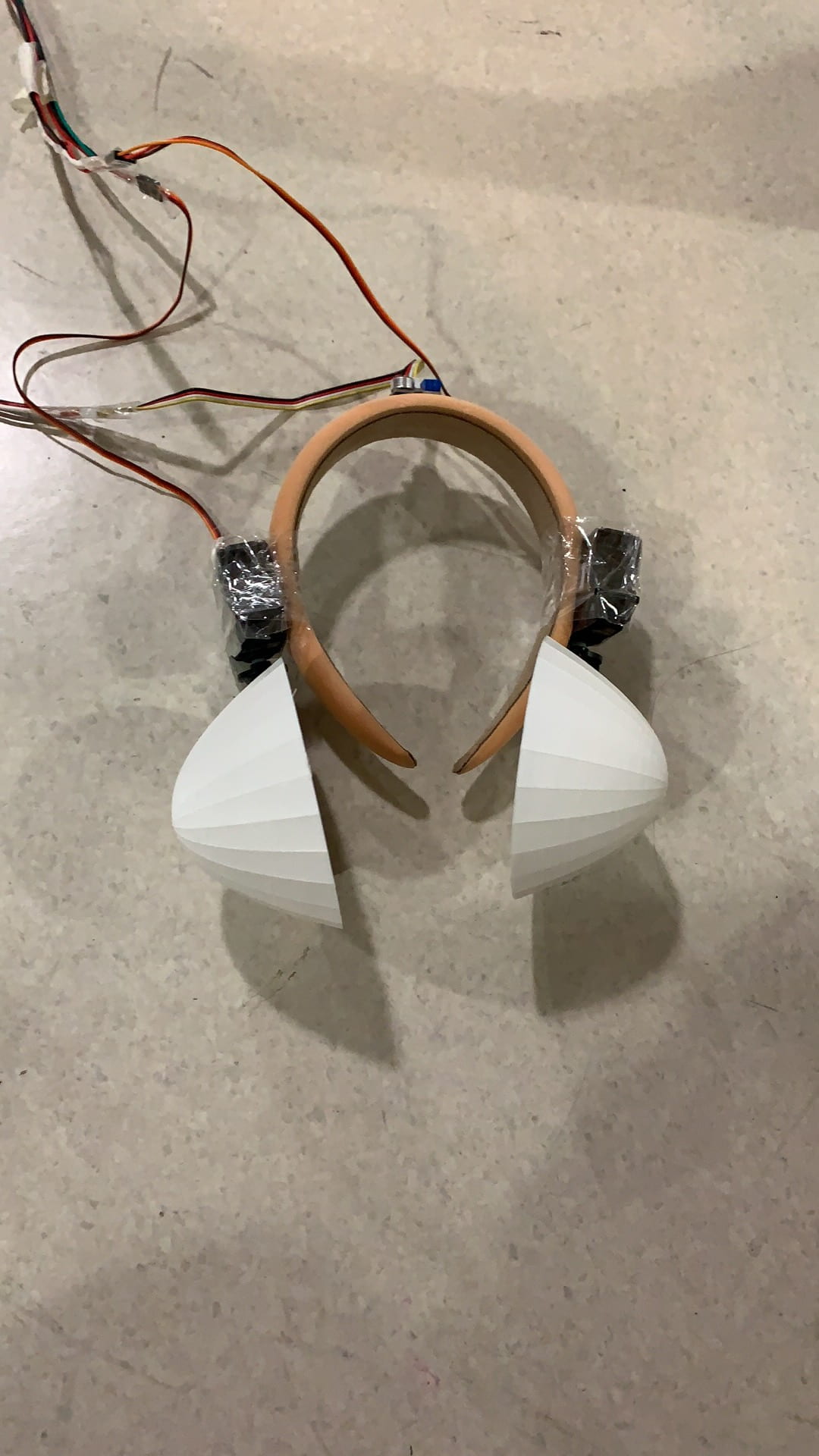

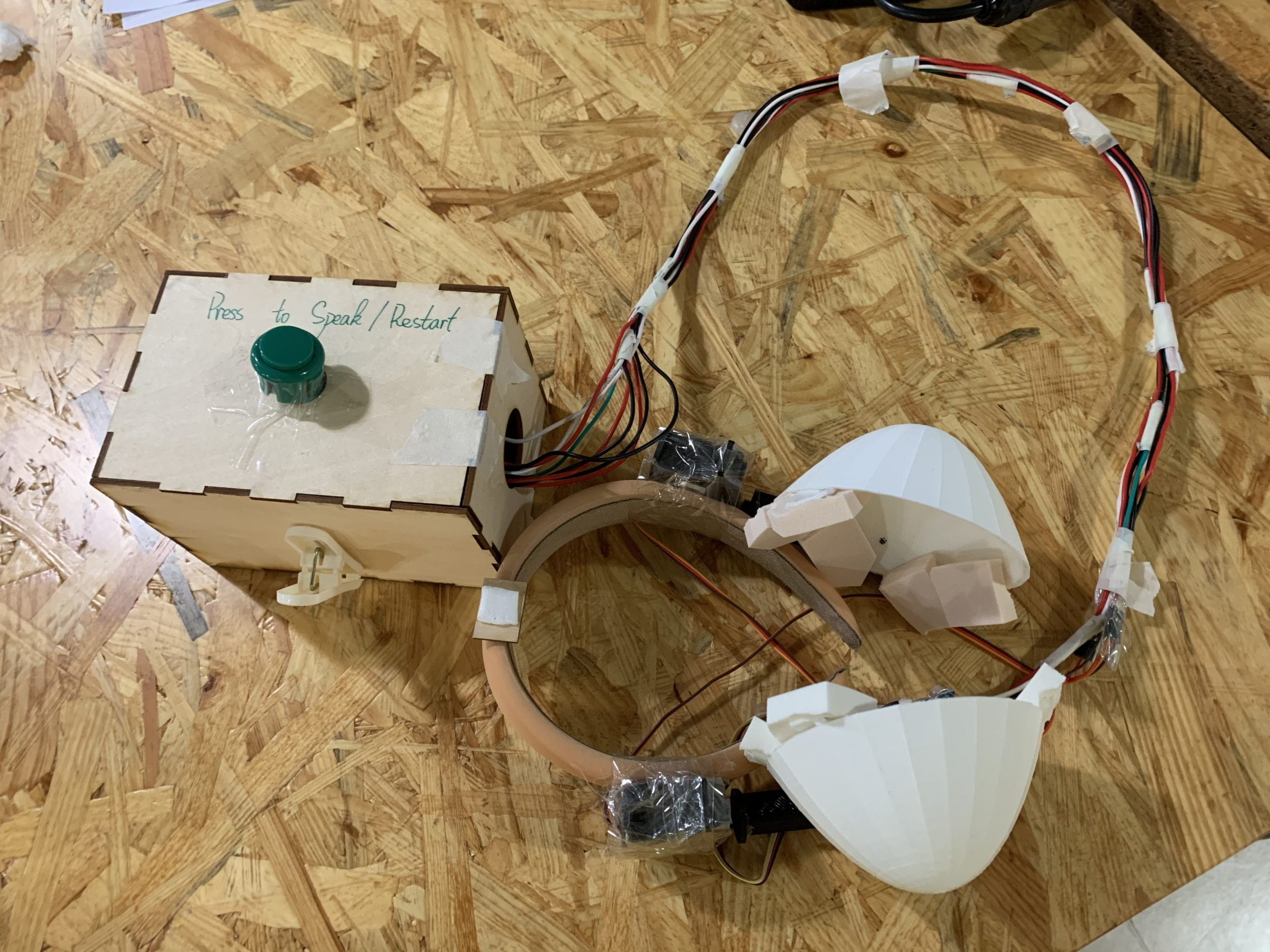

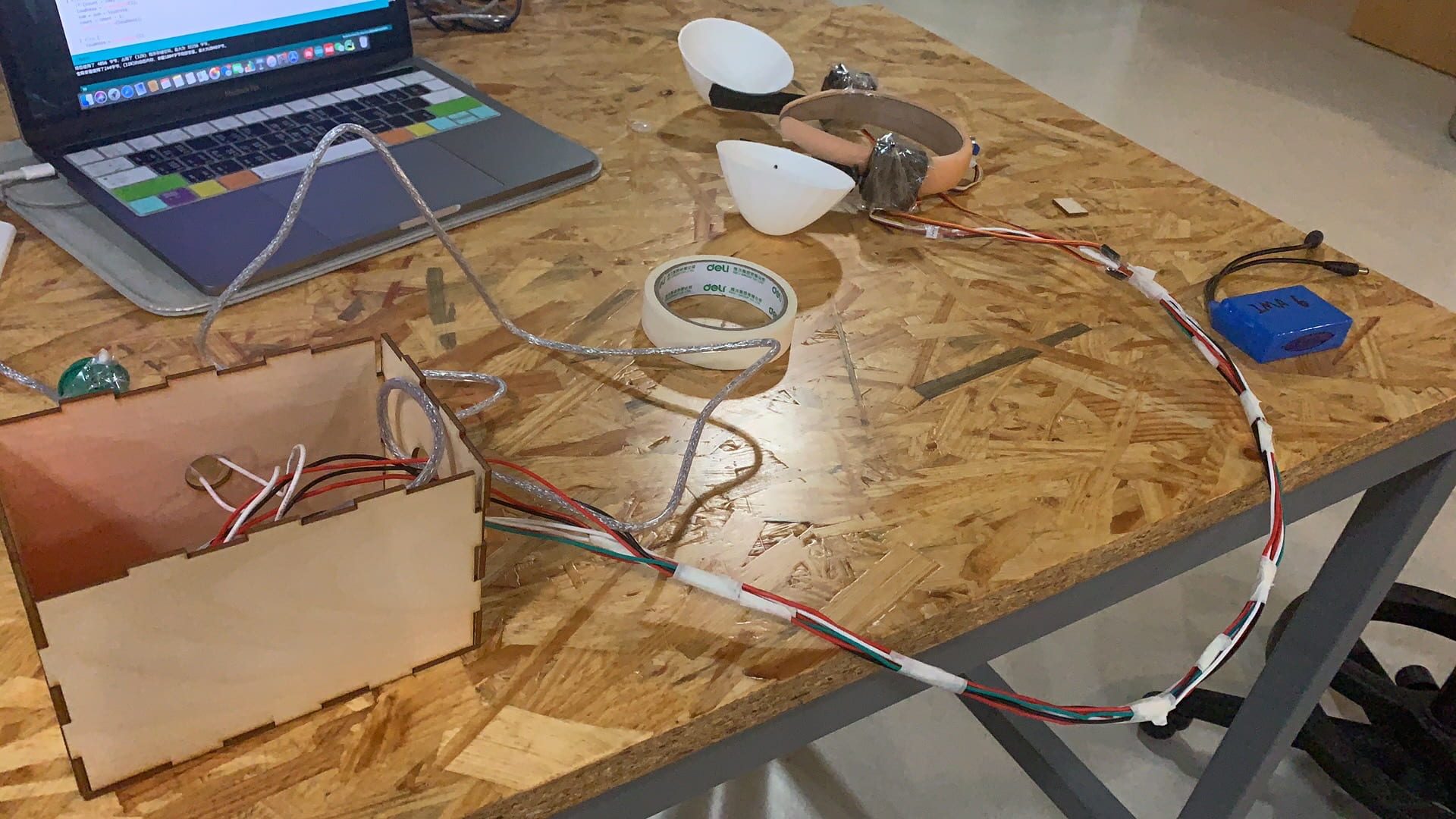

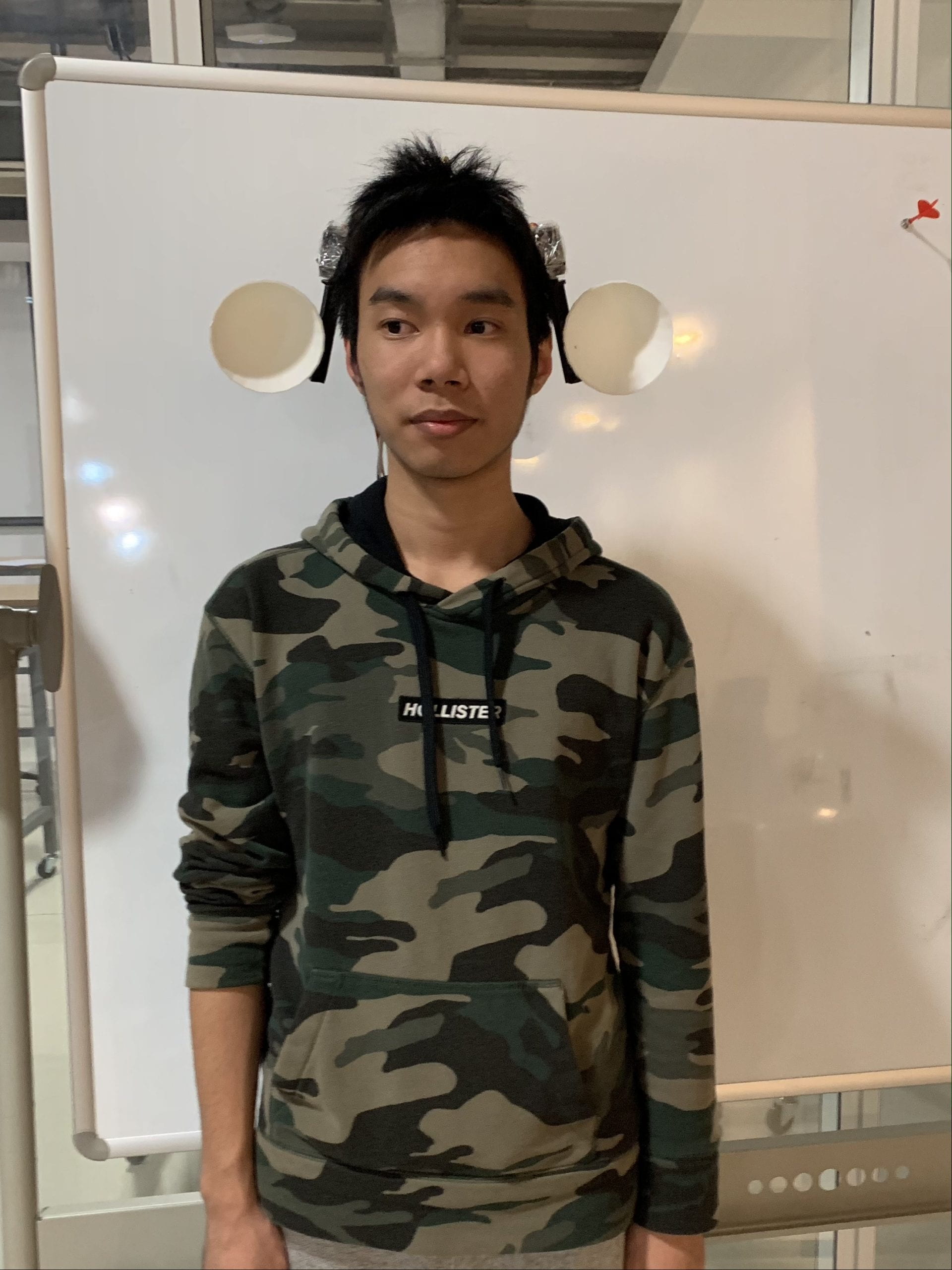

Sensors and Inputs: Laser beams will represent the strings of the instrument. Light-dependent resistors (photoresistors) mounted on the “instrument” will detect whether each laser beam is reaching them. When the user holds the pipa on their lap and plays the laser strings, the interrupted beams trigger the sensors.
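A minimal sketch of how the sensor readings could be turned into "pluck" events is shown below in Python. It assumes one photoresistor per laser string, read through a 10-bit analog input (values 0 to 1023, as on an Arduino), with a lit beam giving a high reading. The threshold values and the function name `detect_plucks` are hypothetical and would need calibrating against the real circuit and room lighting. Two thresholds (hysteresis) are used so that a reading hovering near a single cut-off does not fire repeatedly.

```python
from typing import List, Tuple

# Hypothetical thresholds on a 10-bit scale (0-1023). Real values depend on
# the resistor divider and room lighting and must be calibrated.
BLOCKED_BELOW = 300  # reading below this: the beam is interrupted
LIT_ABOVE = 600      # reading above this: the beam is reaching the sensor again


def detect_plucks(
    frames: List[List[int]],
    blocked_below: int = BLOCKED_BELOW,
    lit_above: int = LIT_ABOVE,
) -> List[Tuple[int, int]]:
    """Return (frame_index, string_index) for every lit -> blocked transition.

    Each frame holds one reading per photoresistor, one photoresistor per
    laser string. The gap between the two thresholds (hysteresis) keeps a
    reading that hovers near one cut-off from firing repeatedly.
    """
    if not frames:
        return []
    lit = [True] * len(frames[0])  # assume every beam starts unobstructed
    events: List[Tuple[int, int]] = []
    for t, frame in enumerate(frames):
        for s, value in enumerate(frame):
            if lit[s] and value < blocked_below:
                lit[s] = False
                events.append((t, s))  # beam just broken: count as one pluck
            elif not lit[s] and value > lit_above:
                lit[s] = True  # beam restored: the string can be plucked again
    return events
```

For example, `detect_plucks([[900, 880], [250, 870], [280, 200], [800, 850], [100, 860]])` returns `[(1, 0), (2, 1), (4, 0)]`: string 0 is plucked in frame 1, string 1 in frame 2, and string 0 again in frame 4 after its beam was restored in frame 3.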

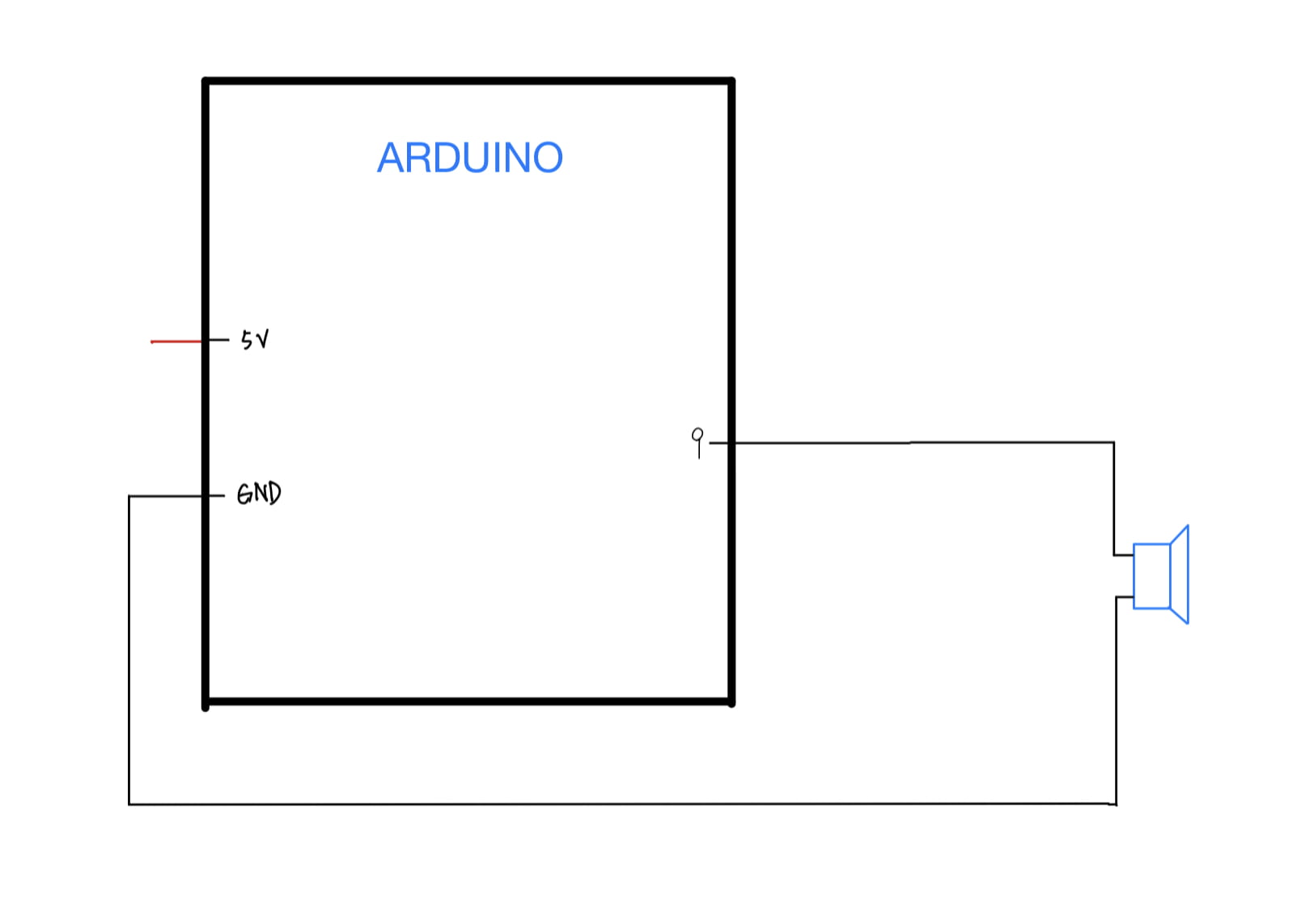

Processing and Outputs: As the instrument is played, different patterns will be projected onto the panel. These patterns are drawn from ancient instruments and paintings that carry the concepts and meanings of ancient Chinese culture, much of which is not well preserved today.
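One simple way to connect pluck events to the projection is sketched below in Python. It assumes each laser string is given its own playlist of pattern images and that every pluck on that string advances to the next image. The class name `PatternSelector` and the file names in the example are hypothetical placeholders, not the project's actual assets.

```python
from itertools import cycle
from typing import Dict, Iterator, List, Optional


class PatternSelector:
    """Hypothetical helper: each laser string owns a playlist of pattern
    images, and every pluck on that string advances to its next image."""

    def __init__(self, playlists: Dict[int, List[str]]) -> None:
        # cycle() repeats each playlist forever; empty playlists are skipped
        # because next() on cycle([]) would raise StopIteration.
        self._playlists: Dict[int, Iterator[str]] = {
            string_index: cycle(images)
            for string_index, images in playlists.items()
            if images
        }

    def on_pluck(self, string_index: int) -> Optional[str]:
        """Return the next pattern to project, or None if the string has no playlist."""
        playlist = self._playlists.get(string_index)
        return next(playlist) if playlist is not None else None
```

With `PatternSelector({0: ["moon.png", "river.png"], 1: ["flowers.png"]})`, three plucks on string 0 return `moon.png`, `river.png`, then `moon.png` again, while a pluck on an unmapped string returns `None`. Cycling keeps the projection changing for as long as the player keeps playing.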

Next week, we will finish building the physical instrument and work out how the inputs and outputs connect. The week after, we will continue developing the patterns and music produced in the processing stage, to make the project more complete and to convey the idea we intend.

Context and Significance:

Because of wars and the Cultural Revolution, many of China’s precious cultural relics have been plundered or destroyed. Today, to see some of the most glorious surviving artifacts of the great Tang dynasty, one often has to go to Japan. It is sadly true that many Japanese people have seen, and know more about, the pipa than many Chinese people do. It is time to use technology to bring our culture back.

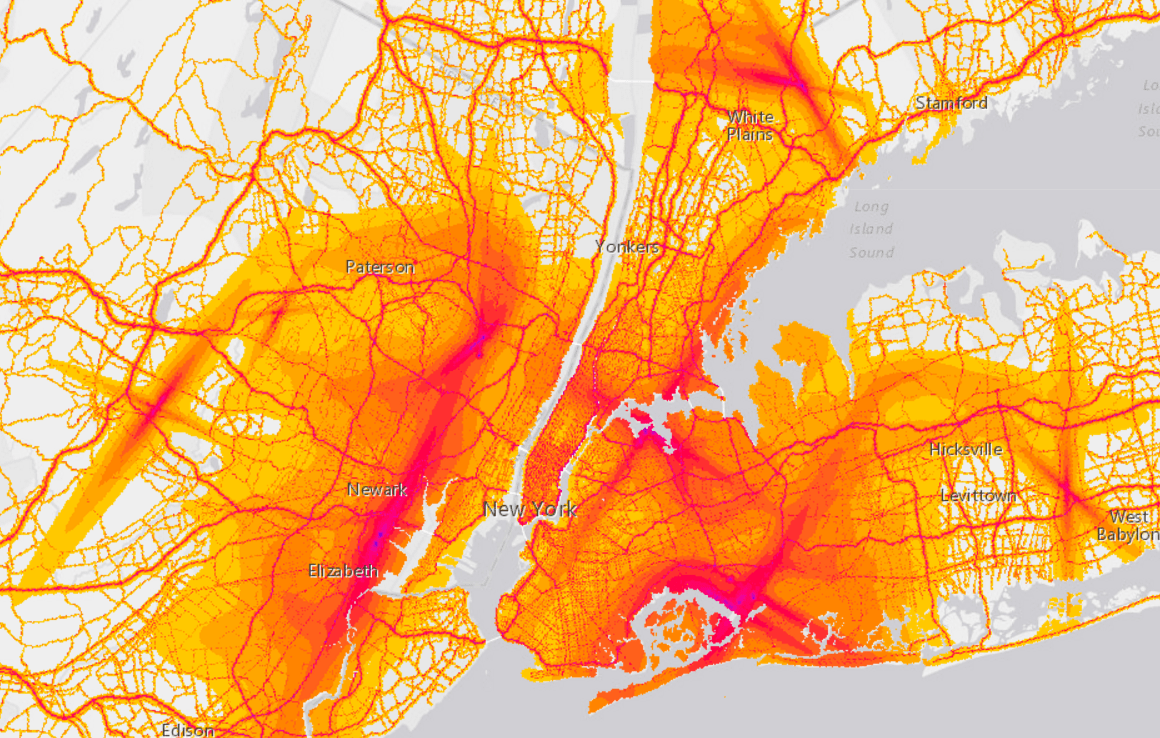

春江花月夜 (Chunjiang Huayue Ye, literally “Spring River, Flowers, Moonlit Night”; the piece behind this project, A Rainy Night in Spring) is one of the oldest and most famous pipa pieces. It depicts a poetic, Zen-like atmosphere on a spring night through images such as flowers, warm river water, and moonlight, which together create a beautiful soundscape. Although many people find the piece pleasant to listen to, listeners unfamiliar with traditional Chinese culture can hardly grasp its cultural meaning, and so miss its most important part.

This project visualizes the piece so that audiences can better feel the atmosphere of the music, and it aims to spread traditional Chinese culture by making it modern and accessible to all.