Wow. This week’s exploration into synthetic media has really opened my eyes. The power of artificial intelligence in media generation is undeniably going to change the industry’s landscape forever. I knew the day AI took over the paintbrush would come eventually; I just didn’t know it would come so soon.

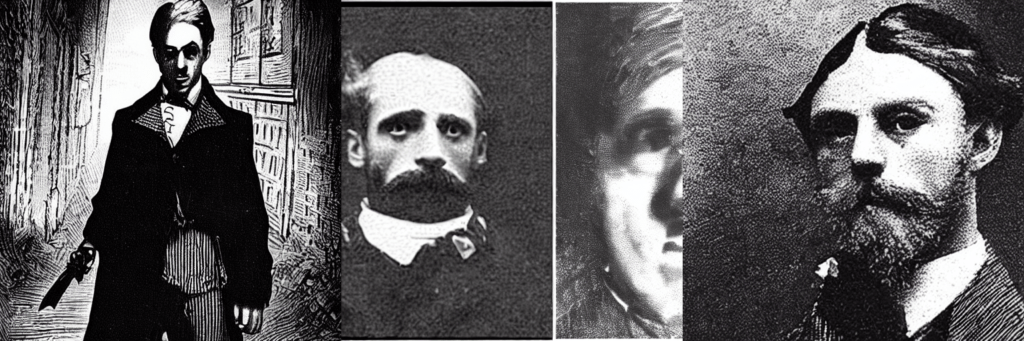

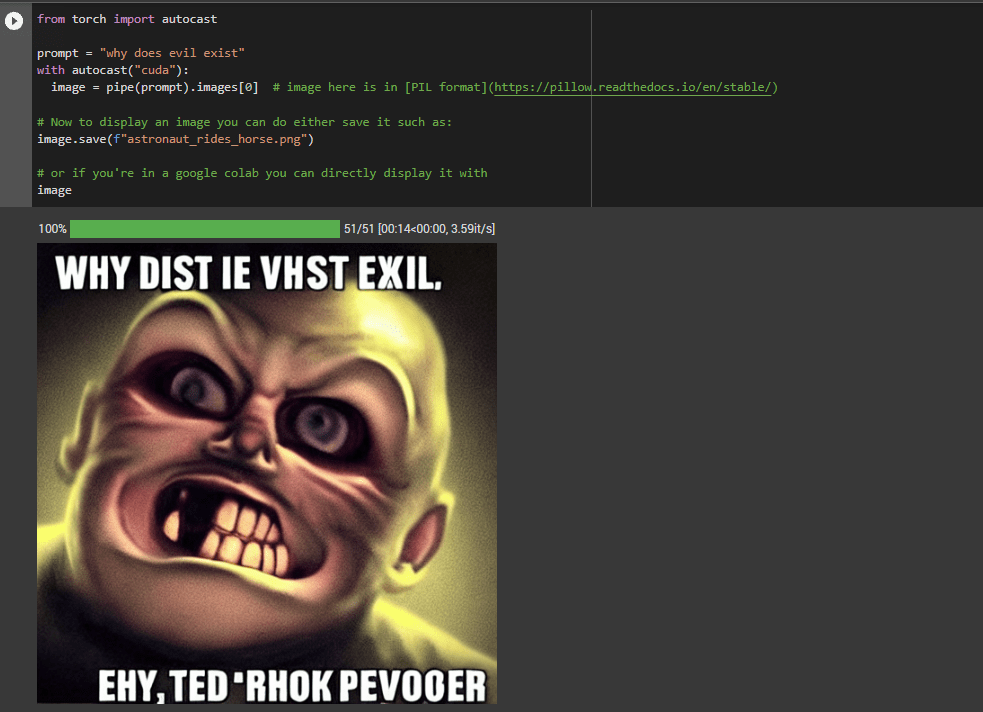

Playing around with Stable Diffusion for the past week has shown me that we truly are living in a new epoch of technology and art, and that the two are closer than ever before. I wanted to dive into the specifics of prompts as they apply to Stable Diffusion (SD). My initial idea was to use SD to “unravel” mysteries of the world, such as the true identity of Jack the Ripper or how eels reproduce. I sought to use artificial intelligence to give the unknown or the unseen a form, or to get a glimpse into how AI understood or perceived those topics. I went one step further by entering prompts that asked philosophical questions such as “why does evil exist?” I hoped to use the prompt not simply as a description of the scene I had in mind but as a conversational tool between the machine and me.

However, I soon noticed patterns in the images it was generating and realized that SD is not a tool meant to answer questions. Because the model was trained on countless existing images, and every word in my prompt corresponded to certain imagery and connotations, I was simply getting a reflection and amalgamation of all those images, not what the AI thinks of those subjects. How naïve of me to think that AI has the ability to discern those associations and form its own opinions on the questions I’m asking it. But maybe the ability to ask questions and receive answers from AI via images is on the horizon, if not already here.

Therefore, I proceeded to learn more about the capabilities of SD, and I have to admit: I dug myself out of the rabbit hole before I got too deep. The possibilities feel endless and the implications are breathtaking to imagine. For all we know, we are witnessing the end of traditional media, and even though I believe (and hope) that the human component will never cease to exist, there is no denying that this technology is going to take away a lot of people’s livelihoods. And while that is a somber truth to accept, the creative potential that AI-generated content is going to bring to our civilization as a whole is astounding to think about.

The amount of time and human labor that image generation models such as Stable Diffusion are going to save is beyond comprehension, and we are only witnessing the first stages of their existence. Artists are already harnessing these models to cut down the bulk of the workload in their creative processes, and the models’ capabilities are only going to keep expanding. In two or three years’ time, machine-learning models will no doubt become sophisticated enough to represent every imaginable detail we can think of.

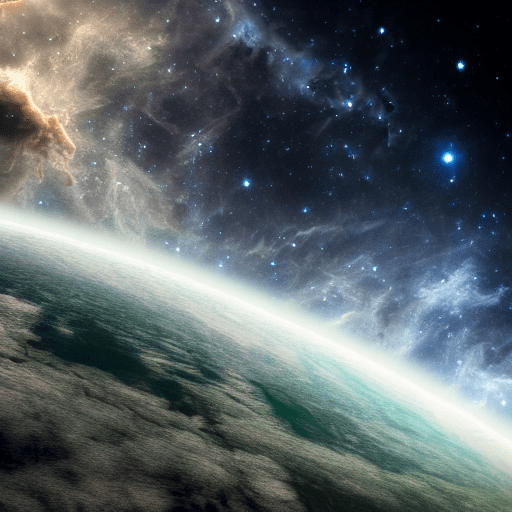

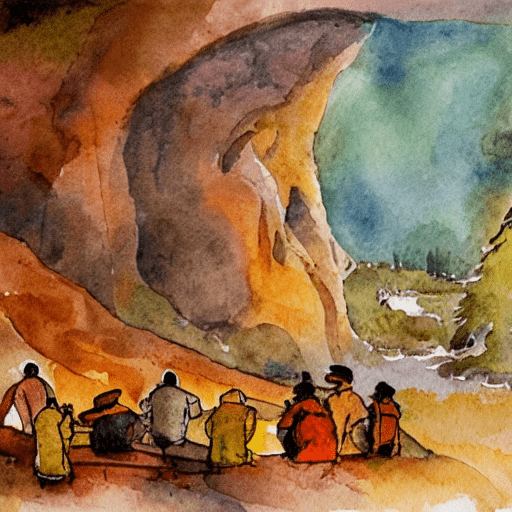

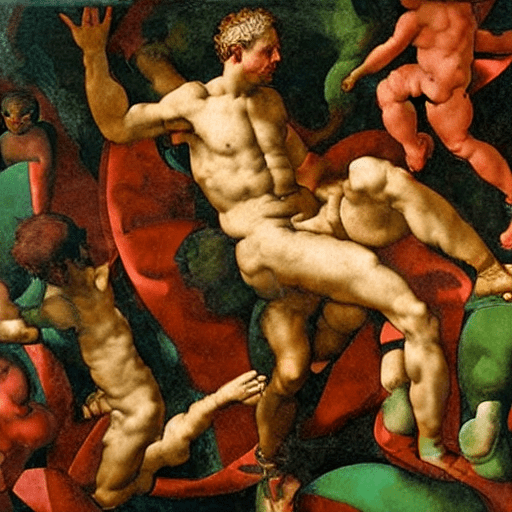

Having understood a bit more about what Stable Diffusion can achieve, I had to be realistic about what I could produce with the knowledge I have. I found it poetic that everything we have witnessed and achieved as the human race up to this point has been documented or photographed somewhere on the internet, and that the technology now exists to take all that information and synthesize it into anything our minds can imagine. Therefore, I wanted to make an homage to how far we have come as a species and how much more there is to come.

The process of creating this 7-image interpolation started with the idea of condensing all of human civilization into 7 distinct time periods, which I chose entirely based on what I currently consider to be the 7 most distinguishable, characteristic periods. Here are the prompts I entered:

    seeds_and_prompts = {
        0: {"seed": 3450, "prompt": "the beginning of life on earth, abstract, atmospheric, earth, universe"},
        1: {"seed": 3456, "prompt": "human civilization begins, water color, men in caves, huddled around fire"},
        2: {"seed": 5678, "prompt": "height of Renaissance period, art and science prosperity, Michelangelo, oil painting"},
        3: {"seed": 9999, "prompt": "industrialization, atmospheric, innovation, cities being built, changing landscape, abstract, bright sky"},
        4: {"seed": 2345, "prompt": "Swinging sixties, London, nightlife, bright lights, something dark lurking in background, happy woman and men in foreground, last night in soho, glamorous, colorful"},
        5: {"seed": 2838, "prompt": "21st century, high aerial view, large depth of field, technological innovation, urbanization, corporation, city life, Edward Hopper"},
        6: {"seed": 2345, "prompt": "cyberpunk future, optimistic, tall skyscrapers in background with urban forest, big divide between nature and civilization, grassfield in foreground, Hayao Miyazaki"},
        # Entry 7 repeats entry 0 (same seed and prompt)
        7: {"seed": 3450, "prompt": "the beginning of life on earth, abstract, atmospheric, earth, universe"},
    }
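As a rough illustration of how a dictionary like this can drive an interpolation, here is a minimal sketch in plain Python. It is not the code of the notebook used for this project, and it makes several assumptions: that each seed determines a starting noise vector, that in-between frames come from spherical interpolation (slerp) between the noise of neighboring keyframes, and that a tiny 16-number vector can stand in for the much larger noise a real model starts from. The names `keyframes`, `seeded_noise`, `slerp`, and `interpolation_frames` are hypothetical, and the prompts are left out for brevity.

```python
import math
import random

# Seeds copied from the dictionary above; prompts omitted for brevity.
keyframes = {
    0: {"seed": 3450}, 1: {"seed": 3456}, 2: {"seed": 5678}, 3: {"seed": 9999},
    4: {"seed": 2345}, 5: {"seed": 2838}, 6: {"seed": 2345}, 7: {"seed": 3450},
}

def seeded_noise(seed, size=16):
    """Return a Gaussian noise vector; the same seed always gives the same vector."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

def slerp(t, v0, v1):
    """Spherically interpolate between v0 (t=0) and v1 (t=1)."""
    dot = sum(a * b for a, b in zip(v0, v1))
    norm0 = math.sqrt(sum(a * a for a in v0))
    norm1 = math.sqrt(sum(b * b for b in v1))
    cos_theta = max(-1.0, min(1.0, dot / (norm0 * norm1)))
    theta = math.acos(cos_theta)
    sin_theta = math.sin(theta)
    if abs(sin_theta) < 1e-6:
        # Vectors are (anti)parallel: fall back to plain linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    w0 = math.sin((1 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]

def interpolation_frames(keyframes, steps_per_segment=4):
    """List (start_index, end_index, t, noise) for every in-between frame."""
    indices = sorted(keyframes)
    frames = []
    for start, end in zip(indices, indices[1:]):
        n0 = seeded_noise(keyframes[start]["seed"])
        n1 = seeded_noise(keyframes[end]["seed"])
        for step in range(steps_per_segment):
            t = step / steps_per_segment
            frames.append((start, end, t, slerp(t, n0, n1)))
    return frames

frames = interpolation_frames(keyframes, steps_per_segment=4)
print(len(frames), "frames across", len(keyframes) - 1, "segments")
```

In this sketch, each segment starts exactly at its keyframe's noise (`t = 0`) and stops one step short of the next keyframe, so no frame is duplicated where two segments meet.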

And these are the 7 images generated:

Upon entering the prompts, four of the seven images turned out 95% how I wanted them to, or at least aesthetically acceptable enough that I was satisfied with them. However, the three images starting with the center one weren’t quite pleasing to the eye, and I think it was partly because I hadn’t specified an art style or artist. As a result, the AI simply composed a rather stock-looking image, sometimes with unwanted generated text as well. After I tweaked the input words and their order and reduced sentences to simpler, single words, the elements that appeared were set in place. Then it was just a matter of playing around with the seed number to get the best result.
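The trial-and-error loop described here (short keyword phrases, an explicit style, then several seeds) can be laid out as a small grid of runs to try. The sketch below only builds that list of seed and prompt pairs and does not generate any images. The helper names `build_prompt` and `trial_grid`, the style options, and every seed other than 9999 are hypothetical choices made for illustration.

```python
from itertools import product

def build_prompt(subject_terms, style_terms):
    """Join short keyword phrases into one comma-separated prompt, subject first."""
    return ", ".join(list(subject_terms) + list(style_terms))

def trial_grid(subject_terms, style_options, seeds):
    """Cross every style option with every seed, in the same format as the dictionary above."""
    prompts = [build_prompt(subject_terms, style) for style in style_options]
    return [{"seed": seed, "prompt": prompt} for prompt, seed in product(prompts, seeds)]

subject = ["industrialization", "cities being built", "changing landscape"]
styles = [["atmospheric", "abstract"], ["oil painting"]]  # hypothetical style options
seeds = [9999, 1234, 4321]  # 9999 is from the post; the others are arbitrary

grid = trial_grid(subject, styles, seeds)
for trial in grid:
    print(trial["seed"], "|", trial["prompt"])
```

Keeping the seed next to each prompt means a result that looks good can be regenerated later from the same pair.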

The interpolation took quite a while to run even at a low resolution, so I imagine it will take significantly more processing power to generate quality output efficiently using SD. My biggest takeaway from this assignment was sheer awe at SD’s applications and the ways creative people are already starting to use SD in their own work. It will be fascinating to see what kind of workflows we can achieve over the next year, and I cannot wait.