Project Title: Colorful Land

Designer: Yuxing Li

Conception and Design

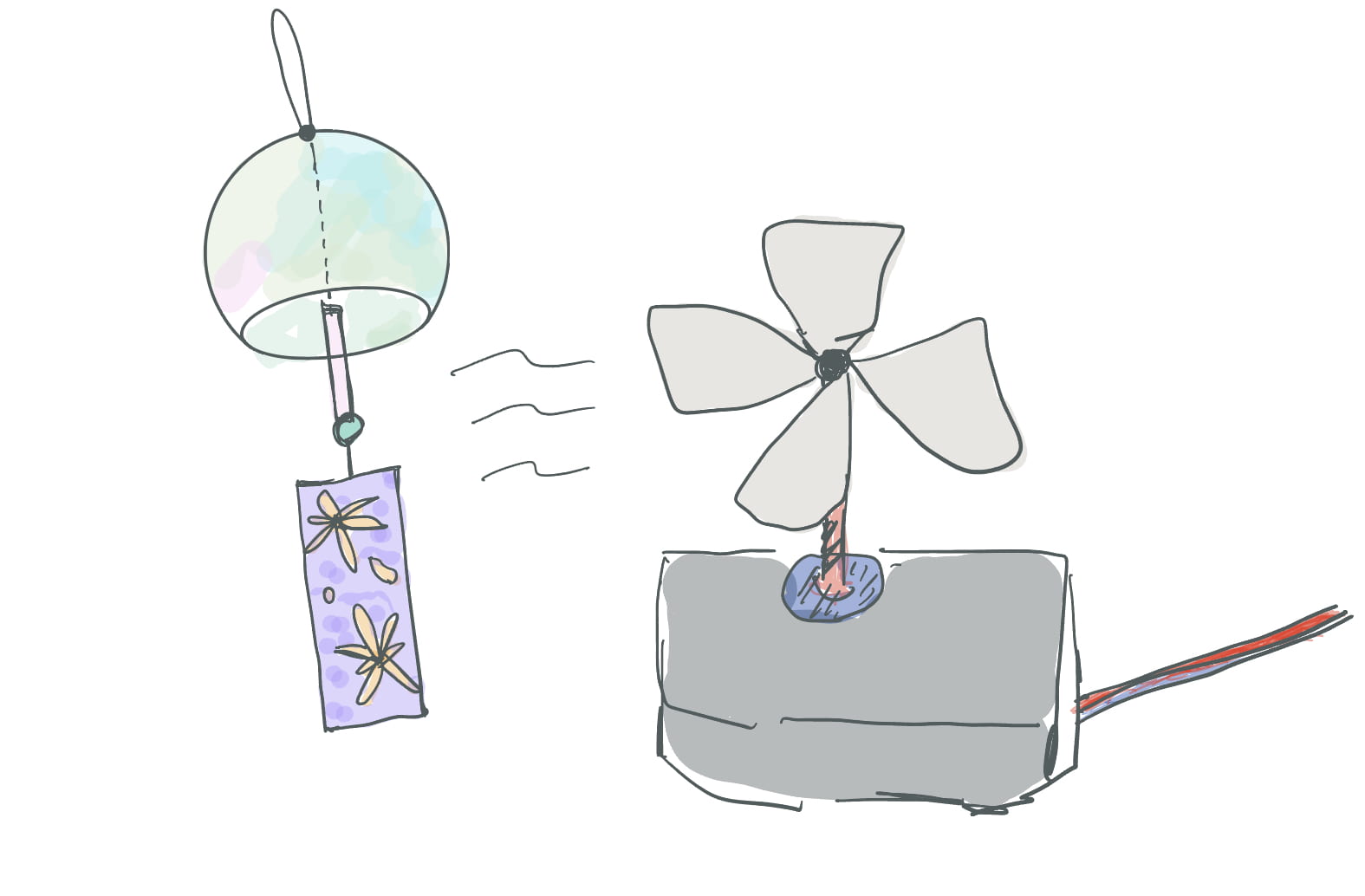

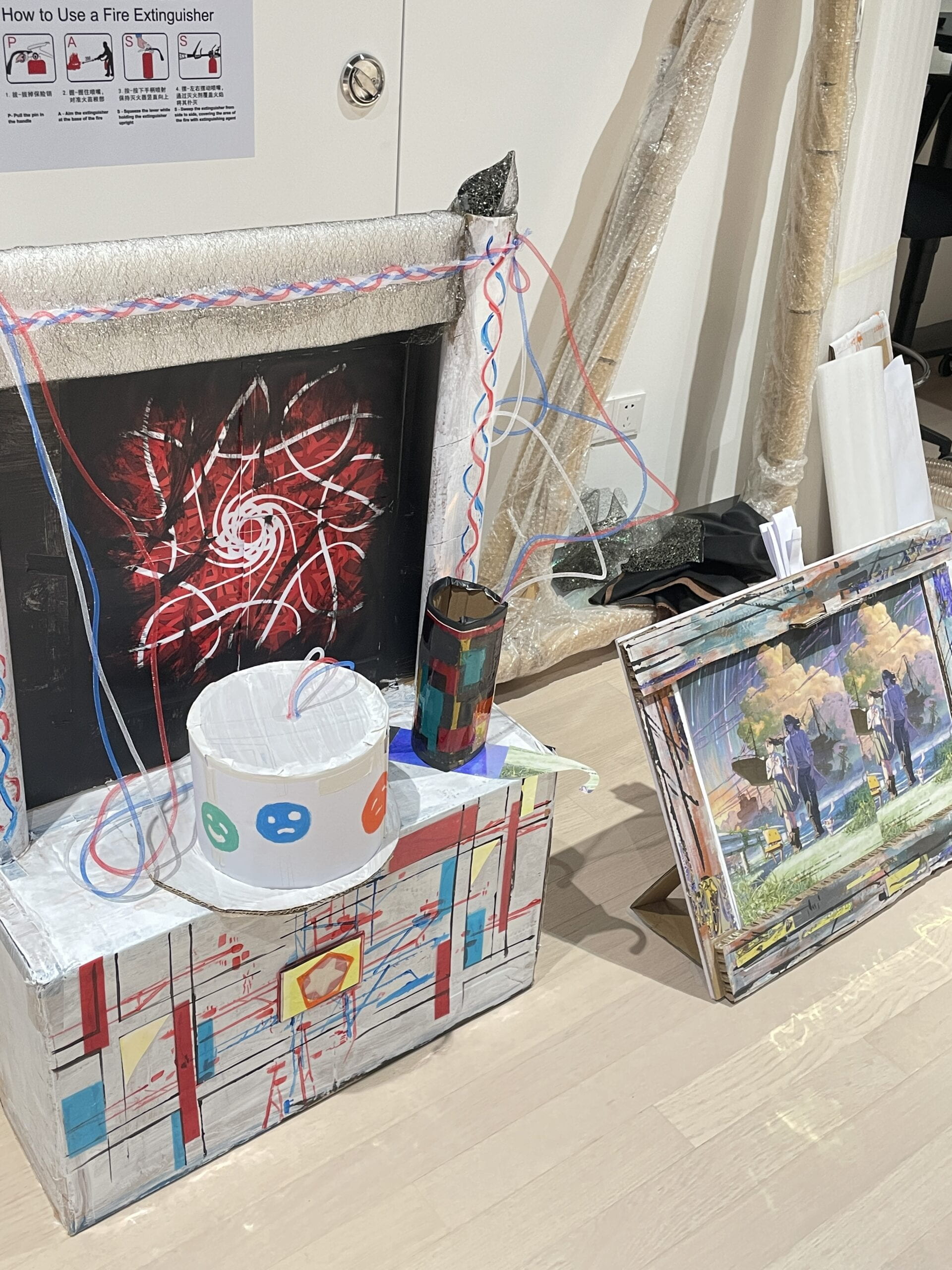

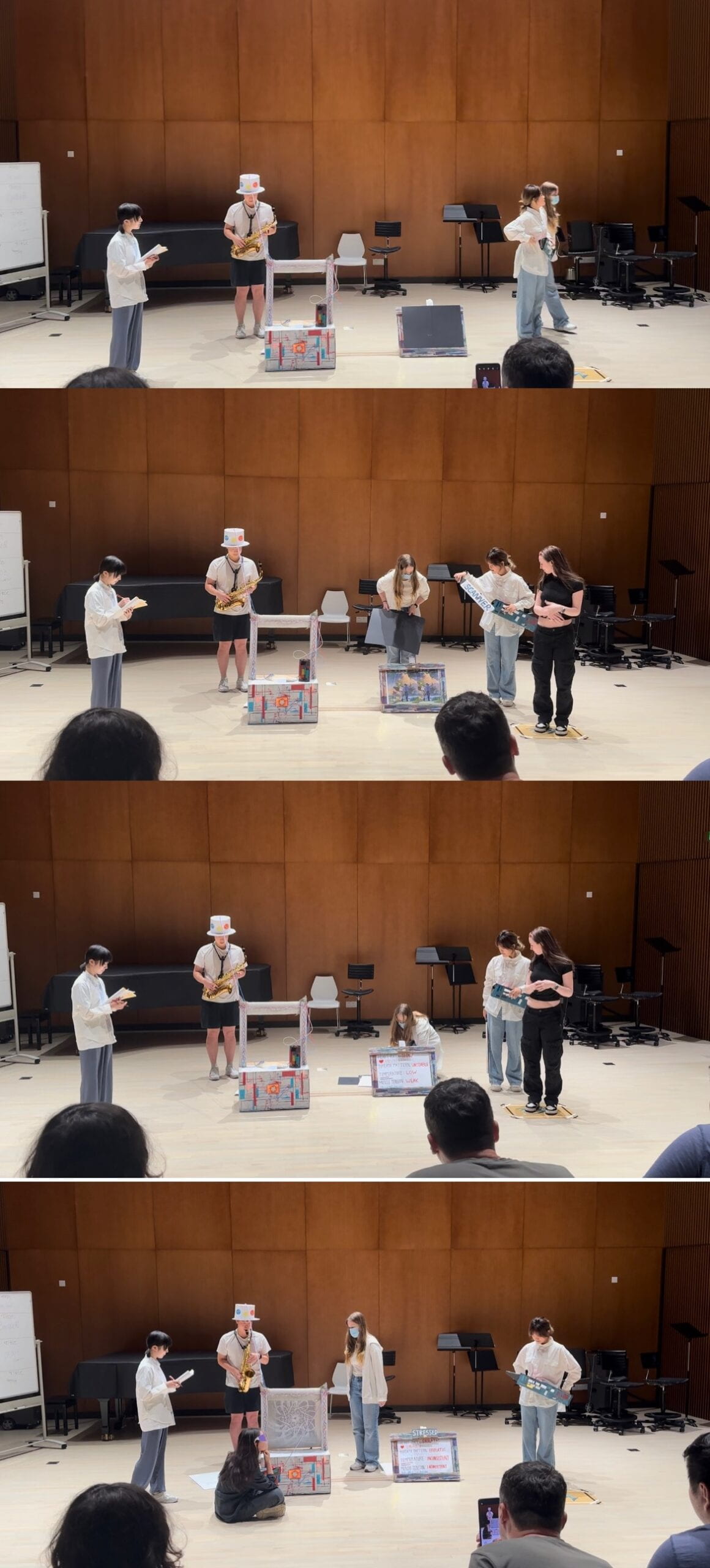

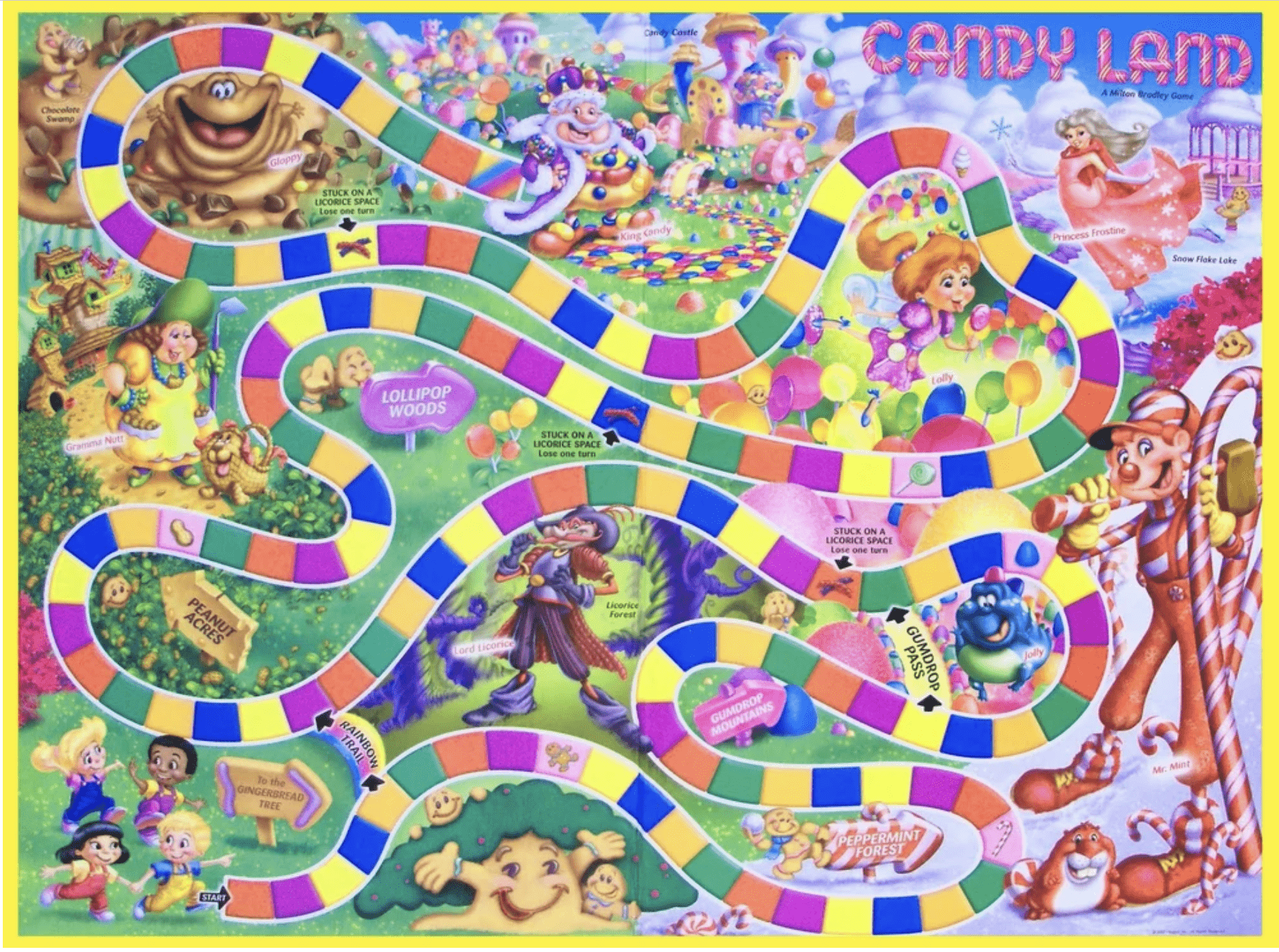

The project is based on an existing board game, Candy Land, a multiplayer game for 2 to 4 players. I set out to add more interactive elements to make it more playable and engaging, and I designed specific mechanics such as LED lights, music, popping ice cream, and candy falling from the sky.

The primary objective of this project is to give students a chance to unwind and find respite from their hectic lives through play. By fitting a short, carefully designed game into their routine, I aim to offer a momentary escape from the pressures and demands of academic and personal commitments. The project recognizes that balance and well-being matter in a student's life, and that leisure activities can contribute to overall happiness and productivity.

Through engaging gameplay and captivating features, the project aspires to create an immersive and enjoyable experience for students. By offering a brief respite from their daily responsibilities, the game serves as a means of relaxation, fostering a sense of rejuvenation and mental clarity. It provides students with an avenue to temporarily detach from the stresses of their studies, allowing them to recharge and return to their tasks with renewed focus and energy.

In summary, the primary aim of this project is to prioritize the well-being of students by providing them with a means to momentarily escape their busy lives through a thoughtfully designed game. By offering a source of relaxation, mental rejuvenation, and skill development, the project seeks to contribute positively to the overall academic and personal journeys of the students involved.

Fabrication and Production

Before the user testing:

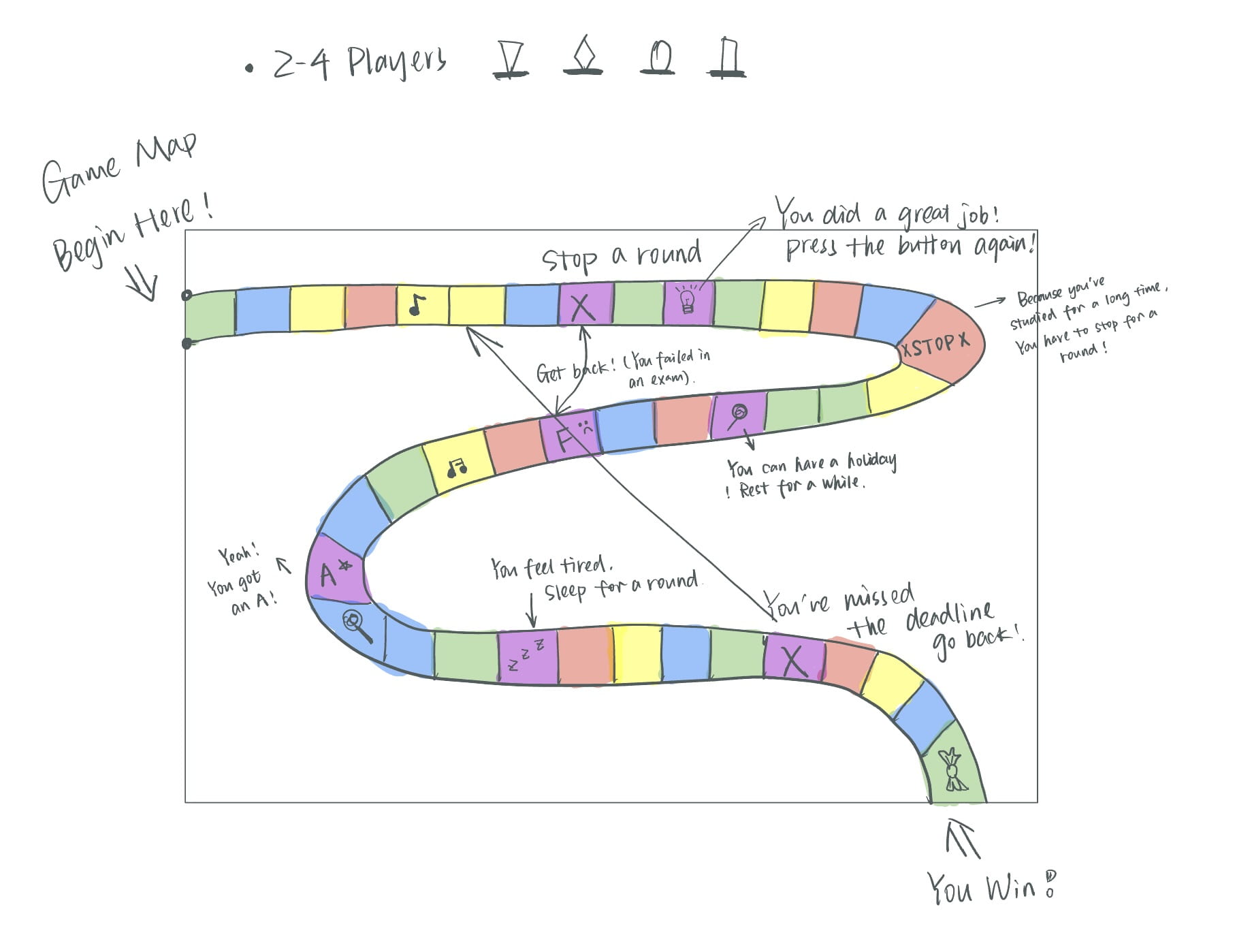

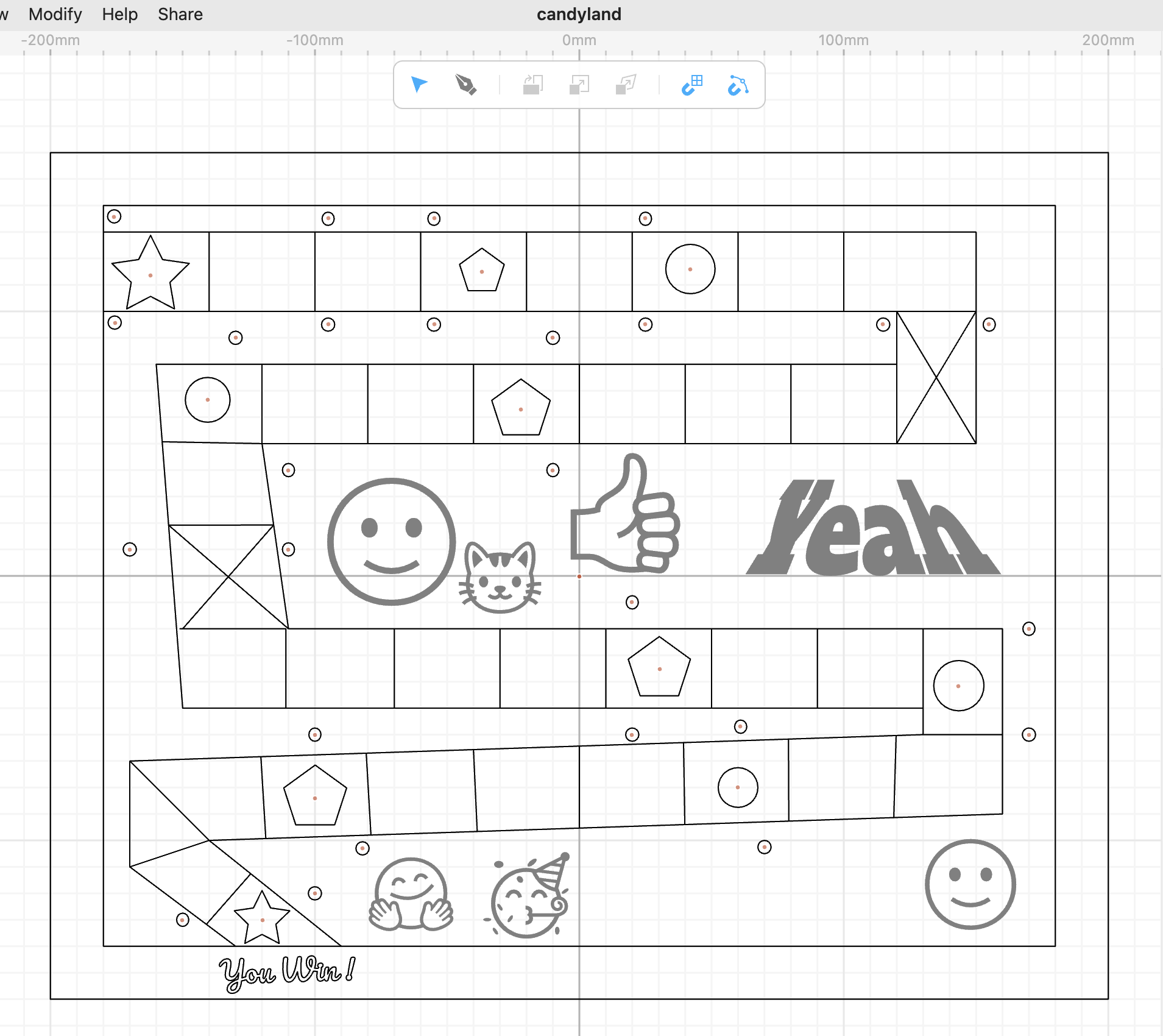

At the beginning, I sketched the board by hand. This hands-on approach let me visualize the game's initial framework and laid the foundation for its development. My initial idea was to use students doing assignments as the game's backstory, linking the interactive parts of the game to assignment grades and learning progress in the real world.

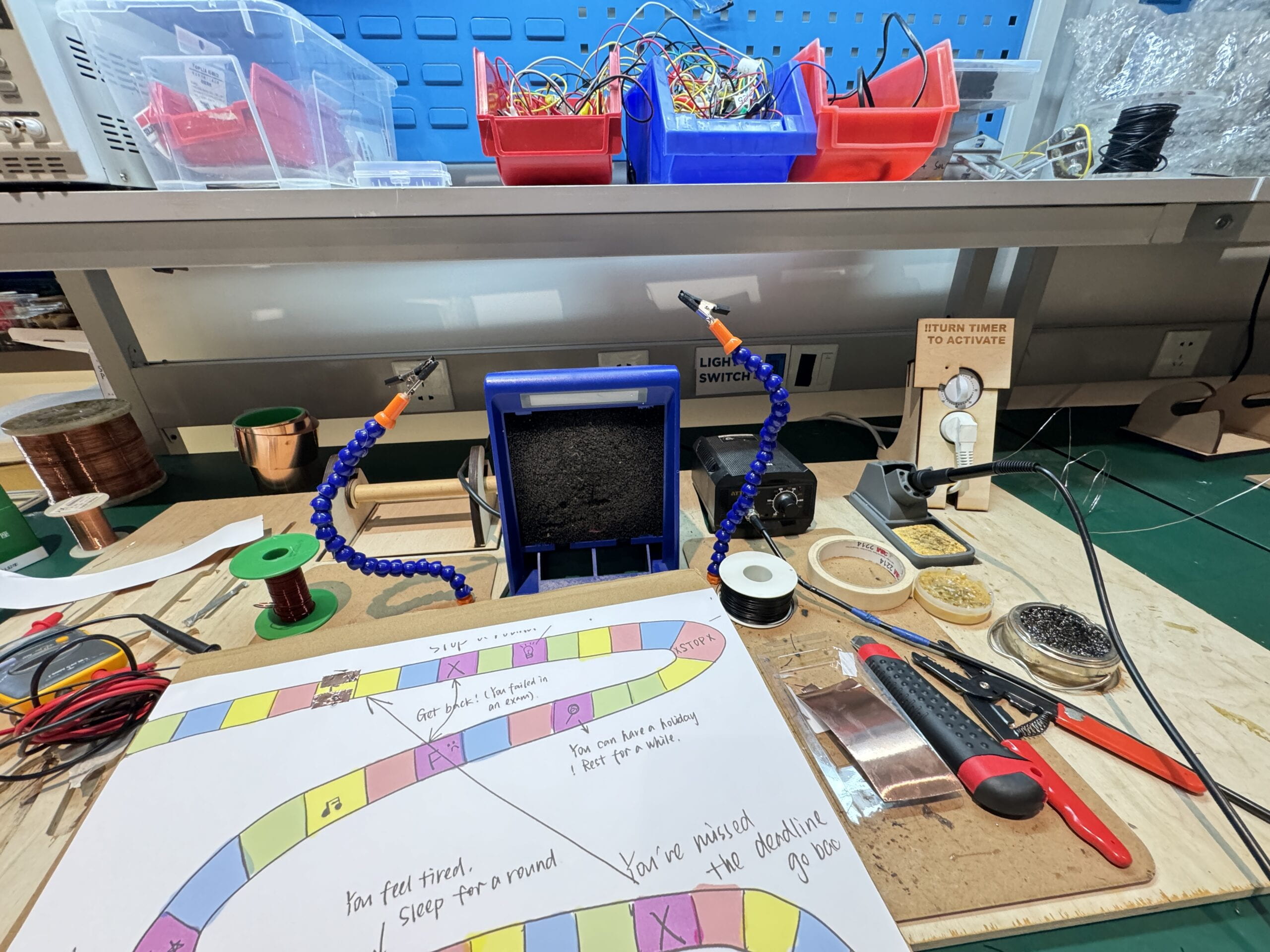

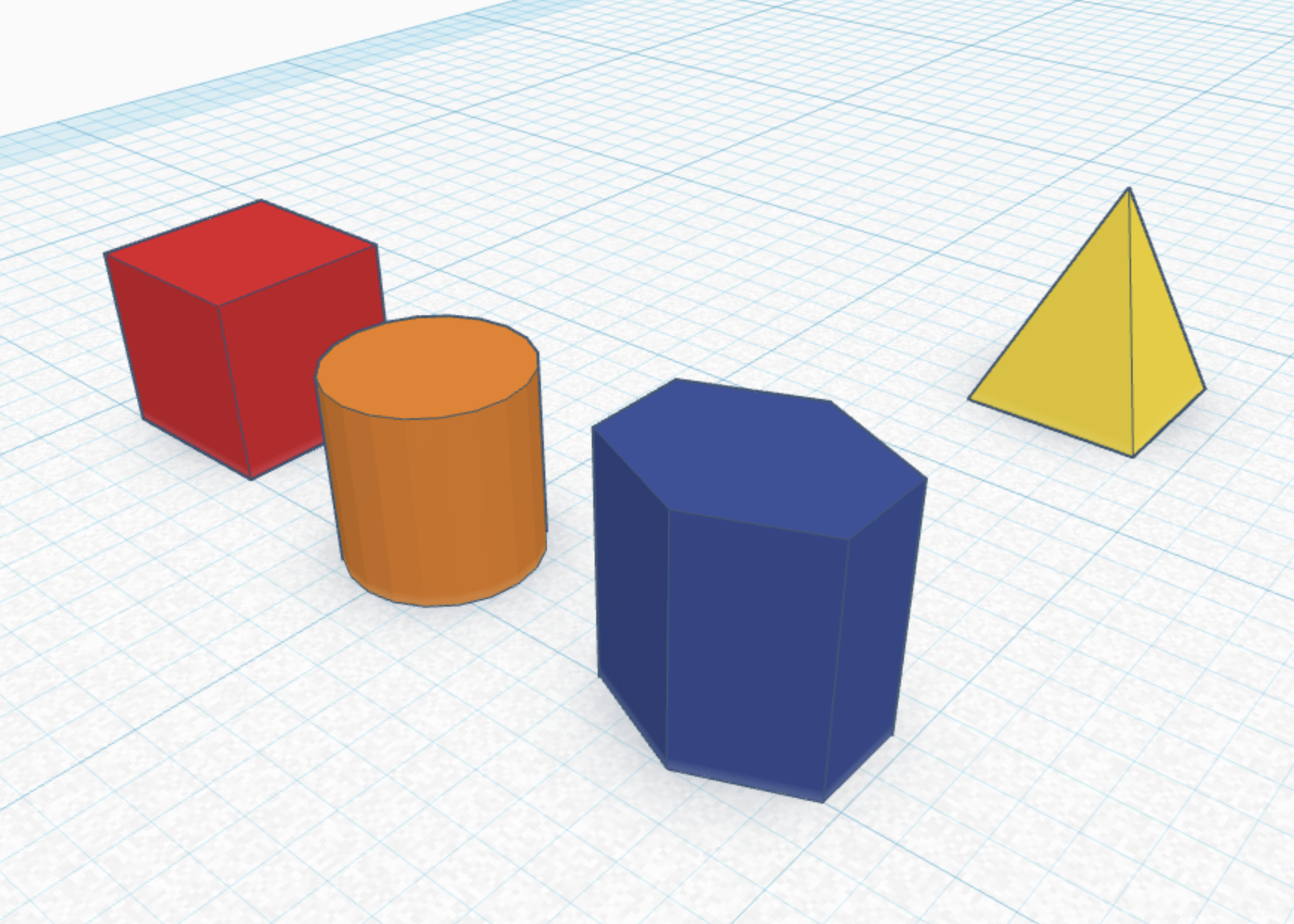

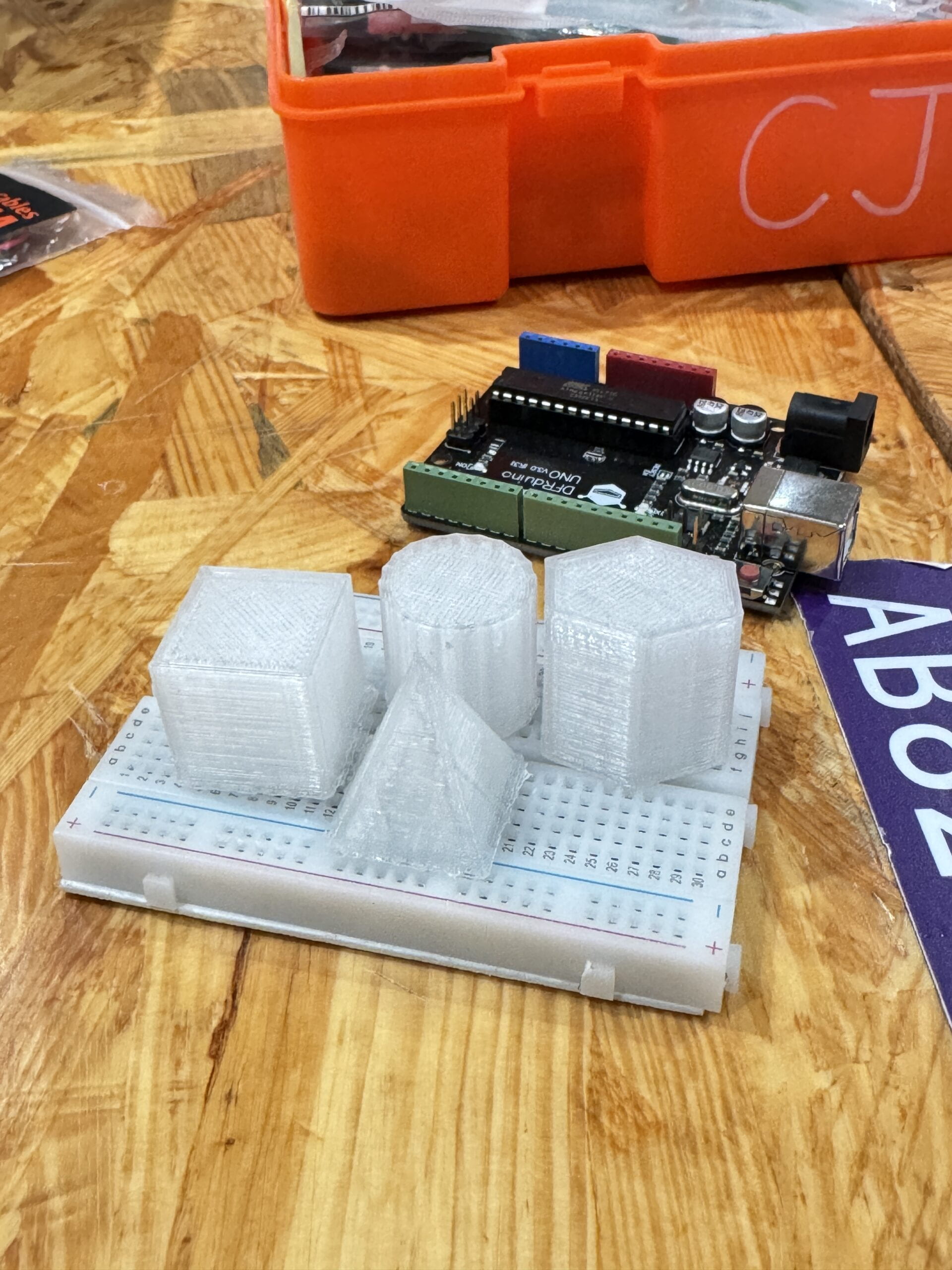

For the user testing, I printed out the hand-drawn board and soldered it, connected the pieces to the board circuit with metal tape, got the code working, and played a video to tell the player what to do next. I also 3D-printed the game pieces and decorations.

After the user testing:

I received suggestions to add more interactive features and to improve the project's appearance, so I redesigned the board and laser-cut it to make it look more like a real board game, and I re-soldered the wires to the metal tape.

I also decided to add an LED strip. When a piece completes the circuit, the pixels change from their original bright rainbow colors to scrolling colors.

Processing:

Once the physical board was finished, I began writing the code. First, I wrote a Processing sketch that links the game pieces to specific grid squares: when a piece connects on a square, the corresponding video appears on the computer screen, telling the players to move forward, move back, or stay for a round. I hand-painted 11 different videos and matched each one to a different pin.
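The grid-to-video lookup can be sketched outside Processing in plain Java. This assumes, as in the Processing sketch in the Code section, that the Arduino sends one line of 12 comma-separated 0/1 values per reading and that indices 2 to 11 select movie1 to movie10; the class `GridVideoMapper` and its methods are hypothetical names used only for illustration.

```java
import java.util.Arrays;

/** Hypothetical helper: turns one serial line into the number of the movie to show. */
class GridVideoMapper {
    static final int NUM_VALUES = 12;      // values per line, as in the Processing sketch
    static final int FIRST_GRID_INDEX = 2; // assumed: index 2 -> movie1, index 11 -> movie10

    /**
     * Parses a line such as "0,0,1,0,0,0,0,0,0,0,0,0".
     * Returns null for a malformed line so the caller can keep its previous values.
     */
    static int[] parseLine(String line) {
        if (line == null) return null;
        String[] parts = line.trim().split(",");
        if (parts.length != NUM_VALUES) return null;
        int[] values = new int[NUM_VALUES];
        for (int i = 0; i < NUM_VALUES; i++) {
            try {
                values[i] = Integer.parseInt(parts[i].trim());
            } catch (NumberFormatException e) {
                return null; // reject the whole line rather than guess
            }
        }
        return values;
    }

    /**
     * Returns the 1-based movie number to show, or 0 if no piece is on a grid square.
     * If several values are 1, the highest index wins, mirroring how the later
     * movie is drawn on top in the Processing sketch.
     */
    static int movieFor(int[] values) {
        int movie = 0;
        for (int i = FIRST_GRID_INDEX; i < values.length; i++) {
            if (values[i] == 1) {
                movie = i - FIRST_GRID_INDEX + 1;
            }
        }
        return movie;
    }

    public static void main(String[] args) {
        int[] values = parseLine("0,0,0,0,1,0,0,0,0,0,0,0\n");
        System.out.println(Arrays.toString(values));
        System.out.println("movie" + movieFor(values)); // prints "movie3"
    }
}
```

Unlike Processing's `int()`, which turns an unparseable string into 0, this version drops a malformed line entirely, so a garbled serial read cannot switch videos.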

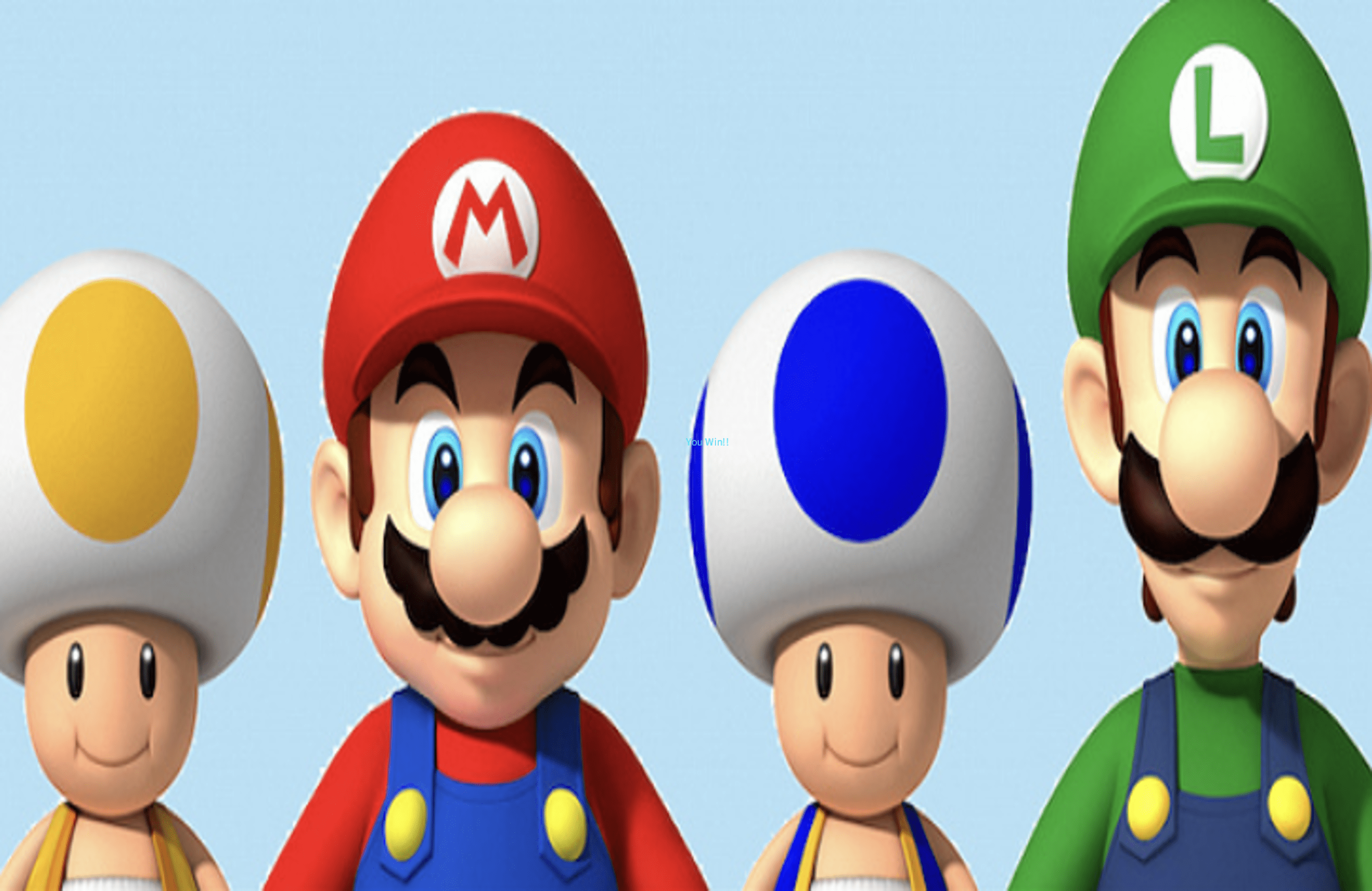

I also chose a Mario theme for the game's victory screen.

On the Arduino side, I programmed the LED strip so that its pixels scroll through colors each time a piece connects.
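The Arduino code is not included in this post, so the following is only a sketch of one common way to produce a scrolling rainbow, written in Java for illustration: each pixel gets a hue based on its position plus an offset that advances every frame. The class `RainbowStrip` and the 30-pixel strip length are assumptions; on the Arduino, the same arithmetic would feed whatever call the LED library uses to set a pixel's color.

```java
import java.awt.Color;

/** Hypothetical model of a "rolling rainbow" LED strip. */
class RainbowStrip {
    private final int numPixels;
    private int offset = 0; // advances by one step per frame

    RainbowStrip(int numPixels) {
        this.numPixels = numPixels;
    }

    /** Hue in [0, 1) for pixel i: the color wheel is spread across the strip, shifted by the offset. */
    float hueFor(int i) {
        return ((i + offset) % numPixels) / (float) numPixels;
    }

    /** Packed 0xRRGGBB color for pixel i at full saturation and brightness. */
    int colorFor(int i) {
        return Color.HSBtoRGB(hueFor(i), 1.0f, 1.0f) & 0xFFFFFF;
    }

    /** Shift the rainbow by one pixel; call once per frame. */
    void step() {
        offset = (offset + 1) % numPixels;
    }

    public static void main(String[] args) {
        RainbowStrip strip = new RainbowStrip(30);
        for (int frame = 0; frame < 3; frame++) {
            System.out.printf("frame %d: pixel0=#%06X%n", frame, strip.colorFor(0));
            strip.step();
        }
    }
}
```

Because the offset wraps around modulo the strip length, the pattern repeats seamlessly after `numPixels` frames.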

Final look:

Conclusions:

During the final presentation, the LED strip in my project did not light up properly. After checking, I found it was a connection problem, but because there were so many wires, I could not find out which one was disconnected. The videos also often froze during the presentation because of the large number of videos. These are the two areas I need to fix and improve.
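One possible mitigation for the frozen videos, assuming they stalled because all 11 movies are looped at the same time (as the `setup()` function in the Processing code does), is to keep only the selected movie playing and pause the rest. The Java sketch below models just that bookkeeping; the `Player` interface, `FakePlayer`, and `VideoSwitcher` are hypothetical stand-ins, and in the real sketch the calls would go to Processing's `Movie.loop()` and `Movie.pause()` instead.

```java
import java.util.ArrayList;
import java.util.List;

/** Keeps at most one video playing at a time (hypothetical helper). */
class VideoSwitcher {
    private final List<Player> players;
    private int current = -1; // index of the playing video, -1 if none

    VideoSwitcher(List<Player> players) {
        this.players = players;
    }

    /** Switch to video `index`, or -1 for none; does nothing if it is already selected. */
    void select(int index) {
        if (index == current) return;
        if (current >= 0) players.get(current).pause(); // stop decoding the old video
        if (index >= 0) players.get(index).loop();      // start only the new one
        current = index;
    }

    int current() {
        return current;
    }

    public static void main(String[] args) {
        List<Player> players = new ArrayList<>();
        for (int i = 0; i < 11; i++) players.add(new FakePlayer());
        VideoSwitcher switcher = new VideoSwitcher(players);
        switcher.select(2); // a piece lands on the square for movie3
        switcher.select(5); // movie3 is paused, movie6 starts
        int playing = 0;
        for (Player p : players) if (((FakePlayer) p).playing) playing++;
        System.out.println("playing: " + playing); // prints "playing: 1"
    }
}

/** Hypothetical stand-in for a video player such as Processing's Movie. */
interface Player {
    void loop();
    void pause();
}

/** Records state so the switching behaviour can be checked without real videos. */
class FakePlayer implements Player {
    boolean playing = false;
    public void loop() { playing = true; }
    public void pause() { playing = false; }
}
```

Only one video is decoded at any moment, so the load no longer grows with the number of videos on the board.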

I should also enrich the narrative of my board game, for example by tying the homework backstory more closely to the gameplay: landing on some squares could mean failing an exam, while landing on others could mean getting an A on the final exam.

Code:

processing:

```processing
import processing.serial.*;
import processing.video.*;

Serial serialPort;

int NUM_OF_VALUES_FROM_ARDUINO = 12; /* CHANGE THIS ACCORDING TO YOUR PROJECT */

/* This array stores values from Arduino */
int arduino_values[] = new int[NUM_OF_VALUES_FROM_ARDUINO];

/* movies[0] holds movie1.mov, movies[1] holds movie2.mov, and so on up to movie11.mov */
Movie[] movies = new Movie[11];

PImage img;
color[] colors = new color[5];
int option;
boolean isBackgroundVisible = true;

void setup() {
  // fullScreen() replaces size(); Processing does not allow both in one sketch
  fullScreen();
  printArray(Serial.list());

  // Load the victory image once, instead of on every frame in win()
  img = loadImage("win.png");

  colors[0] = color(#4FE561);
  colors[1] = color(#3AA2F0);
  colors[2] = color(#EFF03A);
  colors[3] = color(#F04333);
  colors[4] = color(#A022F0);

  frameRate(30);

  // Load movie1.mov ... movie11.mov and start each one looping
  for (int i = 0; i < movies.length; i++) {
    movies[i] = new Movie(this, "movie" + (i + 1) + ".mov");
    movies[i].loop();
  }

  serialPort = new Serial(this, "/dev/cu.usbmodem101", 9600);
}

void draw() {
  noStroke();

  // While 'c' is held down, fill the screen with a random color from the palette
  if (keyPressed && key == 'c') {
    fill(colors[int(random(0, 5))]);
    rect(0, 0, width, height);
  }

  stroke(255);
  fill(255);

  getSerialData();

  // Values 2..11 from the Arduino show movie1..movie10 (movie11 is loaded but not shown).
  // If several values are 1, the later movie is drawn on top.
  for (int i = 2; i < NUM_OF_VALUES_FROM_ARDUINO; i++) {
    if (arduino_values[i] == 1) {
      Movie m = movies[i - 2];
      if (m.available()) {
        m.read();
      }
      // Draw the frame full screen, rotated 180 degrees
      pushMatrix();
      scale(-1, -1);
      image(m, -width, -height, width, height);
      popMatrix();
    }
  }

  // The square wired to value 10 (movie9) also triggers the victory screen, which then stays on
  if (arduino_values[10] == 1) {
    option = 10;
  }

  if (option == 10) {
    win();
  }
}

void getSerialData() {
  while (serialPort.available() > 0) {
    String in = serialPort.readStringUntil( 10 ); // 10 = '\n' Linefeed in ASCII
    if (in != null) {
      print("From Arduino: " + in);
      String[] serialInArray = split(trim(in), ",");
      if (serialInArray.length == NUM_OF_VALUES_FROM_ARDUINO) {
        for (int i=0; i<serialInArray.length; i++) {
          arduino_values[i] = int(serialInArray[i]);
        }
      }
    }
  }
}

void win() {
  // Draw the victory image as the full-screen background
  image(img, 0, 0, width, height);

  // Alternate the text color between yellow and cyan
  if (isBackgroundVisible) {
    fill(#FAF312);
  } else {
    fill(#12FAEC);
  }

  // Draw the text in the center of the screen
  textAlign(CENTER, CENTER);
  text("You Win!!", width/2, height/2);

  // Toggle the color every 30 frames (about once per second at 30 fps) so the text flashes
  if (frameCount % 30 == 0) {
    isBackgroundVisible = !isBackgroundVisible;
  }
}

void mousePressed() {
  // Close the window when the mouse is clicked
  exit();
}
```