Concept and Design

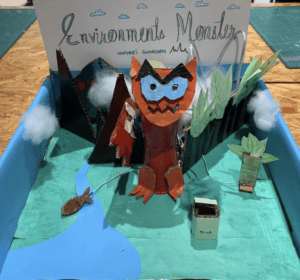

Description: The original concept of a “pet alien” was largely preserved while realizing and building the final artifact, “Alien Allure.” The interactive art project involves the user interacting with an alien inside its containment chamber. Because the alien is contained, the possible interactions are limited to influencing its environment: feeding the alien with the push of a button, or changing the internal temperature of its environment with a sliding potentiometer. The project is interactive because the alien reacts to the changes you make to its environment. On the computer window behind the alien enclosure, a webcam feed displays the “vision” of the alien, allowing you to see your effect on the alien’s internal world. When the alien is fed, burgers bounce around the screen; when the temperature is changed from hot to cold, its vision tints from red to blue. This is accompanied by sound played through Processing. The alien chirps with joy when fed, but shivers in the cold. To increase the liveliness of the alien in the enclosure, a distance sensor is integrated into the interface, allowing the alien to sense your presence. When you are near, the alien shakes in excitement; when you are far, he becomes docile and moves minimally. With the evident button and sliding potentiometer, as well as the feedback from getting close to the enclosure, we believed people would be enticed to explore the functionality of the enclosure without prompting, so that they could work out the story of the alien for themselves. With a simple enclosure, a name tag, and sensors, we hoped that the ways of interacting with the alien would be apparent and the reactions immediate and obvious.

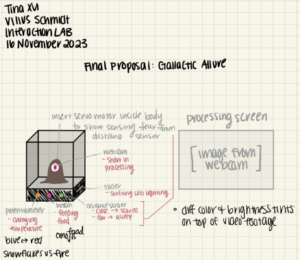

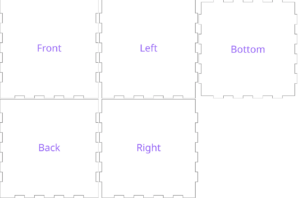

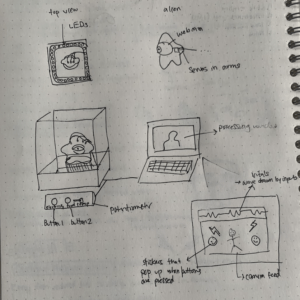

Image of the original proposal.

Influences: When thinking of an idea for this project, digital pets such as Tamagotchi were an inspiration. In the feedback on our project, Tamagotchi was brought up as a reference. I am glad that the inspiration came through even though our “digital pet” was a physical alien object. Like a Tamagotchi, the user is only allowed simple interactions like feeding, playing, and cleaning; however, the reactions and interactions make it worthwhile and enticing. This was an important aspect of the design process. The alien is happy when it is hot, it shivers when it is cold, and it shakes when you are near. There is a blend of movement, visual change, and sound.

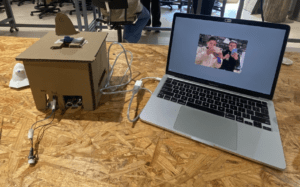

User Testing: Before user testing, the alien was a cardboard prototype with the computer off to the side, a rotary (non-sliding) potentiometer for temperature, and no sound.

Some of the most important additions to the final project (other than upgrading from cardboard to laser-cut acrylic and a silicone alien) were the aforementioned things missing from the prototype. Adding sound had not crossed my mind during development, but it did well at bringing life to the alien, as well as giving concrete feedback that “something was happening.” While many people were cued in to the sensors, many were confused about what was happening. Why did burgers appear on the screen when the button was pressed? Why does the screen have a camera? What is the point of the color changes? From these questions I realized that a failure in presentation was creating confusion. The idea that the alien was in an enclosure, and that the screen was the POV of the alien, was lost. A simple fix was creating the lid for the alien enclosure and putting the computer behind the enclosure, so that it is evident that whatever is happening on the screen is directly related to the alien’s environment. This change was suggested multiple times. Finally, the sliding potentiometer became the temperature-modulating mechanism, as it was easier to read. While the screen and the sound were quite effective in bringing the illusion to life, the sliding temperature control was still hard to read. As the computer monitor was behind two layers of clear acrylic, people did not register the change in color on the monitor when the temperature slider was moved. The location of the potentiometer may have been a bigger issue: because it was on the side, people could not easily see that it modulated temperature.

Fabrication and Production

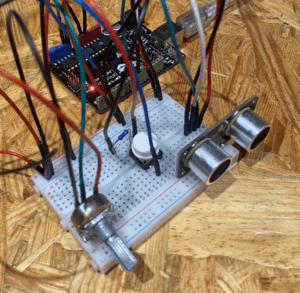

Key Step 1: Sensor Test

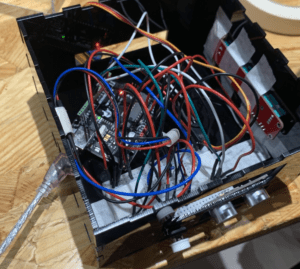

The first step in creating the project was to determine the sensors we were going to use and the values they would produce. We chose a potentiometer because it changes values in an analog manner like a thermostat, which was to become its purpose. Another sensor was a button; this yes-or-no input would work perfectly for feeding the alien. A distance sensor was also used to add interaction to the physical body of the alien: it controls a servo motor that rotates back and forth. Each of these sensors is the most intuitive for its interaction type. Sliders and buttons are commonly seen on tech apparatus.

An Arduino sketch was then written to read the values from each of the sensors.

Key Step 2: Alien Vision

The Processing file was then created separately to test the on-screen effects that would constitute the “alien’s vision.” The two interactions I wanted shown on the screen were the change in temperature and the feeding. To do this, a sketch was created that imports the Processing video library and, in the draw function, plays the footage captured from the webcam. The window was set to the size of the webcam footage, as the footage did not cover the whole screen. To generate the tint effect, a tint function was placed before the image(cam, 0, 0) call to tint the color of the video footage. In the tint function, the RGB values became functions of the mouse position, so that the mouse position affected the color of the tint. For the red tint for hot, the blue and green were diminished as the mouse approached x=0; for the cold tint, red was diminished as x approached its maximum. To implement feeding, arrays were created to generate burgers, with values set for the initial position and the initial speed in the x and y directions. The burgers were then set to bounce upon reaching the boundaries of the screen. The initial intention was that each button press would increase the number of burgers in the array; however, this was beyond my ability, so instead the button state controls whether the array is drawn. This was done with a conditional: when the mouse was pressed, the array of 20 burgers was drawn, bouncing off the edges of the screen by reversing their velocities.
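The tint arithmetic described above can be sketched in plain Java, which is what Processing sketches are built on. This is only an illustration: `TintDemo`, `map`, and `tintFor` are hypothetical names, the `map` helper mirrors the linear re-mapping that Processing's `map()` performs, and the 100 to 255 channel range is borrowed from the `tint()` call in the final listing.

```java
class TintDemo {
    // Linear re-mapping from one range to another (same arithmetic as Processing's map()).
    static float map(float v, float inLo, float inHi, float outLo, float outHi) {
        return outLo + (outHi - outLo) * ((v - inLo) / (inHi - inLo));
    }

    // Returns {r, g, b} for a control value in 0..1023.
    // Low values keep red high and dim green/blue (hot);
    // high values dim red and keep green/blue high (cold).
    static int[] tintFor(int control) {
        int r = Math.round(map(control, 1023, 0, 100, 255));
        int gb = Math.round(map(control, 0, 1023, 100, 255));
        return new int[] { r, gb, gb };
    }

    public static void main(String[] args) {
        for (int v : new int[] { 0, 512, 1023 }) {
            int[] c = tintFor(v);
            System.out.println(v + " -> " + c[0] + "," + c[1] + "," + c[2]);
        }
    }
}
```

In a Processing sketch the three channel values would be passed to `tint()` before drawing the camera image; the control value could come from `mouseX` during testing or from a sensor reading later.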

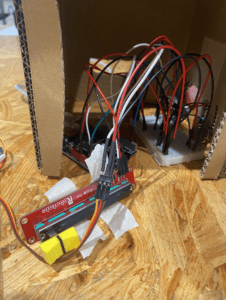
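For the feeding effect in Key Step 2, one possible way to get closer to the original intention (one more burger per button press) is to store the burgers in a growable list instead of fixed-size arrays. The sketch below is an assumption-laden illustration, not what the final project does: `BurgerField`, `Burger`, and `update` are hypothetical names, and the speed range of -7 to 7 is taken from the final listing.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

class BurgerField {
    // One bouncing burger: position and velocity in pixels per frame.
    static class Burger {
        float x, y, vx, vy;
        Burger(float x, float y, float vx, float vy) {
            this.x = x; this.y = y; this.vx = vx; this.vy = vy;
        }
    }

    final int width, height;
    final List<Burger> burgers = new ArrayList<>();
    private final Random rng = new Random();
    private boolean wasPressed = false;

    BurgerField(int width, int height) {
        this.width = width;
        this.height = height;
    }

    // Call once per frame with the current button state.
    // A burger is added only on the released -> pressed transition,
    // so holding the button does not flood the screen.
    void update(boolean pressed) {
        if (pressed && !wasPressed) {
            burgers.add(new Burger(
                rng.nextFloat() * width, rng.nextFloat() * height,
                rng.nextFloat() * 14 - 7, rng.nextFloat() * 14 - 7));
        }
        wasPressed = pressed;
        for (Burger b : burgers) {
            b.x += b.vx;
            b.y += b.vy;
            // Reverse the velocity when a burger crosses an edge.
            if (b.x < 0 || b.x > width)  b.vx = -b.vx;
            if (b.y < 0 || b.y > height) b.vy = -b.vy;
        }
    }

    public static void main(String[] args) {
        BurgerField field = new BurgerField(640, 480);
        field.update(true);   // press: first burger appears
        field.update(false);  // release
        field.update(true);   // press again: second burger
        System.out.println("burgers on screen: " + field.burgers.size());
    }
}
```

In Processing, each `Burger` would be drawn with `image()` inside `draw()` after calling `update()` with the button state.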

Key Step 3: Putting it all together

The Arduino code was updated to import the servo motor library, and the servo motor was attached to the Arduino. Conditionals were then set based on the distance read by the distance sensor. There were three tiers: the distance at a maximum, the distance from “50” to “20,” and the distance from “20” to “0.” In each conditional the servo was set to sweep back and forth; the closer to the distance sensor, the faster the sweep (done by decreasing the delay) and the greater the angle swept. The Processing code was then copied and pasted into the serial communication AtoP Processing code in order to obtain the values from Arduino. Three values were to be received by the Processing code (one pot, one slide pot, and one button), so the code was set to receive three values from Arduino. We had difficulty figuring out how to send the values from Arduino to Processing. However, I was taught that by simply printing the Arduino values on the same line, Processing could read all three values. The Processing code was then modified to replace the mouse press for burgers with the digital value from the button, and the x coordinate controlling the tint was replaced by a mapping of the analog Arduino values. Finally, a cardboard box was created and a prototype of our alien (made of cardboard) was taped to the servo motor. The Arduino was placed under the box, the sensors were exposed, and the servo motor was stuck through the top so the alien could move.

This is a video of the project at the user testing stage.
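The "print the values on one line" idea can be illustrated from the receiving side. The sketch below is plain Java with hypothetical names (`SerialLineParser`, `parse`); it assumes, as the `getSerialData()` helper in the final listing does, that each line holds comma-separated integers and ends with a linefeed. Lines with the wrong number of fields, or with non-numeric text, are rejected rather than partially applied.

```java
class SerialLineParser {
    // Parses a line such as "1,512,1023\n" into an int array.
    // Returns null when the line is missing, has the wrong number
    // of fields, or contains something that is not an integer.
    static int[] parse(String line, int expected) {
        if (line == null) return null;
        String[] parts = line.trim().split(",");
        if (parts.length != expected) return null;
        int[] values = new int[expected];
        try {
            for (int i = 0; i < expected; i++) {
                values[i] = Integer.parseInt(parts[i].trim());
            }
        } catch (NumberFormatException e) {
            return null; // garbled line, e.g. a partial read at startup
        }
        return values;
    }

    public static void main(String[] args) {
        int[] v = parse("1,512,1023\n", 3);
        System.out.println(v[0] + " " + v[1] + " " + v[2]);
    }
}
```

Rejecting malformed lines matters in practice because the first line read after opening a serial port is often incomplete.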

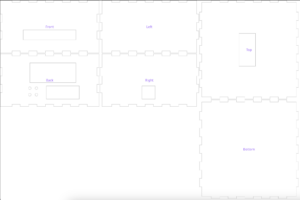

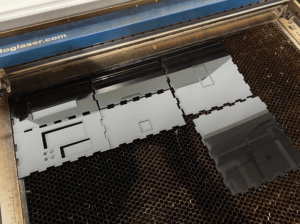

Key Step 4: Post User Testing

The first part of updating our project was laser cutting the enclosure out of acrylic instead of using a cardboard box. This was done in cuttle.xyz, starting from some of the templates for making boxes. These were modified to fit a 5×5 inch box that was half as tall, and then holes were added for the sensors to fit through, measured against the size of the sensors. This box was then cut out of black acrylic.

There was an issue with the hole for the slide pot being on the back instead of the side; however, this was easily remedied by laser cutting the same-sized hole in the side piece separately. Then a “glass bubble” was made out of clear acrylic to contain the alien.

This was then sealed with adhesive.


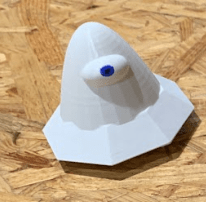

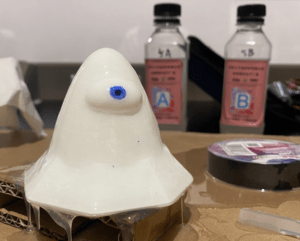

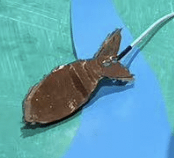

The alien mold was then 3D printed. This was done by creating a 3D model in Tinkercad, fusing a few basic shapes together. The 3D print is shown below.

The alien was then created by pouring polymer over the mold. When the two bottles, A and B, were mixed and painted onto the mold, the polymer solidified. Two more layers were poured on. Finally, after drying, the alien was painted and stuffed with cotton.

A small cardboard attachment was attached to the servo motor to move the alien puppet. One large issue in the code was that a long delay was being passed on to Processing, making the visuals unresponsive and janky. We learned that the delay used to control the servo motor was holding up the rest of the code. To change this, the servo sweeps were driven by sine functions instead of delays, which made the servo move more smoothly without any delay. The code for the angles that the servo turned was kept, so that the closer one was to the distance sensor, the more actively the alien would twist. As a final touch-up on the code, sound was added so that when the potentiometer value was at either 0 or 1023, one of two audio clips would be played: one for when the alien was hot and one for when the alien was cold. Another audio file was set to play when the button state indicated that the alien was being fed. Finally, an eye-hole effect was created for the screen by importing a black cut-out PNG of an eye hole and drawing it at the very bottom of the code, so that it sits in front of all the other visuals on the Processing screen.
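The delay-free sweep can be sketched as a function of elapsed time. The example below is written in Java to match the Processing listing, but the arithmetic should carry over to an Arduino sketch. Everything named here is hypothetical (`SineSweep`, `amplitudeFor`, `angleAt`); the 20 and 50 distance thresholds come from the tiers described in Key Step 3, while the amplitudes of 60, 30, and 5 degrees and the 90-degree centre are assumed values for illustration only.

```java
class SineSweep {
    // Sweep amplitude in degrees for a distance reading:
    // the closer the visitor, the wider the alien twists.
    static int amplitudeFor(int distance) {
        if (distance <= 20) return 60; // near: excited
        if (distance <= 50) return 30; // mid-range
        return 5;                      // far: docile
    }

    // Servo angle at a given time, oscillating around 90 degrees.
    // Because the angle depends only on the clock, the main loop
    // never has to pause to move the servo.
    static int angleAt(long millis, int amplitude, double periodMs) {
        double phase = 2 * Math.PI * millis / periodMs;
        return (int) Math.round(90 + amplitude * Math.sin(phase));
    }

    public static void main(String[] args) {
        int amp = amplitudeFor(10);
        for (long t = 0; t <= 1000; t += 250) {
            System.out.println(t + " ms -> " + angleAt(t, amp, 1000));
        }
    }
}
```

On an Arduino, the time would typically come from `millis()` and the result would be passed to the servo's `write()` on every pass through `loop()`, so the sensor reads and serial prints keep running at full speed. Shortening the period for closer distances would also reproduce the "faster when near" behaviour.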

Final Circuitry:

Final code:

Arduino:

Processing:

//import arduino values

import processing.serial.*;

Serial serialPort;

int NUM_OF_VALUES_FROM_ARDUINO = 3; /* CHANGE THIS ACCORDING TO YOUR PROJECT */

/* This array stores values from Arduino */

int arduino_values[] = new int[NUM_OF_VALUES_FROM_ARDUINO];

//capture webcam footage

import processing.video.*;

String[] cameras = Capture.list();

Capture cam;

// insert sound

import processing.sound.*;

SoundFile yummy;

SoundFile hot;

SoundFile cold;

//importing images of food

PImage photo;

PImage img;

//food bouncing array

int numFood = 10;

float [] triXs = new float [numFood];

float [] triYs = new float [numFood];

float [] speedXs = new float [numFood];

float [] speedYs = new float [numFood];

void setup() {

size(640, 480);

background(0);

cam = new Capture(this, cameras[0]);

cam.start();

for (int i=0; i<numFood; i=i+1) {

triXs[i] = random(0,640);

triYs[i] = random(0,480);

speedXs[i] = random(-7,7);

speedYs[i] = random(-7,7);

}

photo = loadImage("burger.png");

img = loadImage("eyehole.png");

printArray(Serial.list());

// put the name of the serial port your Arduino is connected

// to in the line below - this should be the same as you're

// using in the "Port" menu in the Arduino IDE

serialPort = new Serial(this, "/dev/cu.usbmodem11201", 9600);

yummy = new SoundFile(this, "yummy.wav");

hot = new SoundFile(this, "hot.wav");

cold = new SoundFile(this, "Brrr.mp3");

}

void draw() {

background(0);

//trying to rotate

//translate(width/2, height/2);

//rotate(radians(-180));

pushMatrix();

translate(cam.width,0);

scale(-1,1); // mirror the webcam image horizontally

image(cam,0,0);

popMatrix();

// receive the values from Arduino

getSerialData();

if (cam.available()) {

cam.read();

}

//change color of footage

tint(map(arduino_values[2],1023,0,100,255), map(arduino_values[2], 0, 1023, 100, 255), map(arduino_values[2], 0, 1023, 100, 255), 255);

//image(cam, 0, 0);

if (arduino_values[2] == 0) {

if (hot.isPlaying() == false) {

// start playing it

hot.play();

}

}

if (arduino_values[2] == 1023) {

if (cold.isPlaying() == false) {

// start playing it

cold.play();

}

}

//bouncing burgers

if (arduino_values[0] == 1 ) {

// if the sound is not already playing

if (yummy.isPlaying() == false) {

// start playing it

yummy.play();

}

for (int i=0; i<numFood; i=i+1) {

image(photo, triXs[i],triYs[i],photo.width/3,photo.height/3);

triXs[i] = triXs[i] + speedXs[i];

triYs[i] = triYs[i] + speedYs[i];

if(triXs[i] > 580 || triXs[i] < 0) {

speedXs[i]=-speedXs[i];

} if (triYs[i] > 400 || triYs[i] < 0) {

speedYs[i]=-speedYs[i];

}

}

}

image(img, 0,0,640,480);

println(arduino_values[0]);

println(arduino_values[1]);

println(arduino_values[2]);

}

// the helper function below receives the values from Arduino

// in the "arduino_values" array from a connected Arduino

// running the "serial_AtoP_arduino" sketch

// (You won't need to change this code.)

void getSerialData() {

while (serialPort.available() > 0) {

String in = serialPort.readStringUntil( 10 ); // 10 = '\n' Linefeed in ASCII

if (in != null) {

print("From Arduino: " + in);

String[] serialInArray = split(trim(in), ",");

if (serialInArray.length == NUM_OF_VALUES_FROM_ARDUINO) {

for (int i=0; i<serialInArray.length; i++) {

arduino_values[i] = int(serialInArray[i]);

}

}

}

}

}

Conclusion

The goal of this project was to make an interactive pet alien. I would say that I was moderately successful. While the presentation caused some confusion about the purpose of the interactions, I think users were able to understand the concept well. Though some people were confused about exactly what each control did, people were drawn to the button and sliding potentiometer and were excited to keep pressing and sliding to make the alien speak and make noise. People seemed to inherently understand that the burgers appearing meant that the alien was being fed. People also seemed to enjoy playing with the distance sensor by moving closer to and farther from the alien. Every possible interaction was explored by all who tested it. I believe it fits my idea of interaction because people were able to carry on a conversation with the alien by changing multiple stimuli. There are three improvements I would make. The first, in the code, is a hunger bar, which would give a reason for feeding the alien and make it more interactive. Another is the screen presentation: instead of placing the computer behind the project, a different, less intrusive monitor could be placed behind it. A final improvement would be to label the button and slider for a clearer presentation. From my setbacks I was able to learn “when to quit”: when something seems impossible, finding an easier solution that creates a similar effect can be an important way to move on. From my accomplishments I have learned that I am able to create some very polished-looking work using digital fabrication.

This project has let me explore and increase my affinity for breathing life into machines. The project successfully demonstrated interactions with unknown creatures using our senses of sound and sight, without specifically using words.

Disassembly

Appendix

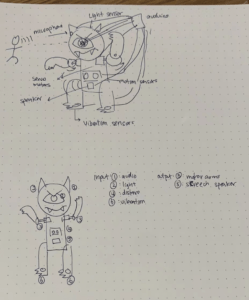

My original sketch of the project

Citations:

Alien sound chirp:

https://www.soundsnap.com/monster_element_alien_creature_chirp_02_sfxbible_ss00232

Ho yeah:

https://www.soundsnap.com/who_yeah_long_wav

Brrrrr:

https://www.youtube.com/watch?v=qycVSRGghzo

Eye hole:
