Hearo

Hearo combines two physical products, a pair of glasses and a bracelet, with a digital application. It is designed to aid people with hearing disabilities in daily communication.

To truly understand what it is like to have a hearing disability, we developed three empathy tools that address both the physical impairment and the mental experience. The first, “The Wall”, is an installation of four-sided mirrors: people inside cannot see out and see only their own reflection. This creates a feeling of isolation in which one can only see oneself, and it mirrors the fact that these individuals are often omitted and othered in society. The second, “The Distortion”, is about the distorted sound that individuals with a hearing disability perceive: they may hear something, but it is muffled. It also includes a piece of code in which users ask questions and the AI answers at random, expressing the kind of confusion that hearing-impaired individuals feel. The third tool addresses the mental side: a very tight elastic band worn on the head creates tension and stress. It pushes people to feel the kind of pressure that deaf individuals often feel when going out and interacting with others.
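The random-answer idea behind “The Distortion” can be sketched in a few lines. This is only an illustration, not the code used in the installation: it assumes a fixed list of canned replies instead of an AI model, and the names `UNRELATED_REPLIES` and `distorted_answer` are hypothetical.

```python
import random
from typing import Optional

# Hypothetical canned replies; the replies used in the original tool are not documented.
UNRELATED_REPLIES = [
    "The train leaves at noon.",
    "I think it was blue.",
    "Maybe next week.",
    "Seven, or possibly eight.",
]


def distorted_answer(question: str, rng: Optional[random.Random] = None) -> str:
    """Return a reply chosen at random, deliberately ignoring the question."""
    rng = rng or random.Random()
    # The question is never inspected: the mismatch between question and
    # answer is what conveys the confusion.
    return rng.choice(UNRELATED_REPLIES)
```

Calling `distorted_answer("What time is it?")` returns one of the canned replies regardless of what was asked.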

To verify the empathy tools, I interviewed Adam Liu at the event “Innovation for Inclusion”. Adam is severely deaf and cannot communicate effectively by voice. He can’t hear what people say, so he relies on applications both to listen and to communicate with others. Even with these applications he runs into problems: mobile voice recognition can only follow one voice at a time, people do not always speak clearly, and background noise can cause him to miss information. Because of this kind of miscommunication, he often has to guess what others said, since it is sometimes inconvenient to ask.

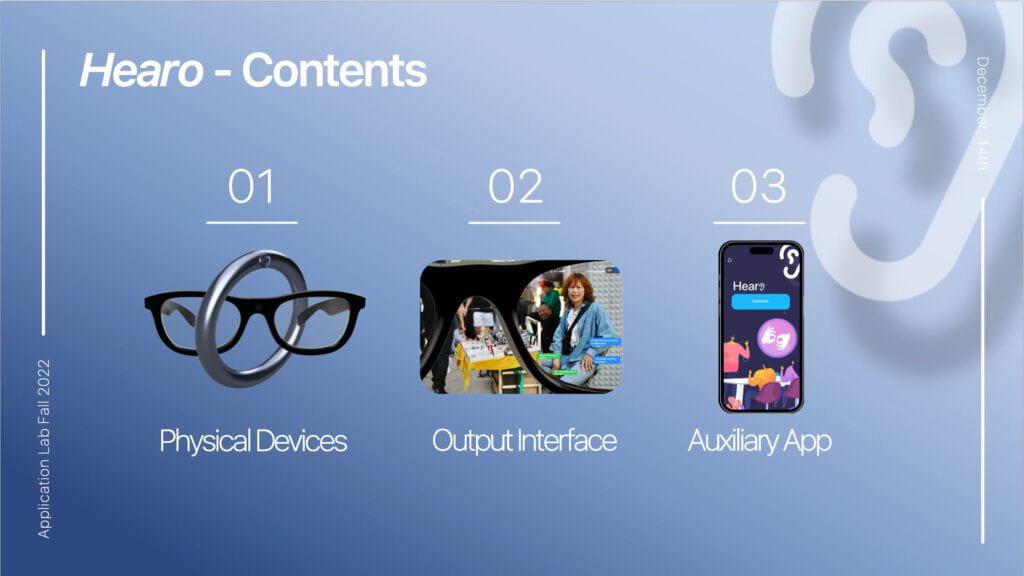

Our solution therefore covers both the physical devices and an auxiliary app. The physical devices come with an output interface that presents what they capture to the user. The auxiliary app controls this output interface so that each user can personalize how they use and experience it.

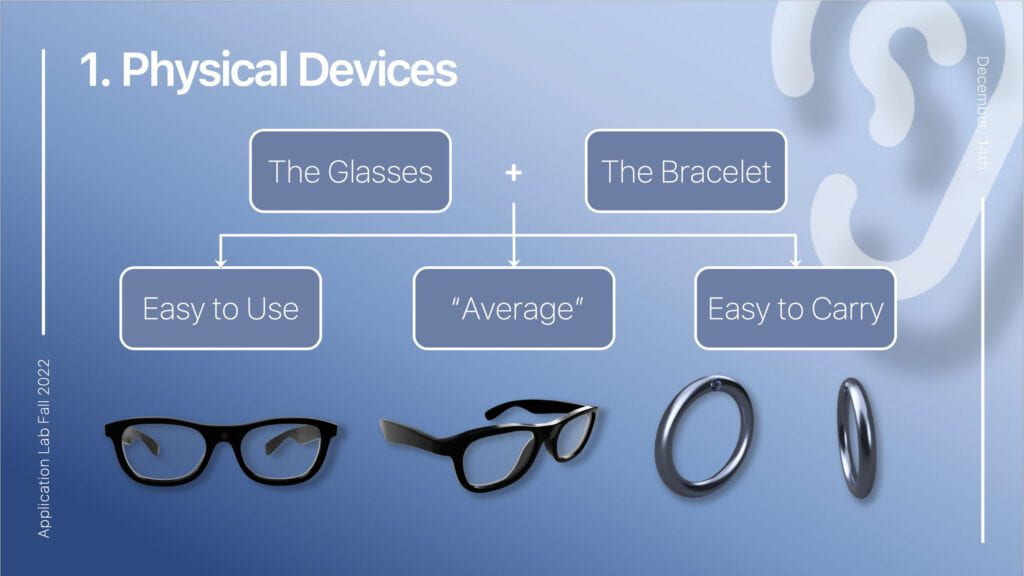

The physical devices are the glasses and the bracelet. Both are designed to look like everyday objects so that they don’t stand out and the user does not feel singled out. They are also easy to use and carry, because people wear them instead of holding them. Everything runs automatically according to the settings chosen in the auxiliary app.

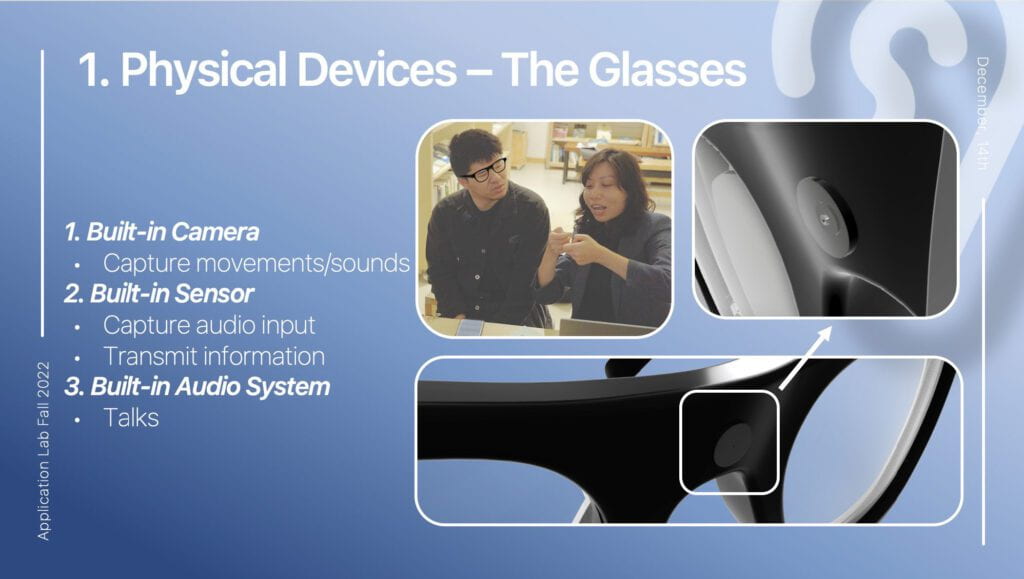

The glasses have built-in cameras that capture movement and use eye-tracking technology to tell where the user is looking. When the user looks at one person, the glasses focus on the sound coming from that person, especially their speech. There are also two built-in sensors: one captures audio from the person talking, and the other transmits the user’s voice to the interface. Lastly, a built-in audio system serves as Adam’s digital voice, since he has a speech impairment as well.

The bracelet translates sign language into spoken language, which is then passed to the audio system of the glasses to be spoken aloud. To do this, it has a camera and sensors that track finger and hand movements. The result is transmitted to the glasses for output in the interface.

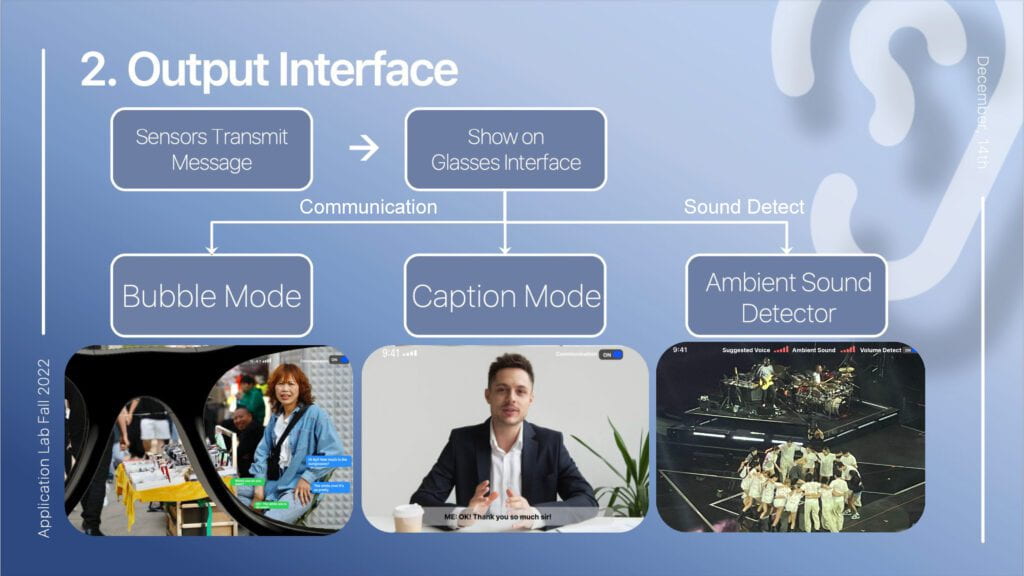

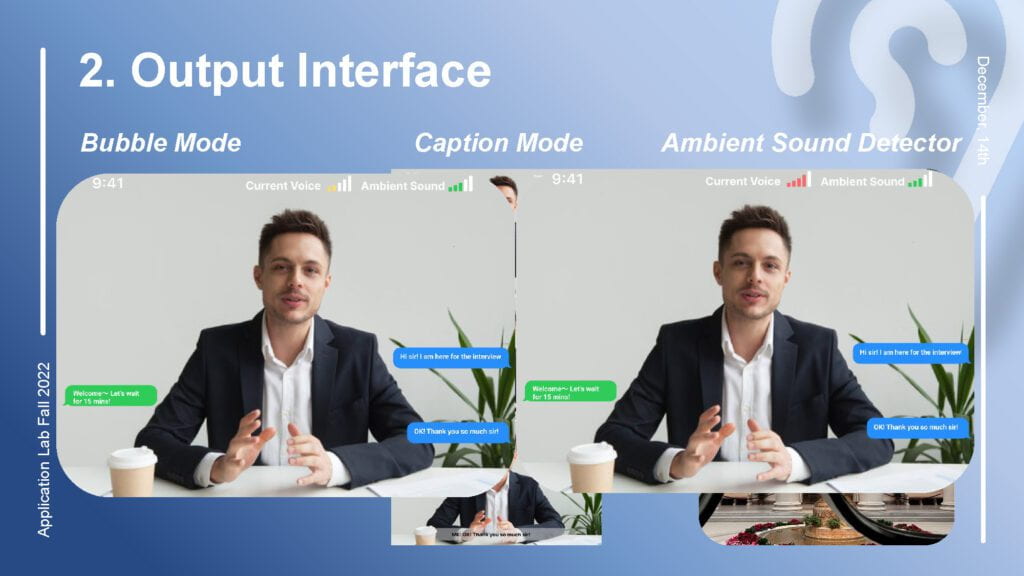

In the output interface, the sensors transmit what the speaker says to the glasses, where it is shown as text. The text can be presented in bubble mode, which resembles the messaging view on a phone, or in caption mode, which resembles movie captions. The glasses also have a sound-detect feature that measures the ambient sound and suggests how loudly to speak in a given situation. Adam doesn’t know how loud his own voice is, and this helps him find out.
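One way the sound-detect suggestion could work is sketched below. It is an assumption, not Hearo’s actual implementation: the ambient level is measured as the root-mean-square of audio samples in dB relative to full scale, and the thresholds and the function names `rms_dbfs` and `suggest_voice_level` are hypothetical.

```python
import math
from typing import Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level of samples in the range [-1, 1], in dB relative to full scale."""
    if not samples:
        return float("-inf")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")  # pure silence
    # 10 * log10(mean square) equals 20 * log10(RMS).
    return 10 * math.log10(mean_square)


def suggest_voice_level(ambient_dbfs: float) -> str:
    """Map an ambient level to a speaking suggestion (thresholds are hypothetical)."""
    if ambient_dbfs < -40:
        return "speak softly"
    if ambient_dbfs < -20:
        return "speak normally"
    return "speak up"
```

For example, `suggest_voice_level(rms_dbfs(frame))` could be called on each short frame of microphone input, and the resulting hint shown on the glasses.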

An interview situation gives a clearer example, since being able to see what you said earlier can be important there. It also shows how the digital voice appears during the meeting to support good communication.

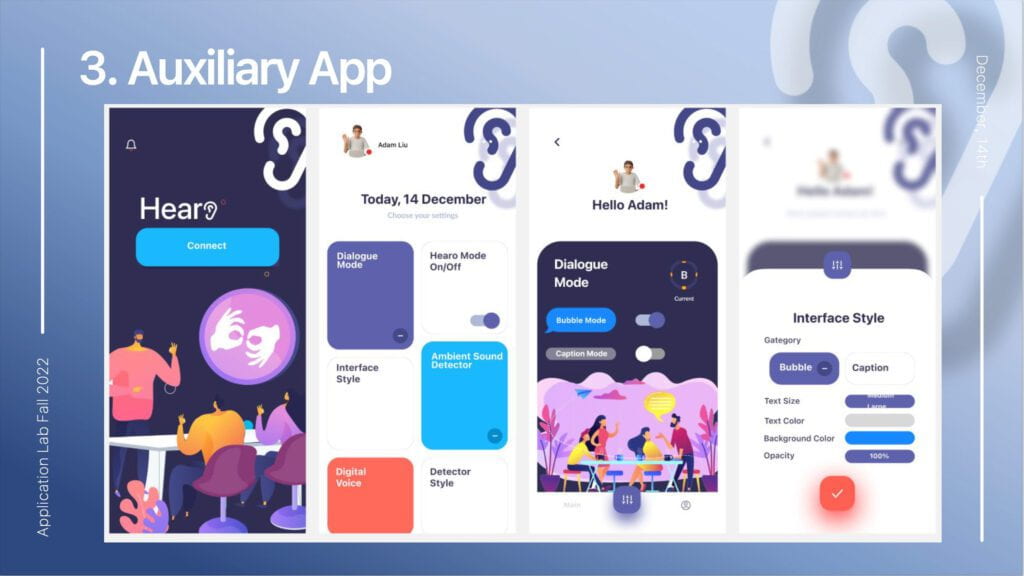

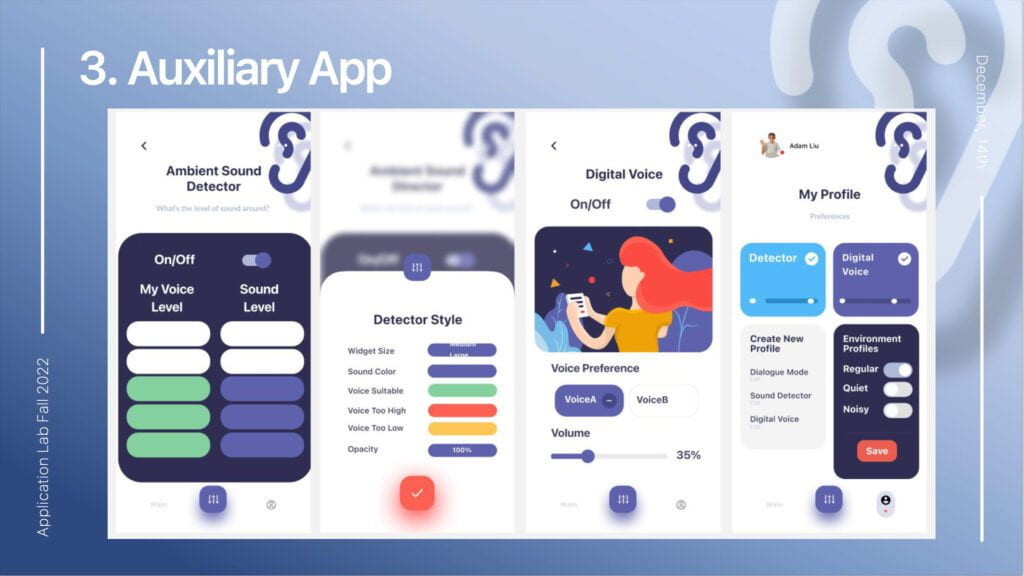

Lastly, the application connects to the devices so that users can make personal adjustments. You can choose whether to turn Hearo on or off. You can also choose the dialogue mode, bubble or caption, which determines what you see on the glasses. Because everybody has different eyesight and different preferences for color, opacity and size, users can adjust these in the interface style settings to make the product comfortable to use. The last important feature is profiles, which act as shortcuts in the application. Each shortcut is a button the user can tap to load the right presets for a particular situation. This saves time, much like the custom buttons that photographers set up on their cameras.
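The settings and profiles described above could be modeled roughly as follows. This is a minimal sketch, not the app’s real data model: the field names, default values and the two example profiles are all hypothetical.

```python
from dataclasses import dataclass, replace
from enum import Enum


class DialogueMode(Enum):
    BUBBLE = "bubble"    # chat-style bubbles
    CAPTION = "caption"  # movie-style captions


@dataclass(frozen=True)
class DisplaySettings:
    # All fields and defaults are illustrative assumptions.
    enabled: bool = True
    mode: DialogueMode = DialogueMode.CAPTION
    text_color: str = "#FFFFFF"
    opacity: float = 1.0
    text_size: int = 16


# Hypothetical profiles: one tap loads a full set of presets.
PROFILES = {
    "interview": DisplaySettings(mode=DialogueMode.BUBBLE, text_size=18),
    "street": DisplaySettings(mode=DialogueMode.CAPTION, opacity=0.8, text_size=22),
}


def apply_profile(name: str, current: DisplaySettings) -> DisplaySettings:
    """Return the presets for the named profile, or the current settings if unknown."""
    return PROFILES.get(name, current)


# Load a profile, then make a one-off personal tweak on top of it.
settings = apply_profile("interview", DisplaySettings())
tweaked = replace(settings, opacity=0.6)
```

Keeping the settings immutable means a profile is never modified by accident; a tweak produces a new settings object and leaves the preset intact.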
