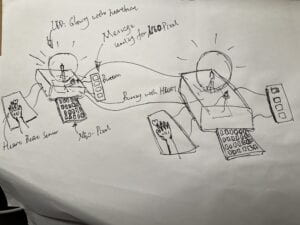

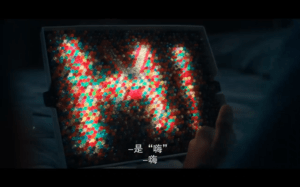

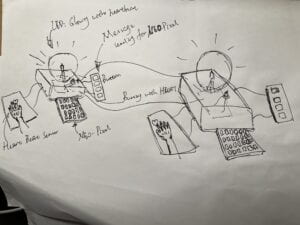

In my earlier research, I found that the most successful interactive media artifacts were large in scale and highly responsive to human actions. By comparison, the things we were assigned to build during recitations were simple, offered only minor interaction, and, most fatally, could hardly be called interactive media art. Thus, I decided to make something with a greater degree of interaction. The project was meant to solve a problem, and the first thing that came to mind was to ease the loneliness people feel, because partners often have to part ways to attend to their own business. The lack of company can be frustrating and unbearable. Rudi suggested to us that the best way to ease loneliness is not to offer artificial companionship but to tell someone, “I am really here for you,” so we decided to design something that could give lonely people real company: the sense that someone is really out there for them. We then thought about the most characteristic signs of a living person, which include one’s heartbeat, expressions, and temperature. The artifact we set out to make would show the other person one’s heartbeat, temperature, and a few simple messages.

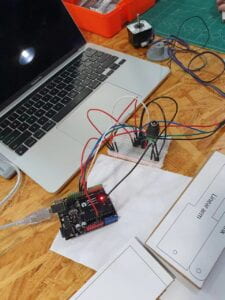

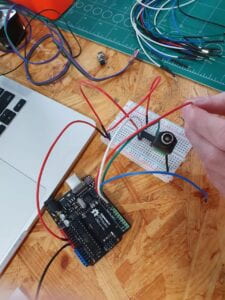

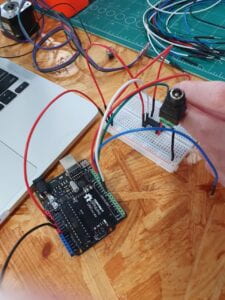

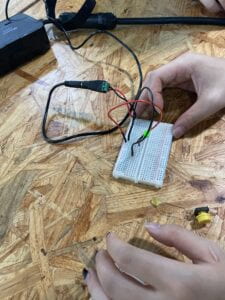

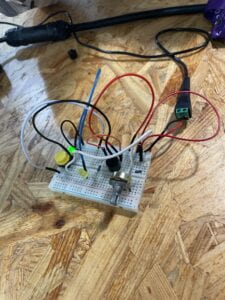

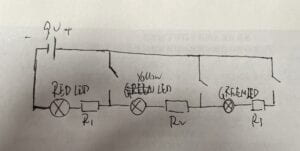

To capture one’s heartbeat, a heartbeat sensor was needed. To send messages, several buttons had to work together with an 8×8 NeoPixel matrix, so that when the user pushed a button, the matrix would show the corresponding message. To measure one’s temperature, we needed a more sensitive temperature sensor than the one in our Arduino kit. The heartbeat sensors we had were ear clips, and the temperature sensors were poor in sensitivity. Thus, we bought our own materials.

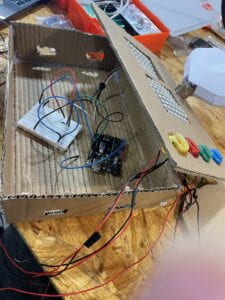

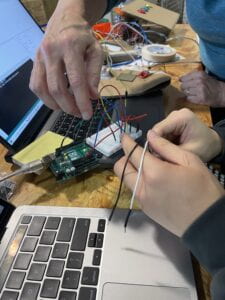

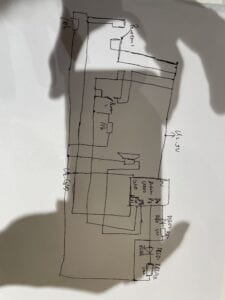

We made the shell of our prototype out of cardboard and connected the cables inside. After we uploaded the code to the Arduino Uno, it worked just fine. This very early prototype contained only the heartbeat sensor and the message sender. During user testing, things worked fine, but it struck us that the device allowed only one user, because the input and output devices were built into the same cardboard box. The user gave instructions while also receiving all of the machine’s outputs. It was not a loneliness killer but a closed loop of messages. To make it a real loneliness killer, the output and input devices had to be placed apart from one another, because they were meant to be held separately by two remote partners. Thus, we took the prototype apart and clearly separated the output and input parts. With new cardboard, we made shells for both the input and output devices, and then connected them with long cables. The coding was not much of a problem, but the cable connections for input, output, power, and ground were highly complex. We tried many times to connect the different devices to the circuits of three Arduino Uno boards and failed dozens of times until we finally made it work. Finally, I colored the paper shells green and blue to give the device a serious and deep tone. We recorded the machine in use, and I made the footage into a video clip, which can be seen below along with my conclusions.

Here is the code:

1. Button & Sensor:

#include <Adafruit_NeoPixel.h>

#define LED_PIN 6

#define pressure A0

#define button1 13

#define button2 12

#define button3 8

#define button4 7

int pressure_val;

int button1_state = 0;

int button2_state = 0;

int button3_state = 0;

int button4_state = 0;

int prev_pressure_val = 0;

#define LED_COUNT 64

Adafruit_NeoPixel strip(LED_COUNT, LED_PIN, NEO_GRB + NEO_KHZ800);

void setup() {

// 8x8 NeoPixel setup

strip.begin();

strip.show();

strip.setBrightness(255);

Serial.begin(9600);

pinMode(pressure, INPUT);

pinMode(button1, INPUT);

pinMode(button2, INPUT);

pinMode(button3, INPUT);

pinMode(button4, INPUT);

}

void loop() {

pressure_val = analogRead(pressure);

button1_state = digitalRead(button1);

button2_state = digitalRead(button2);

button3_state = digitalRead(button3);

button4_state = digitalRead(button4);

Serial.println(pressure_val);

if((prev_pressure_val == 0) && (pressure_val > 200)){

rainbow1(10);

delay(500);

HI(100);

wo(100);

zai(100);

zhe(100);

prev_pressure_val = 2 ;

}

if(pressure_val > 250){

rainbow1(10);

}

if(button1_state == 1){

// "I love you"

wo(100);

love(100);

ni(100);

}

else if(button2_state == 1){

// smiley face

happy(100);

}

else if(button3_state == 1){

// crying face

sad(100);

}

else if(button4_state == 1){

// "goodbye"

byezai(100);

jian(100);

}

}

// functions used in the project

void HI(int wait) {

int list_HI[34] = {0,1,2,3,4,5,6,7,11,20,12,19,31,30,29,28,27,26,25,24,47,48,46,49,44,43,42,41,40,51,52,53,54,55};

int firstPixelHue = 20000;

for(int i=0; i<34; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_HI[i], color); // Set pixel ‘c’ to value ‘color’

strip.show(); // Update strip with new contents

delay(wait); // Pause for a moment

}

delay(400);

strip.clear();

strip.show();

}

void I(int wait) {

int firstPixelHue = 0;

for(int i=24; i<40; i++) { // For each pixel in strip…

for(int c=0; c<16; c += 1) {

// hue of pixel ‘c’ is offset by an amount to make one full

// revolution of the color wheel (range 65536) along the length

// of the strip (strip.numPixels() steps):

int hue = firstPixelHue + c * 65536L / 10;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(i, color); // Set pixel ‘c’ to value ‘color’

}

strip.show(); // Update strip with new contents

delay(wait); // Pause for a moment

firstPixelHue += 65536 / 90; // One cycle of color wheel over 90 frames

}

delay(100);

strip.clear();

strip.show();

}

void AM(int wait) {

int list_AM[36] = {7,6,5,4,3,2,1,15,16,30,29,28,27,26,25,24,12,19,39,38,37,36,35,34,33,32,46,49,63,62,61,60,59,58,57,56};

int firstPixelHue = 0;

for(int i=0; i<36; i++){

int hue = firstPixelHue + 65536L / 36;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_AM[i], color); // Set pixel ‘c’ to value ‘color’

strip.show(); // Update strip with new contents

delay(wait); // Pause for a moment

firstPixelHue += 65536 / 90; // One cycle of color wheel over 90 frames

}

delay(500);

strip.clear();

strip.show();

}

void HERE(int wait){

int list_HERE[44] = {0,1,2,14,17,31,30,29,32,47,48,63,33,34,46,49,62,45,50,61,4,11,20,27,26,21,10,5,6,7,22,24,36,43,52,59,37,42,53,58,38,41,54,57};

int firstPixelHue = 0;

for(int i=0; i<44; i++){

int hue = firstPixelHue + 65536L / 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_HERE[i], color); // Set pixel ‘c’ to value ‘color’

strip.show(); // Update strip with new contents

delay(wait); // Pause for a moment

firstPixelHue += 65536 / 90; // One cycle of color wheel over 90 frames

}

delay(500);

strip.clear();

strip.show();

}

void wo(int wait){

int list_wo[30] = {16,31,13,18,29,34,45,50,61,30,28,27,26,25,24,23,9,21,35,47,46,44,43,42,54,56,38,52,48,62};

int firstPixelHue = 30000;

for(int i=0; i<30; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_wo[i], color); // Set pixel ‘c’ to value ‘color’

strip.show(); // Update strip with new contents

delay(wait); // Pause for a moment

}

delay(500);

strip.clear();

strip.show();

}

void zai(int wait){

int list_zai[27] = {13,18,29,34,45,50,32,33,28,20,10,6,21,22,23,26,37,42,53,44,43,41,24,39,40,55,56};

int firstPixelHue = 40000;

for(int i=0; i<27; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_zai[i], color); // Set pixel ‘c’ to value ‘color’

strip.show(); // Update strip with new contents

delay(wait); // Pause for a moment

}

delay(500);

strip.clear();

strip.show();

}

void zhe(int wait){

int list_zhe[27] = {14,17,12,19,20,21,22,8,24,39,40,55,56,47,46,29,34,45,50,61,35,36,42,54,51,52,38};

int firstPixelHue = 50000;

for(int i=0; i<27; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_zhe[i], color); // Set pixel ‘c’ to value ‘color’

strip.show(); // Update strip with new contents

delay(wait); // Pause for a moment

}

delay(500);

strip.clear();

strip.show();

}

void love(int wait){

int list_love[36] = {2,3,14,13,12,11,16,17,18,19,20,21,30,29,28,27,26,25,33,34,35,36,37,38,47,46,45,44,43,42,49,50,51,52,61,60};

int firstPixelHue = 50000;

for(int i=0; i<36; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_love[i], color); // Set pixel ‘c’ to value ‘color’

strip.show(); // Update strip with new contents

delay(wait); // Pause for a moment

}

delay(500);

strip.clear();

strip.show();

}

void happy(int wait){

int list_happy[30] = {1,2,3,4,5,6,8,23,24,39,40,55,57,58,59,60,61,62,48,47,32,31,16,15,18,45,21,25,38,42};

int firstPixelHue = 50000;

for(int i=0; i<30; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_happy[i], color); // Set pixel ‘c’ to value ‘color’

strip.show(); // Update strip with new contents

delay(wait); // Pause for a moment

}

delay(500);

strip.clear();

strip.show();

}

void sad(int wait){

int list_sad[30] = {1,2,3,4,5,6,8,23,24,39,40,55,57,58,59,60,61,62,48,47,32,31,16,15,18,45,22,26,37,41};

int firstPixelHue = 50000;

for(int i=0; i<30; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_sad[i], color); // Set pixel ‘c’ to value ‘color’

strip.show(); // Update strip with new contents

delay(wait); // Pause for a moment

}

delay(500);

strip.clear();

strip.show();

}

void byezai(int wait){

int list_byezai[38] = {15,16,31,32,47,14,13,12,11,10,9,8,14,17,30,33,46,49,50,51,52,53,54,55,40,39,29,28,27,26,19,35,44,5,21,37,42,58};

int firstPixelHue = 50000;

for(int i=0; i<38; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue));

strip.setPixelColor(list_byezai[i], color);

strip.show();

delay(wait);

}

delay(500);

strip.clear();

strip.show();

}

void jian(int wait){

int list_jian[28] = {16,17,18,19,20,21,31,32,47,48,49,50,51,52,53,34,35,36,37,25,23,38,39,40,55,56,57,58};

int firstPixelHue = 50000;

for(int i=0; i<28; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue));

strip.setPixelColor(list_jian[i], color);

strip.show();

delay(wait);

}

delay(500);

strip.clear();

strip.show();

}

void ni(int wait){

int list_ni[30] = {16,17,13,3,12,11,10,9,8,31,30,29,19,33,46,49,62,50,45,44,43,42,41,40,39,24,27,21,52,58};

int firstPixelHue = 50000;

for(int i=0; i<30; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue));

strip.setPixelColor(list_ni[i], color);

strip.show();

delay(wait);

}

delay(500);

strip.clear();

strip.show();

}

void rainbow1(int wait) {

for(long firstPixelHue = 0; firstPixelHue < 2*65536; firstPixelHue += 256) {

strip.rainbow(firstPixelHue); // fill the strip with one rainbow cycle

strip.show(); // push the new colors to the LEDs

delay(wait);

}

strip.clear();

strip.show();

}
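The hand-written pixel lists above appear to follow a zigzag (serpentine) wiring order, where every second line of eight pixels runs in the opposite direction. For example, in `list_HI` the crossbar of the "H" uses 11, 12, 19, and 20, which only sit next to each other under that layout. This is an inference from the lists, not something confirmed by the matrix's documentation. Under that assumption, a small helper can turn a (column, row) coordinate into a strip index, which makes new shapes easier to draw than counting indices by hand. The name `serpentineIndex` is hypothetical, and whether the lines are "columns" or "rows" depends on how the panel is mounted.

```cpp
#include <stdint.h>

// Assumed layout (inferred from the pixel lists, not from a datasheet):
// 8 lines of 8 pixels wired in a zigzag. Even-numbered lines count
// upward, odd-numbered lines count downward.
const uint8_t MATRIX_SIZE = 8;

// Convert a (col, row) coordinate into the strip index expected by
// strip.setPixelColor(). Returns -1 if the coordinate is off the panel.
int serpentineIndex(int col, int row) {
  if (col < 0 || col >= MATRIX_SIZE || row < 0 || row >= MATRIX_SIZE) {
    return -1;
  }
  if (col % 2 == 0) {
    // Even line: indices increase with the row number.
    return col * MATRIX_SIZE + row;
  }
  // Odd line: indices run in the opposite direction.
  return col * MATRIX_SIZE + (MATRIX_SIZE - 1 - row);
}
```

With this helper, the crossbar of the "H" could be written as coordinates (1,3), (1,4), (2,3), and (2,4) instead of raw indices.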

2. Heartbeat Sensor:

#include <Adafruit_NeoPixel.h>

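#define LED_PIN 6 // data pin of the NeoPixel matrix

const int heartbeat = 4;

int heartbeat_val;

int old_val = 0;

#define PIXEL_COUNT 64

Adafruit_NeoPixel strip(PIXEL_COUNT, LED_PIN, NEO_GRB + NEO_KHZ800);

void setup() {

// put your setup code here, to run once:

pinMode(heartbeat, INPUT);

strip.begin(); // initialize the NeoPixel library and set the data pin as an output

strip.show(); // start with all pixels off

Serial.begin(9600);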

Serial.begin(9600);

}

void loop() {

//put your main code here, to run repeatedly:

heartbeat_val = digitalRead(heartbeat); // read the sensor on the pin defined above

Serial.println(heartbeat_val);

if((heartbeat_val == 1)&&(old_val == 0)){

colorWipe(strip.Color(255, 0, 0),300);

}

old_val = heartbeat_val;

delay(10);

}

//

void colorWipe(uint32_t color, int wait) {

for(int i=0; i<64; i++) { // For each pixel in strip…

strip.setPixelColor(i, color); // Set pixel’s color (in RAM)

}

strip.show();

delay(wait);

strip.clear();

strip.show();

}
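The heartbeat sketch above flashes the matrix whenever the sensor reading goes from 0 to 1. The same rising-edge check can also be used to estimate the heart rate from the time between two beats. The following is only a sketch of that idea, not what we ran during the project. The struct name `BeatDetector` and its fields are hypothetical, and it assumes the sensor gives one clean pulse per beat.

```cpp
#include <stdint.h>

// Tracks rising edges of a digital pulse signal and estimates beats per
// minute from the time between the two most recent edges.
struct BeatDetector {
  int previousLevel = 0;    // last sample seen (0 or 1)
  uint32_t lastBeatMs = 0;  // timestamp of the most recent beat
  bool hasBeat = false;     // true once at least one beat was seen
  float bpm = 0.0f;         // latest estimate, 0 until two beats arrive

  // Feed one sample (0 or 1) with its timestamp in milliseconds.
  // Returns true only on a 0 -> 1 transition.
  bool update(int level, uint32_t nowMs) {
    bool rising = (level == 1 && previousLevel == 0);
    previousLevel = level;
    if (!rising) {
      return false;
    }
    if (hasBeat && nowMs > lastBeatMs) {
      // 60000 ms per minute divided by the interval between beats.
      bpm = 60000.0f / (float)(nowMs - lastBeatMs);
    }
    lastBeatMs = nowMs;
    hasBeat = true;
    return true;
  }
};
```

On the Arduino, `update()` would be called once per `loop()` with `digitalRead(heartbeat)` and `millis()`, and a `true` result would trigger `colorWipe()`.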

3. Temperature Sensor:

#include <Adafruit_NeoPixel.h>

#define LED_PIN 6

#define LED_COUNT 64

Adafruit_NeoPixel strip(LED_COUNT, LED_PIN, NEO_GRB + NEO_KHZ800);

const int temp = A0;

int temp_val;

void setup() {

strip.begin();

strip.show();

strip.setBrightness(255);

pinMode(temp, INPUT);

}

void loop() {

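strip.clear(); // clear the previous number so that digits do not overlap

temp_val = analogRead(temp) * (5000 / 1024.0) / 10; // ADC reading -> millivolts -> degrees Celsius (assumes 10 mV per degree)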

if(temp_val == 35){

num35();

}

else if(temp_val == 36){

num36();

}

else if(temp_val == 37){

num37();

}

else if(temp_val == 38){

num38();

}

else if(temp_val == 39){

num39();

}

else if(temp_val == 40){

num40();

}

delay(100);

}

void num34() {

int list_34[30] = {0,15,16,31,30,29,28,27,26,25,24,23,8,7,3,12,19,63,62,61,60,59,58,57,56,49,45,35,44,51};

int firstPixelHue = 8200;

for(int i=0; i<30; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_34[i], color); // Set pixel ‘c’ to value ‘color’

}

strip.show();

delay(300);

}

void num35() {

int list_35[34] = {0,15,16,31,30,29,28,27,26,25,24,23,8,7,3,12,19,63,48,47,32,33,34,35,44,51,60,59,58,57,56,55,40,39};

int firstPixelHue = 8200;

for(int i=0; i<34; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_35[i], color); // Set pixel ‘c’ to value ‘color’

}

strip.show();

delay(300);

}

void num36() {

int list_36[37] = {0,15,16,31,30,29,28,27,26,25,24,23,8,7,3,12,19,63,48,47,32,33,34,35,36,37,38,39,40,55,56,57,58,59,60,51,44};

int firstPixelHue = 8200;

for(int i=0; i<37; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_36[i], color); // Set pixel ‘c’ to value ‘color’

}

strip.show();

delay(300);

}

void num37() {

int list_37[28] = {0,15,16,31,30,29,28,27,26,25,24,23,8,7,3,12,19,32,47,48,63,62,61,60,59,58,57,56};

int firstPixelHue = 8200;

for(int i=0; i<28; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_37[i], color); // Set pixel ‘c’ to value ‘color’

}

strip.show();

delay(300);

}

void num38() {

int list_38[42] = {0,15,16,31,30,29,28,27,26,25,24,23,8,7,3,12,19,32,47,48,63,62,61,60,59,58,57,56,32,33,34,35,36,37,38,39,47,48,44,51,40,55};

int firstPixelHue = 8200;

for(int i=0; i<42; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_38[i], color); // Set pixel ‘c’ to value ‘color’

}

strip.show();

delay(300);

}

void num39() {

int list_39[36] = {0,15,16,31,30,29,28,27,26,25,24,23,8,7,3,12,19,32,33,34,35,44,51,60,47,48,63,62,61,59,58,57,56,55,40,39};

int firstPixelHue = 8200;

for(int i=0; i<36; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_39[i], color); // Set pixel ‘c’ to value ‘color’

}

strip.show();

delay(300);

}

void num40() {

int list_40[34] = {0,1,2,3,12,19,28,29,30,31,27,26,25,24,32,33,34,35,36,37,38,39,10,55,56,57,58,59,60,61,62,63,48,47};

int firstPixelHue = 8200;

for(int i=0; i<34; i++){

int hue = firstPixelHue + 100;

uint32_t color = strip.gamma32(strip.ColorHSV(hue)); // hue -> RGB

strip.setPixelColor(list_40[i], color); // Set pixel ‘c’ to value ‘color’

}

strip.show();

delay(300);

}
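In the temperature sketch above, the reading is stored in an `int`, so a value such as 36.9 is cut down to 36 before it is compared. A possible refinement, shown here only as a sketch, is to keep the conversion in floating point and round to the nearest degree. The constants repeat the ones in `loop()` and assume a 10-bit ADC, a 5 V reference, and a sensor that outputs 10 mV per degree Celsius. The function names `adcToCelsius` and `displayDegrees` are hypothetical.

```cpp
#include <stdint.h>

// Assumptions matching the constants used in loop() above:
// 10-bit ADC, 5000 mV reference, 10 mV per degree Celsius.
const float ADC_REFERENCE_MV = 5000.0f;
const float ADC_STEPS = 1024.0f;
const float MV_PER_DEGREE = 10.0f;

// Convert a raw analogRead() value (0..1023) to degrees Celsius.
float adcToCelsius(int raw) {
  return raw * (ADC_REFERENCE_MV / ADC_STEPS) / MV_PER_DEGREE;
}

// Round to the nearest whole degree. Returns -1 when the result is
// outside 34..40, the range for which a num34()..num40() glyph exists.
int displayDegrees(float celsius) {
  int rounded = (int)(celsius + 0.5f);
  if (rounded < 34 || rounded > 40) {
    return -1;
  }
  return rounded;
}
```

The returned value could then pick the matching `numXX()` function, and -1 would mean that nothing is drawn.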

In conclusion, the midterm project was a success overall, although during the final presentation the temperature sensor didn’t work well because some cables had come loose without our noticing. Our project fit the definition of interaction I had given: the machine takes in human actions and responds in a way humans can understand. The project contained no small amount of interaction. There were three kinds, so it was almost certain that the circuits we connected would be complicated. Keeping the project in one piece was very difficult. Thus, we needed more protection on the circuits so that they would not fail the way the temperature sensor did during the presentation. What’s more, it would fit the idea behind our project better to replace the long cables with wireless signals, so that the circuits would be less affected by our movements and the device could be used over a greater distance. It would be even better if we could make two identical machines, so that the two partners could communicate with each other, instead of the one-directional information flow we presented.

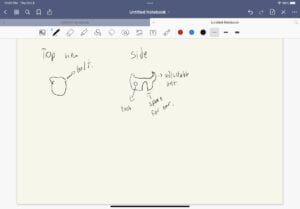

It was the very prototype of the prototype. To get into the details, we needed a blueprint. Thus, Kenneth did his part by drawing the sketches we had decided on, using his iPad.



Here is the link for adjusting the size of the band. Here are the switches that we designed. We thought for a long time about how to make these switches, and finally we agreed to use a short stick that goes through the band to stabilize the round switch while giving it enough freedom to turn. However, the switches fell off easily at first, so we had to think of another way to keep them in place. We stuck two round pieces of cardboard on the other side to keep the switches on the band. It took us three days to think of it and finish it. Then what came next was a rather big task, to show without telling, namely, the group performance.


It detected natural movements and generated corresponding sounds by drumming on iron buckets. It was a low-cost artifact, yet it was creative and interactive enough, which was in the spirit of the “Arduino Way”.


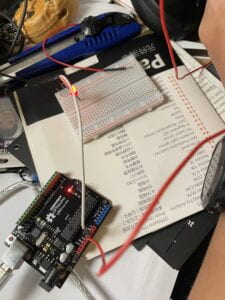

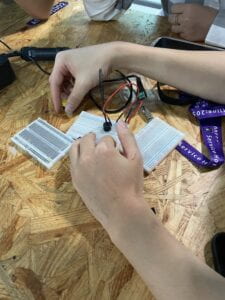

Before we proceeded with the construction of the circuits, we tried to understand the basic structure of the breadboard that had been distributed to us beforehand. With the help of both the professors and the assistants, we grasped that the board was built symmetrically and that each side was made of two parts, divided by a blue line: one for plugging in the power supply and the other for building the circuit.


Question 2
