
Junyi (Stephen) Li – Rudi

CONCEPTION AND DESIGN:

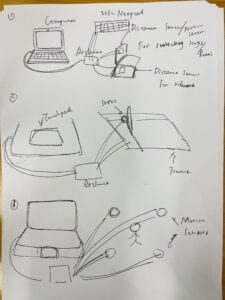

Concept:

This project is intended to help people with hand disabilities create the same kind of sketches that anyone else could. I once saw a Polish artist who was born without hands but, through hard work, managed to create sketches in his own unique way. To let people with hand disabilities sketch their own works more fully and more easily, I created this machine. By placing a part of the body on the touchpad of a computer, the user can move a pen around on the paper to draw. I used 2 stepper motors to control the position of the pen and attached a servo to the pen, so that the pen can draw as if it were held in a human hand.

User test:

I showed version 1 of my program at user testing and realized that the pen was not stable enough to draw steadily. To fix this, I housed the pen and the servo in a wooden box and put a weight under it, as Andy suggested, so that it moves around the paper more stably.

Algorithms for location:

Any point on the drawing area can be represented by its x and y coordinates, and equally by the lengths of the two strings attached to the two steppers. It is like imagining the drawing area as a curved screen: each combination of the two string lengths is as unique as a point on the drawing area. The string lengths for a given pen position are calculated with the Pythagorean theorem. Assume that the mouse coordinates are x and y and that the whole drawing area is 600×600. The left string length is then the square root of (x^2 + y^2), while the right string length is the square root of ((600-x)^2 + y^2). Sending the changes in length to the Arduino and letting the steppers step accordingly moves the pen to the new position on the paper.
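
The calculation above can be sketched in plain C++ as follows. This is a minimal illustration rather than the project's own code: the names `StringLengths`, `lengthsFor` and `lengthChange` are hypothetical, and it assumes the left string is anchored at (0, 0) and the right string at (600, 0), as in the description.

```cpp
#include <cassert>
#include <cmath>

// Lengths of the two strings, in the same units as the drawing area.
struct StringLengths {
    double left;
    double right;
};

// String lengths for a pen at (x, y). Assumes the left anchor sits at (0, 0)
// and the right anchor at (width, 0); 600 is the width used in the text.
StringLengths lengthsFor(double x, double y, double width = 600.0) {
    assert(width > 0.0);
    return { std::hypot(x, y), std::hypot(width - x, y) };
}

// Change in each string length when the pen moves from (x0, y0) to (x1, y1).
// A positive value means the string has to get longer.
StringLengths lengthChange(double x0, double y0, double x1, double y1,
                           double width = 600.0) {
    const StringLengths from = lengthsFor(x0, y0, width);
    const StringLengths to = lengthsFor(x1, y1, width);
    return { to.left - from.left, to.right - from.right };
}
```

For a pen at (300, 400), both strings come out at length 500. Turning a change in length into a number of motor steps would additionally depend on the spool diameter and the step angle of the motor, which are not specified here.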

Fake Threading:

To let the servo and the 2 steppers run simultaneously, I needed some form of threading. I searched online and found that the SCoop library was supposed to help with that, but it turned out not to be as reliable as claimed: SCoop still ran the tasks in sequence instead of simultaneously. After checking the Arduino source code, I realized that the Arduino cannot run multiple tasks truly at the same time. Then, as Andy and Rudi advised, I had the two motors each move a little at a time, in turn. The intervals between the movements of the separate steppers can be so short that they can be neglected. Thus, I decided to use a for loop in which the two steppers take turns rapidly. I set the number of steps for each stepper separately, so that they can travel different lengths. There is still a problem that I cannot solve. Say that the distances the left and right steppers have to travel are 125 and 175. Using the map() function, I scale them from the range -300 to 300 down to the range -4 to 4, and because the result is an integer, both can end up as 2. Then, with a loop of 75 iterations, both steppers would walk 150 steps, even though they should travel different distances.
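
One possible way around this rounding problem, sketched here under assumptions, is to skip the scaling and interleave single steps using an accumulator, in the manner of Bresenham's line algorithm. This is not the method used in the project. The names `Tick` and `interleave` are hypothetical, and the code is plain desktop C++ meant only to show the scheduling logic.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <vector>

// One tick of the schedule: the step each motor takes (-1, 0 or +1).
struct Tick {
    int left;
    int right;
};

// Spread leftSteps and rightSteps over max(|leftSteps|, |rightSteps|) ticks
// so that both motors finish together. Each motor has an accumulator that
// decides on which ticks it takes a single step.
std::vector<Tick> interleave(int leftSteps, int rightSteps) {
    const int leftAbs = std::abs(leftSteps);
    const int rightAbs = std::abs(rightSteps);
    const int ticks = leftAbs > rightAbs ? leftAbs : rightAbs;
    const int leftDir = leftSteps < 0 ? -1 : 1;
    const int rightDir = rightSteps < 0 ? -1 : 1;

    std::vector<Tick> schedule;
    schedule.reserve(static_cast<std::size_t>(ticks));
    int leftAcc = 0;
    int rightAcc = 0;
    for (int i = 0; i < ticks; ++i) {
        Tick t{0, 0};
        leftAcc += leftAbs;
        if (leftAcc >= ticks) {   // enough "debt" built up: step the left motor
            leftAcc -= ticks;
            t.left = leftDir;
        }
        rightAcc += rightAbs;
        if (rightAcc >= ticks) {  // same rule for the right motor
            rightAcc -= ticks;
            t.right = rightDir;
        }
        schedule.push_back(t);
    }
    assert(schedule.size() == static_cast<std::size_t>(ticks));
    return schedule;
}
```

With distances of 125 and 175, the schedule has 175 ticks: the right motor steps on every tick and the left motor on 125 of them, so the two distances stay distinct. On an Arduino Uno the same accumulator logic would probably run directly inside the loop, calling `leftStepper.step(t.left)` and `rightStepper.step(t.right)` on each tick, rather than building a vector first.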

From Processing to Arduino:

Reading serial data was always an issue, because “serialRead” in Arduino actually cannot read negative numbers. To solve this problem, I add 300 to each value in the message sent by Processing and subtract 300 from it in Arduino, so that the steppers finally move the way I want them to.
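
The offset trick can be sketched as a pair of small functions. This is an illustration only: `encodeDelta`, `decodeDelta` and `kOffset` are hypothetical names, and the range check assumes that no single movement exceeds 300 in either direction.

```cpp
#include <cassert>

// Offset shared by sender and receiver; 300 is the value used in the text.
const int kOffset = 300;

// Sender side (Processing in the project): shift a signed change in length
// so that the transmitted value is never negative.
int encodeDelta(int delta) {
    assert(delta >= -kOffset && delta <= kOffset);
    return delta + kOffset;
}

// Receiver side (Arduino in the project): undo the shift to recover the
// signed change in length.
int decodeDelta(int received) {
    return received - kOffset;
}
```

A transmitted value of 300 therefore means "no movement", which is consistent with the V3 Arduino listing in the annex resetting `reader[0]` and `reader[1]` to 300.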

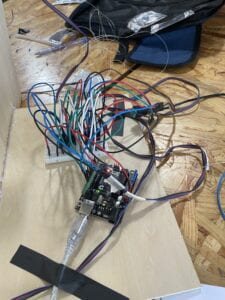

FABRICATION AND PRODUCTION:

Frame and base design:

Compared to the coding and circuit work, building the wooden parts was rather easy. All I needed was a large frame to hold both the drawing paper and the two steppers. Andy helped me a lot with the design of the woodwork. He told me that instead of sticking the steppers onto the board, I should nail them against it. With 4 nails, the steppers were successfully fixed to the board. However, the cables would not wind into rolls the way I wanted, and the thin shafts of the steppers could not hold much string. I borrowed two thick columns to hold the strings and, instead of using the cables as strings, I used thin transparent plastic strings. I then realized that the plastic strings easily slipped off the columns, so I had to switch to thicker strings. Luckily, I found some thick paper strings, which did not slip off the columns so easily.

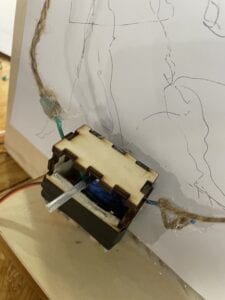

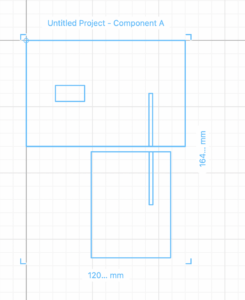

Pen and Servo Holder:

I designed the box on a website for generating wooden boxes that Andy recommended. The first box I made did not fit, so I changed the parameters and created a new one. The new measurements were precise enough that the box fitted the servo and the attached pen well.

CONCLUSIONS:

I can say that the project achieved my goal of helping people with hand disabilities sketch pictures, so long as they can adapt to the mechanism of this way of drawing. It works by simply placing any part of the body on the touchpad of the computer. The first version of my code achieved the goal, but the sketches were not very precise. The second version worked fine after I modified certain parameters. To improve this project, I should keep tuning the parameters used in Arduino and focus more on the details, such as how the Processing window is displayed and drawing on larger paper. I should also place more emphasis on the appearance of the machine. Moreover, I have realized that the Arduino Uno, though said to be a low-level platform, can achieve a lot. There is always a way to solve a problem, and the limited capability of the Arduino is not always to blame. The true limitation is the creativity of the creator. I can even limit the stepping length so that the machine does not go off the paper.
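
Limiting the movement could, for instance, be done by clamping the target position before it is converted into string lengths. The sketch below is a hypothetical illustration in plain C++: the function name `clampToPaper` and the idea of a fixed margin are assumptions, not part of the project code.

```cpp
#include <cassert>

// Keep a requested pen coordinate inside [margin, size - margin], so the pen
// never gets closer than `margin` to the edge of the paper.
double clampToPaper(double value, double size, double margin) {
    assert(margin >= 0.0 && size > 2.0 * margin);
    if (value < margin) {
        return margin;
    }
    if (value > size - margin) {
        return size - margin;
    }
    return value;
}
```

With a 600-unit drawing area and a margin of 10, a request for x = 700 would be pulled back to 590, while x = 300 passes through unchanged.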

ANNEX

Construction:

Final Project V1 for Arduino:

    #include "SCoop.h"
    #include "SerialRecord.h"
    #include <Servo.h>
    #include <Stepper.h>

    const int stepsPerRevolution = 160;

    Stepper leftStepper(stepsPerRevolution, 7, 6, 5, 3);
    Stepper rightStepper(stepsPerRevolution, 8, 9, 10, 11);
    Servo drawServo;

    // Two values arrive from Processing: [0] quadrant code, [1] pen trigger.
    SerialRecord reader(2);

    int val = 150;  // angle the servo returns to after moving to 0

    defineTask(TaskOne);
    defineTask(TaskTwo);

    void setup() {
      mySCoop.start();
    }

    void loop() {
      yield();
      delay(200);
    }

    // TaskOne: the quadrant code selects which stepper turns and in which direction.
    void TaskOne::setup() {
      Serial.begin(9600);
      rightStepper.setSpeed(60);
      leftStepper.setSpeed(60);
    }

    void TaskOne::loop() {
      reader.read();
      if (reader[0] == 1) {
        leftStepper.step(-stepsPerRevolution);
      }
      if (reader[0] == 2) {
        rightStepper.step(stepsPerRevolution);
        //delay(100);
      }
      if (reader[0] == 3) {
        leftStepper.step(stepsPerRevolution);
        //delay(100);
      }
      if (reader[0] == 4) {
        rightStepper.step(-stepsPerRevolution);
        //delay(100);
      }
      reader[0] = 0;
    }

    // TaskTwo: when the pen trigger is 1, swing the servo to 0 and back to val.
    void TaskTwo::setup() {
      drawServo.attach(12);
    }

    void TaskTwo::loop() {
      reader.read();
      if (reader[1] == 1) {
        drawServo.write(0);
        delay(200);
        drawServo.write(val);
      }
      reader[1] = 0;
    }

Final Project V1 for Processing:

    import processing.serial.*;
    import osteele.processing.SerialRecord.*;

    Serial serialPort;
    SerialRecord serialRecord;

    void setup() {
      size(600, 600);
      frameRate(30);
      strokeWeight(5);

      // Draw the four 300x300 quadrants in random colors.
      for (int x = 0; x <= 300; x += 300) {
        for (int y = 0; y <= 300; y += 300) {
          fill(random(255), random(255), random(255));
          rect(x, y, 300, 300);
        }
      }

      String serialPortName = SerialUtils.findArduinoPort();
      serialPort = new Serial(this, serialPortName, 9600);
      serialRecord = new SerialRecord(this, serialPort, 2);
    }

    // Store the code of the quadrant under the mouse.
    // The values are only sent in mousePressed() and mouseReleased().
    void draw() {
      if ((0 < mouseX) && (mouseX < 300) && (0 < mouseY) && (mouseY < 300)) {
        serialRecord.values[0] = 1;
      }
      if ((0 < mouseX) && (mouseX < 300) && (300 < mouseY) && (mouseY < 600)) {
        serialRecord.values[0] = 2;
      }
      if ((300 < mouseX) && (mouseX < 600) && (0 < mouseY) && (mouseY < 300)) {
        serialRecord.values[0] = 4;
      }
      if ((300 < mouseX) && (mouseX < 600) && (300 < mouseY) && (mouseY < 600)) {
        serialRecord.values[0] = 3;
      }
      delay(200);
    }

    void mousePressed() {
      serialRecord.values[1] = 0;
      serialRecord.send();
      delay(200);
    }

    void mouseReleased() {
      serialRecord.values[1] = 1;
      serialRecord.send();
      delay(200);
    }

Final Project V2 for Arduino:

    #include "SCoop.h"
    #include "SerialRecord.h"
    #include <Servo.h>
    #include <Stepper.h>

    const int stepsPerRevolution = 160;

    Stepper leftStepper(stepsPerRevolution, 7, 6, 5, 3);
    Stepper rightStepper(stepsPerRevolution, 8, 9, 10, 11);
    Servo drawServo;

    // Three values arrive from Processing:
    // [0] left stepper value, [1] right stepper value, [2] pen trigger.
    SerialRecord reader(3);

    int val = 180;

    defineTask(TaskOne);
    defineTask(TaskTwo);
    defineTask(TaskThree);

    // TaskOne: left stepper.
    void TaskOne::setup() {
      reader[0] = 0;
      reader[1] = 0;
      reader[2] = 0;
      Serial.begin(9600);
      leftStepper.setSpeed(30);
      drawServo.attach(12);
    }

    void TaskOne::loop() {
      reader.read();
      leftStepper.step(reader[0]);
      reader[0] = 0;
    }

    // TaskTwo: right stepper.
    void TaskTwo::setup() {
      Serial.begin(9600);
      rightStepper.setSpeed(30);
      drawServo.attach(12);
    }

    void TaskTwo::loop() {
      reader.read();
      rightStepper.step(reader[1]);
      reader[1] = 0;
    }

    // TaskThree: when the pen trigger is 1, swing the servo to 0 and back to val.
    void TaskThree::setup() {
      Serial.begin(9600);
      drawServo.attach(12);
    }

    void TaskThree::loop() {
      reader.read();
      if (reader[2] == 1) {
        drawServo.write(0);
        delay(500);
        drawServo.write(val);
        reader[2] = 0;
      }
    }

    void setup() {
      mySCoop.start();
    }

    void loop() {
      yield();
    }

Final Project V2 for Processing:

    import processing.serial.*;
    import osteele.processing.SerialRecord.*;

    Serial serialPort;
    SerialRecord serialRecord;

    float beginX = 150;    // Initial x-coordinate
    float beginY = 150;    // Initial y-coordinate
    float endX = 150;      // Final x-coordinate
    float endY = 150;      // Final y-coordinate
    float distX;           // X-axis distance to move
    float distY;           // Y-axis distance to move
    float exponent = 4;    // Determines the curve
    float x = 0.0;         // Current x-coordinate
    float y = 0.0;         // Current y-coordinate
    float step = 0.01;     // Size of each step along the path
    float pct = 0.0;       // Percentage traveled (0.0 to 1.0)

    // String lengths at the start and end of a move, and their differences.
    float beginl1;
    float beginl2;
    float endl1;
    float endl2;
    float dstl1;
    float dstl2;

    void setup() {
      size(500, 500);
      noStroke();
      distX = endX - beginX;
      distY = endY - beginY;

      String serialPortName = SerialUtils.findArduinoPort();
      serialPort = new Serial(this, serialPortName, 9600);
      serialRecord = new SerialRecord(this, serialPort, 3);
    }

    void draw() {
      fill(0, 2);
      rect(0, 0, width, height);

      pct += step;
      if (pct < 1.0) {
        x = beginX + (pct * distX);
        y = beginY + (pct * distY);
      }
      fill(255);
      ellipse(x, y, 20, 20);

      // Start the next move from the current position toward the mouse.
      pct = 0.0;
      beginX = x;
      beginY = y;
      endX = mouseX;
      endY = mouseY;
      distX = endX - beginX;
      distY = endY - beginY;

      // String lengths measured from the anchors at (0, 0) and (300, 0).
      beginl1 = dist(beginX, beginY, 0, 0);
      beginl2 = dist(beginX, beginY, 300, 0);
      endl1 = dist(endX, endY, 0, 0);
      endl2 = dist(endX, endY, 300, 0);
      dstl1 = endl1 - beginl1;
      dstl2 = endl2 - beginl2;

      // Add 300 so that the values sent over serial are not negative.
      serialRecord.values[0] = int(dstl1) + 300;
      serialRecord.values[1] = int(dstl2) + 300;

      if (mousePressed == true) {
        serialRecord.values[2] = 1;
        serialRecord.send();
      } else {
        serialRecord.values[2] = 0;
        serialRecord.send();
      }
    }

Final Project V3 for Arduino:

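    #include "SCoop.h"
    #include "SerialRecord.h"
    #include <Servo.h>
    #include <Stepper.h>

    const int stepsPerRevolution = 360;

    Stepper leftStepper(stepsPerRevolution, 7, 6, 5, 3);
    Stepper rightStepper(stepsPerRevolution, 8, 9, 10, 11);
    Servo drawServo;

    // Three values arrive from Processing:
    // [0] left length change + 300, [1] right length change + 300, [2] pen trigger.
    SerialRecord reader(3);

    int val = 180;

    defineTask(TaskOne);
    defineTask(TaskTwo);

    // TaskOne: move both steppers in small alternating portions.
    void TaskOne::setup() {
      Serial.begin(9600);
    }

    void TaskOne::loop() {
      reader.read();
      leftStepper.setSpeed(120);
      rightStepper.setSpeed(120);

      // Subtract the 300 offset, then scale from -200..200 down to -5..5.
      int leftSteps = map(reader[0] - 300, -200, 200, -5, 5);
      int rightSteps = map(reader[1] - 300, -200, 200, -5, 5);

      // Take turns 18 times so the two steppers appear to run together.
      for (int i = 0; i < 18; i++) {
        leftStepper.step(leftSteps / 3);
        rightStepper.step(rightSteps / 3);
      }

      // 300 means "no movement" once the offset is subtracted.
      reader[0] = 300;
      reader[1] = 300;
    }

    // TaskTwo: when the pen trigger is 1, write 0 and then val to the servo.
    void TaskTwo::setup() {
      Serial.begin(9600);
    }

    void TaskTwo::loop() {
      reader.read();
      if (reader[2] == 1) {
        drawServo.write(0);
        drawServo.write(val);
        reader[2] = 0;
      }
    }

    void setup() {
      reader[0] = 300;
      reader[1] = 300;
      reader[2] = 0;
      mySCoop.start();
    }

    void loop() {
      yield();
    }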

Version 1:

Version 2:

(Bern, n.d.)


(Dockery, n.d.)


(Visnjic, n.d.) The artifact, like the former, reacted immediately to user input, and the art it produced varied with each user. It created a visual reality for the users, bringing them into a suburbia that was renewed by the lights.

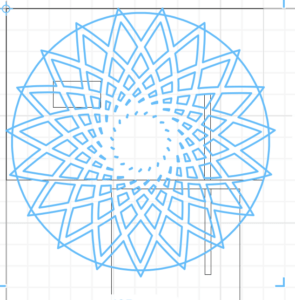

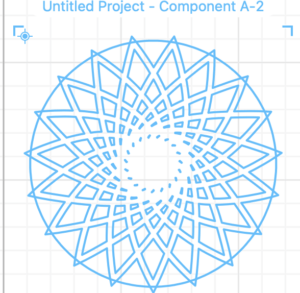

This was the picture that I wanted to draw, but I realized that it was hard to draw by hand because of all the lines and the shapes of the grass, so I wanted it done automatically. Thus, I made the following.
