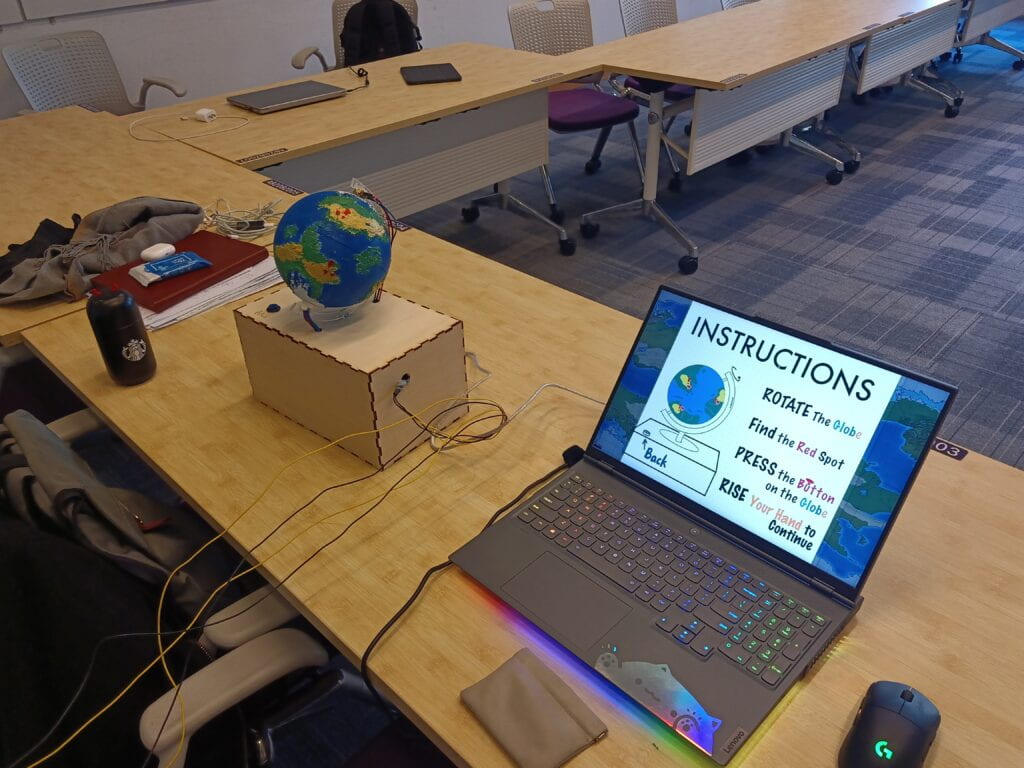

Project Title: Global Experience

Artist: Andy Ye & Jason Xia

Instructor: Margaret Minsky

Concept:

This time we wanted to design a project that gives users an immersive experience. First, we wanted to build a device that allows an object to move freely on a surface. We drew a draft and a blueprint and found 3D models on the internet. We both agreed that achieving free physical movement would be great: in our midterm project we simulated linear movement, so now was the right time to practice movement on a plane. The next step was to think about how to embed an idea into it to make it meaningful and immersive.

However, this time Andy and I had different ideas about how to achieve this goal. I proposed applying a mechanism or a set of rules to make a game, similar to the grid games I researched, such as “the Game of Life”. The difficulty was that I could not guarantee I would be able to design such rules. That’s why, during the presentations, I asked Amber how she designed and tested the rules for her grid-game-like project.

We then turned to Andy’s idea, which was to script events at different places. I was strongly against this idea because I thought a content-based project would be very time-consuming and boring. I shared with Andy my experience in the “Creative Game Design and Development” class, where my group designed a content-based game. The disadvantages of such a design are that the users’ experience depends heavily on the designers’ effort, users cannot experience a place unless the designers have coded it, and most of the experiences are modular and lack coherence with each other. There is also the problem of how much content to design: with too little, it becomes boring; with too much, users won’t have the patience to experience it all.

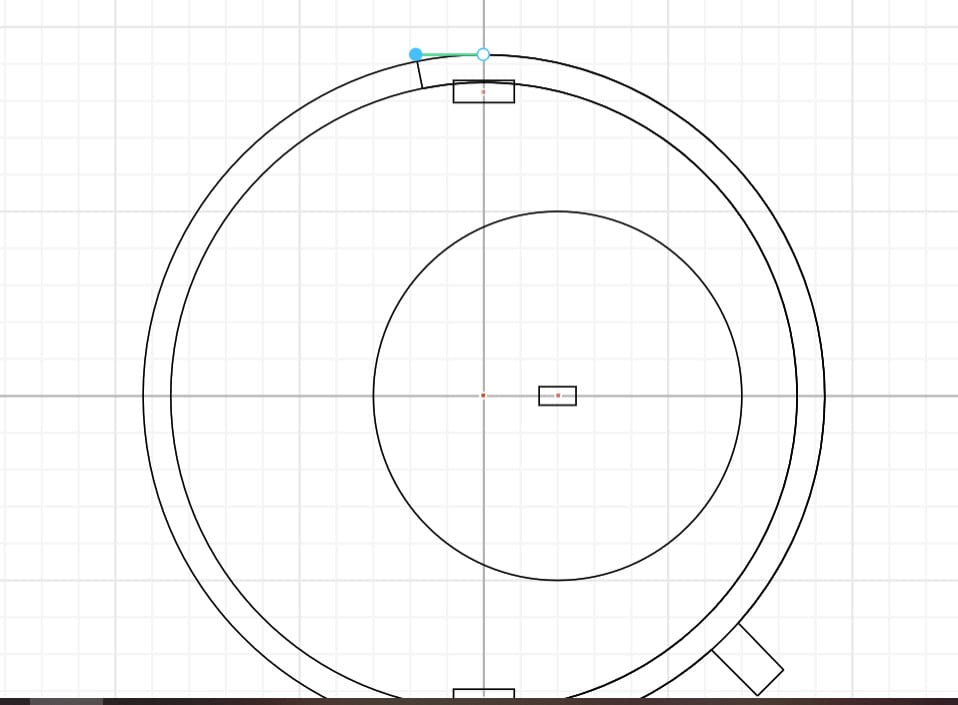

We decided to spend more time on the idea. We first consulted Prof. Minsky. She saw our draft and suggested that, if we wanted to locate a place on a plane, we could make a globe instead, which would save a lot of fabrication effort. I then accepted Andy’s idea of making a content-based project, but I embedded the multi-sensor idea from my research proposal to detect users’ movements. I thought this design would contribute to an immersive experience.

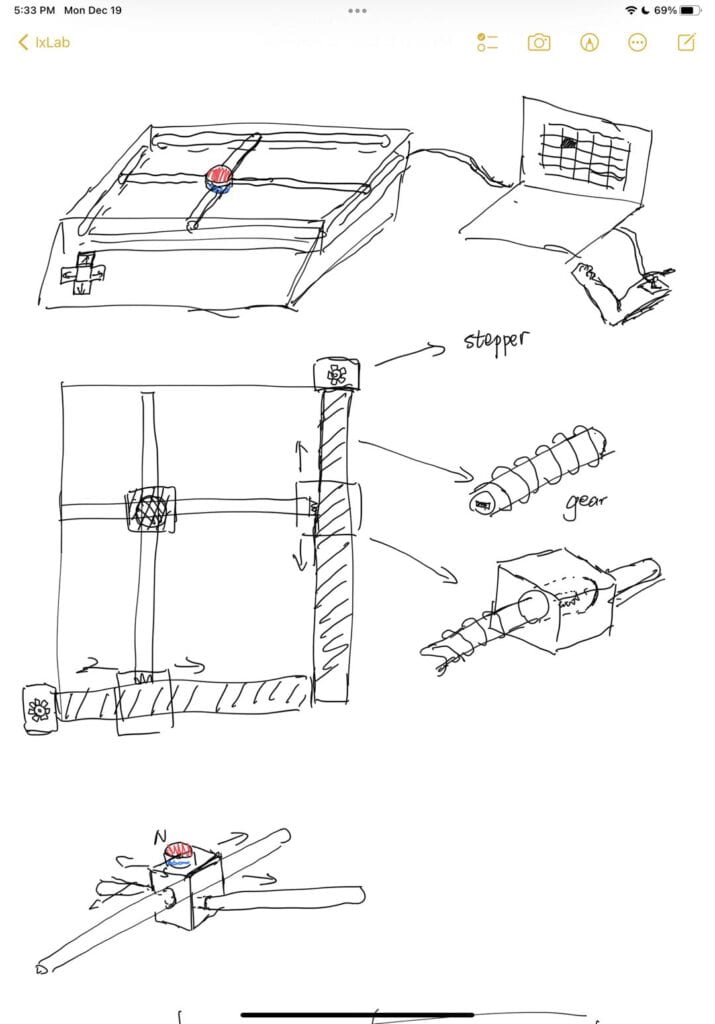

Here is the draft for our design.

We found a 3D model of Eberron, the world of the game Dungeons & Dragons Online. I thought this resource was much better than the Earth because it is a fantasy world, so we don’t have to care too much about coherence with reality. Moreover, Dungeons & Dragons Online originated from a very famous and interactive tabletop game, Dungeons & Dragons (D&D), where players create their own characters and use their creativity to take actions that follow strict rules, while a special player (the Dungeon Master) controls the game flow. It was a milestone for RPGs. This game fully aligns with what I expected: the designers set up some rules, and users learn the rules, use them, and finally comprehend them. Of course, we are unable to create such a fantastic game, but unlike Andy, I wanted to embed this thought in our project as an inspiration, even though it would not affect the main theme of our project much.

Preparation and Testing

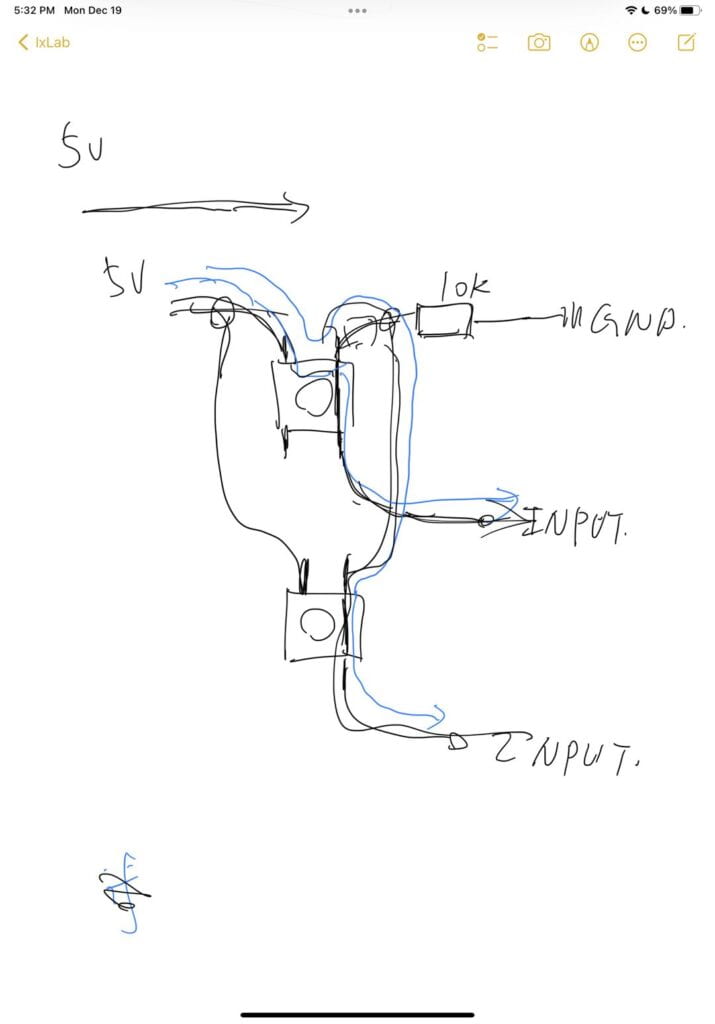

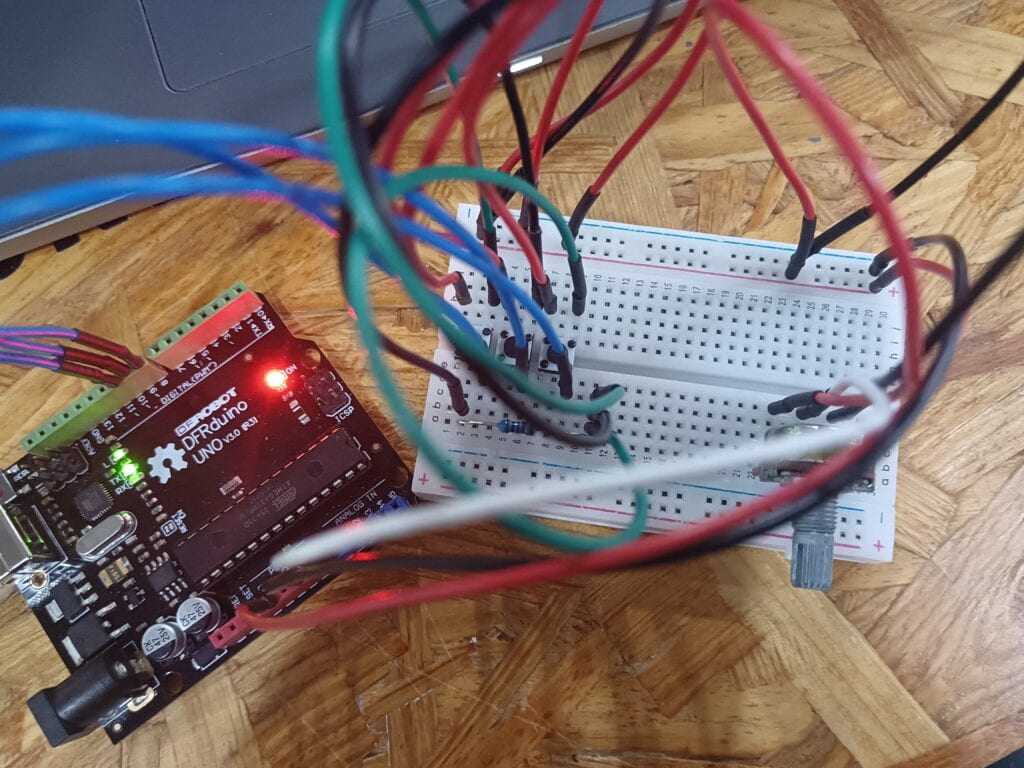

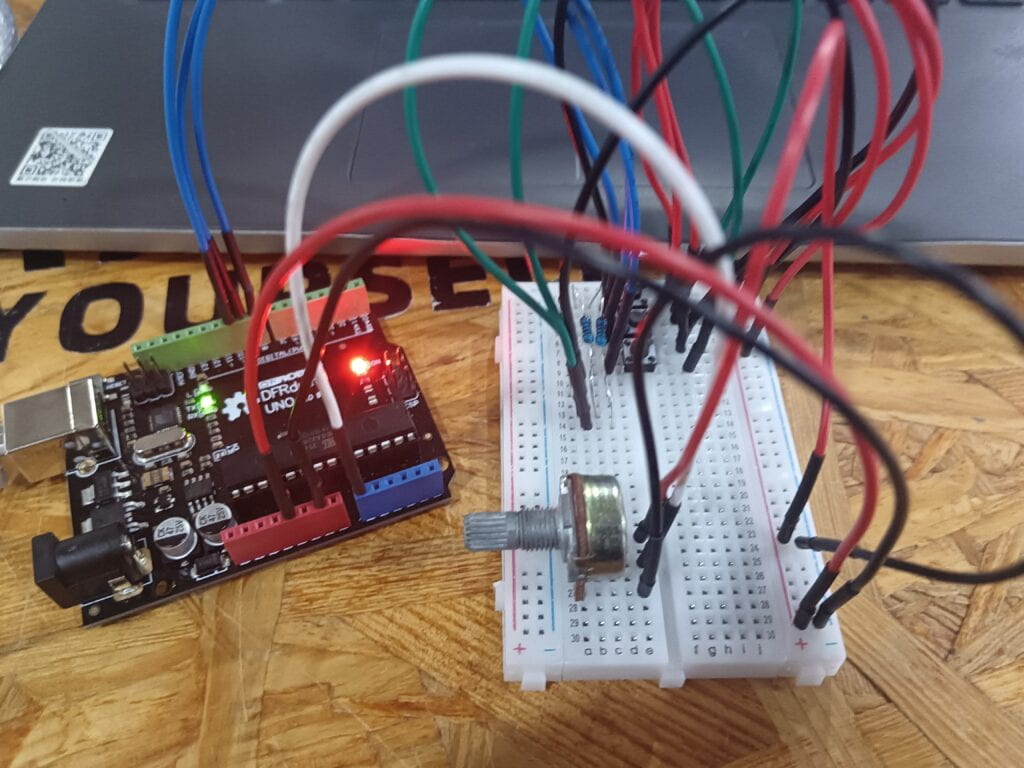

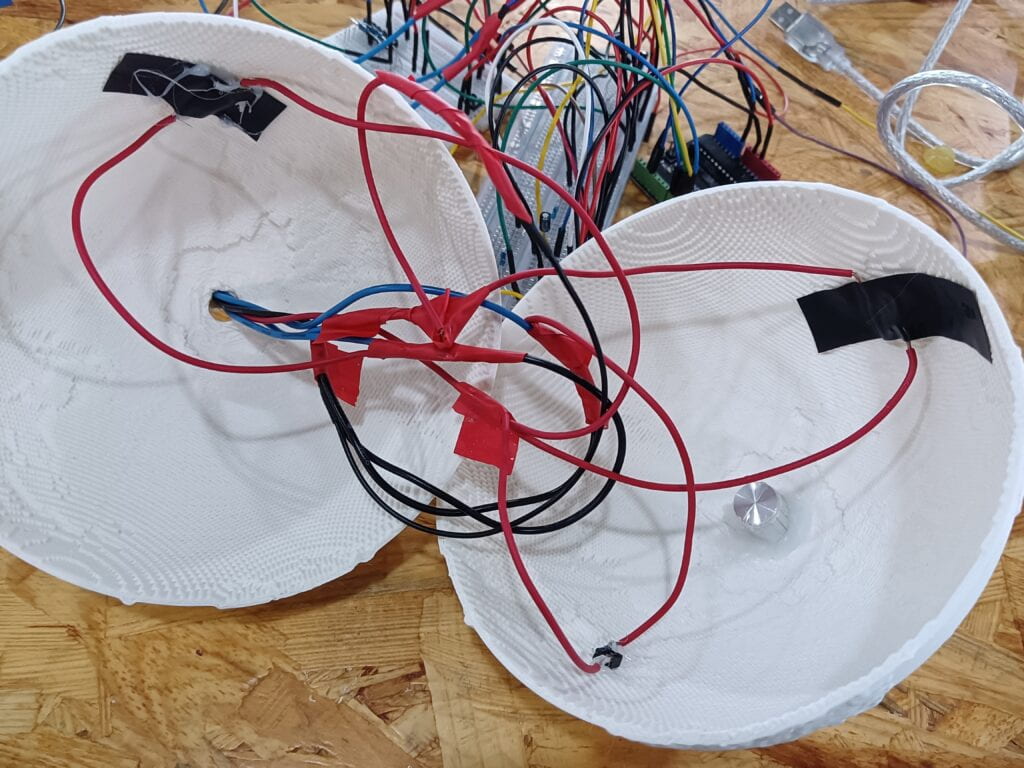

In preparation, we divided our work: I tested the mechanism for the globe’s movement, and Andy tested the performance of the sensors. Because all the wires had to be embedded inside the globe and pass through a small hole, while I was writing the Arduino code and testing the circuit on a breadboard, I was also thinking about how to minimize the soldering work and the number of wires. This was the first time I had dealt with so many buttons. At first, I thought I could merge the wires onto one resistor, but the test output showed that all the ports read the same input. I drew a sketch and guessed that, without a separate resistor for each port, the ports were effectively joined at one node, so the HIGH signal from any pressed button reached all of them. So I changed back to three resistors.
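To make the guess above concrete, here is a simplified logical model in Java (not an electrical simulation). It assumes each button connects 5 V to its own input pin, with pull-down resistor(s) to ground; the class and method names are hypothetical. With one resistor per pin, each pin only reflects its own button; with a single shared resistor the pins are joined at one node, so any press reads HIGH everywhere, which would match the identical inputs in our test output.

```java
import java.util.Arrays;

// Simplified logical model of button inputs (not an electrical simulation).
// Assumption: each button connects 5 V to its input pin when pressed.
class PullDownModel {

    // One pull-down resistor per pin: a pin reads HIGH only when its own button is pressed.
    static boolean[] separateResistors(boolean[] pressed) {
        return Arrays.copyOf(pressed, pressed.length);
    }

    // Pins wired to a single shared resistor are tied to one node,
    // so any pressed button pulls every pin on that node HIGH.
    static boolean[] sharedResistor(boolean[] pressed) {
        boolean anyPressed = false;
        for (boolean p : pressed) {
            anyPressed |= p;
        }
        boolean[] readings = new boolean[pressed.length];
        Arrays.fill(readings, anyPressed);
        return readings;
    }

    public static void main(String[] args) {
        boolean[] pressed = {false, true, false}; // only the second button is pressed
        System.out.println(Arrays.toString(separateResistors(pressed))); // [false, true, false]
        System.out.println(Arrays.toString(sharedResistor(pressed)));    // [true, true, true]
    }
}
```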

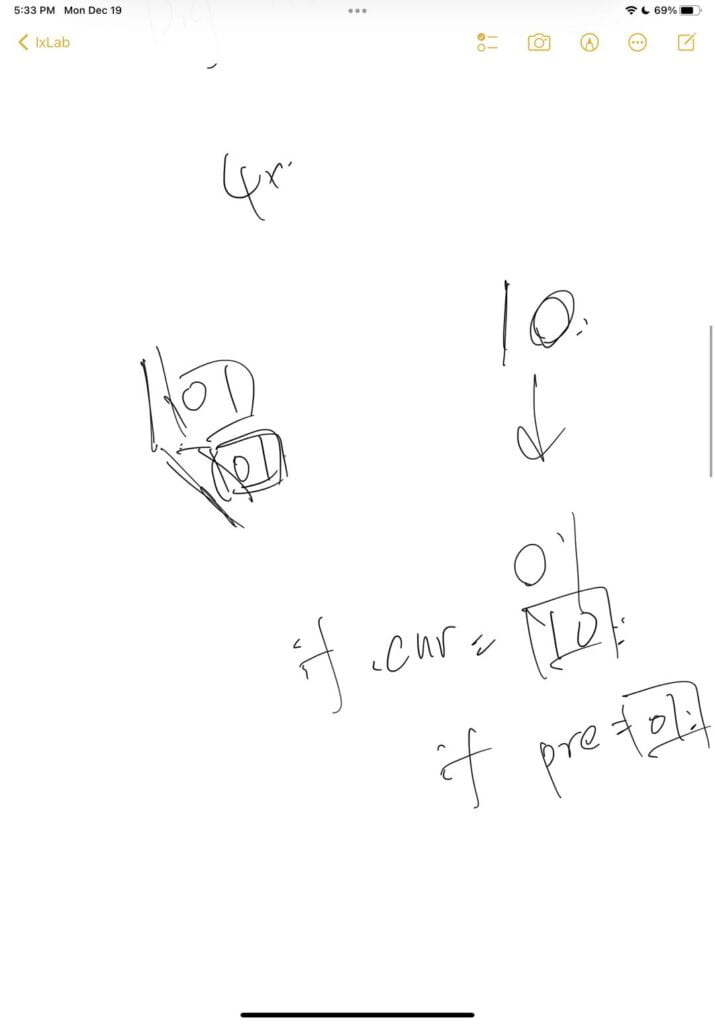

Sketch of my guess

A trivial but interesting thing I noticed while writing this report is that we never discussed the number of attractions later on; we both simply assumed three. The only thing correlated with it is that there are only four buttons in the kit, so I used four buttons in testing: one for going back and three for attractions. We never noticed the reason for this number, and no users asked about it, though I think it would be a very interesting question concerning the development of content-based projects.

Code for Arduino testing before merging:

#include "SerialRecord.h"

// Each record carries 5 values: the potentiometer on A0, then the buttons on pins 8-11
SerialRecord writer(5);

void setup() {
  Serial.begin(9600);
  pinMode(8, INPUT);
  pinMode(9, INPUT);
  pinMode(10, INPUT);
  pinMode(11, INPUT);
}

void loop() {
  int value1 = analogRead(A0);   // potentiometer (0-1023)
  int value2 = digitalRead(8);   // buttons (0 or 1)
  int value3 = digitalRead(9);
  int value4 = digitalRead(10);
  int value5 = digitalRead(11);

  writer[0] = value1;
  writer[1] = value2;
  writer[2] = value3;
  writer[3] = value4;
  writer[4] = value5;
  writer.send();

  delay(20);
}
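The Arduino sends five values per record: the potentiometer reading followed by the four button states. In our sketches the Processing SerialRecord library does the decoding, so the helper below only illustrates what one record carries. It assumes a record travels as one text line of comma-separated integers, which is our understanding of the library’s format rather than something checked here, and `parseRecord` is a hypothetical name.

```java
// Hypothetical helper: parses one text record such as "512,0,1,0,0"
// into an int array. Assumes comma-separated integers, one record per line.
class RecordParser {

    static int[] parseRecord(String line, int expectedFields) {
        String[] parts = line.trim().split(",");
        if (parts.length != expectedFields) {
            throw new IllegalArgumentException(
                "expected " + expectedFields + " fields but got " + parts.length + ": " + line);
        }
        int[] values = new int[expectedFields];
        for (int i = 0; i < expectedFields; i++) {
            values[i] = Integer.parseInt(parts[i].trim());
        }
        return values;
    }

    public static void main(String[] args) {
        int[] v = parseRecord("512,0,1,0,0\n", 5);
        // prints "potentiometer = 512, button on pin 9 = 1"
        System.out.println("potentiometer = " + v[0] + ", button on pin 9 = " + v[2]);
    }
}
```

Rejecting records with the wrong number of fields is a deliberate choice in this sketch: when the port is opened in the middle of a transmission, the first line read can be incomplete.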

After that, I wrote the Processing code, using some pictures downloaded from the internet for testing.

State = S_MAP

The key was to build a framework that controls the states of the program, because in different states the same input has a different meaning. I used my knowledge from ICS, in which I wrote a state machine. When I later helped others debug their projects, I found this framework very powerful, especially because Processing has a looping function, draw(). Many peers asked me how to add a starting page before their main part, and I always introduced the state idea. For example, STATE equals 0 at the beginning, and the draw function has a large if/else-if structure. The STATE == 0 branch always shows the starting page and watches for input from users. When input is detected, it changes STATE to 1, and the draw function never returns to the STATE == 0 branch. Such a signal might be a mouseClicked() event outside the draw function, or a timer recording the length of a video. This framework also separates the code for different scenes, which proved very helpful when we merged our code smoothly.
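The starting-page pattern described above can be sketched in plain Java. Here `draw()` stands in for Processing’s `draw()` and is assumed to be called once per frame, `mouseClicked()` plays the role of the event handler, and the 300-frame timeout is an arbitrary stand-in for a video’s length; none of these names come from our project code.

```java
// Minimal sketch of the state pattern: each frame, draw() runs only the
// branch for the current state, and an event or a timer changes the state.
class StartPageSketch {
    static final int S_START = 0;
    static final int S_MAIN = 1;
    static final int TIMEOUT_FRAMES = 300; // hypothetical, e.g. the length of an intro video

    int state = S_START;
    boolean clicked = false;   // set by the event handler, outside draw()
    int framesOnStartPage = 0;

    // Called once per frame, like Processing's draw(); returns what was shown.
    String draw() {
        if (state == S_START) {
            framesOnStartPage++;
            // Leave the start page on a click or when the timer runs out.
            // Nothing ever sets the state back to S_START.
            if (clicked || framesOnStartPage >= TIMEOUT_FRAMES) {
                state = S_MAIN;
            }
            return "start page";
        } else {
            return "main scene";
        }
    }

    // Plays the role of Processing's mouseClicked(), which runs outside draw().
    void mouseClicked() {
        clicked = true;
    }

    public static void main(String[] args) {
        StartPageSketch sketch = new StartPageSketch();
        System.out.println(sketch.draw()); // start page
        sketch.mouseClicked();
        System.out.println(sketch.draw()); // start page (the switch happens during this frame)
        System.out.println(sketch.draw()); // main scene
    }
}
```

In a full project the same structure simply grows one branch per scene, which keeps each scene’s code in its own block.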

Here is the Processing code before merging:

import processing.serial.*;
import osteele.processing.SerialRecord.*;

Serial serialPort;
SerialRecord serialRecord;

PImage Map, img1, img2, img3;

// Program states
int S_MAP = 0, S_HEAP = 1, S_PLAIN = 2, S_FOREST = 3;
int STATE;

int portNum = 5;
int[] val = new int[portNum];  // readings in this frame
int[] pre = new int[portNum];  // readings in the previous frame

void setup() {
  size(960, 600);

  String serialPortName = SerialUtils.findArduinoPort();
  serialPort = new Serial(this, serialPortName, 9600);
  serialRecord = new SerialRecord(this, serialPort, portNum);

  Map = loadImage("map2.jpg");
  img1 = loadImage("ocean.jpg");
  img2 = loadImage("mountain.png");
  img3 = loadImage("forest.jpg");

  STATE = S_MAP;
  for (int i = 0; i < portNum; i++) {
    val[i] = 0;
    pre[i] = 0;
  }
}

void draw() {
  serialRecord.read();
  for (int i = 0; i < portNum; i++) {
    val[i] = serialRecord.get(i);
  }

  if (STATE == S_MAP) {
    // Pan the double-width map with the potentiometer
    float posx = map(val[0], 0, 1023, -width, 0);
    image(Map, posx, 0, width*2, height);

    // Switch scenes only on the frame a button goes from 0 to 1
    if (val[1] == 1 && pre[1] == 0) {
      STATE = S_PLAIN;
    }
    if (val[2] == 1 && pre[2] == 0) {
      STATE = S_HEAP;
    }
    if (val[3] == 1 && pre[3] == 0) {
      STATE = S_FOREST;
    }
  }

  if (STATE == S_PLAIN) {
    image(img1, 0, 0, width, height);
    if (val[4] == 1 && pre[4] == 0) {  // back button
      STATE = S_MAP;
    }
  }

  if (STATE == S_HEAP) {
    image(img2, 0, 0, width, height);
    if (val[4] == 1 && pre[4] == 0) {
      STATE = S_MAP;
    }
  }

  if (STATE == S_FOREST) {
    image(img3, 0, 0, width, height);
    if (val[4] == 1 && pre[4] == 0) {
      STATE = S_MAP;
    }
  }

  // Remember this frame's readings for the next frame
  for (int i = 0; i < portNum; i++) {
    pre[i] = val[i];
  }
}
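Every button check above uses the same rising-edge pattern: act only when the reading is 1 now and was 0 in the previous frame, then copy `val` into `pre` at the end of `draw()`. Without it, a button held down for several frames would trigger once per frame. The pattern is pulled out below into a small Java class; `EdgeDetector` is a hypothetical name, and it assumes each call receives the same number of channels it was created with.

```java
// Reports a press only on the frame where a reading goes from 0 to 1
// (a rising edge), mirroring the val/pre arrays in the sketch above.
class EdgeDetector {
    private final int[] previous;

    EdgeDetector(int channels) {
        previous = new int[channels];
    }

    // Returns, for each channel, whether it rose from 0 to 1 since the last call.
    boolean[] update(int[] current) {
        boolean[] rose = new boolean[current.length];
        for (int i = 0; i < current.length; i++) {
            rose[i] = current[i] == 1 && previous[i] == 0;
            previous[i] = current[i]; // same role as pre[i] = val[i]
        }
        return rose;
    }

    public static void main(String[] args) {
        EdgeDetector back = new EdgeDetector(1);
        int[][] frames = {{0}, {1}, {1}, {1}, {0}, {1}};
        for (int[] f : frames) {
            System.out.print(back.update(f)[0] ? "PRESS " : "- ");
        }
        System.out.println(); // the line reads: - PRESS - - - PRESS
    }
}
```

In the final version this matters for the back button: the map state also listens to `val[4]`, so without the check, holding the button in a scene would return to the map and then jump straight to the instruction page on the next frame.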

Fabrication

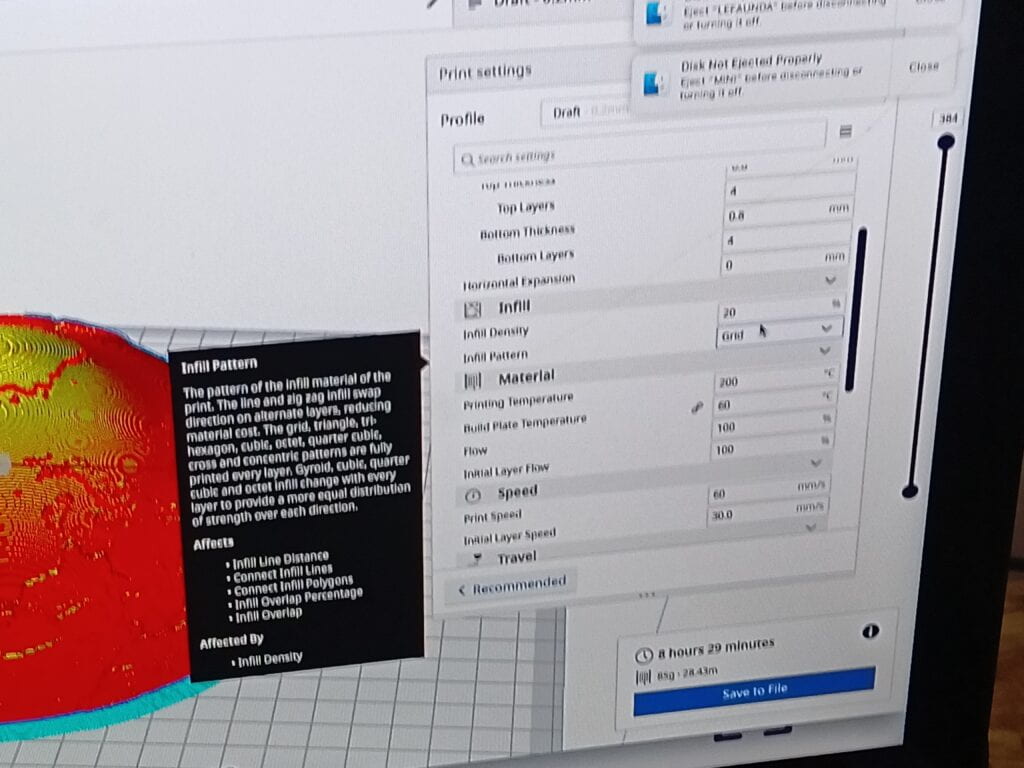

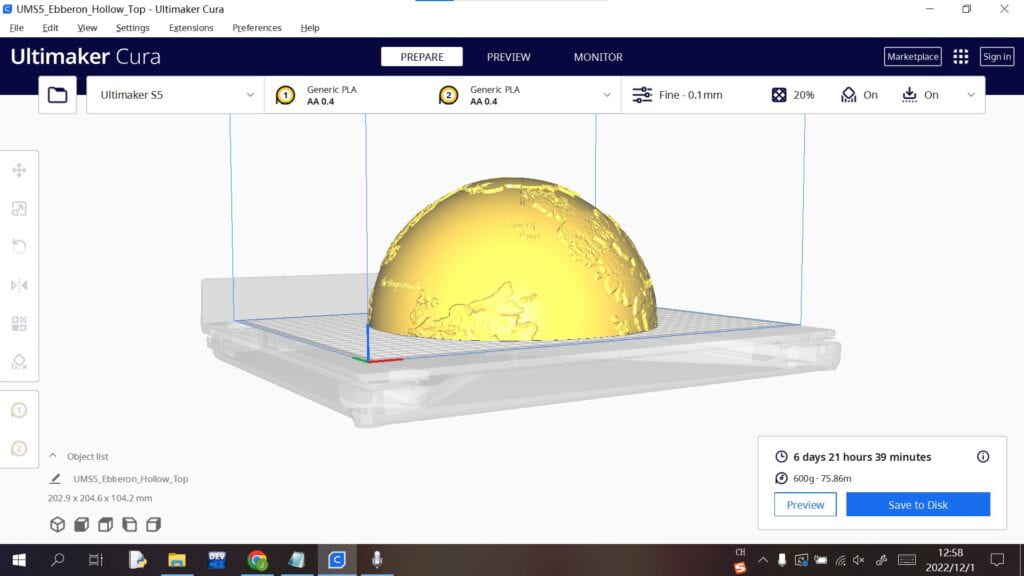

While I was testing the code, I also started a 3D printer to print a prototype. I asked a lab assistant to help me set up the 3D printer. The next day, Andy picked up the prototype, which was very ugly.

Just as we were about to abandon the idea of 3D printing our globe, Lab Manager Andy Garcia pointed out that I had used the wrong material and didn’t need to add supports. He helped us change the material, modify the parameters, and set up the printers, and we got a perfect sphere. He also helped us a lot later, and we want to give him our heartfelt thanks.

We also discussed how to detect users’ movements. Andy proposed using an acceleration sensor, but because we only had one and found its data hard to interpret, we decided to use tilt sensors instead. We separated our tasks: I gave my code to Andy, and he added code to detect users’ actions. I also asked him to draw a small avatar on the screen to add more interactivity; the avatar’s arms would rise according to the users’ detected movements. Meanwhile, I took a lot of measurements and made some 3D models and laser-cut files. Andy G. taught me a lot about how to choose dimensions, take correct measurements, and use the laser cutter. Here are the objects I made:

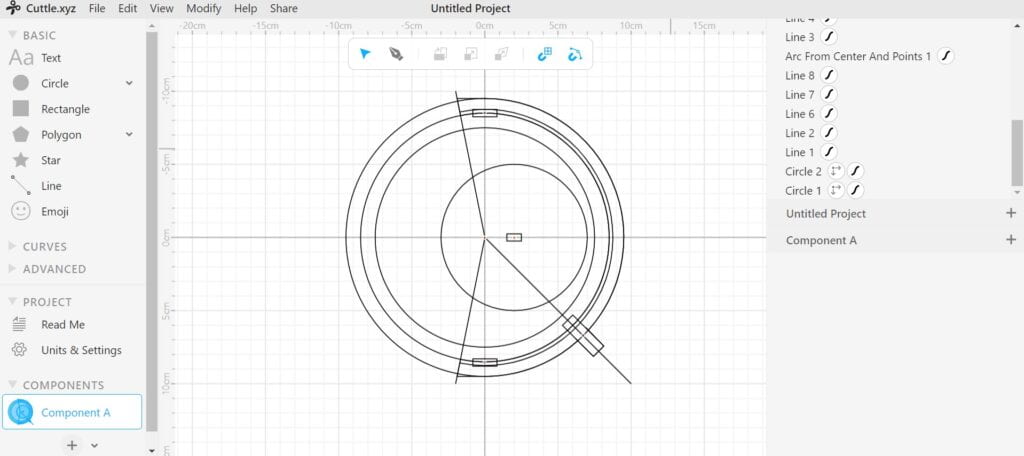

Globe support (laser-cut file), with auxiliary lines visible and hidden:

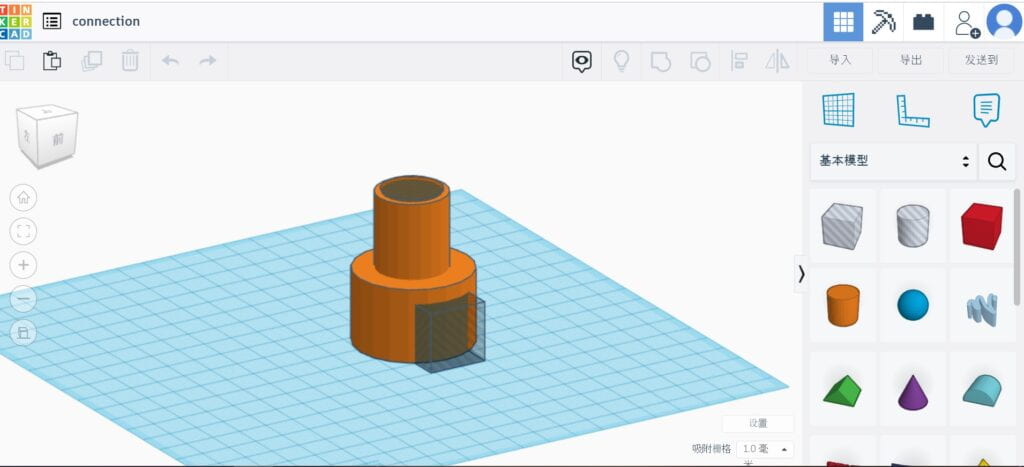

Connection between globe and frame:

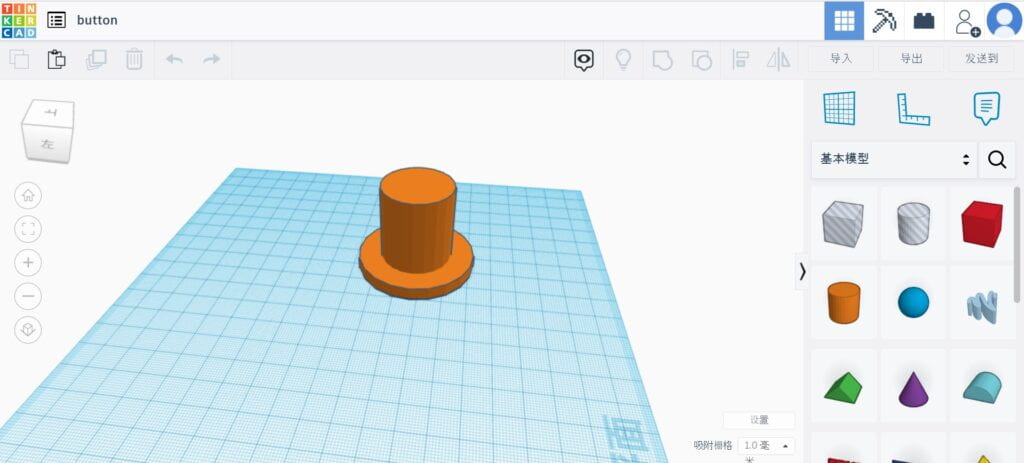

Button attachment:

Andy’s work in a nutshell: he found that Processing performed poorly when handling images. When he changed the position of images according to the potentiometer, there were long delays. He solved this problem by no longer resizing the images inside Processing and instead adjusting the images directly in Photoshop. He also soldered the wires and designed a scene where users can virtually climb a mountain.
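Panning the wide map comes down to turning the potentiometer reading (0 to 1023) into a horizontal image offset. The sketch before merging uses Processing’s `map()`, a linear re-mapping between two ranges, while the final sketch uses `-val[0]*2` and stops at -1700 once the reading reaches 850. Both rules are restated below in plain Java for illustration; `processingMap` follows the formula of Processing’s `map()` but is our own helper, and the class name is hypothetical.

```java
// Two ways of turning a potentiometer reading (0..1023) into the map's x offset.
class MapPan {

    // Linear re-mapping, same formula as Processing's map().
    static float processingMap(float value, float start1, float stop1,
                               float start2, float stop2) {
        return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
    }

    // Version before merging: the whole 0..1023 range pans one screen width.
    static float firstVersionOffset(int reading, int width) {
        return processingMap(reading, 0, 1023, -width, 0);
    }

    // Final version: two pixels per step, held at -1700 from a reading of 850 upward.
    static int finalVersionOffset(int reading) {
        return reading >= 850 ? -1700 : -reading * 2;
    }

    public static void main(String[] args) {
        System.out.println(firstVersionOffset(0, 960));    // -960.0
        System.out.println(firstVersionOffset(1023, 960)); // 0.0
        System.out.println(finalVersionOffset(400));       // -800
        System.out.println(finalVersionOffset(1000));      // -1700
    }
}
```

Because -850 × 2 is exactly -1700, the clamp in the final version joins the linear part without a jump.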

We drilled some holes in the hemispheres and embedded the buttons. We assembled the hemispheres and the support. Then came the user testing.

User-testing

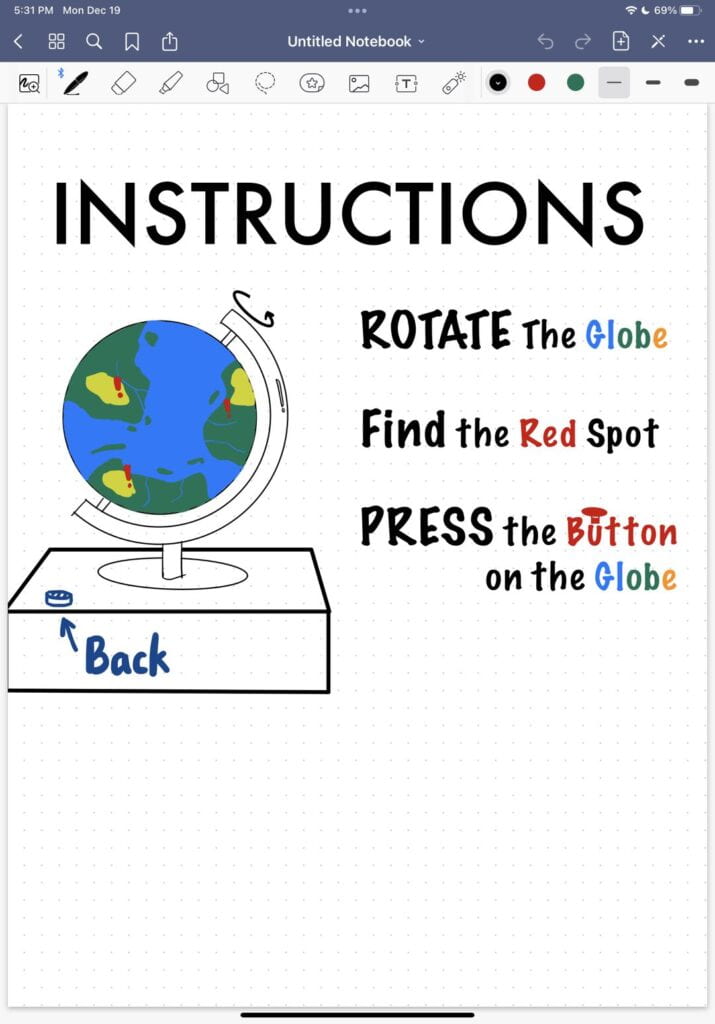

We implemented the synchronized rotation of the map and one climbing scene. Before the user test, I assured Andy that we didn’t need instructions because the whole design was direct and simple: someone who sees a globe will want to rotate it, someone who sees a button will try to press it, and someone wearing two sensors on their arm will try to move it. But we found that users still got stuck. One user moved her arm and there was no feedback on the screen because the program was in the map state. Another user kept moving only her forearm in the climbing scene, and we had to tell her that she needed to raise her upper arm.
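The confusion is easier to see when the sensor roles are written out. In the complete code listed later, `Rtilt2` sets the elbow height of the avatar’s right arm and is also the only input `climb()` listens to, while `Rtilt1` only bends the hand up or down from the elbow. Below is a pure-function restatement of that right-arm logic from `displayMan()` in plain Java (our own helper; y grows downward as in Processing). Which physical sensor is which is inferred from the code, not stated in it.

```java
// Restates the right-arm logic of displayMan(): returns the y coordinates
// {elbowY, handY} for the two tilt readings. Smaller y is higher on screen.
class ArmPose {

    static int[] rightArm(int rTilt1, int rTilt2) {
        // Rtilt2 (presumably the upper-arm sensor) moves the elbow.
        int elbowY = (rTilt2 == 0) ? 200 : 250;
        // Rtilt1 (presumably the forearm sensor) bends the hand relative to the elbow.
        int handY = (rTilt1 == 0) ? elbowY - 25 : elbowY + 25;
        return new int[] {elbowY, handY};
    }

    public static void main(String[] args) {
        for (int t2 = 0; t2 <= 1; t2++) {
            for (int t1 = 0; t1 <= 1; t1++) {
                int[] arm = rightArm(t1, t2);
                System.out.println("Rtilt2=" + t2 + " Rtilt1=" + t1
                        + " -> elbowY=" + arm[0] + ", handY=" + arm[1]);
            }
        }
    }
}
```

If `Rtilt1` is indeed the forearm sensor, moving only the forearm changes the drawn hand but never advances the climb, which matches what we observed.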

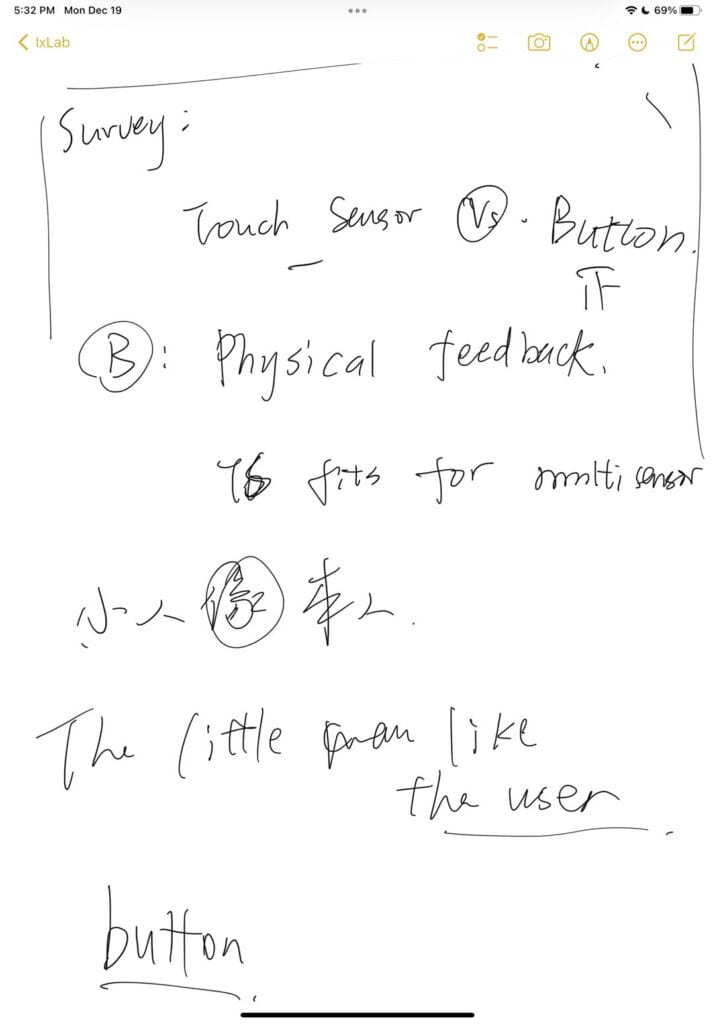

We also conducted a survey because Andy and I debated whether to use buttons or touch sensors. All the users voted for buttons; the most common reason was that buttons provide physical interactivity.

Post User Testing Fabrication

After the user testing, we added more scenes and some decorations. I painted the globe green and blue. I also made the laser-cut file for a shell that hides the wires and holds the globe. Meanwhile, Andy wrote the code for the avatar’s movement and a new scene where users can ride a camel. He also replaced all the pictures with photos he took himself. I finished my part earlier, so I went to help peers with their project code; in exchange, I invited them to user-test our project. When Andy finished his part, he told me he really wanted to draw an instruction page, so I took the code, wrote the state-switching code for the instructions, and built another scene where users can pick mushrooms. Compared with the other scenes, this one is a bit crude, but it was good practice for understanding Andy’s code, because he defined a lot of variables.

User testing:

Instruction Page:

Complete Processing code:

import processing.serial.*;
import osteele.processing.SerialRecord.*;
import processing.sound.*;

Serial serialPort;
SerialRecord serialRecord;

PImage Map, img1, img2, img3, imgCamel, imgm, inst;

SoundFile oceanS;
SoundFile desertS;
SoundFile forestS;
SoundFile climbS;
SoundFile pickS;
SoundFile rideS;

boolean oceanPlay = false;
boolean desertPlay = false;
boolean forestPlay = false;

// Program states: S_INS is the instruction page, S_HEAP the cliff-climbing scene,
// S_OCEAN the desert (camel) scene, S_FOREST the mushroom scene
int S_MAP = 0, S_HEAP = 1, S_OCEAN = 2, S_FOREST = 3, S_INS = -1;
int STATE;
int LAST_STATE;

int portNum = 7;

// Tilt sensor readings
int Rtilt1 = 0;
int Rtilt2 = 0;

// Avatar offset and scene positions
int manTransX = 0;
int manTransY = 0;
int desertY = -2960;
int camelManMoveX = 0;
float cosCMX = 0;

int[] val = new int[portNum];
int[] pre = new int[portNum];

// Mushroom positions and the number picked so far
//boolean[] msrb = new boolean[7];
int[] msrx = new int[7];
int[] msry = new int[7];
int cur = 0;

void setup() {
  fullScreen();
  frameRate(165);
  //size(1600, 900);
  background(0);

  String serialPortName = SerialUtils.findArduinoPort();
  serialPort = new Serial(this, serialPortName, 9600);
  serialRecord = new SerialRecord(this, serialPort, portNum);

  Map = loadImage("mapH.jpg");
  img1 = loadImage("desert.jpg");
  img2 = loadImage("cliff25604.jpg");
  img3 = loadImage("forest.jpg");
  imgCamel = loadImage("camel.png");
  imgm = loadImage("mushroom.png");
  inst = loadImage("instruction.jpg");

  oceanS = new SoundFile(this, "cliff.mp3");
  desertS = new SoundFile(this, "desert.mp3");
  forestS = new SoundFile(this, "forest.mp3");
  climbS = new SoundFile(this, "climbS.mp3");
  pickS = new SoundFile(this, "pickS.mp3");
  rideS = new SoundFile(this, "rideS.mp3");

  STATE = S_INS;
  //STATE = S_OCEAN;

  for (int i = 0; i < portNum; i++) {
    val[i] = 0;
    pre[i] = 0;
  }
}

int preRtilt1 = 0;
int preRtilt2 = 0;
int status = 0;
int preStatus = 0;
//int camelMove = 1;

void draw() {
  // Read all sensor values for this frame
  serialRecord.read();
  for (int i = 0; i < portNum; i++) {
    val[i] = serialRecord.get(i);
  }
  Rtilt1 = val[5];
  Rtilt2 = val[6];

  //------------------------------------------------------------------
  // main structure
  if (STATE == S_INS) {
    push();
    image(inst, 0, 0);
    pop();
    // Leave the instruction page when both tilt sensors read 0
    if (val[5] == 0) {
      if (val[6] == 0) {
        STATE = S_MAP;
      }
    }
  }

  if (STATE == S_MAP) {
    //float posx = map(val[0],0,1023,0,width);
    oceanS.stop();
    desertS.stop();
    forestS.stop();
    oceanPlay = false;
    desertPlay = false;
    forestPlay = false;
    manTransX = 0;
    manTransY = 0;

    // Pan the map with the potentiometer, holding the offset at -1700 from 850 upward
    if (val[0] >= 850) {
      image(Map, -1700, 0);
    } else {
      image(Map, -val[0]*2, 0);
    }
    //fill(0);
    //text(-val[0]*2,100,100);

    // A button acts on its rising edge (0 -> 1); val[4] returns to the instructions
    if (val[1] == 1 && pre[1] == 0) {
      STATE = S_HEAP;
    }
    if (val[4] == 1 && pre[4] == 0) {
      STATE = S_INS;
    }
    if (val[2] == 1 && pre[2] == 0) {
      STATE = S_OCEAN;
    }
    if (val[3] == 1 && pre[3] == 0) {
      STATE = S_FOREST;
    }
  }

  //------------------------------------------------------------------
  // S_OCEAN: desert scene where the avatar rides a camel
  if (STATE == S_OCEAN) {
    if (val[4] == 1 && pre[4] == 0) {
      STATE = S_MAP;
    }
    if (desertPlay == false) {
      desertS.play();
      desertPlay = true;
    }
    // initialize when entering the scene
    if (LAST_STATE != STATE) {
      climbTran = 0;
      climbCount = 0;
      desertY = -2960;
      manTransX = -750;
      manTransY = 270;
      camelManMoveX = 0;
    }
    // Back-and-forth horizontal movement of the camel and rider
    cosCMX = sin(camelManMoveX/100.)*800;
    Rtilt2 = 1;  // keep the rider's upper arm in one pose in this scene
    image(img1, 0, desertY);
    push();
    if (((camelManMoveX+150) % 600) <= 300) {
      translate(800+cosCMX, 0);
    } else {
      // mirror the camel and rider horizontally
      translate(800+cosCMX, 0);
      scale(-1, 1);
      translate(-1100, 0);
    }
    camel();
    displayMan();
    pop();
  }

  //------------------------------------------------------------------
  // S_HEAP: cliff-climbing scene
  if (STATE == S_HEAP) {
    //image(img2,0,0,width,height);
    if (val[4] == 1 && pre[4] == 0) {
      STATE = S_MAP;
    }
    if (oceanPlay == false) {
      oceanS.play();
      oceanPlay = true;
    }
    // initialize when entering the scene
    if (LAST_STATE != STATE) {
      climbTran = 0;
      climbCount = 0;
      cliffY = -5120;
      manTransX = 0;
      manTransY = 0;
    }
    //Rtilt1 = 0;
    push();
    translate(0, climbTran);
    //image(cliff, 0, cliffY, width, width*3);
    image(img2, -1, cliffY);
    displayMan();
    pop();
    climb();
  }

  //------------------------------------------------------------------
  // S_FOREST: mushroom-picking scene
  if (STATE == S_FOREST) {
    image(img3, 0, 0);
    if (forestPlay == false) {
      forestS.play();
      forestPlay = true;
    }
    // initialize when entering the scene: scatter 7 mushrooms at random positions
    if (LAST_STATE != STATE) {
      for (int i = 0; i < 7; i++) {
        msrx[i] = int(random(40, width-200));
        msry[i] = int(random(height-600, height-400));
        //msrb[i] = true;
      }
      cur = 0;
      manTransX = 0;
      manTransY = 0;
    }
    textSize(50);
    text("Mushrooms:", 10, 100);
    text(cur, 300, 100);
    // Move the avatar next to the next unpicked mushroom
    if (cur < 7) {
      manTransX = msrx[cur]-1100;
      manTransY = msry[cur]-800;
    }
    pick();
    // Draw only the mushrooms that have not been picked yet
    for (int i = cur; i < 7; i++) {
      image(imgm, msrx[i], msry[i]);
    }
    displayMan();
    if (val[4] == 1 && pre[4] == 0) {
      STATE = S_MAP;
    }
  }

  // Remember this frame's readings for rising-edge detection
  for (int i = 0; i < portNum; i++) {
    pre[i] = val[i];
  }
  //------------------------------------------------------------------
  LAST_STATE = STATE;
}

int climbTran = 0;
int climbCount = 0;
int cliffY = -5120;

// Each rising edge of Rtilt2 scrolls the cliff for 24 frames
void climb() {
  status = Rtilt2;
  if (status != preStatus && status == 1) {
    climbCount = 1;
    climbS.play();
  }
  if (climbCount >= 1 && climbCount < 25 && cliffY <= -10) {
    cliffY += 10;
    climbCount += 1;
    manTransY += 1;
    if (manTransY >= 200 && manTransY < 500) {
      manTransX -= 3;
    } else if (manTransY >= 500) {
      manTransY += 11;
    }
  } else {
    climbCount = 0;
  }
  preStatus = status;

  // Remaining-height panel and progress bar
  fill(0, 100);
  rect(2100, 0, 600, 1600);
  fill(255);
  textSize(150);
  text(-cliffY/50, 2150, 120);
  text("M", 2400, 120);
  textSize(100);
  text("Remains", 2150, 220);
  push();
  strokeWeight(100);
  stroke(255);
  line(2350, 400, 2350, 1500);
  stroke(0);
  line(2350, 1500-1100-cliffY/5, 2350, 1500);
  pop();
}

// Each rising edge of Rtilt1 advances the ride (scrolls the desert) for 24 frames
void camel() {
  image(imgCamel, 280, 1000);
  status = Rtilt1;
  if (status != preStatus && status == 1) {
    climbCount = 1;
    rideS.play();
  }
  if (climbCount >= 1 && climbCount < 25 && desertY <= -1000) {
    desertY += 2;
    climbCount += 1;
    camelManMoveX += 1;
  } else {
    climbCount = 0;
  }
  preStatus = status;
}

// Each rising edge of Rtilt1 picks one mushroom (7 in total)
void pick() {
  status = Rtilt1;
  if (status != preStatus && status == 1 && cur < 7) {
    cur += 1;
    pickS.play();
  }
  preStatus = status;
}

//int Ltilt1 = 0;
//int Ltilt2 = 0;

int RarmHeight1 = 225;
int RarmHeight2 = 225;
int LarmHeight1 = 225;
int LarmHeight2 = 225;

// Draws the stick-figure avatar; the tilt sensors set the arm positions
void displayMan() {
  // Rtilt2 sets the elbow heights
  if (Rtilt2 == 0) {
    RarmHeight1 = 200;
    LarmHeight1 = 250;
  } else {
    RarmHeight1 = 250;
    LarmHeight1 = 200;
  }
  // Rtilt1 bends the hands up or down from the elbows
  if (Rtilt1 == 0) {
    RarmHeight2 = RarmHeight1 - 25;
    LarmHeight2 = LarmHeight1 + 25;
  } else {
    RarmHeight2 = RarmHeight1 + 25;
    LarmHeight2 = LarmHeight1 - 25;
  }
  //text(camelManMoveX, 100, 100);
  push();
  translate(1180 + manTransX, 600 + manTransY);
  strokeWeight(30);
  fill(255);
  stroke(255);
  ellipse(100, 100, 100, 100);               // head
  line(100, 100, 100, 300);                  // body
  line(100, 300, 150, 400);                  // right leg
  ellipse(100, 100, 100, 100);
  line(100, 225, 175, RarmHeight1);          // right upper arm
  line(175, RarmHeight1, 200, RarmHeight2);  // right forearm
  // In the camel scene (S_OCEAN) the left side is not drawn
  if (STATE != S_OCEAN) {
    line(100, 225, 25, LarmHeight1);         // left arm
    line(25, LarmHeight1, 0, LarmHeight2);   // left arm
    line(100, 300, 50, 400);                 // left leg
  }
  pop();
}
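The numbers in the climbing scene can be checked by replaying `climb()` without graphics. Each rising edge of `Rtilt2` starts a burst in which `cliffY` grows by 10 per frame while `climbCount` runs from 1 to 24, so one arm raise scrolls 240 pixels, about 4.8 of the displayed meters (the panel shows `-cliffY/50`). The Java sketch below copies only the `cliffY` and `climbCount` updates, assumes each raise holds the sensor at 1 for 30 frames and then drops it for one frame, and counts raises until the condition `cliffY <= -10` stops the motion. The class and its methods are hypothetical.

```java
// Replays the cliffY/climbCount logic of climb() without drawing,
// to count how many arm raises reach the top of the cliff.
class ClimbSimulation {
    int cliffY = -5120;
    int climbCount = 0;
    int preStatus = 0;

    // One frame of climb(), reduced to the scrolling logic.
    void frame(int status) {
        if (status != preStatus && status == 1) {
            climbCount = 1;                 // rising edge starts a burst
        }
        if (climbCount >= 1 && climbCount < 25 && cliffY <= -10) {
            cliffY += 10;
            climbCount += 1;
        } else {
            climbCount = 0;
        }
        preStatus = status;
    }

    // One raise: the sensor reads 1 for holdFrames frames, then 0 for one frame.
    void raise(int holdFrames) {
        for (int i = 0; i < holdFrames; i++) {
            frame(1);
        }
        frame(0);
    }

    public static void main(String[] args) {
        ClimbSimulation sim = new ClimbSimulation();
        System.out.println("start: " + (-sim.cliffY / 50) + " M remaining"); // start: 102 M remaining
        int raises = 0;
        while (sim.cliffY <= -10) {
            sim.raise(30);
            raises++;
        }
        System.out.println("raises needed: " + raises); // raises needed: 22
    }
}
```

Reaching the top takes 512 steps of 10 pixels, so 21 full bursts of 24 steps leave `cliffY` at -80 and a 22nd raise finishes the climb.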

Andy also wrote the code for the avatar’s movement and found some background music.

Demo:

Conclusion, Improvements, and Further Thoughts

This was a very memorable experience, especially the fabrication process. As a CS major, I believe I was the most engaged user of our own project. I had written a great deal of code before and debugged it through sleepless nights, so interactivity embedded in a program is very common in my eyes. The fabrication process, however, gave me an impressive experience: when I wanted to create something, I could open a project file on the computer, create a digital object, upload it to a machine, whether a 3D printer or a laser cutter, and get the object I wanted. Throughout the process, I debugged the real-world measurements and the fabricated objects several times. It expanded the realm of my projects while giving me freedom in fabrication.

Our project did provide an engaging and interesting experience for users. The combination of location selection and movement detection creates its interactivity. Taken separately, each part could still be called an interactive project, but combined, the two systems generate an engaging experience that I would say is greater than the sum of their individual effects. This project combined Andy’s idea of an immersive experience (somewhat like AR, in my eyes) with my design of multiple tilt sensors. It proved to be a good combination, and it’s good to see that what we brainstormed earlier contributed to the final project. I also secretly embedded the idea of an interactive grid game in the D&D map.

There are some drawbacks worth mentioning. Andy designed the avatar to move within the scene, but I think separating the avatar from the scene and designing an interface might be a good idea. First, the avatar is just a figure made of lines and angles, which damages the realistic scene. Second, users’ simple movements can hardly match the avatar’s transitions. But my interface idea also has drawbacks: the avatar would be hard to integrate into the scene, and users might not understand what they are doing. Anyway, I think that if the avatar were more realistic, we could reduce this drawback and then discuss which design is better.

Another drawback, in my eyes, is rooted in the nature of this project: it is content-based. We replied casually that we would build more scenes in the future. But the question is how many scenes we should build. The answer is certainly not “the more the better”. The interactivity, which I regard as the heart of our project, was determined as soon as we built the first scene; adding a new scene won’t change it. This is a serious question in the art field. We see projects that spend enormous effort, money, and time but fail to earn the expected returns, while projects with tiny budgets become famous around the world overnight. What is the meaning of creating content with similar mechanisms one piece after another? This question is hard to answer right now, but I believe that with more experience and more projects designed, I can gradually reach the answer.
