Draw your life · Jelena Xu · Eric Parren

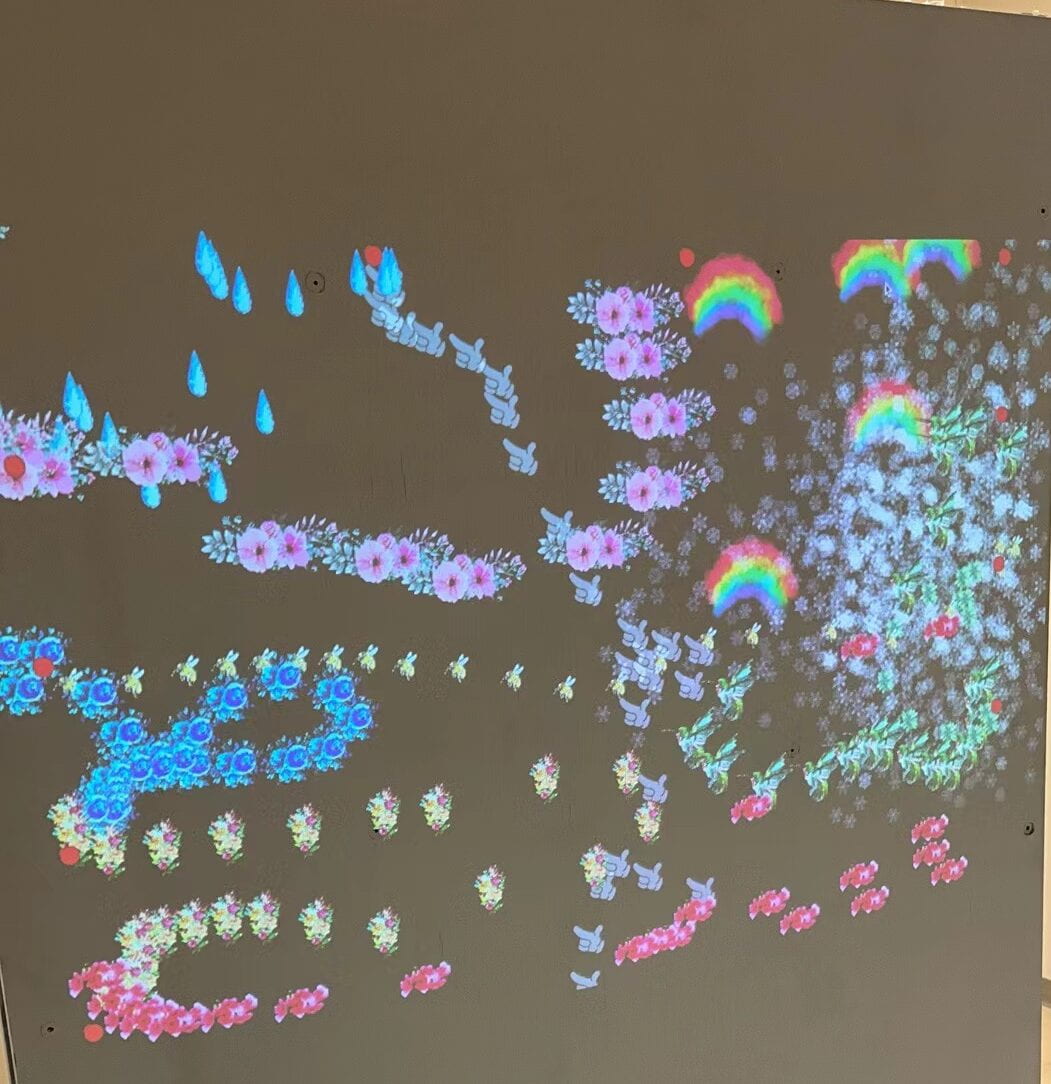

By picking up the “Magic Wand” and waving it in the air, the user triggers images on the canvas. The user can either choose different pen images by exploring the corners or wait for the internal interactions to happen. With this project, we hope to remind people who are trapped among endless skyscrapers and overwhelmed by never-ending schedules of the beauty of nature’s images and sounds through an immersive experience. The drawing process is also open-ended, which allows collaborative production between users. It is designed to encourage people to pull themselves away from virtual connections via social media and other internet platforms and to feel the real, warm, and lively connection between individuals. It is about drawing: giving life to a Xanadu, and drawing out the connection between humans and nature and within the whole human community.

From the research…

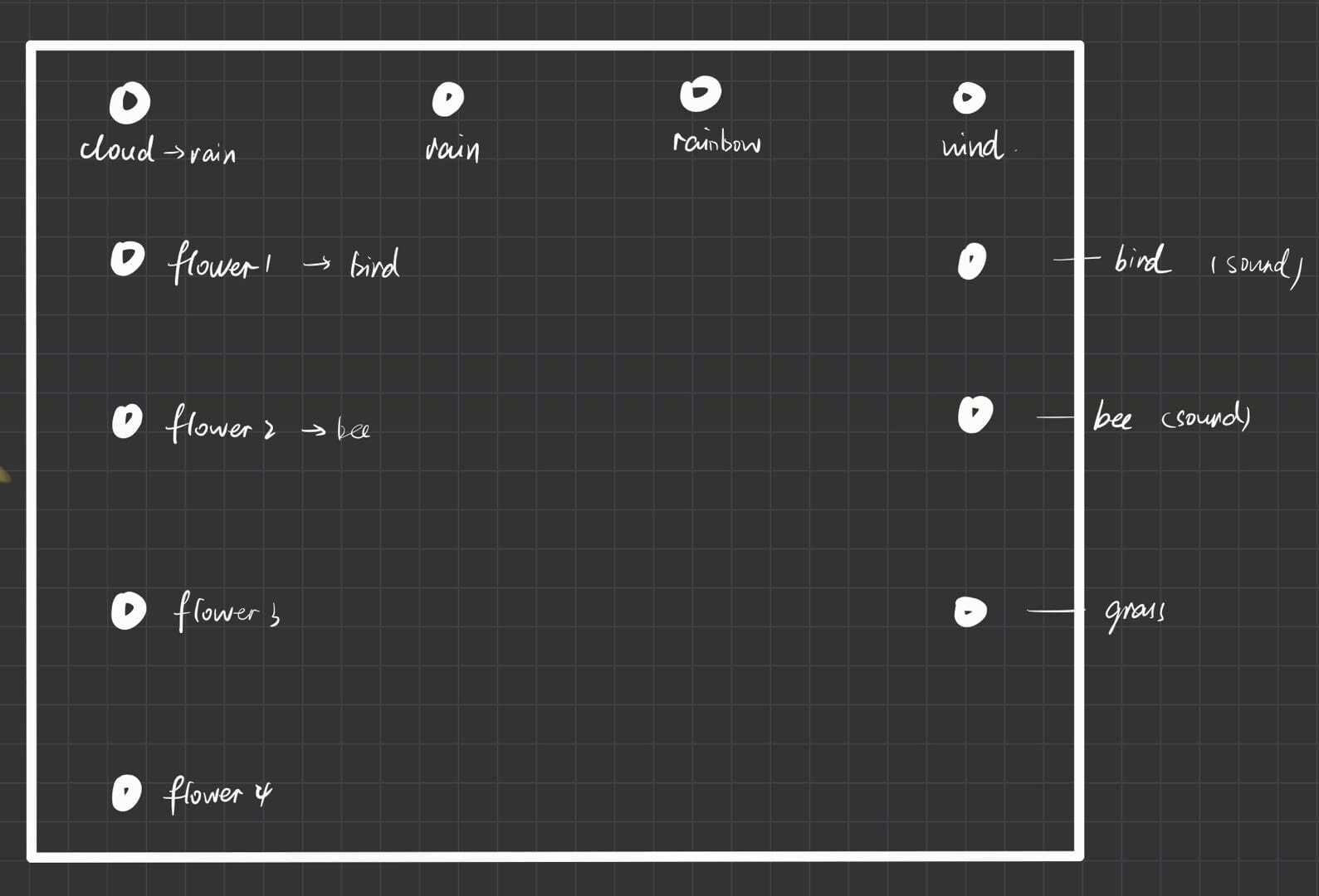

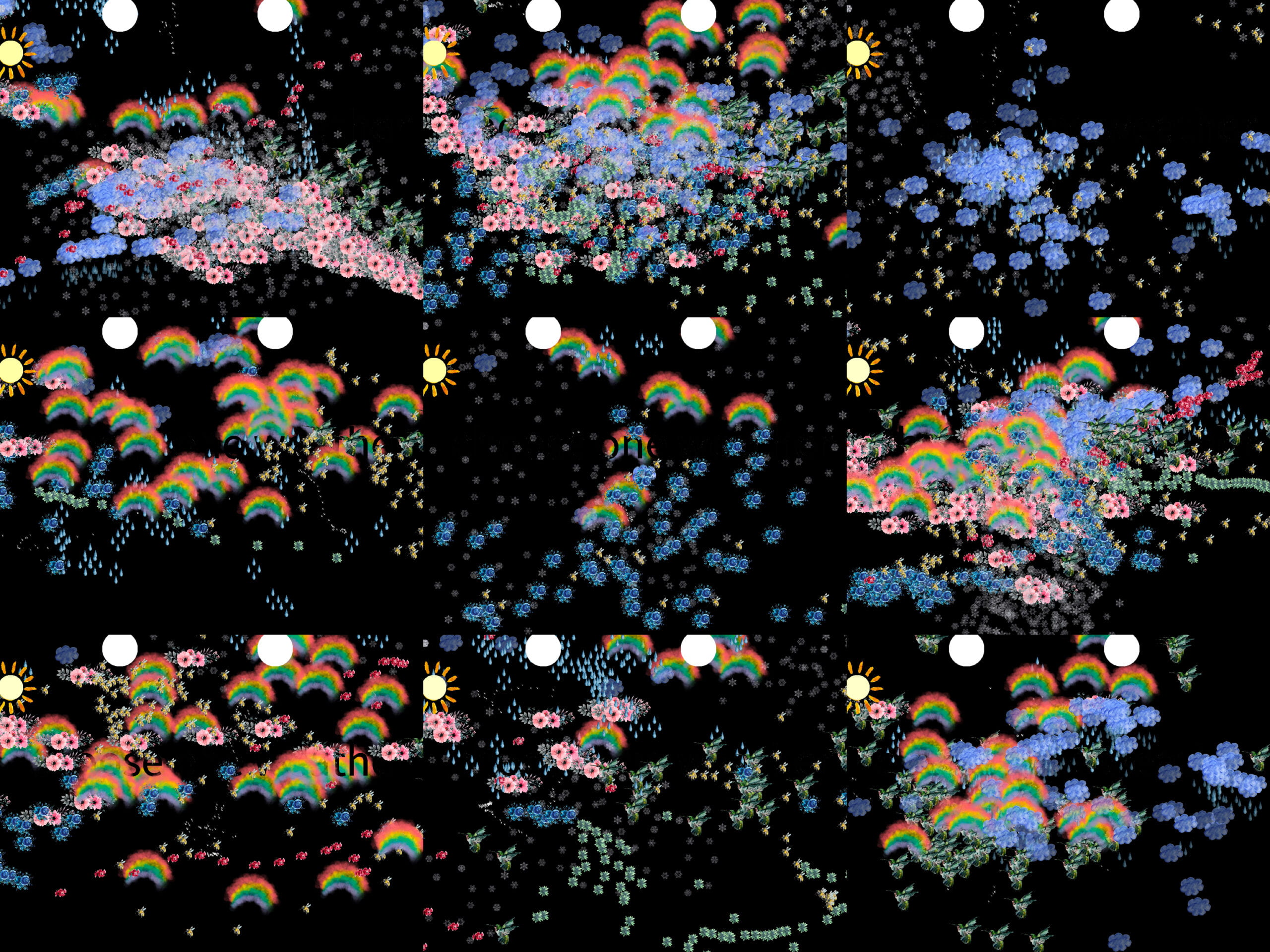

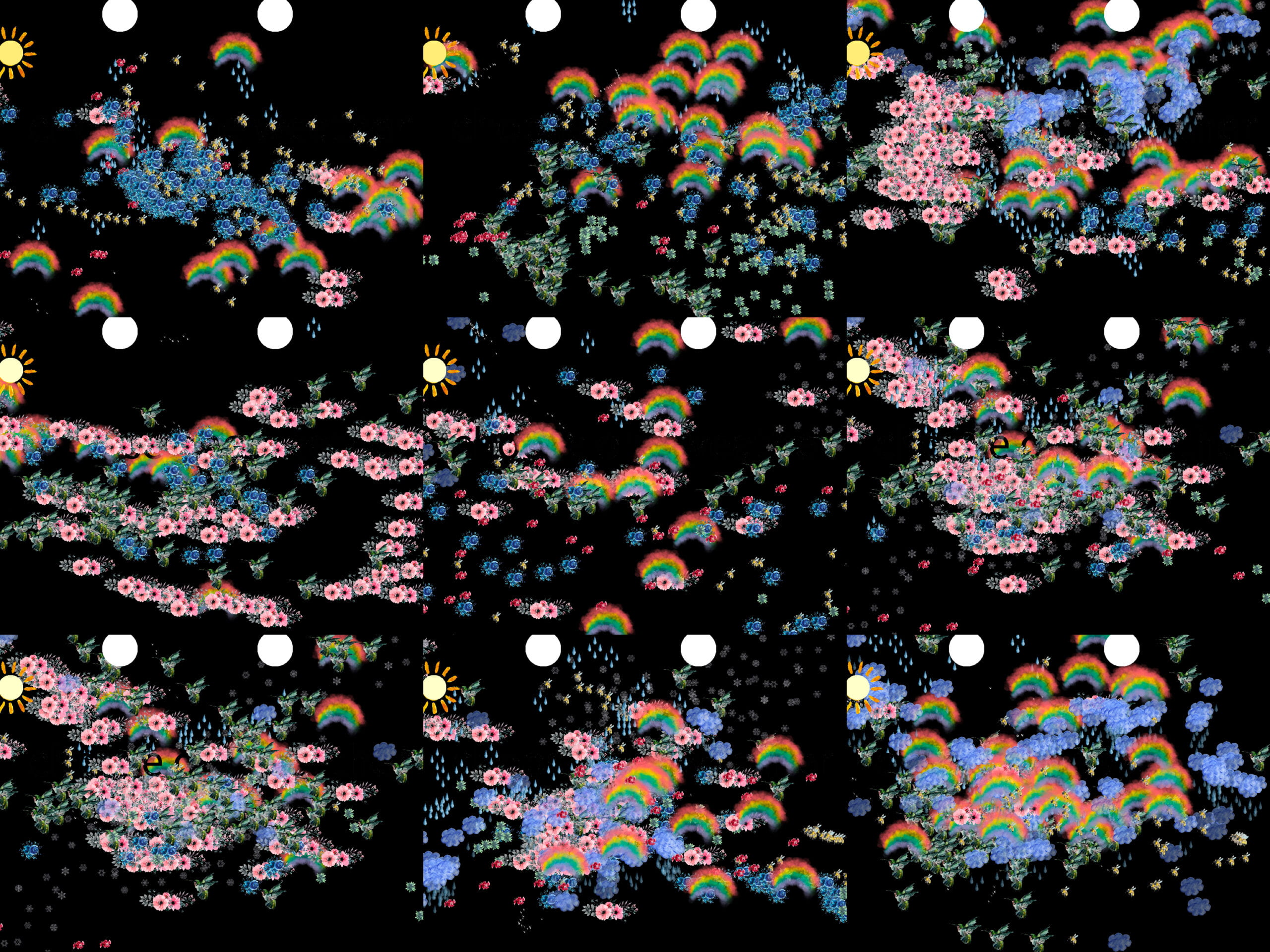

My new understanding of “interaction” strongly shaped my design of the project. First, the emphasis on “multiple senses” inspired me to add background music and sound effects that play when certain pen brushes are triggered, so the project integrates visual and auditory experience. I also think it would be better if scent could be included to help build a certain atmosphere (e.g., the scent could change according to the density of certain elements and the position of different images…). Second, about exploration: the project only gives hints about where users can go to explore what will appear on the canvas. While drawing a picture, users explore not only how to move their bodies to select a pen image but also what will be triggered and what effects will follow certain choices or movements. Third, and most important, is internal interaction. I got this idea from Ernest Edmonds’s Art, Interaction and Engagement during the preparatory research. As a result, I added triggering effects so that the elements interact with each other internally. For example, when the number of a certain flower reaches a preset number, birds and bees come out.

During the User Testing Session…

The original idea of our project was to use a clock as the canvas, let users draw on it, capture the image at regular intervals, and flash the captures back at the end of the whole process. However, during the user testing session, Rudi tried our project and pointed out that there wasn’t a strong relation between drawing flowers and the idea of cherishing time. We received similar comments from other users, so it was clearly an important problem. Reflecting on it, I found two likely reasons. First, we hadn’t finished fabricating the background clock by the user test and simply pointed the projector at a white wall, so users may have felt lost while drawing, not knowing what was happening or what would happen next. Second, the rotating and flashing effect didn’t turn out as we had imagined: it appeared too small and too fast, so without any explanation users could hardly tell that these pictures were the ones they had drawn.

To solve the two problems, I first thought of a scene for the drawing to start with. It was autumn at that time, and the leaves had begun to turn yellow. It is something of a tradition for my sister and me to pick up fallen leaves and turn them into bookmarks. When my sister sent me pictures of the bookmarks we made last year, it suddenly reminded me that it had been a long time since I last slowed down to explore and observe the nature around me. That is the main reason I arrived at the idea of making an immersive experience that emphasizes the relationship between humans and nature. Then, to make the scene more real and enrich the experience, I added more elements to the project. As for the second problem, since the physical background was no longer a clock, there seemed to be no need to make the images flash back and rotate to emphasize the passage of time. Also, an interactive project can be for either one or multiple users, so I simply got rid of the rotating part.

From my perspective, after the adaptation, the visual and experiential presentation of our project aligns better with, and even enriches, the message we would like to convey.

—FABRICATION AND PRODUCTION—

Coding…

For the coding part, I was responsible for all of the Processing code. The biggest challenge at first was tracking multiple objects at the same time. We watched Daniel Shiffman’s tutorial to set up blob detection. It worked, but the tracking was quite unstable and wouldn’t work if we wanted to track separate fingers.

vid. for the unstable blob:(((

So I switched back to color tracking and managed to make the images move with the hand movements.
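To illustrate how this kind of color tracking works, here is a minimal sketch in plain Java (Processing is Java-based). It averages the coordinates of every pixel whose color lies within a threshold of the tracked color, which is the same idea the project code uses. The class name `ColorTracker`, the method `track`, and the packed-RGB `int[]` input are assumptions made for this illustration, not part of the project.

```java
/** Sketch of centroid-based color tracking over a packed-RGB pixel array. */
class ColorTracker {
    /**
     * Returns {avgX, avgY} of all pixels whose Euclidean RGB distance to
     * `target` is below `threshold`, or null when no pixel matches.
     * Pixels are stored row by row, as in Processing's pixels[] array.
     */
    static float[] track(int[] pixels, int width, int height, int target, float threshold) {
        int tr = (target >> 16) & 0xFF;
        int tg = (target >> 8) & 0xFF;
        int tb = target & 0xFF;
        float sumX = 0, sumY = 0;
        int count = 0;
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int c = pixels[x + y * width];
                int dr = ((c >> 16) & 0xFF) - tr;
                int dg = ((c >> 8) & 0xFF) - tg;
                int db = (c & 0xFF) - tb;
                // Compare colors by their distance in RGB space.
                if (Math.sqrt(dr * dr + dg * dg + db * db) < threshold) {
                    sumX += x;
                    sumY += y;
                    count++;
                }
            }
        }
        if (count == 0) {
            return null; // nothing matched in this frame
        }
        return new float[] { sumX / count, sumY / count };
    }
}
```

Returning `null` for an empty frame lets the caller skip drawing. If the averages simply stay at zero instead, the pen would be placed at the top-left corner whenever the wand leaves the camera's view.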

After the user testing session, the biggest thing to figure out was how to choose the pen image, which was a bit complicated as we had 12 different images at first. For this part, Kaylee inspired me to use “state” variables to mark whether a certain option was triggered or not. Things became much clearer after that, but it also made the code a little too long and repetitive. I believe there might be a way to simplify it, but I couldn’t find one. (Anyway, it worked as I wished🥳. Love you Kayleeeee😘😘😘)

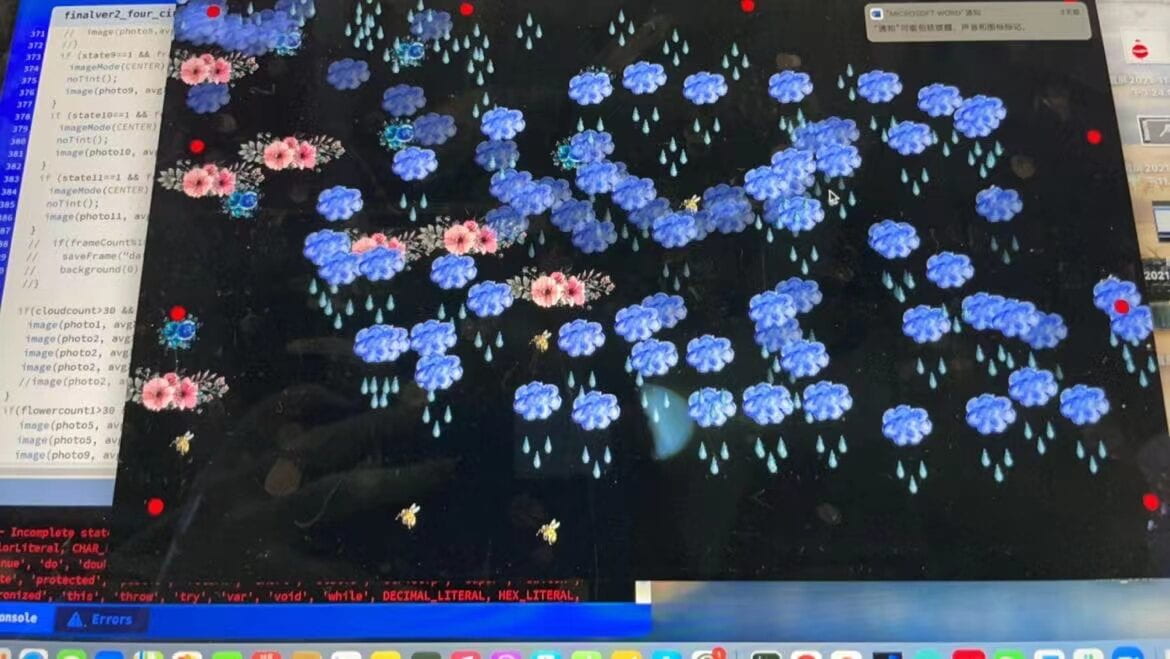
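One possible way to shorten the repetitive state code is to keep a single "active pen" number and a table of hit zones, instead of twelve separate state variables. The sketch below is plain Java and only an illustration: the class `PenSelector`, the method `select`, and the table layout are hypothetical, and the zone bounds are approximated from the coordinates in the project code (only six of the zones are listed).

```java
/** Sketch: pick the active pen from a table of rectangular hit zones. */
class PenSelector {
    static final float INF = Float.POSITIVE_INFINITY;

    // Each row: {pen id, xMin, xMax, yMin, yMax}; bounds are half-open [min, max).
    static final float[][] ZONES = {
        {1,   10,  100, -INF,  90},  // cloud
        {2,  410,  500, -INF,  90},  // raindrop
        {3,  850,  950, -INF,  90},  // rainbow
        {4, 1340,  INF, -INF,  90},  // snow
        {5, -INF,   80,  220, 320},  // flower 1
        {6, -INF,   80,  480, 580},  // flower 2
    };

    /**
     * Returns the pen whose zone contains (x, y), or the current pen
     * when the tracked point is outside every zone.
     */
    static int select(int currentPen, float x, float y) {
        for (float[] z : ZONES) {
            if (x >= z[1] && x < z[2] && y >= z[3] && y < z[4]) {
                return (int) z[0];
            }
        }
        return currentPen;
    }
}
```

With this layout, adding a pen image means adding one row to the table, and the drawing code can branch on one integer rather than checking each state in turn.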

The internal interaction is absolutely one of the most important parts of our project, but we ran into difficulties here. We were thinking of counting the time since a certain pen image was triggered and firing the effects after a set period, but it was hard to do that with frameCount alone. Cathy tried hard and sought help from some professionals, but the approach took some time to run, and when it was put into a loop, the whole sketch slowed down so much that it could not even support the color tracking. So we turned to Professor Eric for help, and he provided a super precise and useful solution! It was to keep a count for each image and add 1 to the count each time a trigger happened (the state turning to 1, in our case); once the count reaches a certain number, the follow-up effects are triggered.

(rain triggered successfully!!)

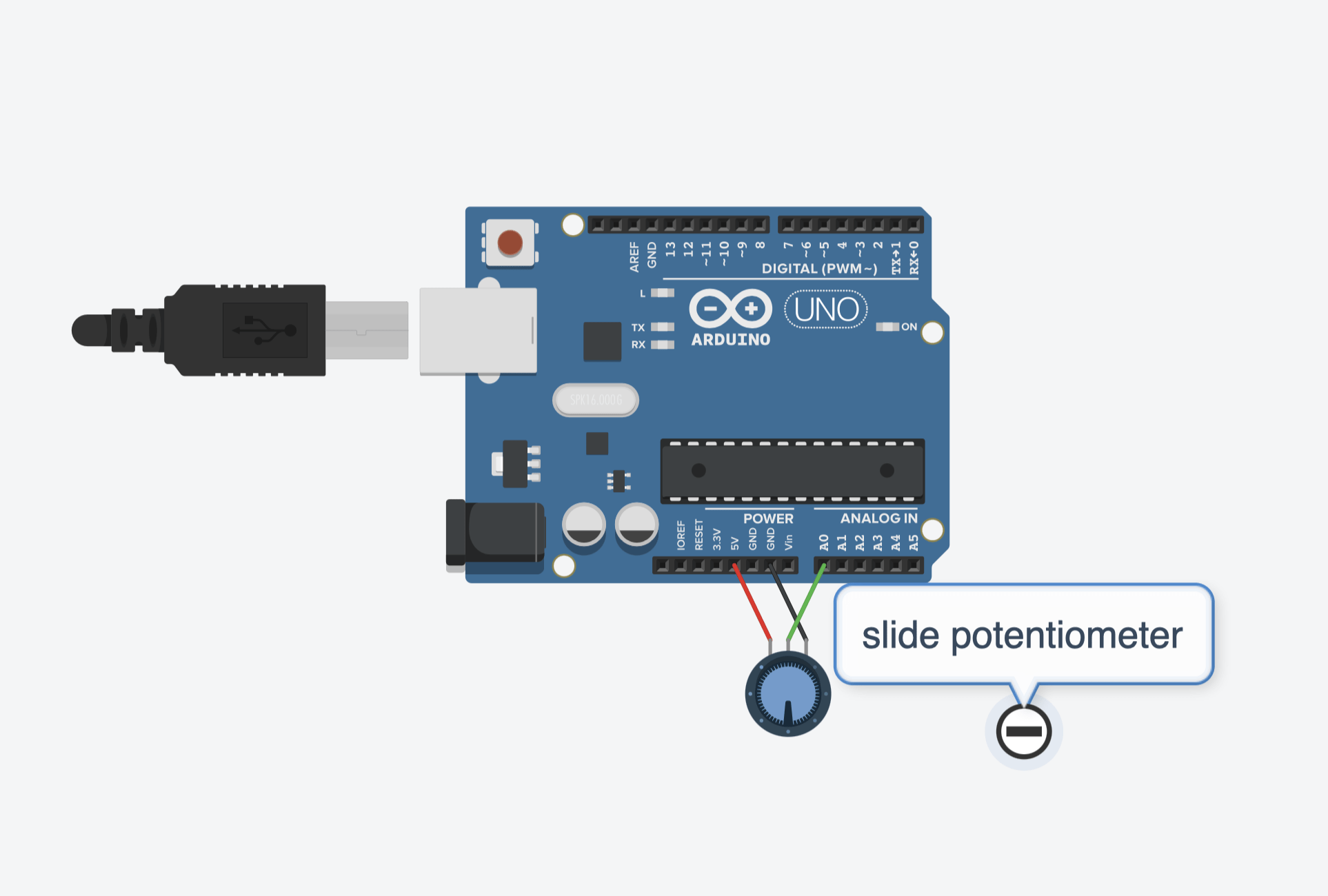
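The counting idea can be sketched in a few lines of plain Java. This is an illustration under assumptions, not the project code itself: the class `TriggerCounter` and its methods are hypothetical names, and the threshold of 30 mirrors the `cloudcount>30` check in the Processing sketch.

```java
/** Sketch: count how often a pen image is stamped and fire once a threshold is passed. */
class TriggerCounter {
    private final int threshold;
    private int count = 0;

    TriggerCounter(int threshold) {
        this.threshold = threshold;
    }

    /** Records one stamp of the pen image; returns true once the count exceeds the threshold. */
    boolean stamp() {
        count++;
        return count > threshold;
    }

    /** Number of stamps recorded so far. */
    int count() {
        return count;
    }
}
```

A counter like `new TriggerCounter(30)` for the cloud pen would return `false` for the first 30 stamps and `true` from the 31st on, at which point the rain can be drawn. Because it only increments an integer, it adds no noticeable cost to each frame.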

Cathy was mainly responsible for the Arduino code. This time we chose a slide potentiometer to change the color of elements in Processing. We wanted gradual variation rather than the sudden changes that buttons produce, and a slide potentiometer is easier for users to operate than a traditional potentiometer. Originally, we planned to use the slide potentiometer to change the color of the whole background so that users could choose the time of day in their drawing (e.g., orange for dawn, sky blue for noon…). However, since we were using a projector to present the drawing, changing the background from black to a more delicate color would make the drawn images hard to see, worsening the overall visual experience. So we decided to apply the color change only to the sun to preserve the visual effect.
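As a rough illustration of how the slider reading becomes the sun's color, the sketch below re-implements the mapping in plain Java. The offsets 240, 108, and 7 and the 0 to 200 range come from the Processing code; the class `SunColor`, its methods, and the explicit clamping to 0..255 are assumptions made for this example.

```java
/** Sketch: turn a 10-bit slider reading into an RGB color for the sun. */
class SunColor {
    /** Linear re-mapping, equivalent in spirit to Processing's map(). */
    static float map(float v, float inLo, float inHi, float outLo, float outHi) {
        return outLo + (outHi - outLo) * ((v - inLo) / (inHi - inLo));
    }

    /** Rounds and limits a channel value to the 0..255 range. */
    static int clamp(float v) {
        return Math.max(0, Math.min(255, Math.round(v)));
    }

    /** reading: analogRead value in 0..1023; returns {r, g, b}. */
    static int[] fromSlider(int reading) {
        float b = map(reading, 0, 1023, 0, 200);
        return new int[] { clamp(240 + b), clamp(108 + b), clamp(7 + b) };
    }
}
```

At the low end of the slider the sun is a deep orange (240, 108, 7), and pushing the slider up lightens it gradually toward a pale yellow, which gives the smooth change we wanted instead of a sudden switch.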

Fabrication…

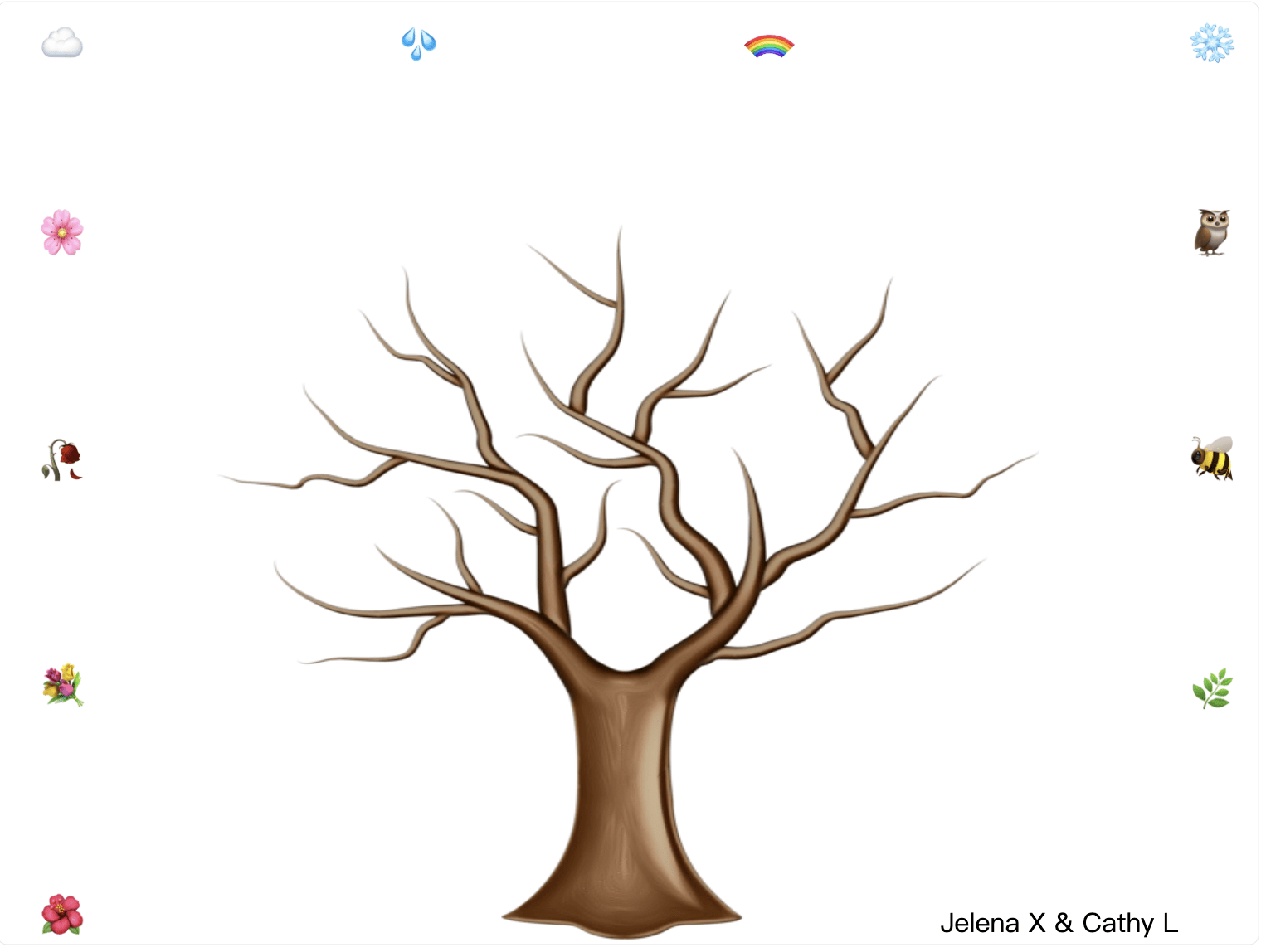

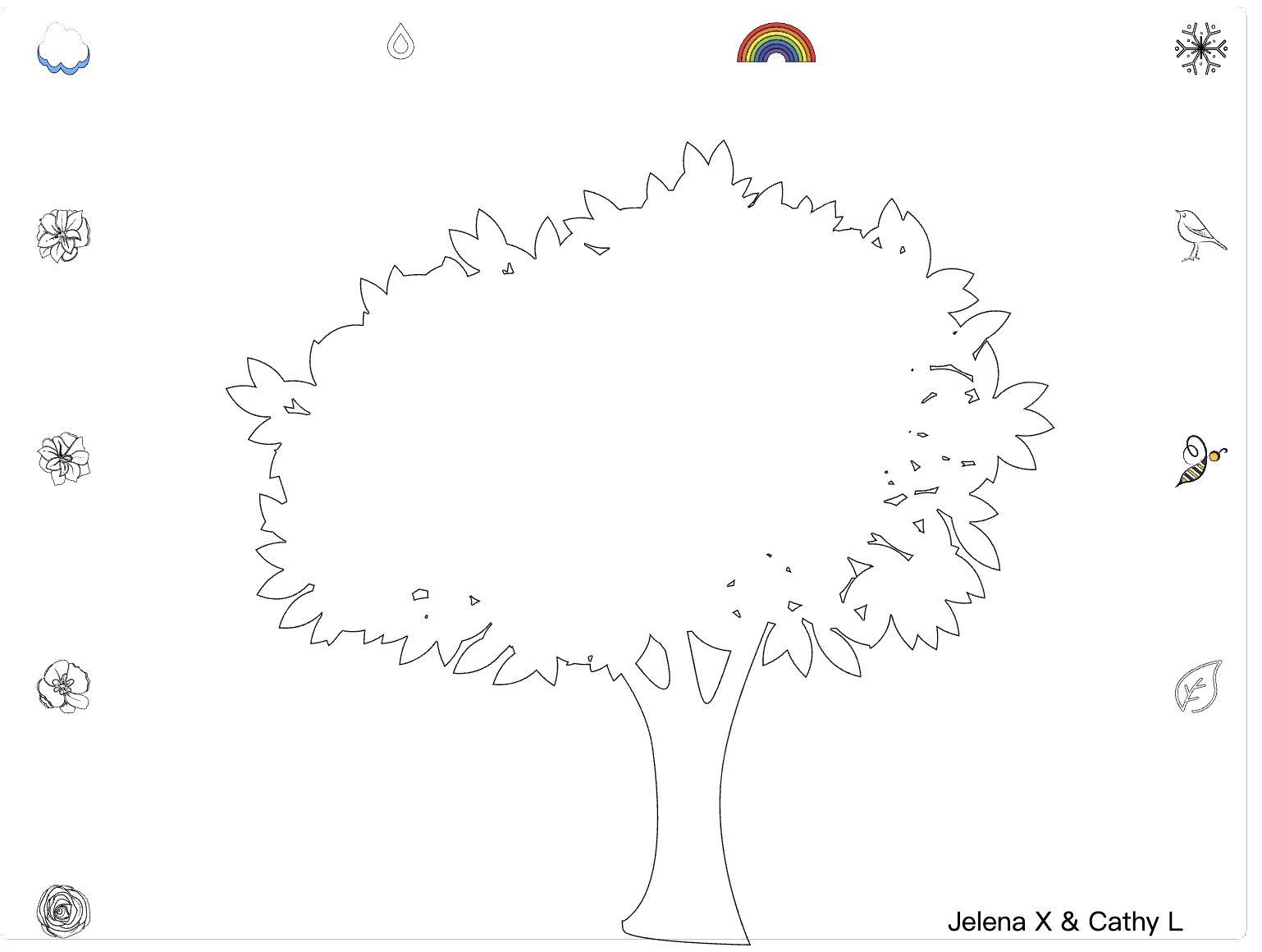

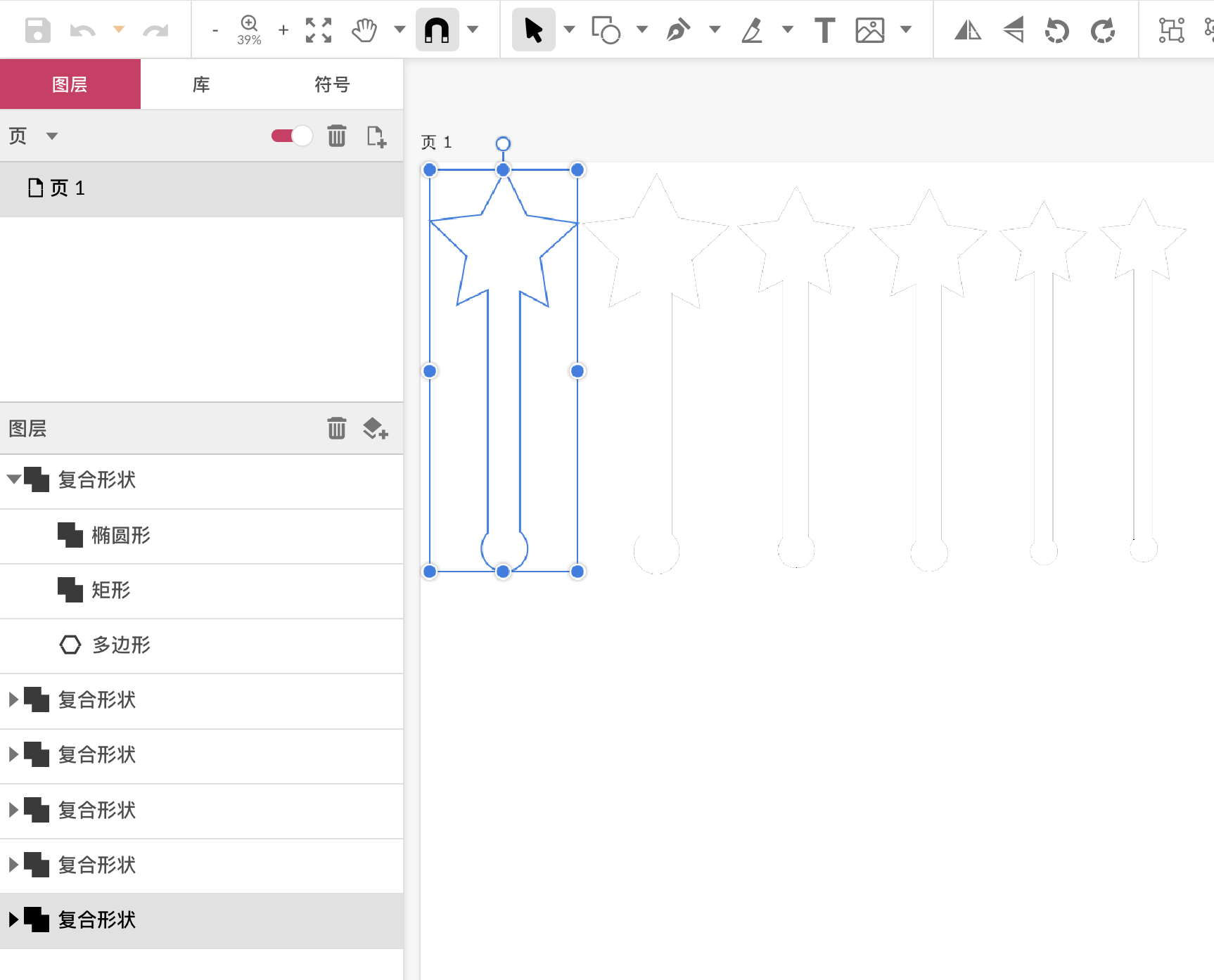

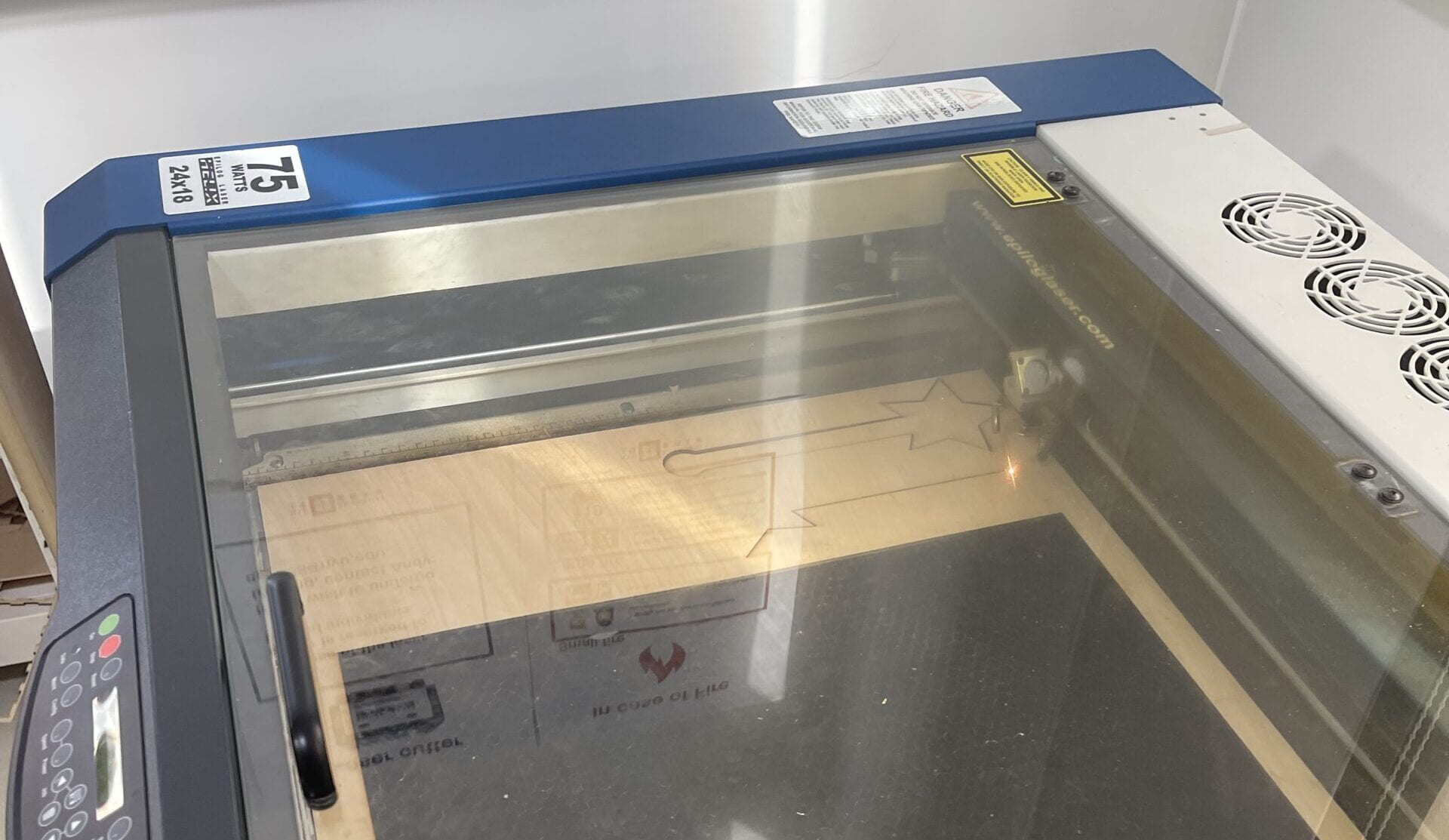

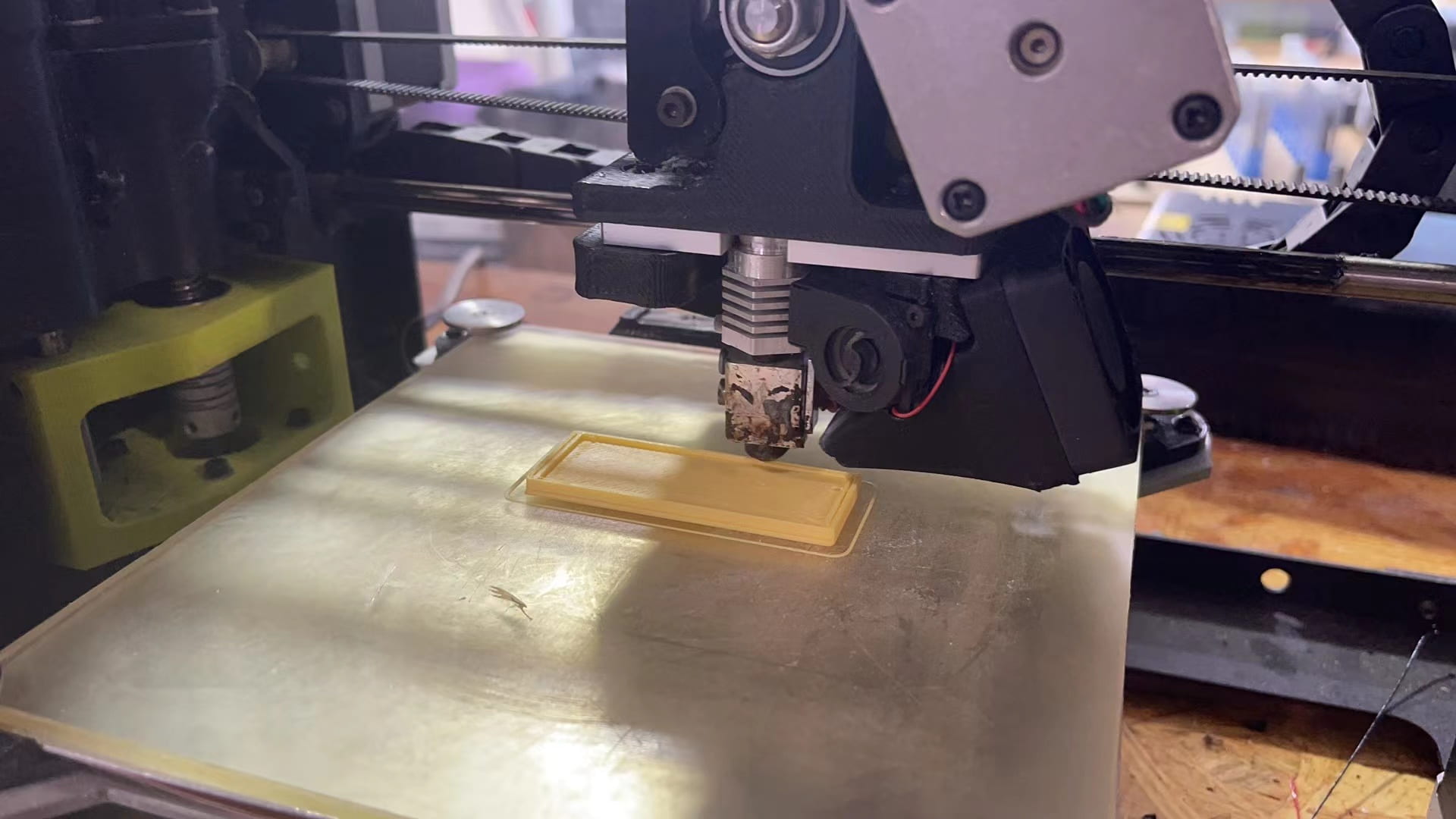

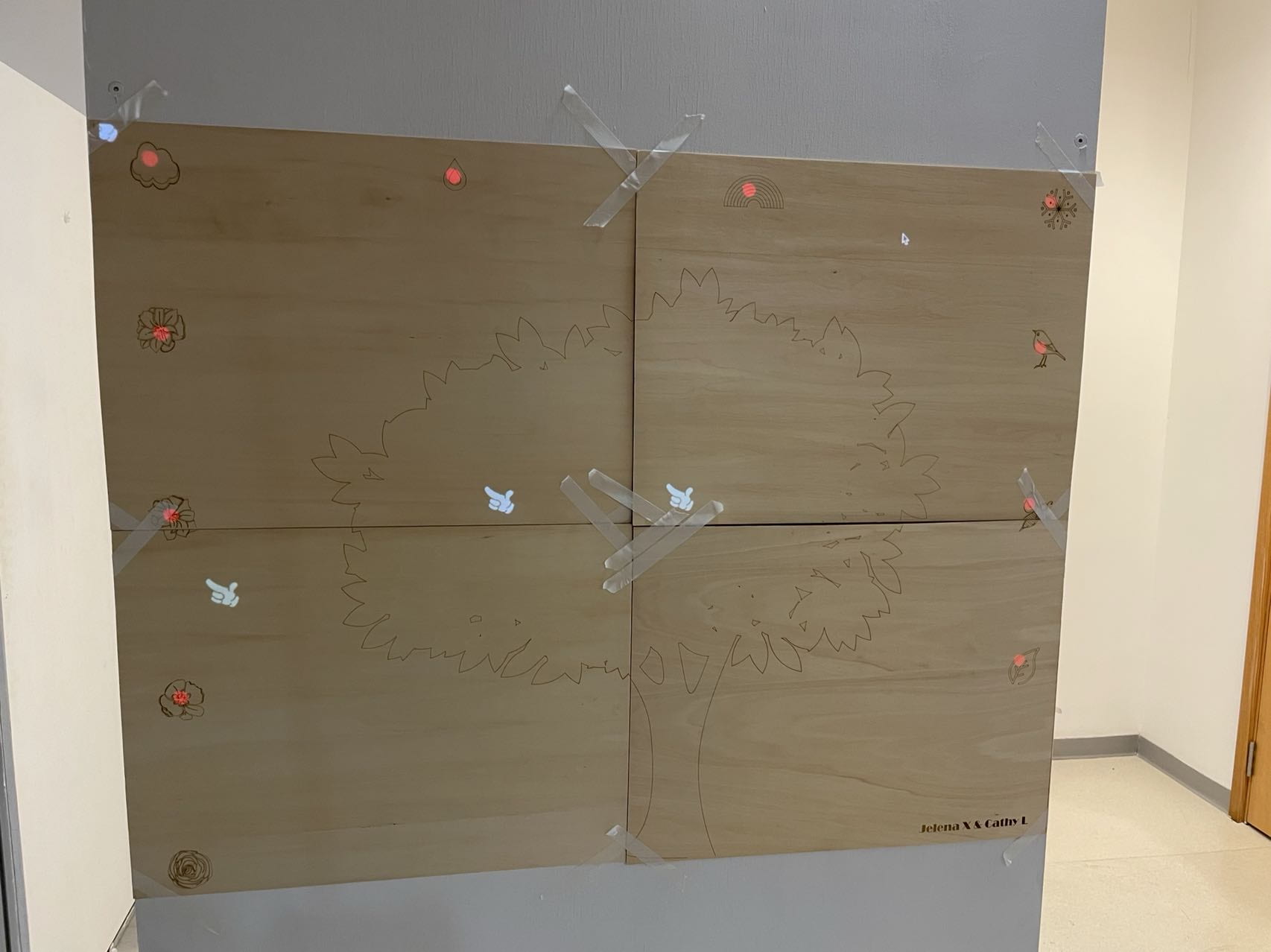

The most important part of our fabrication must be the huge laser-cut “canvas”. The first model I made included a lot of images, and since we wanted it to be super big, the size of four standard boards (48×36 inches in total), the whole cutting process would have taken as long as two hours. So Andy suggested that I convert all the images into vectors, so that the laser would no longer engrave each pixel but follow the vector paths instead. This way, we could simply adjust the cutting depth and greatly increase the speed.

👆The original version of design

👆The vector version of design

When designing the board, I forgot to take the range of the projector into consideration, so when I glued everything onto the huge board, the lower part couldn’t be projected on. So I decided to tailor the code to the board. It took quite a while to adjust all the dots to the right places.

—CONCLUSIONS—

Our project is meant to remind people of the relation between humans and nature as well as the relation between people. Through an immersive experience, we wanted to show that everything is linked to something else and also has links within itself. I think the project largely achieved this goal: after playing with it, most of the audience commented that they felt calm and relieved during the process.

My definition of interaction includes the following parts:

- “A performance which is engaging and where audience is the cast.”

In the project, everything appearing on the screen results from the user’s movement, which is to say the user is “forced” to stay fully engaged in the whole process to keep it running. The dark atmosphere and the background music are also there to provide an immersive experience and enhance engagement.

- “blend several of the five senses”

In the project, visual and auditory experiences are generated together.

- “user-project interaction and inner-project interaction should all exist”

In this case, user-project interaction happens through the color tracking and its transformation into a pen image, as well as through the slide potentiometer’s movement affecting the color of the sun. Inner-project interaction happens through all kinds of triggering: rain comes with sound; clouds accumulate to produce rain; flowers gather and draw birds and bees…

- “encourage exploration”

In our project, we provide users with ten different kinds of images to choose from, all located at the edges and corners of the board. So the audience has to explore more movements in order to trigger certain images.

- “highly customized”

Our project is definitely highly customized, as every user draws according to their own mood and chooses the pen images on their own.

If there were more time, I’d like to integrate the laser-cut board with a digital display screen while keeping the frame with the image hints on it. Also, the color-tracking system relied heavily on external conditions such as the lighting and the color of the clothes the user is wearing. So, with more time, replacing color tracking with body-movement tracking might work better and would also make the scale of the project larger. Moreover, even though the blob detection didn’t work well before, I think there’s a way to optimize it and make it more stable, so that the project can support multiple users at a time.

What I really want to call for through this project is slowing down a bit during the endless rush of life. Slow down, look back, and observe the people and scenery around you, and it will always give you some new insight. Nowadays, it is also a severe problem that people are alienating themselves from nature and from each other. I am glad to see more people becoming aware of it, and hopefully people can feel the strength of such relations and collaboration through our project.

The biggest takeaway I got from this project is that when designing an art project, it is always important to think through the relationship between each link, and to make sure that every setting and design choice makes sense to a “brand new” user, so that they can easily figure out what is going on and get an idea of what to try and explore next. If we had thought about this earlier, we wouldn’t have left the logical gap unresolved until user testing.

In conclusion, I’ve learned a lot from the project. Being responsible for the Processing part gave me a great chance to advance my coding skills. After the final project, I found myself more comfortable writing code on my own according to my needs rather than merely understanding and editing examples (which I did a lot during the midterm). I can also feel that my understanding of interaction kept deepening throughout the semester. Finally, through the final project, I think I touched part of the essence of artistic creation. The visual part is absolutely important, but what really injects spirit into a project is the concept and idea of the designer, which reflect their perspective on society and their concern for certain groups of people and social problems.

CODE(Processing):

// IMA NYU Shanghai
// Interaction Lab
//This example is to send multiple values from Processing to Arduino and Arduino to Processing.

import processing.serial.*;
import processing.video.*;

// Variable for capture device
Capture video;

int NUM_OF_VALUES_FROM_PROCESSING = 2; /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/
int NUM_OF_VALUES_FROM_ARDUINO = 1; /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/

Serial myPort;
String myString;

int processing_values[]; /** this array stores values you might want to send to Arduino **/
int sensorValues[]; /** this array stores values from Arduino **/

float a,b,c;

// A variable for the color we are searching for.
color trackColor;
float threshold;

PImage background1;
PImage photo0;
PImage photo1;
PImage photo2;
PImage photo3;
PImage photo4;
PImage photo5;
PImage photo6;
PImage photo7;
PImage photo8;
PImage photo9;
PImage photo10;
PImage photo11;
PImage photo12;

int state0 = 1;
int state1 = 0;
int state2 = 0;
int state3 = 0;
int state4 = 0;
int state5 = 0;
int state6 = 0;
int state7 = 0;
int state8 = 0;
int state9 = 0;
int state10= 0;
int state11= 0;

int cloudcount=0;
int raindropcount=0;
int flowercount1=0;
int flowercount2=0;
int flowercount3=0;

int num = 30;
float mx[] = new float[num];
float my[] = new float[num];

void setup() {
  fullScreen();
  setupSerial();
  background(0);
  //image(background1, 0, 0, 1440, 840);
  //size(640, 480);
  //video = new Capture(this, width, height);
  String[] cameras = Capture.list();
  video = new Capture(this, cameras[1]);
  video.start();
  // Start off tracking for red
  trackColor = color(255, 255, 255);
  background1= loadImage("background.png");
  photo0= loadImage("photo0.png");
  photo1= loadImage("cloud1.png");
  photo2= loadImage("raindrop.png");
  photo3= loadImage("rainbow1.png");
  photo4= loadImage("snow.png");
  photo5= loadImage("flower1.png");
  photo6= loadImage("flower2.png");
  photo7= loadImage("flower4.png");
  photo8= loadImage("flower3.png");
  photo9= loadImage("bird.png");
  photo10=loadImage("bee.png");
  photo11=loadImage("grass1.png");
  photo12=loadImage("sun.png");
  image(background1, 0, 0, 1440, 900);
}

void captureEvent(Capture video) {
  // Read image from the camera
  video.read();
}

void draw() {
  getSerialData();
  a = map(sensorValues[0], 0, 1023, 100, 200);
  b= map(sensorValues[0], 0, 1023, 0, 200);
  println(a);
  tint(a);
  //color c=color(#F7C10C);
  imageMode(CENTER);
  image(photo12,150,150,150,150);
  fill(b+240,b+108,b+7);
  noStroke();
  circle(150,150,70);
  video.loadPixels();
  imageMode(CORNER);
  //image(video, 0, 0);
  //background(0);

  if (frameCount<1000){
    fill(255, 255, 255);
    circle(60, 40, 100);//cloud1
    circle(460, 40, 100);//raindrop
    circle(900, 40, 100);//rainbow1
    circle(1400, 40, 100);//snow
    if (frameCount<200){
      textSize(128);
      fill(255);
      text("choose one weather",200,400);
    }else{
      textSize(128);
      fill(0);
      text("choose one weather",200,400);
    }
  }
  if (frameCount>1000 && frameCount<2000){
    fill(255,255,255);
    circle(60, 270, 100);//flower1
    circle(60, 530, 100);//flower2
    circle(60, 800, 100);//flower3
    //circle(60,870,20);//flower4
    fill(0, 0, 0);
    circle(60, 40, 100);//cloud1
    circle(460, 40, 100);//raindrop
    circle(900, 40, 100);//rainbow1
    circle(1400, 40, 100);//snow
  }
  if (frameCount>2000 && frameCount<3000){
    fill(255,255,255);
    circle(1400, 270, 100);//bird
    circle(1400, 530, 100);//bee
    circle(1400, 800, 100);//grass1
    fill(0, 0, 0);
    circle(60, 40, 100);//cloud1
    circle(460, 40, 100);//raindrop
    circle(900, 40, 100);//rainbow1
    circle(1400, 40, 100);//snow
    circle(60, 270, 100);//flower1
    circle(60, 530, 100);//flower2
    circle(60, 800, 100);//flower3
  }
  if(frameCount>3000){
    fill(255,255,255);
    circle(60, 40, 100);//cloud1
    circle(460, 40, 100);//raindrop
    circle(900, 40, 100);//rainbow1
    circle(1400, 40, 100);//snow
    circle(60, 270, 100);//flower1
    circle(60, 530, 100);//flower2
    circle(60, 800, 100);//flower3
    circle(1400, 270, 100);//bird
    circle(1400, 530, 100);//bee
    circle(1400, 800, 100);//grass1
  }

  threshold = 30;
  //threshold = map(mouseX, 0, width, 0, 200);

  // begin the x and y for region accumulation at 0
  float avgX = 0;
  float avgY = 0;
  int count = 0;

  // Begin loop to walk through every pixel
  for (int y = 0; y < video.height; y ++ ) {
    for (int x = 0; x < video.width; x ++ ) {
      int loc = x + y*video.width;
      // What is current color
      color currentColor = video.pixels[loc];
      float r1 = red(currentColor);
      float g1 = green(currentColor);
      float b1 = blue(currentColor);
      float r2 = red(trackColor);
      float g2 = green(trackColor);
      float b2 = blue(trackColor);

      // Using euclidean distance to compare colors
      float d = dist(r1, g1, b1, r2, g2, b2);

      // If current color is within threshold then add the x coordinate and increment the count of found pixels
      if (d < threshold) {
        avgX += x;
        avgY += y;
        count++;
      }
    }
  }

  // if any points were found with similar color, average the coordinates to try to find center of region
  if (count > 0 ) {
    avgX = avgX/count;
    avgY = avgY/count;
    avgX= video.width-avgX;
    avgX=map(avgX, 0, video.width, 0, width);
    avgY=map(avgY, 0, video.height, 0, height);
    // Draw a circle at the tracked center
    //fill(trackColor);
    //strokeWeight(4.0);
    //stroke(0);
    //ellipse(avgX, avgY, 16, 16);
    //image(photo0,avgX,avgY,100,100);
  }

  // weather_upper
  if (avgX>=10 && avgX<100 && avgY<90 ) {
    state0 = 0; state1 = 1; state2 = 0; state3 = 0; state4 = 0; state5 = 0;
    state6 = 0; state7 = 0; state8 = 0; state9 = 0; state10 = 0; state11 = 0;
  }
  if (avgX>=410 && avgX<500 && avgY<90) {
    state0 = 0; state1 = 0; state2 = 1; state3 = 0; state4 = 0; state5 = 0;
    state6 = 0; state7 = 0; state8 = 0; state9 = 0; state10 = 0; state11 = 0;
  }
  if (avgX>=850 && avgX<950 && avgY<90) {
    state0 = 0; state1 = 0; state2 = 0; state3 = 1; state4 = 0; state5 = 0;
    state6 = 0; state7 = 0; state8 = 0; state9 = 0; state10 = 0; state11 = 0;
  }
  if (avgX>=1340 && avgY<90) {
    state0 = 0; state1 = 0; state2 = 0; state3 = 0; state4 = 1; state5 = 0;
    state6 = 0; state7 = 0; state8 = 0; state9 = 0; state10 = 0; state11 = 0;
  }

  //flowers_left vertical
  if (avgY>=220 && avgY<320 && avgX<80) {
    state0 = 0; state1 = 0; state2 = 0; state3 = 0; state4 = 0; state5 = 1;
    state6 = 0; state7 = 0; state8 = 0; state9 = 0; state10 = 0; state11 = 0;
  }
  if (avgY>=480 && avgY<580 && avgX<80) {
    state0 = 0; state1 = 0; state2 = 0; state3 = 0; state4 = 0; state5 = 0;
    state6 = 1; state7 = 0; state8 = 0; state9 = 0; state10 = 0; state11 = 0;
  }
  if (avgY>=750 && avgY<850 && avgX<80) {
    state0 = 0; state1 = 0; state2 = 0; state3 = 0; state4 = 0; state5 = 0;
    state6 = 0; state7 = 1; state8 = 0; state9 = 0; state10 = 0; state11 = 0;
  }
  //if(avgY>=770 && avgY<870 && avgX<70){
  //  state0 = 0; state1 = 0; state2 = 0; state3 = 0; state4 = 0; state5 = 0;
  //  state6 = 0; state7 = 0; state8 = 1; state9 = 0; state10 = 0; state11 = 0;
  //}

  // right_vertical
  if (avgY>=220 && avgY<320 && avgX>1350) {
    state0 = 0; state1 = 0; state2 = 0; state3 = 0; state4 = 0; state5 = 0;
    state6 = 0; state7 = 0; state8 = 0; state9 = 1; state10 = 0; state11 = 0;
  }
  if (avgY>=480 && avgY<580 && avgX>1350) {
    state0 = 0; state1 = 0; state2 = 0; state3 = 0; state4 = 0; state5 = 0;
    state6 = 0; state7 = 0; state8 = 0; state9 = 0; state10= 1; state11= 0;
  }
  if (avgY>=750 && avgY<850 && avgX>1350) {
    state0 = 0; state1 = 0; state2 = 0; state3 = 0; state4 = 0; state5 = 0;
    state6 = 0; state7 = 0; state8 = 0; state9 = 0; state10= 0; state11= 1;
  }

  if (state0 == 1 ) {
    // int which = frameCount % num;
    // mx[which] =avgX;
    // my[which] = avgY;
    // for (int i = 0; i < num; i++) {
    // // which+1 is the smallest (the oldest in the array)
    // int index = (which+1 + i) % num;
    imageMode(CENTER);
    tint(255, 127);
    image(photo0,avgX,avgY,4.7,6);
    //image(photo0, mx[index], my[index], 47, 46);
    // }
  }
  if (state1 == 1 && frameCount%10==0) {
    imageMode(CENTER);
    tint(255, 127);
    image(photo1, avgX, avgY, 67, 54);
    cloudcount++;
  }
  if (state2==1 && frameCount%10==0) {
    imageMode(CENTER);
    noTint();
    image(photo2, avgX, avgY-20, 9.5, 23);
    image(photo2, avgX-15, avgY, 9.5, 23);
    image(photo2, avgX+15, avgY, 9.5, 23);
    image(photo2, avgX, avgY+20, 9.5, 23);
    raindropcount++;
  }
  if (state3==1 && frameCount%50==0) {
    imageMode(CENTER);
    noTint();
    image(photo3, avgX, avgY, 200, 200);
  }
  if (state4==1 && frameCount%30==0) {
    imageMode(CENTER);
    tint(255, 127);
    image(photo4, avgX, avgY, 318, 258);
  }
  if (state5==1 && frameCount%15==0) {
    imageMode(CENTER);
    noTint();
    image(photo5, avgX, avgY, 160, 76);
    flowercount1++;
  }
  if (state6==1 && frameCount%15==0) {
    imageMode(CENTER);
    noTint();
    image(photo6, avgX, avgY, 69, 58);
    flowercount2++;
  }
  if (state7==1 && frameCount%15==0) {
    imageMode(CENTER);
    noTint();
    image(photo7, avgX, avgY, 49, 70);
    flowercount3++;
  }
  //if(state8==1 && frameCount%10==0){
  //  imageMode(CENTER);
  //  noTint();
  //  image(photo8,avgX,avgY,100,100);
  //}
  if (state9==1 && frameCount%10==0) {
    imageMode(CENTER);
    noTint();
    image(photo9, avgX, avgY, 120, 90);
  }
  if (state10==1 && frameCount%10==0) {
    imageMode(CENTER);
    noTint();
    image(photo10, avgX, avgY, 44, 58);
  }
  if (state11==1 && frameCount%10==0) {
    imageMode(CENTER);
    noTint();
    image(photo11, avgX, avgY, 65, 94);
  }

  // if(frameCount%10000==0){
  //   saveFrame("data/screenshot1");
  //   background(0);
  //}

  if(cloudcount>30 && frameCount%10==0 && state1==1){
    image(photo1, avgX, avgY, 67, 54);
    image(photo2, avgX, avgY+80, 9.5, 23);
    image(photo2, avgX-15, avgY+60, 9.5, 23);
    image(photo2, avgX+15, avgY+60, 9.5, 23);
    //image(photo2, avgX, avgY, 9.5, 23);
  }
  if(flowercount1>30 && frameCount%20==0 && state5==1){
    image(photo5, avgX, avgY, 160, 76);
    image(photo9, avgX+100, avgY-100, 120, 90);
  }
  if(flowercount2>30 && frameCount%20==0 && state6==1){
    image(photo6, avgX, avgY, 69, 58);
    image(photo10, avgX+40, avgY-40, 44, 58);
  }
  if(raindropcount>30 && frameCount%20==0 && state3==1){
    image(photo3, avgX, avgY, 160, 76);
    image(photo9, avgX+100, avgY-100, 120, 90);
  }

  //void mouseClicked() {
  //  // Save color where the mouse is clicked in trackColor variable
  //  int loc = mouseX + mouseY*video.width;
  //  trackColor = video.pixels[loc];
  //}

  //receive the values from Arduino
  //getSerialData();

  //use the values from arduino
  //this is an example
  /*
  //sensorValues[1] are the values from the button
  if (sensorValues[1] == 1) {
    fill(random(255), random(255), random(255));
  }
  //sensorValues[0] are the values from the potentiometer
  float r = map(sensorValues[0], 0, 1023, 0, width);
  circle(width/2, height/2, r);

  // give values to the variables you want to send here
  //change the code according to your project
  if (mousePressed) {
    processing_values[0] = 1;
  } else {
    processing_values[0] = 0;
  }
  processing_values[1] = int(map(mouseX, 0, width, 0, 255));
  */
  //end of example

  // send the values to Arduino.
  sendSerialData();
}

void setupSerial() {
  printArray(Serial.list());
  myPort = new Serial(this, Serial.list()[2], 9600);
  // WARNING!
  // You will definitely get an error here.
  // Change the PORT_INDEX to 0 and try running it again.
  // And then, check the list of the ports,
  // find the port "/dev/cu.usbmodem----" or "/dev/tty.usbmodem----"
  // and replace PORT_INDEX above with the index number of the port.

  myPort.clear();
  // Throw out the first reading,
  // in case we started reading in the middle of a string from the sender.
  myString = myPort.readStringUntil( 10 ); // 10 = '\n' Linefeed in ASCII
  myString = null;

  processing_values = new int[NUM_OF_VALUES_FROM_PROCESSING];
  sensorValues = new int[NUM_OF_VALUES_FROM_ARDUINO];
}

void getSerialData() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil( 10 ); // 10 = '\n' Linefeed in ASCII
    if (myString != null) {
      print("from arduino: "+ myString);
      String[] serialInArray = split(trim(myString), ",");
      if (serialInArray.length == NUM_OF_VALUES_FROM_ARDUINO) {
        for (int i=0; i<serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}

void sendSerialData() {
  String data = "";
  for (int i=0; i<processing_values.length; i++) {
    data += processing_values[i];
    //if i is less than the index number of the last element in the values array
    if (i < processing_values.length-1) {
      data += ","; // add splitter character "," between each values element
    }
    //if it is the last element in the values array
    else {
      data += "\n"; // add the end of data character "n"
    }
  }
  //write to Arduino
  myPort.write(data);
  print("to arduino: "+ data); // this prints to the console the values going to arduino
}

CODE(Arduino):

// IMA NYU Shanghai
// Interaction Lab
//This example is to send multiple values from Processing to Arduino and Arduino to Processing.

// Set to 2 so the array below can hold both values the Processing sketch sends per message.
#define NUM_OF_VALUES_FROM_PROCESSING 2 /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/
#define PIN_SLIDE_POT_A A0

/** DO NOT REMOVE THESE **/
int tempValue = 0;
int valueIndex = 0;

/* This is the array of values storing the data from Processing. */
int processing_values[NUM_OF_VALUES_FROM_PROCESSING];

void setup() {
  Serial.begin(9600);
  pinMode(PIN_SLIDE_POT_A, INPUT );
  // this block of code is an example of an LED, a DC motor, and a button
  /*
  pinMode(13, OUTPUT);
  pinMode(9, OUTPUT);
  pinMode(2, INPUT);
  */
  //end of example
}

void loop() {
  // to receive a value from Processing
  getSerialData();

  int value_slide_pot_a = analogRead(PIN_SLIDE_POT_A);
  // Serial.print("Slide Pot value: ");
  Serial.print(value_slide_pot_a);
  Serial.println();

  // add your code here
  // use elements in the values array
  //and print values to send to Processing

  // this is an example:
  /*
  //example of using received values and turning on an LED
  if (processing_values[0] == 1) {
    digitalWrite(13, HIGH);
  } else {
    digitalWrite(13, LOW);
  }
  analogWrite(9, processing_values[1]);

  // too fast communication might cause some latency in Processing
  // this delay resolves the issue.
  delay(10);
  //end of example of using received values

  //example of sending the values to Processing
  int sensor1 = analogRead(A0); // a potentiometer
  int sensor2 = digitalRead(2); // the button

  // send the values keeping this format
  Serial.print(sensor1);
  Serial.print(","); // put comma between sensor values
  Serial.print(sensor2);
  Serial.println(); // add linefeed after sending the last sensor value
  // end of example sending values
  */
  // end of example
}

//receive serial data from Processing
void getSerialData() {
  while (Serial.available()) {
    char c = Serial.read();
    //switch - case checks the value of the variable in the switch function
    //in this case, the char c, then runs one of the cases that fit the value of the variable
    //for more information, visit the reference page: https://www.arduino.cc/en/Reference/SwitchCase
    switch (c) {
      //if the char c from Processing is a number between 0 and 9
      case '0'...'9':
        //save the value of char c to tempValue
        //but simultaneously rearrange the existing values saved in tempValue
        //for the digits received through char c to remain coherent
        //if this does not make sense and would like to know more, send an email to me!
        tempValue = tempValue * 10 + c - '0';
        break;
      //if the char c from Processing is a comma
      //indicating that the following values of char c is for the next element in the values array
      case ',':
        processing_values[valueIndex] = tempValue;
        //reset tempValue value
        tempValue = 0;
        //increment valuesIndex by 1
        valueIndex++;
        break;
      //if the char c from Processing is character 'n'
      //which signals that it is the end of data
      case '\n':
        //save the tempValue
        //this will b the last element in the values array
        processing_values[valueIndex] = tempValue;
        //reset tempValue and valueIndex values
        //to clear out the values array for the next round of readings from Processing
        tempValue = 0;
        valueIndex = 0;
        break;
    }
  }
}

Pictures & Videos:

Works collection from IMA Fall Show:

—References—

Image sources: https://clipart.info/watercolorpngclipart

Sound sources: https://freesound.org/people/jmiddlesworth/sounds/364663/

https://freesound.org/people/taylordonj/sounds/521364/

https://freesound.org/people/InspectorJ/sounds/401277/

https://prosearch.tribeofnoise.com/artists/show/29656

Special Thanks To…

Professor Eric Parren: You are so nice and erudite. I was always shocked when you solved, within minutes, the problems that had bothered me the whole night, and I’ve really learned a lot from those moments. Your unique humor made the course even more interesting and attractive. Thank you so much for teaching me so many interesting and useful skills and so much knowledge. Thank you for giving me a wonderful first-semester experience in IMA!

Professor Andy Garcia: You helped a lot with each and every project I’ve made. From the huge heart for the midterm to the perfume bottle for the DIC project and the great canvas for the final, everything was so wonderful!

All the great fellows and LAs: Winny, Skye, Candy, Pato, Sylvia… I always came to you with tons of strange questions and you always helped me with great patience. That really helped a lot!!

Cathy Luo: My great partner from midterm to final. I still remember all the late nights we spent together fixing and working on our project. That was a wonderful experience!

Kaylee Xu: We haven’t been partners even once, but we seem to have each been an important member of the other’s project. It’s soooo great to have you all the time!

————————Great Memories @ Ixlab————————