A. LIKE the post? – Jiasheng Li (Jason) – Andy Garcia

(Presentation)

B. CONCEPTION AND DESIGN

This project is an installation that physicalizes the act of posting filtered photos on social media and the diverse motives behind the LIKEs those posts receive, beyond appreciation of their content. It explores the multifaceted nature of identity representation and interaction in the digital realm. I define interaction as “a reciprocal input and output cycle between the users (human) and the system (non-human), which could still linger on in users’ minds after the immediate resonance”. This project aims to establish that mutual loop between machine and human and to leave a lasting impression, in line with this definition.

LIKE doesn’t mean “like”. The inspiration comes from a storyline in the 2024 Taiwanese series Imperfect Us: Chien Ching-fen taps LIKE on the fancy posts of Rebecca, her husband’s ex. The motive behind this digital interaction is not genuine love for the content but jealousy, along with a subtle signal of observation: “I am reading your post, Rebecca”. It made me reflect on social media interactions. In principle, people should LIKE a post for its content. In many cases, however, people LIKE others’ posts for other reasons, such as conforming to the expectations of friendship. I discussed the concept with my friends and my professor, and they had all encountered such situations in their own lives. Therefore, I wanted to capture and exaggerate these nuanced intentions.

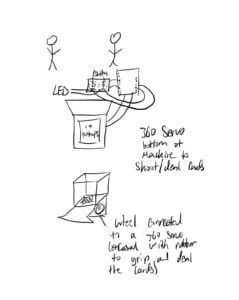

At the beginning of the project, I wanted to involve two users, one for posting a photo and the other for explaining their motives behind the LIKE.

(Initial Design)

Professor Andy Garcia pointed out that people might not reveal their real intentions if they were friends or family members of the person who posted the picture. Therefore, instead of physicalizing social media literally, I chose a slot machine as an analogy for the randomness and diversity of viewer feedback. Since the slot machine does not depict live feedback from an actual viewer, the project was reduced to a single user.

(Updated Design)

The updated design from the project proposal phase is as follows. The user adjusts the slide potentiometers, which act as filters, and presses a button to freeze the camera image on the computer, as if taking a picture and posting it on social media. They then pull the lever, which has a tilt sensor attached, to generate three random emojis illustrating the reasons other people LIKE their post besides appreciation of the picture. The takeaway for the audience is twofold: we present a “filtered” self in the virtual world, since the digital world can never show our multi-dimensional identity, and viewers’ LIKEs may not be heartfelt but driven by various other considerations.

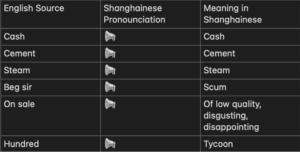

During User Testing, most of the feedback concerned the interpretation of the emojis. Users wanted the emojis to be explained by the installation itself rather than by me explaining the psychology of the LIKE. So I decided to make the interface a pseudo-Instagram page with comments that explain the emojis. Eventually, many users realized that the comments corresponded to the slot machine outcomes. For instance, ❤️‍🩹 stands for “I LIKE it to pretend that we are fine”; 🙇‍♂️ stands for “I LIKE it to follow the trend”; 🤔 stands for “I LIKE it to look smart tho I don’t get it”.

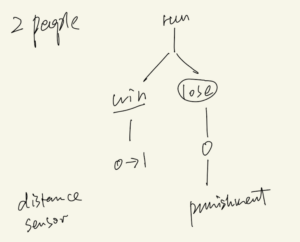

(Flowchart)

In the end, I hope the project realizes my understanding of interaction: the audience works with the system and the system processes the input; the system outputs new instructions and the audience continues the conversation. Hopefully, they will reflect on their daily use of social media. The project does not have to be a satire on two-sided dishonesty. After all, social media is already a virtual world where authenticity is infeasible, and LIKE is just an oversimplified function within it.

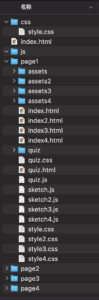

C. FABRICATION AND PRODUCTION:

Before User Testing:

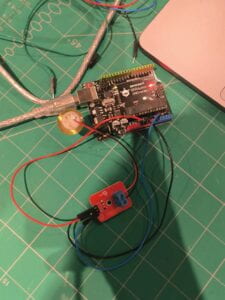

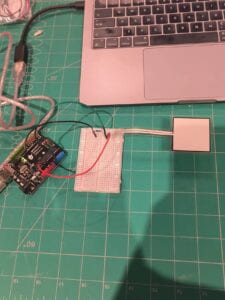

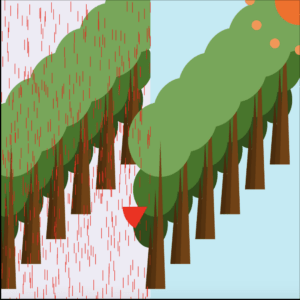

First, I tried out a potentiometer and mapped its analog reading to the filter parameter.

(Circuit)

(Video: Potentiometer to Filter)

The key issue here is to map the potentiometer reading to a narrow POSTERIZE range, such as 2 to 16, rather than the full range the filter accepts (2 to 255). Across the full range, most settings produce no visible difference.

int f = (int) map(arduino_values[0], 0, 1023, 16, 2);

filter(POSTERIZE, f);
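To make the range mapping concrete, here is a minimal plain-Java sketch of the same arithmetic. It assumes the linear formula that Processing's map() uses; the class name PosterizeMap and the helper toLevels are hypothetical names introduced only for illustration, and the clamp is an added safety step that is not in the original sketch.

```java
// Plain-Java illustration of the range mapping; PosterizeMap and toLevels are hypothetical names.
class PosterizeMap {
    // Linear rescale of v from [inLo, inHi] to [outLo, outHi], as Processing's map() does.
    static float map(float v, float inLo, float inHi, float outLo, float outHi) {
        return outLo + (outHi - outLo) * ((v - inLo) / (inHi - inLo));
    }

    // Convert a 10-bit analog reading (0..1023) to a POSTERIZE level between 16 and 2.
    static int toLevels(int reading) {
        int level = (int) map(reading, 0, 1023, 16, 2);
        // Assumption: clamp to the target range in case a reading falls outside 0..1023.
        return Math.max(2, Math.min(16, level));
    }

    public static void main(String[] args) {
        System.out.println(toLevels(0));    // prints 16
        System.out.println(toLevels(512));  // prints 8
        System.out.println(toLevels(1023)); // prints 2
    }
}
```

Because the output range is given as 16 to 2, sliding the potentiometer up lowers the number of color levels, which strengthens the visible posterize effect.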

Furthermore, I ran into a problem: the camera froze only once when the sketch merely checked whether the button reading was HIGH or LOW.

Thankfully, IMA Fellow Kevin suggested borrowing the idea behind millis()-based timing code, which stores a previous value and compares it with the current one. I therefore introduced prev to store the previous button reading and v to store the camera state, so that the camera toggles only at the moment the button goes from released to pressed. In addition, the POSTERIZE value is updated only inside an if-condition on v, so the filter stops changing once the photo is taken.

(Video: Freeze the camera)

if (v == 0) { // update filter only when camera is running

pf = (int) map(arduino_values[0], 0, 1023, 16, 2);

}

filter(POSTERIZE, pf);

//button capture

if (arduino_values[1] == 1 && prev == 0) {

if (v == 0) {

cam.stop();

v = 1; // photo taken

} else {

cam.start();

v = 0; // camera on again

}

}

prev = arduino_values[1]; // update the previous button state

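The press-to-toggle logic described above can be sketched outside Processing as a small state machine. The following is a minimal plain-Java sketch under stated assumptions, not the project's actual code: the class name CameraToggle and the method update are hypothetical, while prev and the frozen flag play the roles of prev and v in the snippet.

```java
// Hypothetical illustration of rising-edge detection for a push button.
class CameraToggle {
    private int prev = 0;           // previous button reading (0 = released, 1 = pressed)
    private boolean frozen = false; // false: camera live, true: photo frozen (role of v)

    // Feed one button reading per frame; returns whether the camera is frozen afterwards.
    boolean update(int button) {
        // Toggle only on the transition from released to pressed,
        // so holding the button down does not flip the state every frame.
        if (button == 1 && prev == 0) {
            frozen = !frozen;
        }
        prev = button; // remember this reading for the next frame
        return frozen;
    }

    public static void main(String[] args) {
        CameraToggle toggle = new CameraToggle();
        int[] readings = {0, 1, 1, 1, 0, 1}; // press and hold, release, press again
        for (int r : readings) {
            System.out.println(r + " -> frozen=" + toggle.update(r));
        }
    }
}
```

Comparing the current reading with the previous one is what lets a single button both freeze and restart the camera.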

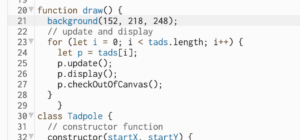

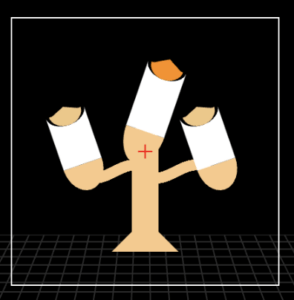

What’s more, I learned from the YouTube video Processing 3 – Slot machine | Game Designing #10 | HBY coding academic and implemented the slot machine in Processing. The main trick is to load the pictures into an array and move them in the Y direction. When the current picture passes a certain position, it is replaced by the next one.

if (y[i]>20) {

//set the image to the initial position

y[i]=0;

//prepare the next image

idx[i]++;

//if the user presses the stop button, all the reels reduce their speed

if (stop_button==1 && acc_y[i]>0) {

acc_y[i] -= random(5, 10);

if (acc_y[i] < 0) {

acc_y[i] = 0; // Ensuring that the speed does not go negative

}

}

}

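The scrolling and slow-down behaviour can be illustrated with a minimal plain-Java sketch of a single reel. The class Reel and its members are hypothetical names; the wrap threshold of 20 and the six symbols follow the snippet, and a fixed slowdown value replaces random(5, 10) so that the example is deterministic.

```java
// Hypothetical single-reel model of the scroll, wrap, and slow-down logic.
class Reel {
    static final int SYMBOLS = 6;  // number of emoji images on the reel
    static final int WRAP_AT = 20; // y offset after which the next image is shown

    int y = 0;   // current vertical offset of the image
    int speed;   // pixels moved per frame (role of acc_y)
    int idx = 0; // running image counter (role of idx)

    Reel(int speed) {
        this.speed = speed;
    }

    // Advance one frame; when stopping, reduce the speed each time the image wraps.
    void step(boolean stopping, int slowdown) {
        y += speed;
        if (y > WRAP_AT) {
            y = 0; // reset the image to its initial position
            idx++; // prepare the next image
            if (stopping && speed > 0) {
                speed = Math.max(0, speed - slowdown); // never go negative
            }
        }
    }

    boolean stopped() {
        return speed == 0;
    }

    int symbol() {
        return idx % SYMBOLS;
    }

    public static void main(String[] args) {
        Reel reel = new Reel(30);
        reel.step(false, 7); // spinning freely
        while (!reel.stopped()) {
            reel.step(true, 7); // stop requested: slow down on each wrap
        }
        System.out.println("stopped on symbol " + reel.symbol());
    }
}
```

Since the speed only changes at a wrap, the reel in this sketch always comes to rest with y reset to 0, so the final image sits at its initial position.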

(Video: Slot Machine without Arduino)

After User Testing:

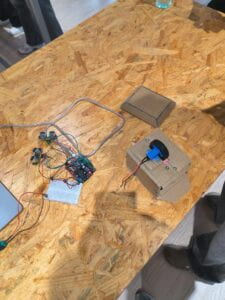

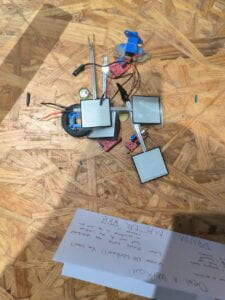

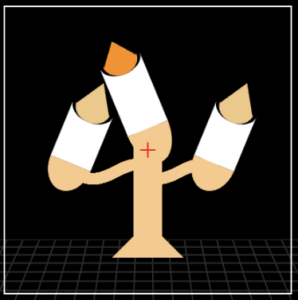

As stated earlier, the feedback from User Testing mostly concerned the physicalization of the pseudo-phone and the slot machine, and a readable interpretation of the emojis. Following my original flowchart, I separated the project into three parts: pseudo-phone, computer screen, and slot machine. Since people also use social media platforms on computers, I decided to leave the computer exposed rather than cover it.

For the pseudo-phone, I added two extra slide potentiometers and an external camera. The two new potentiometers diversify the filter outcomes, and the external camera makes the pseudo-phone resemble a smartphone more closely. I chose slide potentiometers over other types because their horizontal adjustment is closer to how people adjust filter strength on their phones.

Using a laser-cut wooden panel, I managed to hide the potentiometers, the button, and the camera. Most importantly, the cutouts in the panel should be slightly larger than the actual components so that they fit into the panel smoothly.

(Failed Pseudo-Phone)

(Video: Laser Cutting)

(Pseudo-Phone)

I had trouble producing a variety of filter effects in Processing. According to the Processing reference for the filter() function, only three modes (THRESHOLD, POSTERIZE, and BLUR) take a parameter, and THRESHOLD converts the photo to black and white rather than keeping it in color. In other words, only two of the parameterized filter() modes were usable here. To map the third potentiometer, I used tint() instead.

if (v == 0) {

tf = (int) map(arduino_values[3], 0, 1023, 0, 255);

}

tint(255, tf);

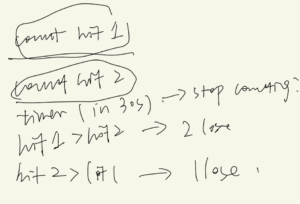

After finishing the pseudo-phone, I turned to the slot machine lever. Thanks to IMA fellow Shengli, I experimented with the tilt sensor. Fortunately, I found some wooden stickers and rubber bands for the prototype. The tilt sensor is used because it can detect whether the lever is tilted or not. It is the most intuitive choice.

(Video: Lever Test)

Then with the help of Professor Garcia, I finished the production by cutting some slots to stabilize the rubber bands.

(Video: Tilt Sensor Test)

I included a second button to physicalize the action of stopping the slot machine. (Video: Stop the Slot Machine)

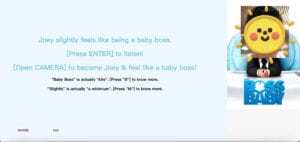

During User Testing, users also suggested that the interface should resemble social media, so I decided to make it look like an Instagram page.

Once the camera is frozen, the screen shows an interface that looks like Instagram, with a random number of likes and comments. I chose Alex as the username since it is a gender-neutral name.

(Interface after camera frozen)

The comments reveal the meaning of the emojis, which stand for the motives behind the LIKEs, once the slot machine has stopped.

(Interface after slot machine stopped)

Since this procedure involves several steps (detecting whether the slot machine has stopped, determining the final results, and displaying them), I split the code into separate functions to keep it organized. Several arrays hold the possible outcomes and the state needed to detect the final results.

//slot machine meanings

String[] descriptions = {

"I LIKE it for the content",

"I LIKE it to follow the trend",

"I LIKE it to pretend that we are fine",

"I LIKE it to look smart tho I don't get it",

"I LIKE it unconsciously when I am scrolling social media",

"I LIKE it to let you know that I READ your post"

};

String[] names = {

"Harrison",

"Rebecca",

"Victoria"

};

boolean[] reelStopped = {false, false, false};

int[] results = new int[3]; // Results of the spin

//final result

boolean allReelsStopped() {

return acc_y[0] == 0 && acc_y[1] == 0 && acc_y[2] == 0;

}

//display results

void displayResults() {

for (int i = 0; i < 3; i++) {

int finalidx = idx[i] % 6; // Assuming you have 6 descriptions

String resultText = descriptions[finalidx];

textSize(20);

fill(0); // Black text

// Adjust text position according to your canvas setup

text(names[i], (-cam.width+25)*0.075+60, 680 + i * 30);

push();

fill(100, 98,98);

text(resultText, (-cam.width+25)*0.075+140, 680 + i * 30);

pop();

}

}
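The lookup from a stopped reel to its comment can be isolated from the drawing code. Below is a minimal plain-Java sketch of that step; the class LikeReasons and the methods describe and allStopped are hypothetical names, and the description strings are copied from the array above. Math.floorMod is used here instead of % as an assumption-free way to keep the index in range.

```java
// Hypothetical helper that turns reel state into the comment text.
class LikeReasons {
    static final String[] DESCRIPTIONS = {
        "I LIKE it for the content",
        "I LIKE it to follow the trend",
        "I LIKE it to pretend that we are fine",
        "I LIKE it to look smart tho I don't get it",
        "I LIKE it unconsciously when I am scrolling social media",
        "I LIKE it to let you know that I READ your post"
    };

    // The reel counter keeps growing while spinning, so wrap it onto the array.
    static String describe(int reelIndex) {
        return DESCRIPTIONS[Math.floorMod(reelIndex, DESCRIPTIONS.length)];
    }

    // Results are shown only when every reel speed has dropped to zero.
    static boolean allStopped(int[] speeds) {
        for (int s : speeds) {
            if (s != 0) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        int[] speeds = {0, 0, 0};
        int[] counters = {7, 12, 3}; // example counters after a spin
        if (allStopped(speeds)) {
            for (int c : counters) {
                System.out.println(describe(c));
            }
        }
    }
}
```

Keeping the lookup separate from text() and fill() calls makes it possible to check the emoji-to-comment pairing without running the camera or the serial connection.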

The overall procedure for my project is as follows.

(Video: Demo)

D. CONCLUSIONS:

(Presentation)

The goal of this project is to physicalize and gamify the post-and-like function of social media through an installation that involves the audience, leaving them reflecting on the inauthenticity of digital representation and the various motives behind a LIKE. The audience interacted with the project as expected, since the gadgets are largely self-explanatory: they adjusted the filters, took a picture, then pulled the lever and read the motives behind the likes. I believe the results align with my definition of interaction. With more time, several improvements could be made: the wires could be hidden more neatly; the slot machine could trigger special effects when the three emojis match; the interface could be more Instagram-like; more sensors could be introduced to physicalize more social media functions; and the slot machine itself could be physicalized. Overall, I consider this project a success. Despite numerous setbacks and challenges throughout the process, each obstacle I overcame contributed to my growth and strengthened my problem-solving abilities. The coding and craft skills I learned will carry over into my future creative work. The biggest takeaway is that a project does not have to be an exact replica of the real world; certain exaggerations and analogies can make the project more profound and the production process easier.

(Full Demonstration)

(IMA Show)

E. DISASSEMBLY:

F. APPENDIX

Credit: YouTube user @hbycodingacademic7667 (Processing Code); Professor Andy Garcia (Fabrication)

FULL CODE

Arduino

int buttonPin1 = 7;

int buttonPin2 = 4;

int buttonState1 = LOW;

int buttonState2 = LOW;

int previousState1 = LOW;

int tiltPin= 2;

boolean tiltState = 0;

void setup() {

Serial.begin(9600);

pinMode(buttonPin1, INPUT);

pinMode(buttonPin2, INPUT);

pinMode(tiltPin, INPUT);

}

void loop() {

// to send values to Processing assign the values you want to send

// this is an example:

int button1 = digitalRead(buttonPin1);

int filter1 = analogRead(A1);

int filter2 = analogRead(A2);

int filter3 = analogRead(A3);

int tilt = digitalRead(tiltPin);

int button2 = digitalRead(buttonPin2);

// send the values keeping this format

Serial.print(button1);

Serial.print(","); // put comma between sensor values

Serial.print(filter1);

Serial.print(","); // put comma between sensor values

Serial.print(filter2);

Serial.print(","); // put comma between sensor values

Serial.print(filter3);

Serial.print(","); // put comma between sensor values

Serial.print(tilt);

Serial.print(","); // put comma between sensor values

Serial.print(button2);

Serial.println(); // add linefeed after sending the last sensor value

// too fast communication might cause some latency in Processing

// this delay resolves the issue

delay(20);

//button

buttonState1 = digitalRead(buttonPin1);

buttonState2 = digitalRead(buttonPin2);

// end of example sending values

}

Processing

import processing.serial.*;

Serial serialPort;

int NUM_OF_VALUES_FROM_ARDUINO = 6; /* CHANGE THIS ACCORDING TO YOUR PROJECT */

/* This array stores values from Arduino */

int arduino_values[] = new int[NUM_OF_VALUES_FROM_ARDUINO];

int pf = 0;

int bf = 0;

int tf = 0;

int prev = 0;

int pre = 0;

int v = 0;

int lx = 640; //lever x

int ly = 250; //lever y

int likes = 0;

int coms = 0;

//camera

import processing.video.*;

String[] cameras = Capture.list();

Capture cam;

//slot machine

//declare N-elements array for reading image

PImage [] img = new PImage [9];

int width=300/2, height=200/2;

//slot machine sound

import processing.sound.*;

// declare a SoundFile object

SoundFile sound;

//slot machine meanings

String[] descriptions = {

"I LIKE it for the content",

"I LIKE it to follow the trend",

"I LIKE it to pretend that we are fine",

"I LIKE it to look smart tho I don't get it",

"I LIKE it unconsciously when I am scrolling social media",

"I LIKE it to let you know that I READ your post"

};

String[] names = {

"Harrison",

"Rebecca",

"Victoria"

};

boolean[] reelStopped = {false, false, false};

int[] results = new int[3]; // Results of the spin

void setup() {

size(725, 1000);

//white color background

background(255);

//camera

//cam = new Capture(this, cameras[0]);

// If this doesn't work, try one of the following lines instead:

//cam = new Capture(this, cameras[0], 30); // for all OS

cam = new Capture(this, "pipeline:avfvideosrc device-index=0"); // for macOS (try different indices too)

//cam = new Capture(this, "pipeline:ksvideosrc device-index=0"); // for Windows (try different indices too)

cam.start();

printArray(Serial.list());

// put the name of the serial port your Arduino is connected

// to in the line below - this should be the same as you're

// using in the "Port" menu in the Arduino IDE

serialPort = new Serial(this, "/dev/cu.usbmodem101", 9600);

//reading N images

for (int i=0; i<6; i++) img[i] = loadImage((i+1)+".png");

img[7] = loadImage("lever.png");

img[8] = loadImage("stop.png");

//sound

sound = new SoundFile(this, "slot.mp3");

}

//array for three different reels

//y => the y position of an image

//acc_y => the speedup for scrolling down an image

int y[]={0, 0, 0}, acc_y[]={0, 0, 0}, idx[]={0, 0, 0};

int stop_button=0;

void draw() {

background(255, 255, 255);

push();

if (cam.available()) {

cam.read();

}

scale(-1, 1);

if (v == 0) {

tf = (int) map(arduino_values[3], 0, 1023, 0, 255);

}

tint(255, tf);

//image(cam, -640, 0);

image(cam, -cam.width, 50);

//filter

// receive the values from Arduino

getSerialData();

// use the values like this:

////float x = map(arduino_values[0], 0, 1023, 0, width);

if (v == 0) { // Update filter only when camera is running

pf = (int) map(arduino_values[1], 0, 1023, 2, 16); // Update posterize filter setting

bf = (int) map(arduino_values[2], 1023, 0, 0, 8); // Update blur filter setting

}

filter(POSTERIZE, pf); // Apply the stored or current posterize filter

filter(BLUR, bf);

//filter(THRESHOLD, tf);

//button capture

// Handle button toggle for camera control

if (arduino_values[0] == 1 && prev == 0) {

// Toggle the video state with each button press

if (v == 0) {

cam.stop();

v = 1; // Camera is now off

likes = int(random(3, 1000));

coms = int(random(3, likes+1));

} else {

cam.start();

v = 0; // Camera is now on

}

}

prev = arduino_values[0]; // Update the previous button state

filter(POSTERIZE, 255); // 255 levels per channel, so the visible effect is minimal

filter(BLUR, 0);

pop();

//3 reels

for (int i=0; i<3; i++) {

//show two images on the canvas

image(img[(idx[i])%6], i*(width-100)-50, y[i]+500);

//scroll down with speeds

y[i]+=acc_y[i];

//refresh

if (y[i]>20) {

//set the image to the initial position

y[i]=0;

//prepare the next image

idx[i]++;

//if the user presses the stop button, all the reels reduce their speed

if (stop_button==1 && acc_y[i]>0) {

acc_y[i] -= random(5, 10);

if (acc_y[i] < 0) {

acc_y[i] = 0; // Ensuring that the speed does not go negative

}

} //a decreased value

}

}

pressed();

if (stop_button == 1 && allReelsStopped()&&v==1) {

displayResults();

}

}

void getSerialData() {

while (serialPort.available() > 0) {

String in = serialPort.readStringUntil( 10 ); // 10 = '\n' Linefeed in ASCII

if (in != null) {

print("From Arduino: " + in);

String[] serialInArray = split(trim(in), ",");

if (serialInArray.length == NUM_OF_VALUES_FROM_ARDUINO) {

for (int i=0; i<serialInArray.length; i++) {

arduino_values[i] = int(serialInArray[i]);

}

}

}

}

}

void pressed() {

//current lever state

int current = arduino_values[4];

if (v==1) {

image(img[7], lx, ly);

image(img[8], lx+5, ly+10); //heart

push();

scale(-0.075, 0.075);

image(cam, -cam.width+25, 100);

pop();

push();

textSize(40);

fill(0, 0, 0);

text("Alex", (-cam.width+25)*0.075+100, 40);

pop();

push();

fill(0, 0, 0);

textSize(20);

text(likes + " likes", (-cam.width+25)*0.075+60, 600);

text("Alex", (-cam.width+25)*0.075+60, 630);

pop();

push();

fill(100, 98, 98);

textSize(20);

text("check out my new profile pic!!!", (-cam.width+25)*0.075+100, 630);

text("View all " + coms + " comments", (-cam.width+25)*0.075+60, 650);

pop();

if (current == 1 && pre == 0) {

stop_button=0;

println("pressed the lever\n");

for (int i=0; i<3; i++) {

acc_y[i]+=random(10, 50); //random number

}

// if the sound is not already playing

if (sound.isPlaying() == false) {

// start playing it

sound.play();

}

}

}

pre = current;

//button2

if (arduino_values[5] == 1) {

println("pressed the stop button\n");

stop_button = 1;

if (sound.isPlaying() == true) {

// stop playing it

sound.stop();

}

}

}

//final result

boolean allReelsStopped() {

return acc_y[0] == 0 && acc_y[1] == 0 && acc_y[2] == 0;

}

//display results

void displayResults() {

for (int i = 0; i < 3; i++) {

int finalidx = idx[i] % 6; // Assuming you have 6 descriptions

String resultText = descriptions[finalidx];

textSize(20);

fill(0); // Black text

// Adjust text position according to your canvas setup

text(names[i], (-cam.width+25)*0.075+60, 680 + i * 30);

push();

fill(100, 98, 98);

text(resultText, (-cam.width+25)*0.075+140, 680 + i * 30);

pop();

}

}

🙇‍♂️ “I LIKE it to follow the trend”,

❤️‍🩹 “I LIKE it to pretend that we are fine”,

🤔 “I LIKE it to look smart tho I don’t get it”,

🎲 “I LIKE it unconsciously when I am scrolling social media”,

🤳 “I LIKE it to let you know that I READ your post”