Cape Your Emotion – Yijia Chen – Gottfried Haiden

- CONCEPTION AND DESIGN:

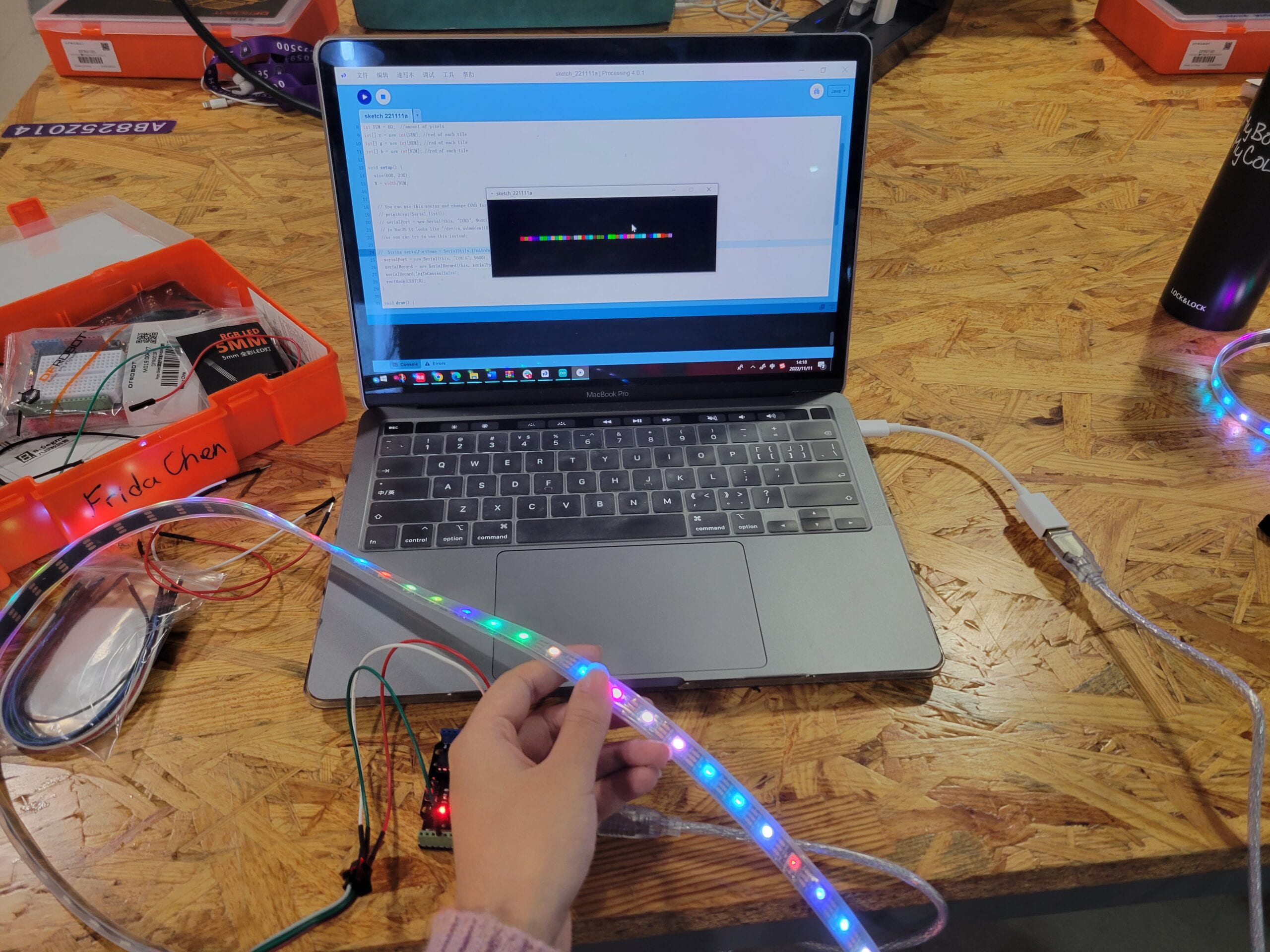

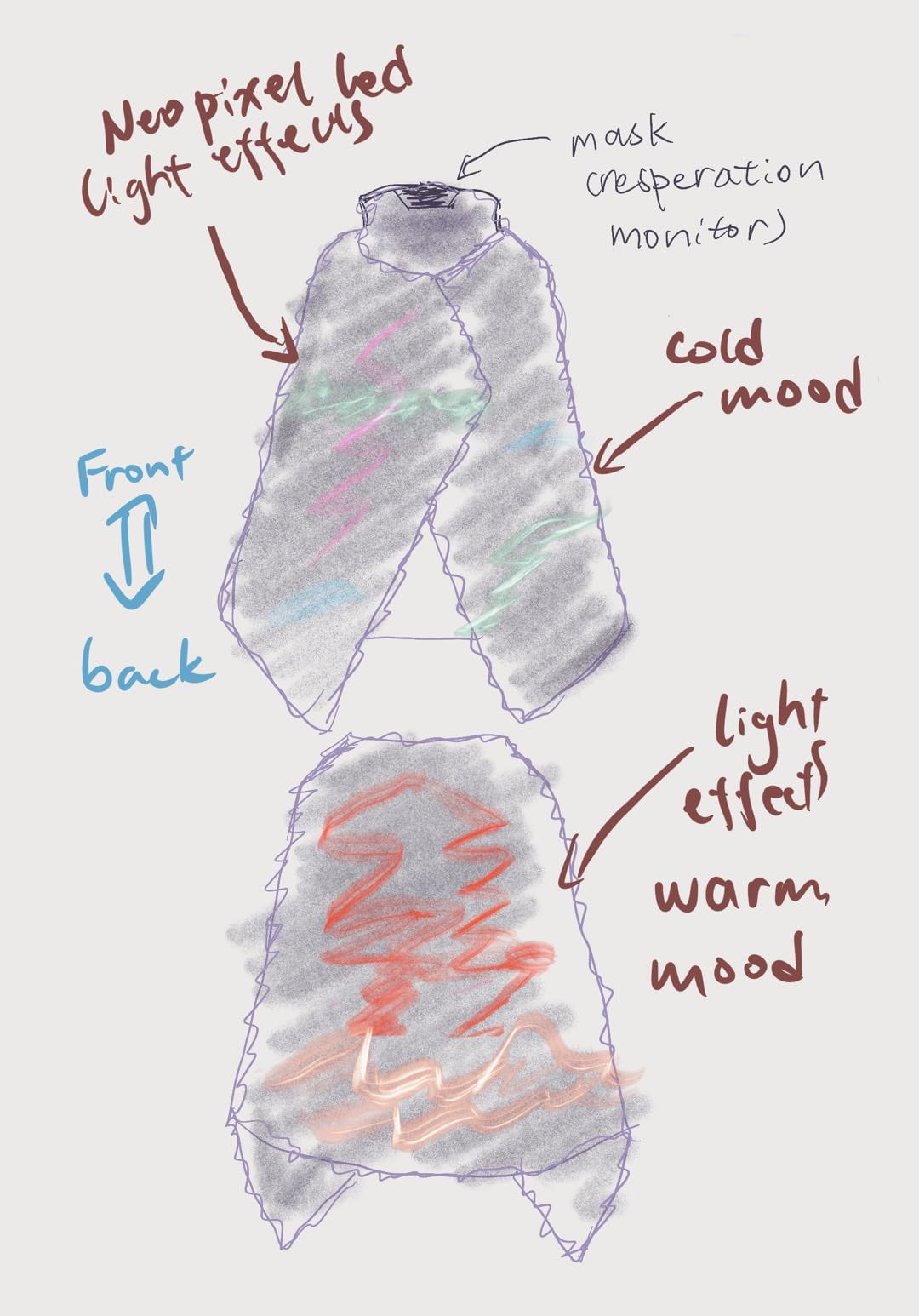

The first inspiration for this project was Jian Yu’s guest talk at an IMA workshop on wearable devices, where the artist introduced the concept of wearable accessories and clothes with LEDs. The decision to make a cape came from our shared experience of being introverts: we conceived a wearable interactive device that visualizes the user’s emotional mood and thereby invites more engagement between people who feel the same way. By using a pulse sensor to monitor the user’s heart rate and translating it into NeoPixel LED light effects, we intend to visualize emotions and break people’s social isolation, especially in the context of the pandemic.

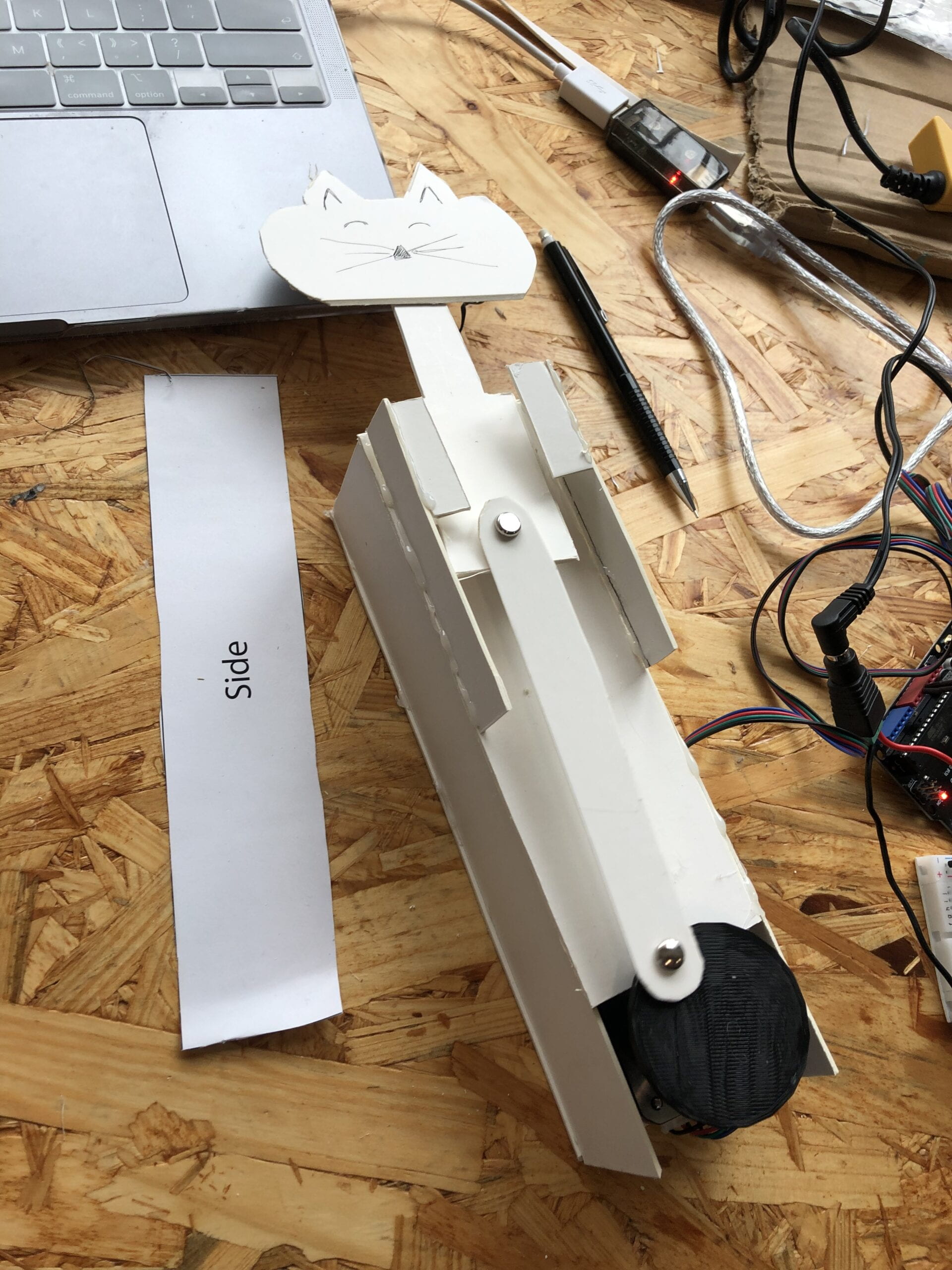

During user testing, some users pointed out that heart rate reflects the user’s mental state more than their emotional mood, which prompted us to do some research on the relationship between heart rate and mental health. Therefore, on the back of the cape, we arranged the LED strip in the shape of a side-face silhouette to suggest the spirit of the user; it also looks like a flying bird when the user moves. Although the user cannot see it directly, the people around them can observe the light and describe it. User testing also revealed that the user might not get enough direct feedback if the main light effect sits on the back. In response, we added an 8×8 LED matrix on the left front of the cape that displays a beating heart, so that the user can easily check their current heart rate when needed.
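To make the matrix layout concrete, here is a minimal sketch in plain C++ (not Arduino code) of how a list of pixel indices, like the `heart` array in the annex, lands on an 8×8 grid. It assumes the matrix is wired row by row starting from the top-left corner; `toIndex`, `renderMatrix`, and `kHeartPixels` are illustrative names, and a matrix wired in a zigzag or mounted rotated on the cape would need a different mapping.

```cpp
#include <array>
#include <string>

constexpr int kMatrixSide = 8;  // 8x8 matrix, 64 pixels in total

// Assumption: row-major wiring, so index = row * 8 + col.
constexpr int toIndex(int row, int col) {
    return row * kMatrixSide + col;
}

// Pixel indices copied from the heart[] array in the annex.
constexpr std::array<int, 14> kHeartPixels = {
    11, 12, 13, 17, 21, 25, 29, 34, 38, 42, 46, 50, 51, 52};

// Returns a text preview: '#' for lit pixels, '.' for dark ones,
// one line of text per matrix row.
std::string renderMatrix(const std::array<int, 14>& lit) {
    std::string grid(kMatrixSide * kMatrixSide, '.');
    for (int index : lit) {
        // Ignore indices that fall outside the matrix.
        if (index >= 0 && index < kMatrixSide * kMatrixSide) {
            grid[index] = '#';
        }
    }
    std::string out;
    for (int row = 0; row < kMatrixSide; ++row) {
        out += grid.substr(row * kMatrixSide, kMatrixSide);
        out += '\n';
    }
    return out;
}
```

Printing `renderMatrix(kHeartPixels)` gives a quick text preview of which pixels light up, which can help when checking a pattern before uploading it to the board.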

We believe that by visualizing the heart rate, this device reflects the emotional stability of the user and creates an opportunity for those who find it hard to express themselves to be noticed by the people around them. This gesture calls on people to raise awareness of mental health and to care for those around them, which goes beyond human-machine interaction.

- FABRICATION AND PRODUCTION:

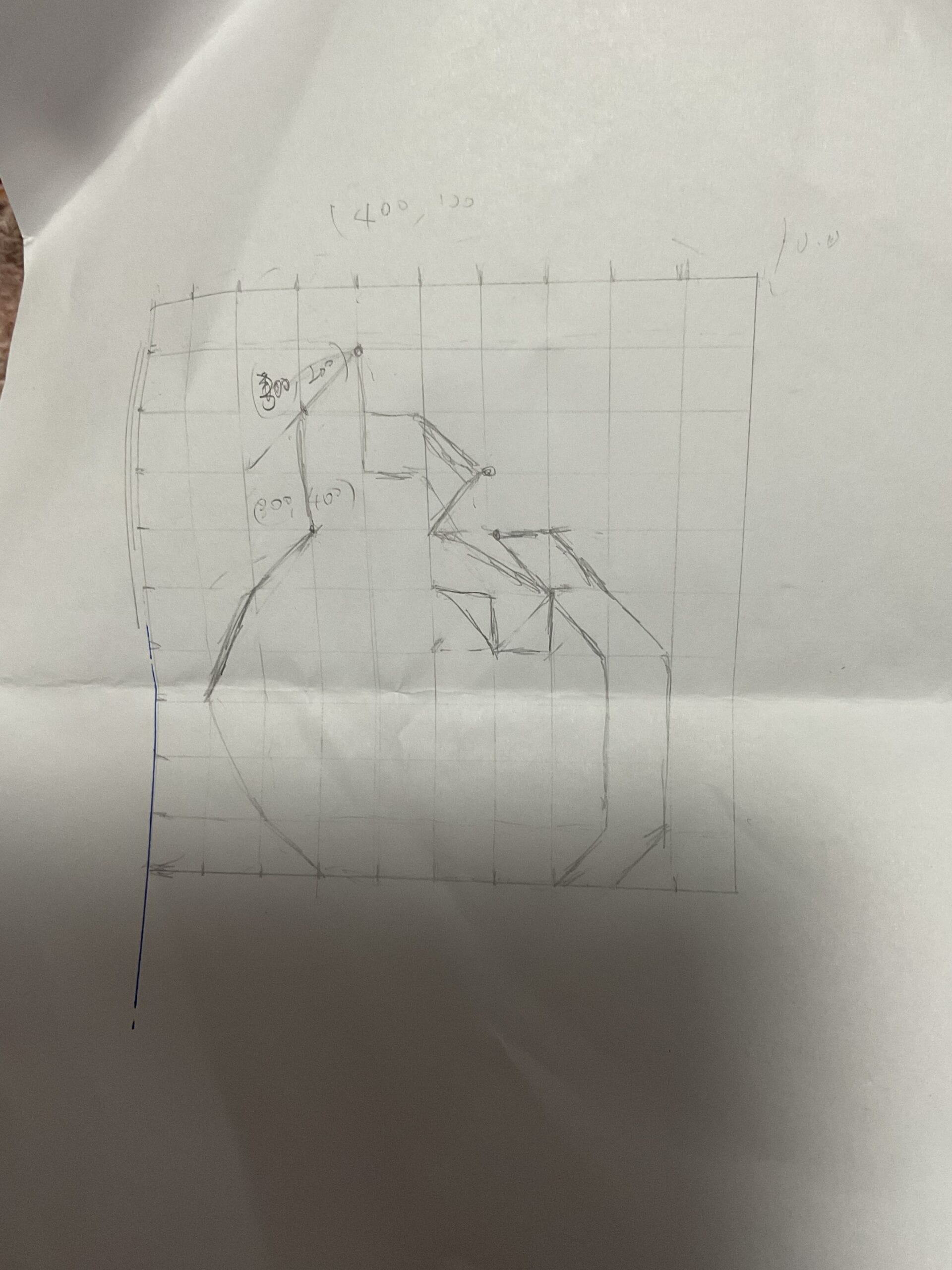

Since this is an untethered wearable device, the first step was to determine which fabric to use for the cape. I drew a sketch of the pattern and shape of the cape, then we ordered two capes made of different fabrics to test how well each transmits light with the NeoPixel strip placed inside, and chose the more suitable one. This fabric adds a hazy, diffusing effect to the LEDs. We also learned to use the sewing machine, but the shape we designed for the light strip was rather complicated, so we had to seek professional help to finish it.

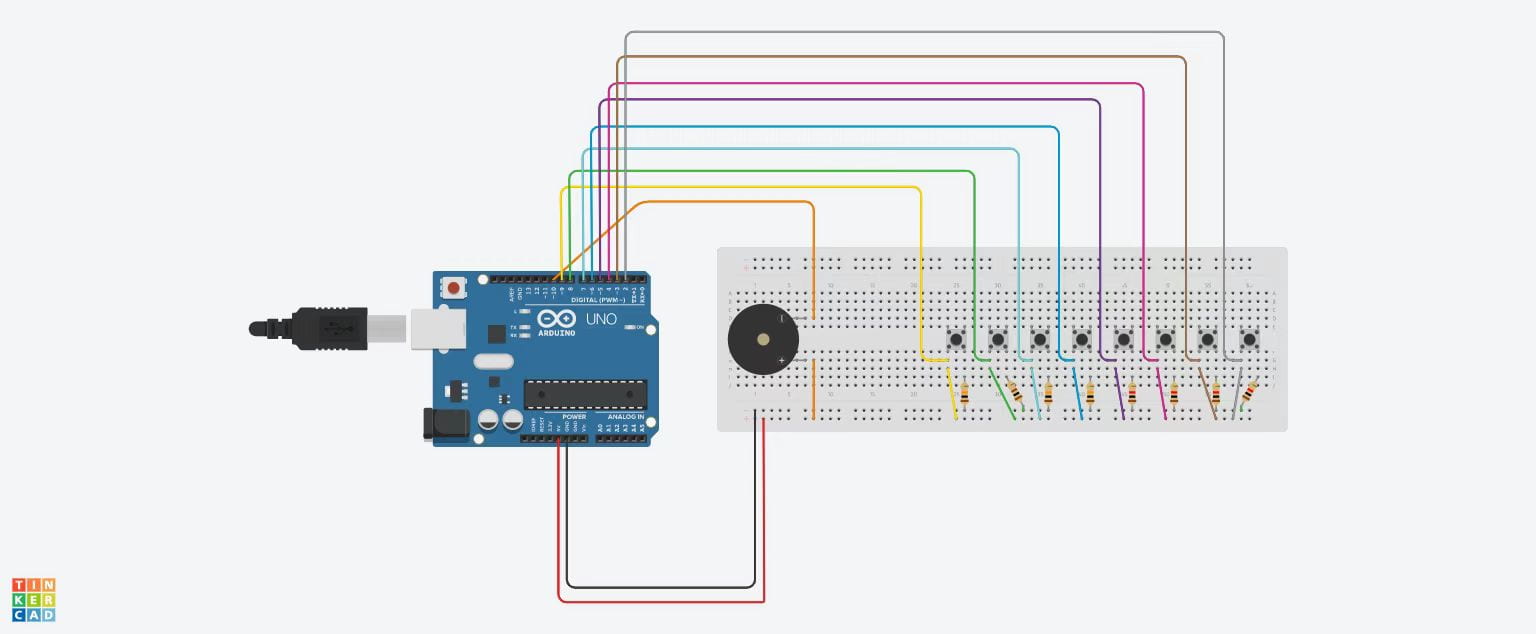

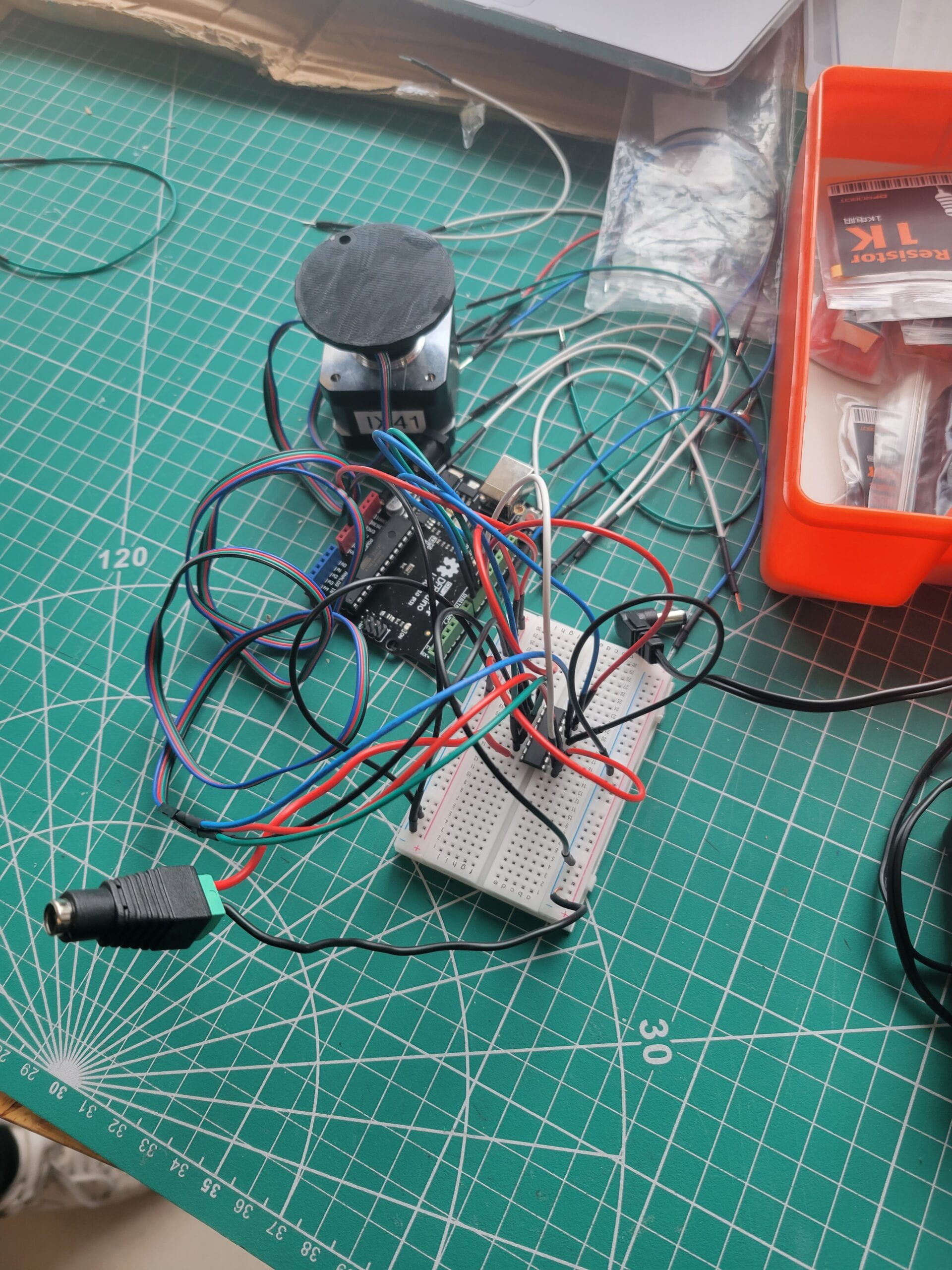

For the sensor, we started with a finger-clip heart rate sensor, which failed to deliver data in the form we wanted, so we switched to the pulse sensor. It gives us feedback every second, so we could build a light effect driven by the heartbeat that resembles a breathing light; it also allowed us to divide heart rates into levels and assign a different color to each.

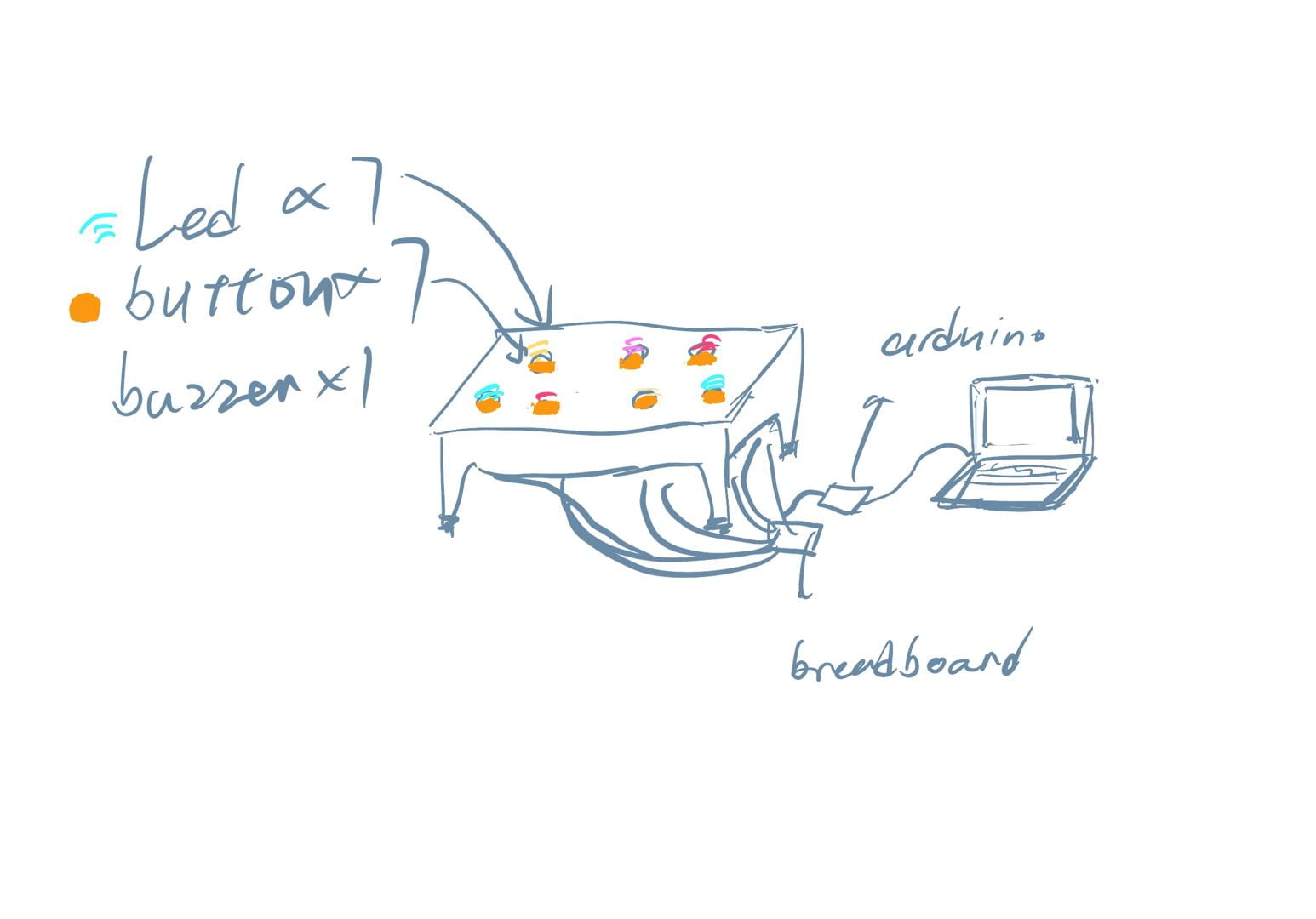

We also spent a lot of time finalizing the overall apparel design. A large part of that was cable management, which was a major issue during user testing. The pulse sensor has to be strapped tightly around a finger of the user’s right hand, so we used long wires and secured them with stitching and hot glue to make sure the cable would not disconnect. We also added two pockets inside to hold the portable power supply. To simplify the circuit and get rid of the breadboard, so that the user is not burdened by extra weight, we soldered the wires 3-to-1 and made sure they would not tangle easily during movement.

- CONCLUSIONS:

Our sole goal for this project was that, by visualizing the heart rate, the device would reflect the emotional stability of the user and create an opportunity for those who find it hard to express themselves to be noticed and cared for by the people around them. During our final presentation, our users engaged in active conversations with the audience as they tried to figure out what the visualization on the back was, which I believe is exactly the point of our design. One audience member pointed out that the device might be useful for gym workouts, because the screens on traditional portable heart-monitoring sports devices are often too small and inconvenient to look at during exercise. Another noticed that it could be used in hospitals, so that nurses and doctors can check on their patients’ status more easily. Both comments indicate the practical potential of our design.

If we had more time, we could make the light effect more delicate and indicative, incorporating more research on the medical aspect. We also thought about adding Processing extensions, such as a small game that uses the heartbeat as input, but that seems to clash with the device’s nature as an untethered wearable. In conclusion, I believe this device has achieved what I envisioned for it as an interactive installation: it invites interaction constantly, not only on a human-machine level but, more deeply, on a human-human level.

(For some reason, uploading new images to this post kept failing for days, so these videos are all I have for now; I hope they can serve as visual references.)

- ANNEX

#include <FastLED.h>

#define NUM_LEDS1 60

#define NUM_LEDS2 64

#define DATA_PIN1 3

#define DATA_PIN2 6

CRGB leds1[NUM_LEDS1];

CRGB leds2[NUM_LEDS2];

int fade = 0;

int heart[14] = { 11, 12, 13, 17, 21, 25, 29, 34, 38, 42, 46, 50, 51, 52 };

/* Getting_BPM_to_Monitor prints the BPM to the Serial Monitor, using the least lines of code and PulseSensor Library.

* Tutorial Webpage: https://pulsesensor.com/pages/getting-advanced

*

--------Use This Sketch To------------------------------------------

1) Displays user's live and changing BPM, Beats Per Minute, in Arduino's native Serial Monitor.

2) Print: "♥ A HeartBeat Happened !" when a beat is detected, live.

3) Learn about using a PulseSensor Library "Object".

4) Blinks LED on PIN 13 with user's Heartbeat.

--------------------------------------------------------------------*/

#define USE_ARDUINO_INTERRUPTS true // Set up low-level interrupts for the most accurate BPM math.

#include <PulseSensorPlayground.h> // Includes the PulseSensorPlayground Library.

// Variables

const int PulseWire = 0; // PulseSensor PURPLE WIRE connected to ANALOG PIN 0

const int LED13 = 13; // The on-board Arduino LED, close to PIN 13.

int Threshold = 550; // Determine which Signal to "count as a beat" and which to ignore.

// Use the "Getting Started Project" to fine-tune Threshold Value beyond default setting.

// Otherwise leave the default "550" value.

PulseSensorPlayground pulseSensor; // Creates an instance of the PulseSensorPlayground object called "pulseSensor"

int desiredBrightness = 64;

void setup() {

Serial.begin(9600); // For Serial Monitor

FastLED.setBrightness(50);

FastLED.addLeds<NEOPIXEL, DATA_PIN1>(leds1, NUM_LEDS1); // GRB ordering is assumed

FastLED.addLeds<NEOPIXEL, DATA_PIN2>(leds2, NUM_LEDS2);

// Configure the PulseSensor object, by assigning our variables to it.

pulseSensor.analogInput(PulseWire);

pulseSensor.blinkOnPulse(LED13); //auto-magically blink Arduino's LED with heartbeat.

pulseSensor.setThreshold(Threshold);

// Double-check the "pulseSensor" object was created and "began" seeing a signal.

if (pulseSensor.begin()) {

Serial.println("We created a pulseSensor Object !"); //This prints one time at Arduino power-up, or on Arduino reset.

}

}

void loop() {

for (int j = 0; j < NUM_LEDS2; j++) { // Clear the 8x8 matrix; j must start at 0.

leds2[j] = CRGB::Black;

}

for (int j = 0; j < 14; j++) {

leds2[heart[j]] = CRGB::Red; // Draw the heart on the 8x8 matrix.

}

FastLED.show();

delay(50);

int myBPM = pulseSensor.getBeatsPerMinute(); // Calls function on our pulseSensor object that returns BPM as an "int".

// "myBPM" holds this BPM value now.

if (pulseSensor.sawStartOfBeat()) { // Constantly test to see if "a beat happened".

desiredBrightness = 64; // Reset the brightness to its peak on every beat.

Serial.println("♥ A HeartBeat Happened ! "); // If test is "true", print a message "a heartbeat happened".

Serial.print("BPM: "); // Print phrase "BPM: "

Serial.println(myBPM); // Print the value inside of myBPM.

}

if (desiredBrightness > 0) {

desiredBrightness = desiredBrightness - 4;

}

FastLED.setBrightness(desiredBrightness);

FastLED.show();

delay(10);

if (myBPM >= 55 && myBPM < 70) {

for (int i = 0; i < NUM_LEDS1; i++) {

leds1[i] = CRGB::DeepSkyBlue; // fixed color for this heart rate level

}

FastLED.show(); // update the NeoPixel chain

delay(10); // and sleep a bit

} else if (myBPM >= 70 && myBPM < 90) {

for (int i = 0; i < NUM_LEDS1; i++) {

// leds[i] = blend(CRGB::DeepSkyBlue, CRGB::Amethyst, fade);

leds1[i] = CRGB::DarkOrchid; // fixed color for this heart rate level

}

FastLED.show(); // update the NeoPixel chain

delay(10); // and sleep a bit

} else if (myBPM >= 90 && myBPM < 110) {

for (int i = 0; i < NUM_LEDS1; i++) {

// leds[i] = blend(CRGB::Amethyst, CRGB::LemonChiffon, fade);

leds1[i] = CRGB::DarkOrange; // fixed color for this heart rate level

}

FastLED.show(); // update the NeoPixel chain

delay(10); // and sleep a bit

} else if (myBPM >= 110 && myBPM < 125) {

for (int i = 0; i < NUM_LEDS1; i++) {

// leds[i] = blend(CRGB::LemonChiffon, CRGB::DeepPink, fade);

leds1[i] = CRGB::FireBrick; // fixed color for this heart rate level

}

FastLED.show(); // update the NeoPixel chain

delay(10); // and sleep a bit

} else if (myBPM >= 125 && myBPM < 140) {

for (int i = 0; i < NUM_LEDS1; i++) {

// leds[i] = blend(CRGB::DeepPink, CRGB::FireBrick, fade);

leds1[i] = CRGB::Red; // fixed color for this heart rate level

}

FastLED.show(); // update the NeoPixel chain

delay(10); // and sleep a bit

}

}