Project 1: Generative Visuals

Real-time Visualization of Ballade No.1 by Chopin

Link to My Project: Part 1 & Part 2

Description & Concept

In this project, I want to generate 2D visuals from real-time microphone input of my performance of Chopin’s classical piano solo “Ballade No.1”. I designed the visual elements, rhythm, and color tones according to how I feel when playing the piece. I want to convey a deep but obscure sorrow and create a flashback effect, as if someone were telling an old story with emotion. By feeding the detected frequencies and notes into the shape and color parameters, I synchronized the transformation of the visual elements with my performance. However, because of inaccurate frequency detection and processing delays, the generated visuals do not convey these feelings effectively, and the shapes and transformations need further improvement. Nevertheless, this project is my first step in classical music visualization, a topic I will continue to explore in depth.

Demo

Development and Technical Implementation

1. Conceptual development

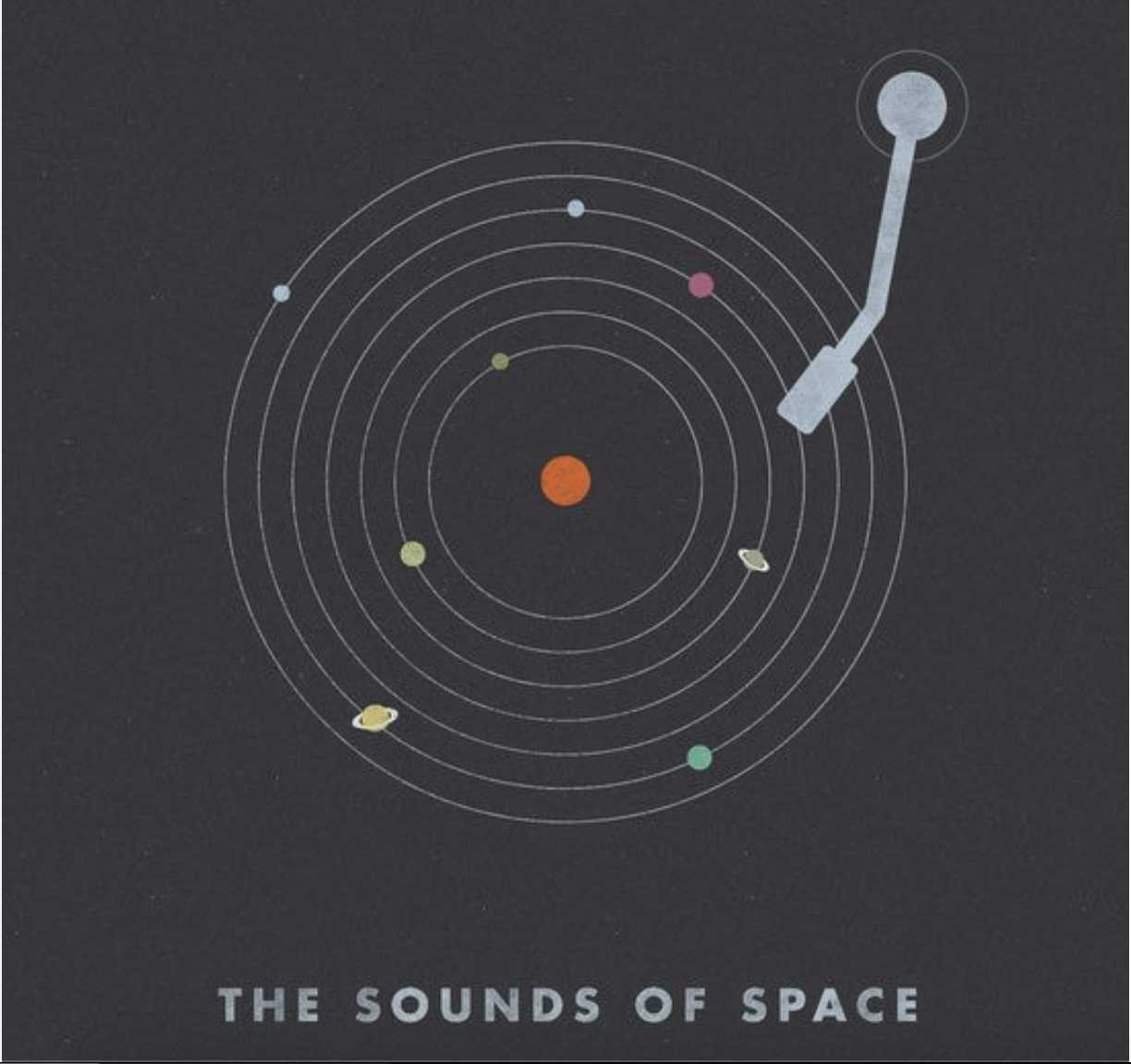

In the Case Study, I was inspired by “Creative Audio Visualizer” on tympanus.net and decided to generate visuals from classical music. As an amateur pianist and a fan of Chopin’s music, I want to visualize Chopin’s four Ballades (narrative piano solos, usually based on narrative poems). These pieces are full of variation and have notes that stand out to form the melodic line, so I hoped to pinpoint these key notes and drive the visual transformations with the melodic line. I chose Ballade No.1 because its melodic line is easier to capture (especially at the beginning). After researching online, I decided to base my visualization on this poster’s idea, because the beginning of Ballade No.1 feels to me like a flashback, with a dark and struggling tone. I wanted to convey the feeling of an old man telling a very old story with tears in his eyes. The space poster, with its stars slowly orbiting the sun, evoked the same feeling for me, as if the universe were telling its long history since the Big Bang.

At first, I only wanted to develop one sketch and use it throughout my 8-minute performance. However, my laptop started screaming after about 20 seconds of my first test, and I had to stop the program for fear of a crash. Also, the note detection model frequently failed from bar 34 onward, where the tempo and the number of chords increase. So I started to brainstorm parts 2, 3, and 4 for the rest of Ballade No.1.

For part 2, I was inspired by a problem I ran into in the W3 Recitation, where I failed to create bubbles that constantly pop up and fade. I fixed that problem using a particle system and generated the following visual:

This floating-bubble effect suits part 2 of the Ballade, as the constant popping mirrors the accelerating tempo and the structure of the music. The music also gave me a feeling of emotional eruption, as if the old man had finally decided to reveal the whole story.

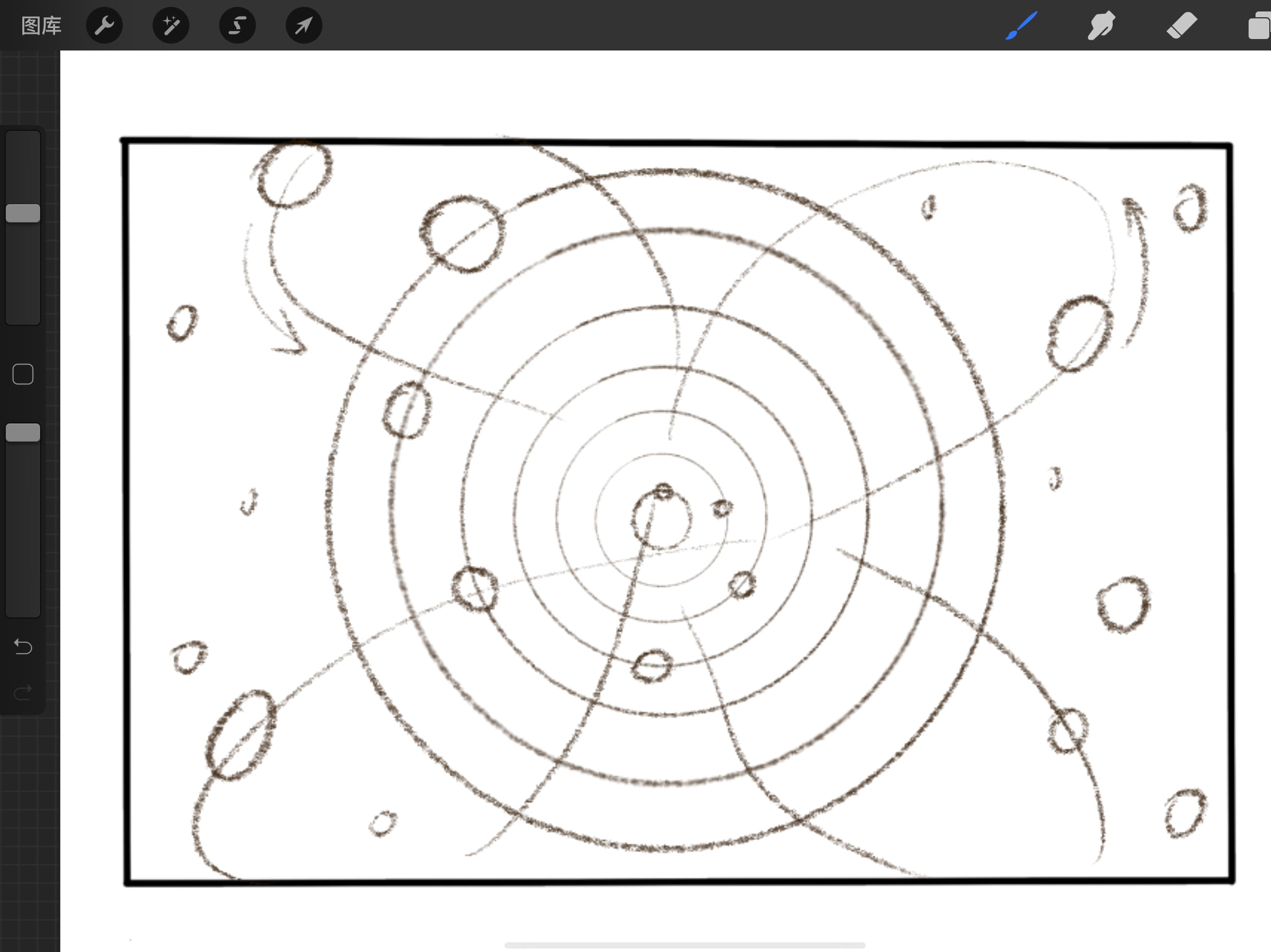

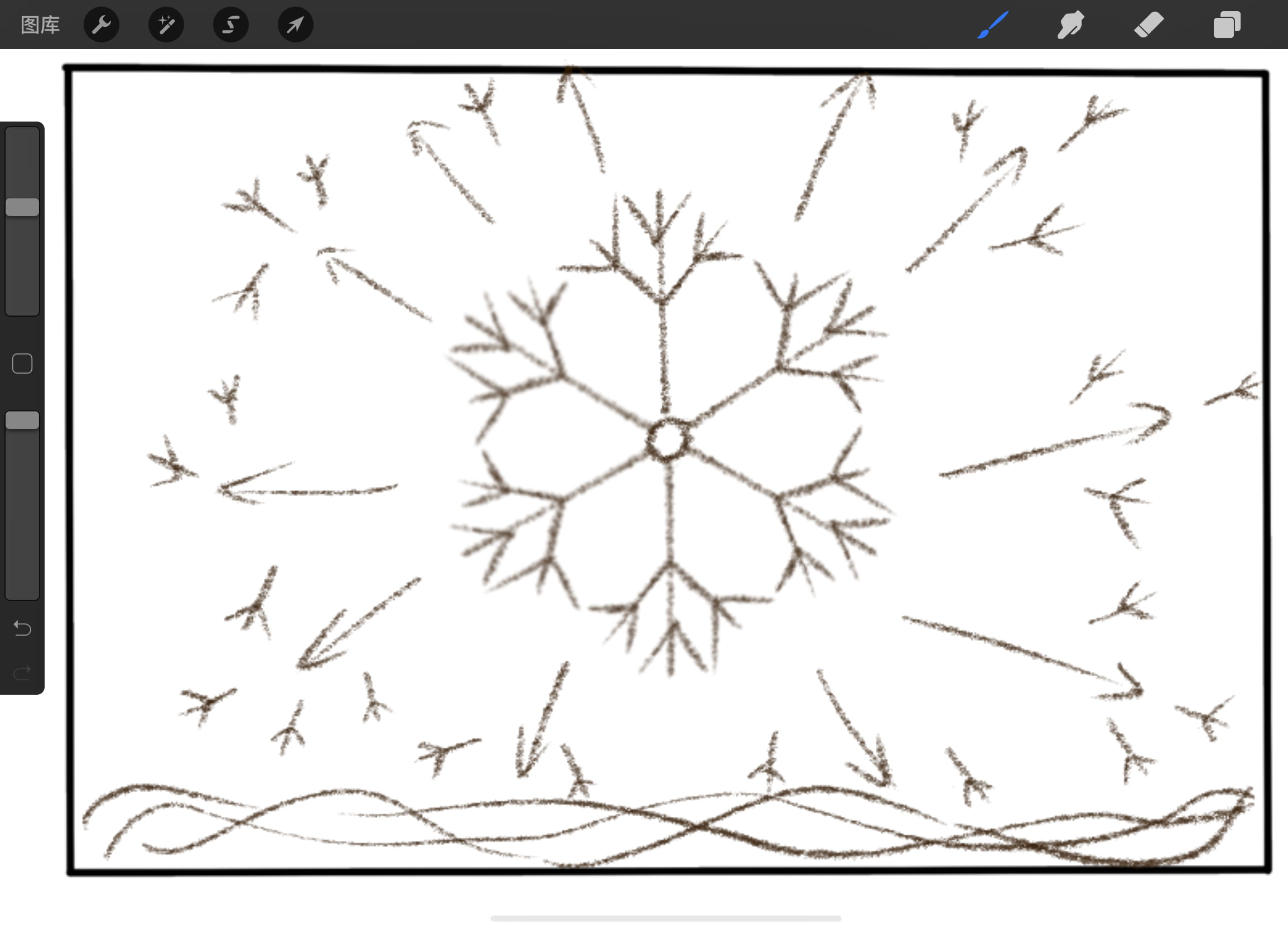

Based on these thoughts, I sketched the following drafts for my part 1 and part 2 visualizations.

Part 1:

Part 2:

2. List of techniques and equipment

Techniques: p5 libraries; video editing; live performance recording.

Equipment: Tascam; laptop; smartphone.

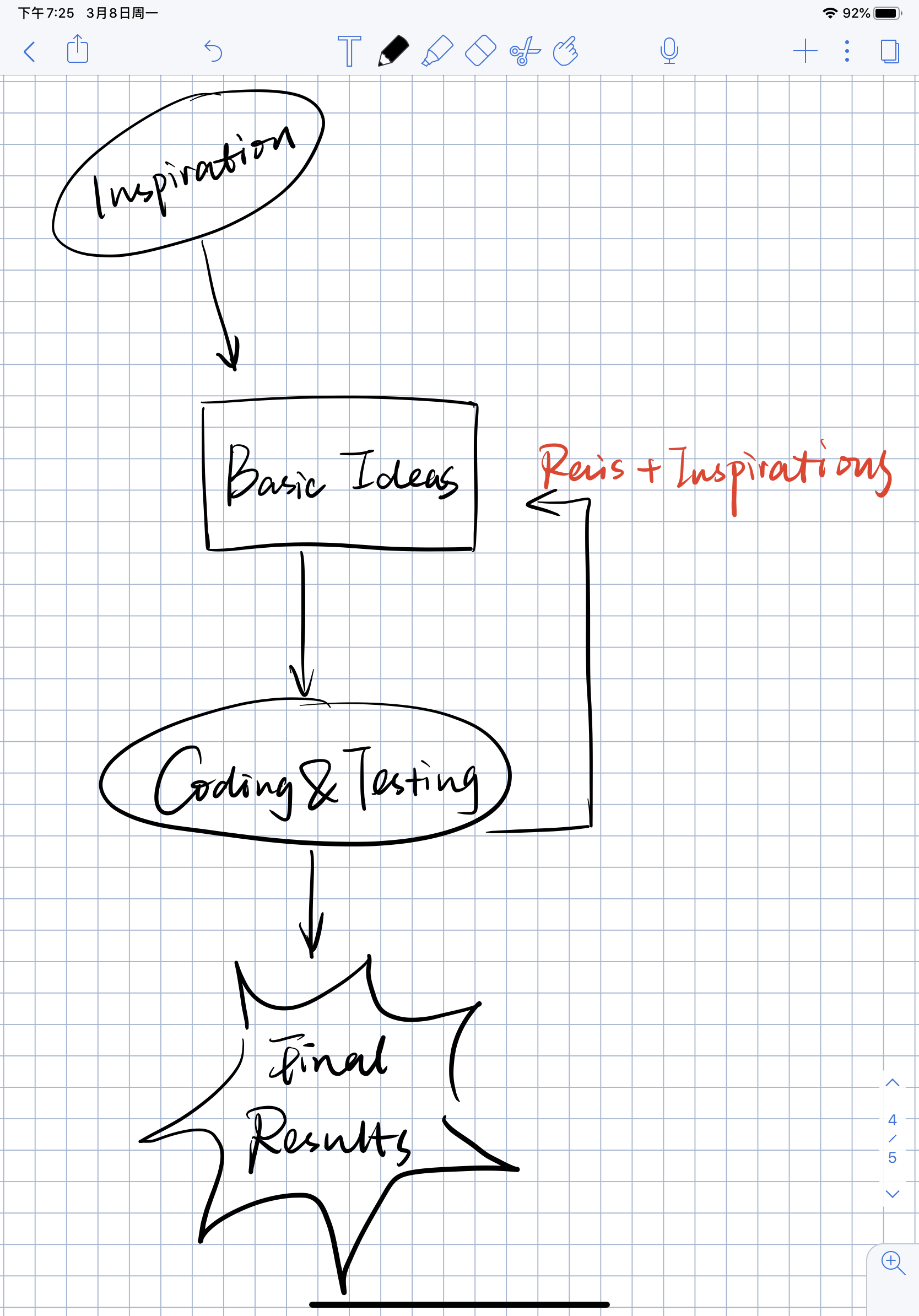

3. Workflow Diagram

4. Coding

Part 1:

Part 2:

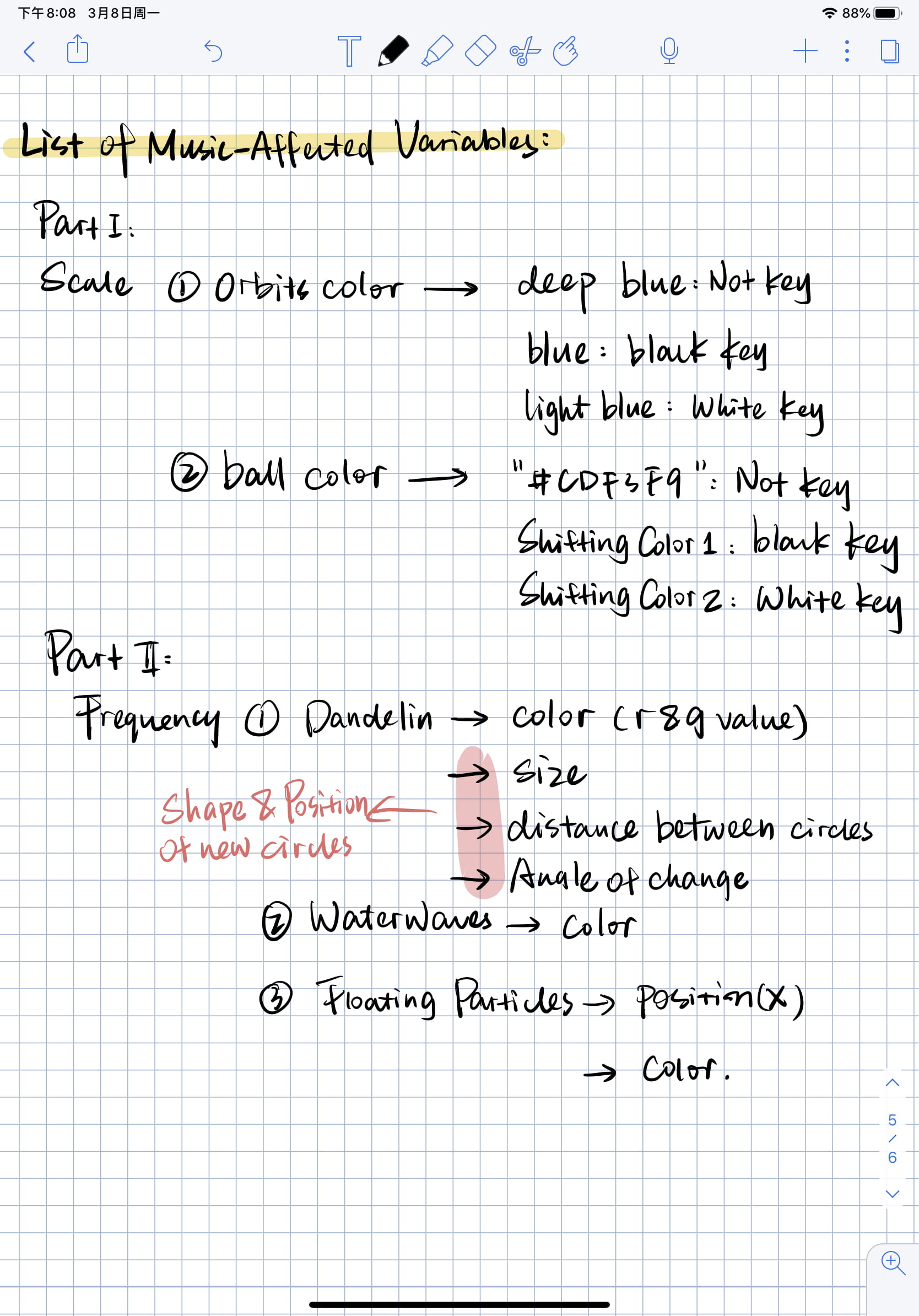

I attached a list of variables affected by the music here:

Failure and Success

(1) Importing the p5 sound library & ml5 model:

At first, I wanted to import the ml5 model into OpenProcessing, but it didn’t allow importing other libraries.

So I switched to the p5.js Web Editor and copied the HTML embed code following the Coding Train tutorial. I failed again because I imported the p5 library twice (the HTML file already included the p5 library).

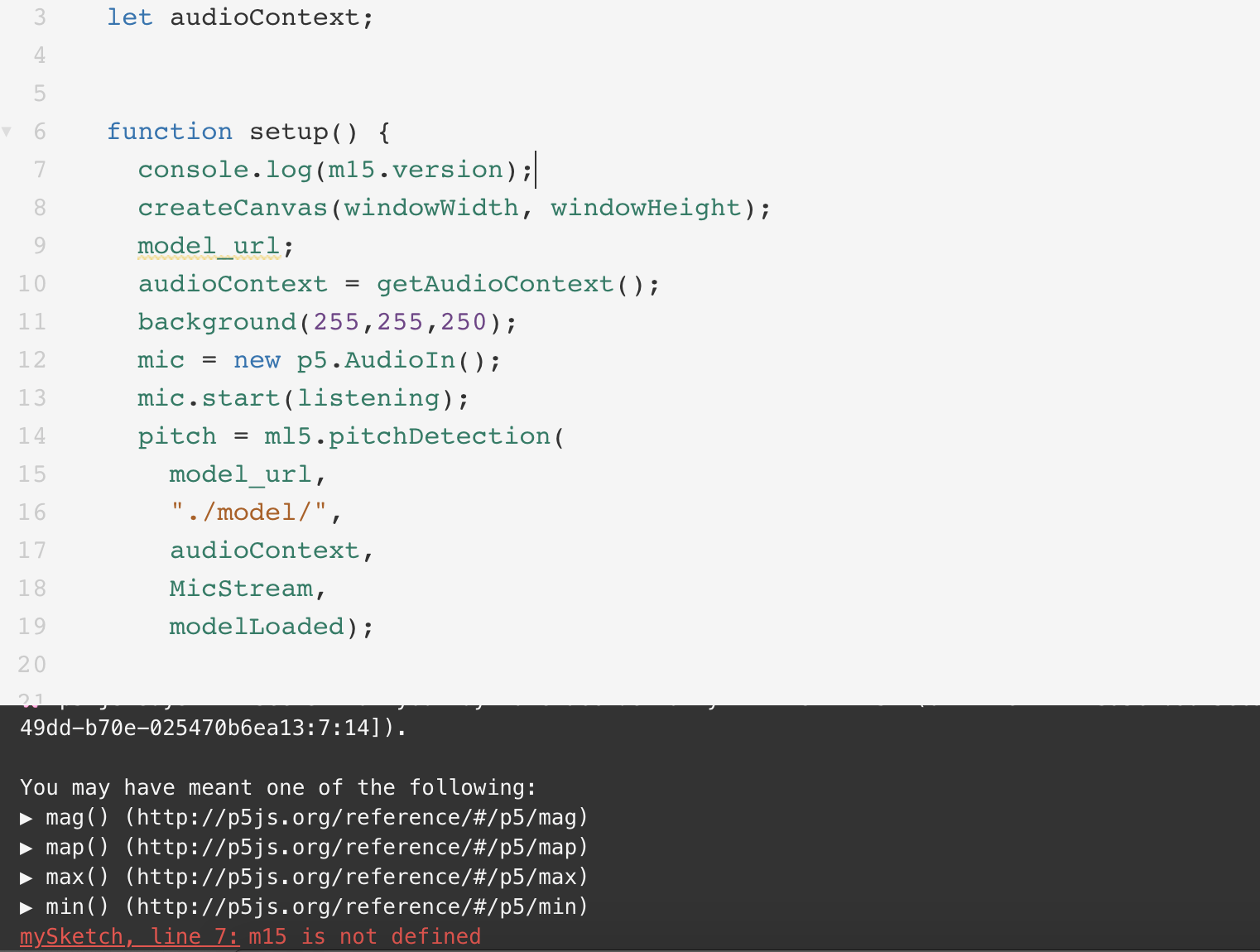

After importing the model, I kept getting this error message:

I was confused because I only had 38 lines of code at that time, and every time I ran the program, the line number in the error changed. I guessed that the error came from ml5’s source code, so I went to the official website and checked the piano pitch detection example. I copied the HTML file as well as the JavaScript code from the example, and the program ran successfully.

(2) 360-degree flowing effect

I tried to create particles floating around the central dandelion by setting this.x and this.y to sin(freq) + r and cos(freq) + r, but for some reason the particles’ positions didn’t change the way I wanted.
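A likely reason is in the arithmetic: sin(freq) + r always stays between r - 1 and r + 1, so adding r to a sine moves a particle by at most a pixel or two. Passing a frequency in Hz straight to sin() also treats it as an angle in radians, so small pitch changes make the result jump around. A point on a circle needs the radius multiplied by cos/sin and then added to the center. The plain-JavaScript sketch below shows this with hypothetical names (`orbitPosition`, `OrbitParticle`, `speedFromFrequency`); tying the orbit speed to the frequency is an assumption about how the effect could be driven, not the project’s code.

```javascript
// Hypothetical helper: point on a circle of radius r around (cx, cy).
// The radius multiplies cos/sin; the center is added afterwards.
function orbitPosition(cx, cy, r, angle) {
  return {
    x: cx + r * Math.cos(angle),
    y: cy + r * Math.sin(angle),
  };
}

// Hypothetical orbiting particle: its angle advances a little every frame,
// so it travels smoothly through the full 360 degrees.
class OrbitParticle {
  constructor(cx, cy, r, startAngle) {
    this.cx = cx;
    this.cy = cy;
    this.r = r;
    this.angle = startAngle;
    this.x = 0;
    this.y = 0;
    this.update(0); // compute the initial position
  }

  // speed is in radians per frame.
  update(speed) {
    this.angle = (this.angle + speed) % (2 * Math.PI);
    const p = orbitPosition(this.cx, this.cy, this.r, this.angle);
    this.x = p.x;
    this.y = p.y;
  }
}

// Assumed mapping: higher notes orbit faster. The default bounds are the
// lowest (A0) and highest (C8) piano frequencies; the output range of
// 0.005 to 0.055 radians per frame is an arbitrary illustrative choice.
function speedFromFrequency(freq, minFreq = 27.5, maxFreq = 4186) {
  const t = (freq - minFreq) / (maxFreq - minFreq);
  const clamped = Math.min(Math.max(t, 0), 1);
  return 0.005 + clamped * 0.05;
}
```

In a p5 sketch, draw() could call `particle.update(speedFromFrequency(freq))` and then `circle(particle.x, particle.y, 4)` for each particle.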

(3) Particles generation

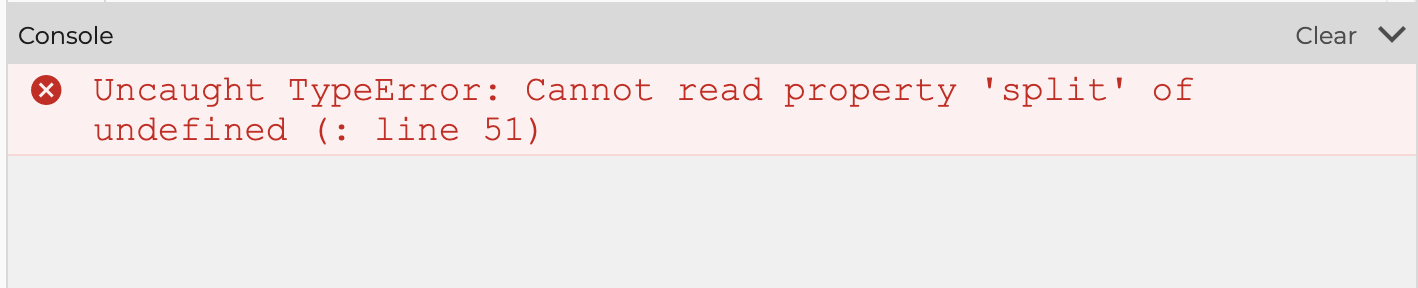

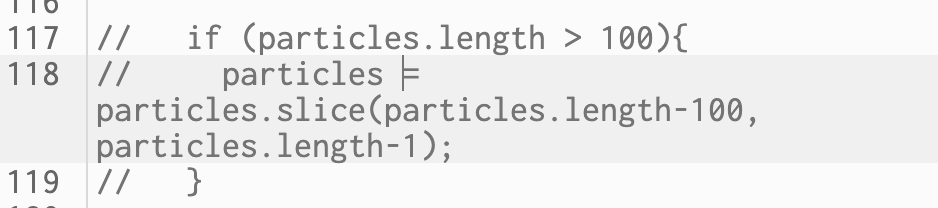

At first, I didn’t use the alpha value when generating the particles, for aesthetic reasons. But since the video tutorial used alpha as the signal for deleting a bubble from the array, I had to find another way to do that. Here are the versions I tried:

But they all led to the following visual:

Finally, I compromised and used the alpha value.
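For reference, a common way to decouple removal from transparency is to give each particle its own lifespan counter and remove it when the counter runs out, iterating the array backwards so that splice() does not skip elements. This is a hedged sketch, not a reconstruction of the versions tried above; `Bubble`, `lifespan`, `isDead`, and `updateBubbles` are hypothetical names.

```javascript
// Hypothetical bubble whose removal depends on a frame counter,
// so its drawn alpha can stay fully opaque.
class Bubble {
  constructor(x, y, lifespan) {
    this.x = x;
    this.y = y;
    this.vy = -1;             // drift upward one pixel per frame
    this.lifespan = lifespan; // frames left to live
  }

  update() {
    this.y += this.vy;
    this.lifespan -= 1;
  }

  isDead() {
    return this.lifespan <= 0;
  }
}

// Update every bubble and drop the dead ones.
// Looping backwards keeps the remaining indices valid after splice().
function updateBubbles(bubbles) {
  for (let i = bubbles.length - 1; i >= 0; i--) {
    bubbles[i].update();
    if (bubbles[i].isDead()) {
      bubbles.splice(i, 1);
    }
  }
  return bubbles;
}
```

In p5, draw() could call `updateBubbles(bubbles)` and then draw each remaining bubble with any fill, including a fully opaque one. Rebuilding the array with `bubbles = bubbles.filter((b) => !b.isDead())` after updating is an equally valid alternative to the backwards loop.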

(4) Frequency & pitch detection

After consulting with Professor Moon, I decided to use the ml5 pitch detection model with p5 for pitch detection and note mapping. While testing and learning this model, I ran into three problems:

1. The model needed time to react to and interpret the detected frequencies, so some notes couldn’t be captured in fast passages.

2. Mixing high and low frequencies (when both hands play together and in chords) resulted in “neutralized” frequencies, even when I stressed some of the notes, likely because the model estimates a single pitch at a time.

3. The highest and lowest keys returned “null”, indicating that the detectable frequency range didn’t cover all 88 keys of the piano.
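For reference, the equal-temperament formula that maps a frequency f (in Hz) to a MIDI note number is 69 + 12 · log2(f / 440), with A4 = 440 Hz as MIDI 69; p5.sound offers a comparable `freqToMidi()`. On a standard 88-key piano the range runs from MIDI 21 (A0, 27.5 Hz) to MIDI 108 (C8, about 4186 Hz), which gives concrete bounds for checking which keys a detector covers. The plain-JavaScript sketch below uses hypothetical helper names (`frequencyToMidi`, `midiToFrequency`, `midiToNoteName`, `isPianoKey`) and a 12-name scale array in the spirit of the credited ml5 example; it is not that example’s code.

```javascript
// Note names for one octave, starting at C (MIDI 60 is C4).
const SCALE = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

// Lowest and highest keys of a standard 88-key piano as MIDI numbers.
const PIANO_LOW_MIDI = 21;   // A0, 27.5 Hz
const PIANO_HIGH_MIDI = 108; // C8, about 4186 Hz

// Equal temperament: A4 = 440 Hz = MIDI 69, 12 semitones per octave.
// Returns null for null, undefined, zero, or negative input,
// i.e. when the detector gave no usable frequency.
function frequencyToMidi(freq) {
  if (!(freq > 0)) return null;
  return Math.round(69 + 12 * Math.log2(freq / 440));
}

// Inverse conversion, useful for checking range bounds.
function midiToFrequency(midi) {
  return 440 * Math.pow(2, (midi - 69) / 12);
}

// Name plus octave number, e.g. 60 -> "C4", 69 -> "A4".
function midiToNoteName(midi) {
  const octave = Math.floor(midi / 12) - 1;
  return SCALE[midi % 12] + octave;
}

// True only for notes that exist on an 88-key piano.
function isPianoKey(midi) {
  return midi !== null && midi >= PIANO_LOW_MIDI && midi <= PIANO_HIGH_MIDI;
}
```

For example, `midiToNoteName(frequencyToMidi(440))` gives `"A4"`.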

I couldn’t solve the first and second problems because they stem from the ml5 model itself. But I managed to solve the third problem (sadly, after the demo was submitted) by changing the mapping range. The position change is now more noticeable (visible in the Coding section above).
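A hedged sketch of mapping a detected note to a screen position over the full piano range follows, with clamping so that out-of-range readings stay on screen and null readings leave the position unchanged. `mapRange` and `clamp` mirror p5’s `map()` and `constrain()` in plain JavaScript; `noteToX` and the MIDI 21 to 108 input range are assumptions for illustration, not the exact range used in the project.

```javascript
// Linear re-mapping, equivalent to p5's map() without clamping.
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Equivalent to p5's constrain().
function clamp(value, lo, hi) {
  return Math.min(Math.max(value, lo), hi);
}

// Hypothetical: map a MIDI note (A0 = 21 to C8 = 108) to an x position
// across a canvas of the given width. If the note is null (no pitch
// detected), keep the previous position instead of jumping.
function noteToX(midi, width, previousX) {
  if (midi === null) return previousX;
  const x = mapRange(midi, 21, 108, 0, width);
  return clamp(x, 0, width);
}
```

Holding the previous position on null is one design choice; resetting to the center of the canvas would be another, at the cost of more visible jumps.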

(5) Running for the full length of the performance exceeded my laptop’s capacity

To solve this problem, I removed unnecessary elements and functions (for example, by reducing the number of waterWaves and spinning balls). I also split the performance into shorter parts, so that no part lasts longer than 2 minutes.

(6) Transition

I intended to create a transition with a mouse brush, but the brush couldn’t cover the orbiting stars and their paths.

5. Fabrication

Part 1

Testing with bright colors:

Testing with orbiting balls:

Creating bubbles that respond to the frequencies:

6. Help Credits

Importing p5 sound library: p5.js reference & Coding Train tutorial: p5.js sound tutorial

Importing the ml5 model: ml5.org & Coding Train tutorial: Ukulele Tuner

Frequency to MIDI notes and scale array: Web Editor: PitchDetection_Piano by ml5

Particle system: Coding Train tutorial: Simple Particle System

Dandelion & waterWaves: Sine, Cosine, Noise and Recursion Workshop by Moon

Reflection

Unlike the previous assignments, I didn’t fully plan out the visual effects and the animation. Instead, I plugged the frequency and scale analysis into different positions until I got an effect I liked. In this process, I spent most of my time adjusting parameters and assigning variables, which I found really interesting, and it made me more familiar with what each line of code does. However, there are drawbacks. I deviated greatly from my original goal of visualizing the melodic line and the feelings I experience in Ballade No.1, especially in the second part. The overall visual effect doesn’t correspond well to the music, and the changes seem abrupt and awkward. The shape design and the smoothing of variations need improvement.
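One way to soften abrupt changes, offered as a hedged sketch rather than something the project already does, is exponential smoothing: each frame, move the displayed value only a fraction of the way toward the newly detected target (the same idea as p5’s `lerp()`). `smoothToward`, `Smoother`, and the default factor of 0.1 are illustrative assumptions.

```javascript
// Exponential smoothing: move `current` a fraction `amount` (0..1)
// of the way toward `target`. Same formula as p5's lerp(current, target, amount).
function smoothToward(current, target, amount) {
  return current + (target - current) * amount;
}

// Hypothetical per-frame smoother for a visual parameter, such as a radius
// driven by the detected pitch. Null readings (no pitch) are ignored, so
// the value holds steady instead of snapping.
class Smoother {
  constructor(initial, amount = 0.1) {
    this.value = initial;
    this.amount = amount;
  }

  update(target) {
    if (target !== null) {
      this.value = smoothToward(this.value, target, this.amount);
    }
    return this.value;
  }
}
```

With a fixed target, the remaining gap shrinks by a factor of (1 - amount) each frame, so with amount = 0.1 about 90% of a jump is covered after roughly 22 frames (0.9^22 ≈ 0.098). A smaller factor gives smoother but slower reactions.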

Future Plan

1. Re-sketch part2: I failed to generate floating particles around the center dandelion (as sketched before coding). The first step will be to solve this problem and revise the basic shape.

2. Adding music-related parameters: I only used the frequency (and its interpreted scale) for synchronizing the visuals in this project. I will integrate more sound variables such as amplitude into the project and make the transformation more diverse.
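Amplitude is commonly measured as the root mean square (RMS) of a block of audio samples; in p5.sound, the `p5.Amplitude` object exposes a volume reading through `getLevel()`. The plain-JavaScript sketch below computes RMS from an array of samples in the range -1 to 1, so the idea can be tested without a microphone. `rmsLevel` and `levelToDiameter`, along with the 4 to 80 pixel size range, are hypothetical choices for illustration.

```javascript
// Root-mean-square level of a block of audio samples in [-1, 1].
// Returns 0 for an empty buffer.
function rmsLevel(samples) {
  if (samples.length === 0) return 0;
  let sumSquares = 0;
  for (const s of samples) {
    sumSquares += s * s;
  }
  return Math.sqrt(sumSquares / samples.length);
}

// Hypothetical: turn a level (clamped to 0..1) into a visual size,
// e.g. a bubble diameter, with a minimum so quiet passages still draw something.
function levelToDiameter(level, minD = 4, maxD = 80) {
  const t = Math.min(Math.max(level, 0), 1);
  return minD + t * (maxD - minD);
}
```

Combining this level with the detected pitch would let loud and soft passages of the same note look different.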

3. Coherence in visual design: In this project, I only managed to finish the first two parts of visualization, and the two parts are not coherent. In the future, as I develop parts 3 & 4, I will consider using a dominant theme that can form a narration throughout the piece.

4. Animated creature attempt: I may try using multiple functions to create an animated creature that can move and respond to the music.

5. 3D object attempt: I may try using 3D objects for the transformations.