EMIN – Pilgrimage

Project Description

The final project created by Ken and me is a NIME (New Interfaces for Musical Expression) music project that incorporates elements of visual art and dance. The primary concept behind the project was to use the ideology of NIME and the ontological composition of music to explore religious music, which is conventionally considered to have little connection with innovative musical forms and experimentation. Through this project, I intend to encourage people to think about both the tension and the peace between experimental music and conventional serious music. The hope is that every form of music can be experimented with and translated into the language of a new era.

This project has four main sources of inspiration, two of which are non-NIME artists. The other two, Steve Reich and Eliane Radigue, inspired the project in different ways. Specifically, I was strongly influenced by Reich’s idea of music as a process, which served as one of our basic guides for how to perform Pilgrimage and how the piece should unfold. Radigue, on the other hand, interests me for her symbolist minimalism, but what impresses me most is the direct feeling her music evokes. The sacred, intangible, peaceful, and hypnotic quality of her work set, to a very large extent, the keynote and underlying tone of our final project.

The other two sources of inspiration are Hans Zimmer’s What Are You Going to Do When You Are Not Saving the World? and Joel Corelitz’s Invitation. I have listened to these two pieces for at least ten hours in total, and I learned a great deal about composition and musical delivery from Zimmer and Corelitz respectively.

Perspective and Context

Tracing the connections between our project and the course materials is straightforward, because the entire development of the project was built on ideas drawn from them.

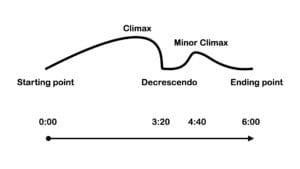

I didn’t mention Michael Nyman and John Cage in the previous section because their ideas gave me little direct inspiration for the performance itself, yet they are the ones who influenced this project most. The composition of Pilgrimage draws heavily on Nyman’s article Towards a Definition of Experimental Music, in which he discusses the concept of time in composition. He puts forward the idea that time in a performance shouldn’t be linear, or at least that we shouldn’t perceive it as linear. This idea guided the first stage of our development, when we were planning music that represented TIME. (We later switched to religious music because we all wanted to explore something genuinely new, rather than something that seems new and abstract but is really a bit of a cliché.)

Even though time did not end up as the main theme of our final project, the concept of time and Nyman’s ideas still exist in the composition. To let the audience perceive the piece as a whole and experience time non-linearly, we included many elements that recur constantly throughout the performance, such as parts of the bass and the harmony. At the same time, we also wanted to convey linear time, so we built in a decrescendo that leads to a passage where only the piano harmony remains. This passage gives the audience the feeling that the music is still moving forward, rather than endlessly revolving around a few components regardless of time. In short, we adopted Nyman’s idea about time in the composition but also deliberately deviated from it slightly, so that non-linear and linear components can be experienced at the same time in our performance.

When I watched Tanaka’s expressive performance, I knew I wanted to bring that kind of stage presence into our final project, and we did. We started thinking about how to impress the audience beyond the music itself, and Ken came up with the idea of combining dance with soft circuits, which is probably the area of NIME he is most familiar with. He planned to wear soft circuits on his arms and control them by waving, and this idea came through clearly in the performance at Elevator. However, a stage presence that is merely impressive is not enough; it should also be logical. As Tanaka writes in the essay, “In our art form, there is a balance between logic and intuition.” The presence should convince the audience that it is logical as well as intuitive, so a clear connection between the gestures and the performance must be formed. As a result, Ken and I spent some time working out postures that would both connect to the concept of Pilgrimage and precisely control the soft circuit.

Development and Technical Implementation

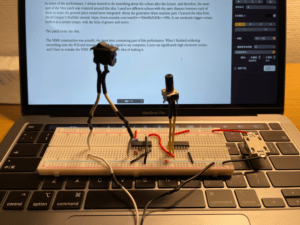

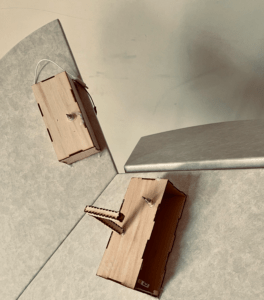

In this section on the construction and development of our project, I would first like to explain why we assembled the NIME instrument on breadboards instead of soldered PCBs. I soldered quite a few PCBs with complicated circuits, but every time I connected a soldered PCB to a speaker or the audio interface, the signal became muddy and was accompanied by a lot of electronic noise. (I really don’t think this was caused by a wiring mistake, because I checked the connections almost a thousand times and built identical circuits on breadboards at the same time.) By contrast, when I tested the circuits wired with brass wires on the breadboard, I was surprised to find that they produced a fairly clear sound with less noise, which I still find strange. To get the best sound, I chose breadboards over PCBs.

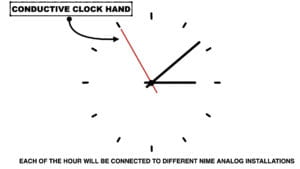

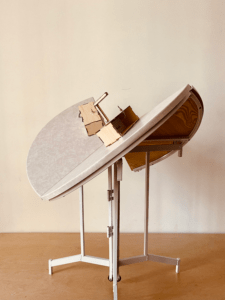

We decided to incorporate a table into the performance at the very first stage of planning. However, after the table arrived from Taobao, we discovered that it would be very hard to modify it into part of the instrument because it was so rigid. We therefore changed the plan and decided to perform on the table, for two reasons: 1. it was convenient to put everything related to the instrument on it, and 2. we thought it would be eye-catching, because the table can be folded in a really creative way.

At the start of construction, I decided that the instrument should create a hypnotic frequency. Its primary components are therefore a pitch-tracking circuit controlled by soft circuits and a pitch-division circuit. I think the sound of the instrument met my expectations. For the processing of the input signal, I used ten different echoes to create the desired effect. In fact, many parts of the Max 8 patch use echoes or similar effects to connect with the concept of Pilgrimage, and I think this attempt to evoke the feeling of religious music succeeded.
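As an illustration of what a pitch-division stage does, here is a minimal Python sketch of a digital octave divider. It is not a model of the actual circuit used here: it assumes a divide-by-two stage that toggles a square wave on every rising zero crossing of the input, as a flip-flop wired to toggle would, and the function names and the 8 kHz sample rate are hypothetical.

```python
import math


def octave_down(samples):
    """Hypothetical divide-by-two stage: toggle a square wave on every
    rising zero crossing of the input, halving its frequency."""
    out = []
    state = 1.0
    prev = samples[0] if samples else 0.0
    for s in samples:
        if prev < 0.0 <= s:  # rising zero crossing of the input
            state = -state   # flip the output, like a toggle flip-flop
        out.append(state)
        prev = s
    return out


def rising_crossings(signal):
    """Count rising zero crossings, a rough proxy for the number of cycles."""
    return sum(1 for a, b in zip(signal, signal[1:]) if a < 0.0 <= b)


if __name__ == "__main__":
    sr = 8000  # assumed sample rate
    tone = [math.sin(2 * math.pi * 220.0 * n / sr) for n in range(sr)]
    divided = octave_down(tone)
    # The divided signal completes roughly half as many cycles.
    print(rising_crossings(tone), rising_crossings(divided))
```

Because the output only changes state once per input cycle, it needs two input cycles to complete one cycle of its own, which is why it sounds an octave lower.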

As discussed above, I chose breadboards over PCBs because of the sound quality. However, this doesn’t mean there was no soldering work. Components such as potentiometers and switches first had to be soldered to their wires individually. To insulate the joints and reduce shorts and interference, I covered every solder joint with heat-shrink tubing. (I even bought all of the tools needed for this project, such as the soldering iron, heat gun, and heat-shrink tubing. As a result, my dorm looks like a tiny fabrication lab, and I can do any construction work right there.)

Documentation:

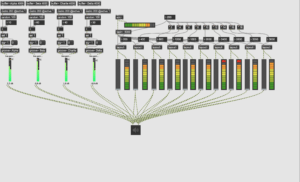

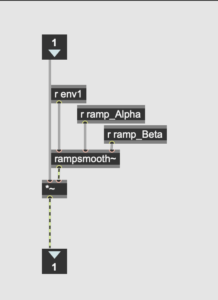

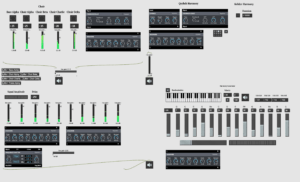

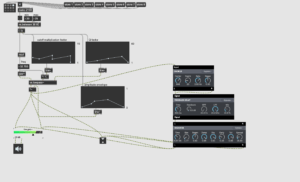

Now let’s turn to building the Max patch. The final patch and all of the effect settings used in the performance look like this.

Documentation:

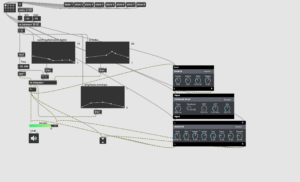

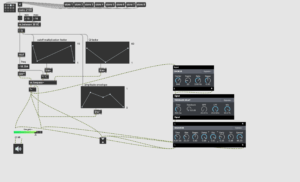

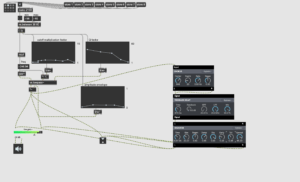

I spent an hour and a half adjusting these effect settings so that everything in the Max 8 patch worked together in a consistent tone. During the performance, I controlled the patch with live.gain~ objects, which let me change the volume of each part separately. (By contrast, a single gain~ object on the output would change all of the volumes together, and ezdac~ switches all of the sound output on or off at once.)
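The difference between several independent faders and one shared fader can be sketched in plain Python. This is an illustration only: it assumes one fader per bpatcher with values in decibels, roughly what a live.gain~ slider displays, followed by a single master fader. The function names are hypothetical, and this is not how Max implements these objects internally.

```python
def db_to_amp(db):
    """Convert a fader value in decibels to a linear gain (0 dB -> 1.0)."""
    return 10 ** (db / 20.0)


def mix(channels, channel_db, master_db=0.0):
    """Scale each channel by its own fader, sum them, then apply one master fader."""
    length = max((len(c) for c in channels), default=0)
    out = [0.0] * length
    for samples, db in zip(channels, channel_db):
        gain = db_to_amp(db)
        for i, s in enumerate(samples):
            out[i] += gain * s
    master = db_to_amp(master_db)
    return [master * s for s in out]


if __name__ == "__main__":
    harmony = [0.5, 0.5, 0.5]
    beats = [1.0, -1.0, 1.0]
    # Pull the beats down by 20 dB without touching the harmony.
    print(mix([harmony, beats], [0.0, -20.0]))
```

Changing one entry of `channel_db` affects only that part, while `master_db` scales everything at once, which mirrors the per-part versus all-together distinction described above.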

The patch contains five bpatchers. (Originally there were six, but I didn’t like the sound of one and deleted it.)

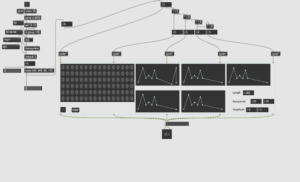

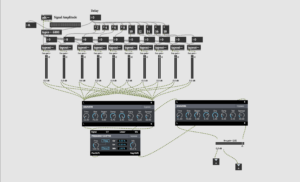

The bpatcher that processes the input signal sits in the bottom-left corner. Its logic is very simple, since it contains only a set of echoes and other effects. However, after building the patch and performing with it, I feel that this simplicity may actually be a strength. This part carries the main theme of our performance, and I was quite satisfied with the result.
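To show what a chain of echoes does to a signal, here is a minimal multi-tap echo in Python. It assumes the ten echoes are evenly spaced copies, each quieter than the last by a fixed factor; the real patch’s delay times and feedback settings are not documented here, so the default decay of 0.6 and the function name are placeholders.

```python
def multi_tap_echo(x, delay_samples, taps=10, decay=0.6):
    """Mix `taps` delayed copies into x; each copy arrives `delay_samples`
    after the previous one and is `decay` times as loud."""
    out = [0.0] * (len(x) + taps * delay_samples)
    for n, s in enumerate(x):
        out[n] += s  # dry signal
        gain = 1.0
        for k in range(1, taps + 1):
            gain *= decay  # each echo is quieter than the last
            out[n + k * delay_samples] += gain * s
    return out


if __name__ == "__main__":
    impulse = [1.0]
    print(multi_tap_echo(impulse, delay_samples=2, taps=3, decay=0.5))
    # -> [1.0, 0.0, 0.5, 0.0, 0.25, 0.0, 0.125]
```

Feeding in a single impulse makes the structure visible: the dry sample followed by progressively quieter repeats at regular intervals.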

For the harmony generator in the bottom-right corner, credit goes to Kparker830, the original creator of the patch. I had long wanted to include a well-generated harmony that changes continuously and sounds very peaceful, but my own attempts never achieved the effect I wanted, so I was delighted to find this harmony generator by Kparker830.

Link: https://www.youtube.com/watch?v=N2LZdXUO_zo

My only change to this patch was to add two ways of controlling the harmony: I can either let the generator pick pitches at random or use the incoming signal value to control the pitch. In the performance, we used the random mode in the first half and the signal-controlled mode in the second half.
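The two control modes can be sketched in Python as follows. This is a hypothetical illustration, not Kparker830’s patch: it assumes the pitches are quantized to a C major pentatonic scale starting at MIDI note 48, and that the incoming control value lies in the range 0 to 1023 (a common sensor range). The scale, ranges, and function names are all assumptions.

```python
import random

# Hypothetical scale: C major pentatonic, as semitone offsets from the root.
PENTATONIC = [0, 2, 4, 7, 9]


def degree_to_midi(index, root=48, scale=PENTATONIC):
    """Map a scale-degree index to a MIDI note, moving up an octave per cycle."""
    octave, degree = divmod(index, len(scale))
    return root + 12 * octave + scale[degree]


def next_pitch(mode, signal_value=0, signal_max=1023, steps=15, rng=random):
    """Choose the next harmony pitch in one of two modes:
    'random' picks any of `steps` scale degrees; 'signal' maps an
    incoming control value (assumed range 0..signal_max) onto them."""
    if mode == "random":
        index = rng.randrange(steps)
    elif mode == "signal":
        clamped = min(max(signal_value, 0), signal_max)
        index = clamped * (steps - 1) // signal_max
    else:
        raise ValueError(f"unknown mode: {mode}")
    return degree_to_midi(index)


if __name__ == "__main__":
    rng = random.Random(0)
    print([next_pitch("random", rng=rng) for _ in range(4)])  # first half
    print([next_pitch("signal", v) for v in (0, 512, 1023)])  # second half
    # second line -> [48, 64, 81]
```

Quantizing both modes to the same scale keeps the random half and the signal-driven half harmonically consistent, which is one plausible way to keep the harmony sounding peaceful.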

However, I felt uncomfortable generating such beautiful music with someone else’s tool. I therefore decided to keep the harmony deliberately faint and to use it only occasionally as background music.

The patch in the top-right corner is also a harmony generator, based on what I learned in the makenote and kslider tutorial. However, I wasn’t satisfied with the initial result because it sounded too simple and varied too little. I was inspired by David Farrell’s way of controlling different notes.

Link: https://www.youtube.com/watch?v=wXlBpC_DLC0

I borrowed his method of using a random dual gate to make the overall music more varied and to create a sense of distinct layers.
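The idea of a random dual gate can be sketched in Python: each incoming note is sent to one of two outputs chosen at random, so a single note stream splits into two layers that can be given different sounds. This is a generic sketch of random routing, not a reconstruction of David Farrell’s patch; the function name and the `p_first` probability parameter are hypothetical.

```python
import random


def random_dual_gate(notes, rng, p_first=0.5):
    """Send each incoming note to outlet 1 or outlet 2 at random, similar in
    spirit to driving a two-outlet gate with a random 1-or-2 control value."""
    outlet1, outlet2 = [], []
    for note in notes:
        if rng.random() < p_first:
            outlet1.append(note)  # e.g. a voice with one timbre or octave
        else:
            outlet2.append(note)  # e.g. a second, contrasting voice
    return outlet1, outlet2


if __name__ == "__main__":
    rng = random.Random(42)
    melody = [60, 62, 64, 67, 69, 72, 74, 76]
    upper, lower = random_dual_gate(melody, rng)
    print("voice 1:", upper)
    print("voice 2:", lower)
```

Every note still sounds exactly once, but which layer it lands in changes unpredictably, which is what makes a simple sequence feel less repetitive.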

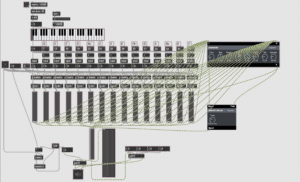

The beat/frequency generator sits next to it. This part uses material from the course (Class 10) to create a layer of background sound that is always present.

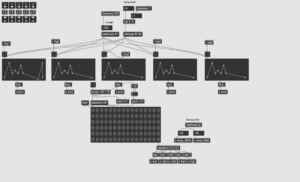

I made eight different beats with different frequencies and effects. I guessed that the bass system at Elevator would be quite powerful, so I made many of the beats low-frequency. This turned out to be a wise move (yes!): the beats coming out of Elevator’s sound system were very strong, and I could literally feel the table, floor, and ceiling vibrating during the performance.
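As a rough illustration of a low-frequency beat, here is a Python sketch of a sine burst that restarts on every beat with a decaying envelope, similar to a simple synthesized kick. The Class 10 material may build its beats differently; the envelope shape, the function name, and the eight (frequency, tempo) pairs below are placeholders, not the settings used in the performance.

```python
import math


def low_beat(freq_hz, bpm, seconds, sr=44100, decay_s=0.25):
    """Hypothetical low-frequency beat: a sine burst at freq_hz, restarted
    on every beat with an exponentially decaying envelope."""
    period = int(sr * 60 / bpm)  # samples between beats
    out = []
    for n in range(int(sr * seconds)):
        t = (n % period) / sr  # time since the last beat
        envelope = math.exp(-t / decay_s)
        out.append(envelope * math.sin(2 * math.pi * freq_hz * t))
    return out


if __name__ == "__main__":
    # Eight hypothetical (frequency, tempo) pairs, weighted toward the low end.
    settings = [(40, 60), (45, 90), (50, 120), (55, 60),
                (60, 90), (80, 120), (110, 60), (220, 90)]
    layers = [low_beat(f, bpm, seconds=2.0, sr=8000) for f, bpm in settings]
    print(len(layers), "beats,", len(layers[0]), "samples each")
```

Restarting the sine at zero phase on each beat avoids a click at the attack, and keeping most frequencies below about 100 Hz is what lets a large bass system make the room vibrate.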

The last bpatcher is the choir machine, which I also used in the previous performance and learned from David Cooper: https://www.youtube.com/watch?v=IYAwtRxZiAY&t=311s.

I used four buffers here to drive four separate machines. One buffer holds a piano note recorded on an electronic piano, used for echo effects. One of the two choir buffers is built from multiple recordings of my own voice, combined and processed in Adobe Audition. The remaining two buffers come from the GarageBand sound library.

I used the choir machine sparingly because I thought it would catch most of the audience’s attention and make them overlook the NIME melody and the other interactive sounds. As a result, I used it only for the introduction and for the climax of the second half of the performance.

All of the sub-patches are loaded into bpatchers and displayed in presentation mode. During the performance, everything was controlled with live.gain~ and the audio interface to keep the input and output levels correct. I think the different parts interacted harmoniously with one another during the performance.

In terms of individual roles, I was in charge of building the Max 8 patch and part of the instrument, while Ken handled the rest of the instrument and the stage presence. To deliver our best, we worked together for a full day on the composition and the visual presentation.

Performance

Most things went well at Elevator: the instrument worked normally and my computer didn’t crash. During the class rehearsal, however, something went wrong with our NIME instrument: a connection came loose, and we had to step aside for a while to fix it.

I think the best parts of the performance were the expressive beats that almost shook the ceiling of Elevator, the NIME melody working as intended, and the kslider harmony between the two halves of the music, which created a real sense of stillness.

My role in the performance was to control the Max patch with the MIDI mix controller and the overall volume with the audio interface, while Ken performed his “contemporary dance” and controlled some of the harmonies. During the performance I was completely focused and very nervous; luckily, there were no major accidents.

Still, there are things we could have done better. For instance, I should look away from the computer screen now and then, perhaps even close my eyes, to immerse myself fully in the performance instead of being so nervous. Ken could also refine his soft-circuit expression, because some of his postures and movements did not actually change the signal value and were therefore meaningless for the sound. Nonetheless, he made everyone at Elevator remember us.

Conclusion

What did I learn from this performance, or from this semester? I asked myself this question as soon as I finished performing. As mentioned before, the intention of the final project presented by Ken and me was to present religious music in a NIME way and to explore the relationship between experimental music and conventional serious music. We wanted to try something new within something old, and what I learned from this process is that music is actually an art form without a fixed form.

It depends less on how we create and present the music and more on selection and integration. Every attempt to create and perform music is an act of selecting musical components and integrating them. In a sense, we are simply choosing one way to deliver the final piece out of infinite possibilities. This is probably the essence of music, and through this performance I gained a clearer understanding of how musical components are selected and integrated.

There is plenty to celebrate after a semester of learning. Ken and I both improved considerably in Max, soldering, and music, and our ability to handle stress grew as well. The biggest success, though, is the performance as a whole. Despite all of the minor mistakes, I would call the performance given by Ken and me, and everyone else’s performance, a huge success.

There are also things that can be improved in the future. The first is soldering. Over the summer vacation, I will try to find a way to solder every component onto PCBs for solid connections. Although the breadboards produced better sound in this performance, they were also more fragile, as the disconnection during the rehearsal showed.

The second thing to improve is probably the stage presence. There may be a better way for Ken to use the soft circuits to control different sounds more stably and clearly, and we will explore this further.

All in all, I still regard this as a generally successful experience and a great, unforgettable memory.

The legendary journey of Pilgrimage ends here, but it also begins here.