Project: Water Battle - Alex and Bruce - Professor Rodolfo Cossovich

Conception and Design:

The project “Water Battle” is a two-player interactive game. Each player holds a water pipe as the controller and sways it, and the water flow on screen follows the direction and movement of the pipe. The players use the water flow to score with a ball. To be more specific, one ball shows up in the center of the Processing sketch and each player has a gate to keep. Their job is to push the ball, using the water flow, into the other player’s gate and, at the same time, to do their best to defend against the other player’s attack.

The final project looks like this. Picture taken by Bruce at the IMA End of Semester Show.

This project was greatly inspired by the interaction project Bubbles’ Cellar, and the idea embedded in it inspired Alex and me a lot. The final project is the last evolution of our original ideas and looks very different from what we first had in mind, as I will describe in the following paragraphs. However, all of our previous ideas share one thing in common: the connection, integration, and combination of real-world activities and the virtual world.

Let me be more specific. The first idea Alex and I came up with was “Dancing Shoes”, with which users generate music through the movement of their shoes. We soon changed direction because we realized that this was only a poor imitation of the NIKE Music Shoes made by Rhizomatiks Design. Furthermore, I thought that this project wasn’t fully aligned with my definition of interaction, whose key factors are simultaneous and continuous interactive activity and unpredictability, or in other words, the ability to give different users different experiences.

We soon came up with a new idea: a Flappy-Bird-like game in which users use their voice to keep the bird from falling or hitting the barriers. The game was inspired by the difficulties Alex had in the choir. He found it really hard to hit the correct pitch and therefore wanted to create a new experience to help music lovers match their pitches. At first, we both thought this was a great idea and decided to make it our final project, but our thinking changed a little after the in-class presentation of our ideas. Flappy Bird is a really over-popularized game, and after sharing our ideas and listening to Professor Rudi’s advice, I felt something was missing: following this direction, we would only make another music game that barely differs from the existing ones. We agreed that this wasn’t something we would like to create for our final project.

The idea evolved again after a discussion with Professor Rudi. We thought about making a Pachinko-like project. It’s a little hard to explain. In general, the Pachinko board would be displayed in a two-dimensional Processing sketch, with several dots showing the pillars inside it. Sensors would be attached to the user’s hand, and the user would make a throwing movement, as if throwing a ball from the top of the sketch toward the bottom, to create a ball in the sketch. Different music would be generated depending on where the ball finally landed. At the time, this was still a very exciting project for Alex and me. However, after serious consideration, we changed our idea again, because I think this project existed only to combine all the ingredients I needed to satisfy my definition of interaction. The combination itself doesn’t make any sense. I mean, why would music be generated when balls fall to the ground? The project lacked coherence.

After this idea, we went in the wrong direction and wanted to create a kind of musical instrument. Users would move the right hand to control the volume and the left hand to control the frequency. However, we soon found out, thanks to Professor Eric, that this is very similar to the theremin, an existing musical instrument. We were not satisfied with recreating something that already exists, and therefore we changed direction again.

However, we still couldn’t break out of this way of thinking, and came up with the idea of “Void Music Shapes”, which I described in detail in the documentation blog post sharing my ideas for the final project. Generally speaking, users would create different shapes in the Processing sketch with a throwing movement, and music would be generated when the shapes hit the ground and bounce against each other. This project was inspired by Mutual input sessions, and we found ourselves, again, imitating something that exists and simply putting all the factors together.

I thought really hard about these previous concepts. I said at the beginning of this blog that all these ideas share one thing in common: the connection, combination, and integration of real-world activities and the digital world. However, the ideas mentioned above also share one missing part: a proper connection between these two things. As I have mentioned, generating music in response to a ball hitting the ground doesn’t make any sense. If I were to create music, I should design an input experience that makes users feel that they are really creating music. However, the original input method, the act of throwing, comes from the logic of game creation rather than music expression. This is the missing part. They are two different matters, and it doesn’t seem reasonable to force the act of throwing to generate music.

Therefore, Alex and I focused on game creation. Based on this point, I thought further and came up with the prototype idea of “Water Battle”. Initially, what I had in mind was a game where players score with the ball by throwing tiny balls at it. We worked on this idea from then on, and the prototype was completed before the user test.

At the user test, most players thought this was an interesting game, but the data used in the project was still unreliable, and as a result the interaction was slow or incorrect. We didn’t think of altering the game at that time, only some time later. Honestly speaking, the way we arrived at our final project may be a little dramatic: a mistake was made during testing, and the earlier frames of all the tiny balls were left on the screen. We suddenly felt that this looked like water flow! All we needed to do next was find a proper way to create the input experience. The solution we thought of was to use a water pipe, so that users feel the water is (really) generated by the movement of the pipe, and we found this extremely effective.

This was the (very long and complicated) process of coming up with the idea and design of our final project.

Fabrication and Production:

The production process started with the prototype idea of “Water Battle”. Since we wanted users to feel they were really throwing balls into the computer, we needed something that could detect movement, so the accelerometer became our first choice. However, I initially used a 3-axis digital accelerometer, and the data it sent was unstable and produced unreliable results.
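One common way to calm a jittery sensor stream like the one described above is an exponential moving average. The sketch below is only an illustration in plain Java and is not taken from the project code: the class name `SmoothedReading` and the smoothing factor `alpha` are hypothetical, and the right value of `alpha` would have to be found by trial.

```java
// Hypothetical helper for illustration; not part of the project code.
// Exponential moving average: each new reading only nudges the stored value.
final class SmoothedReading {
    private final double alpha;   // 0 < alpha <= 1; smaller = smoother but slower
    private double value;
    private boolean primed = false;

    SmoothedReading(double alpha) {
        if (alpha <= 0 || alpha > 1) {
            throw new IllegalArgumentException("alpha must be in (0, 1]");
        }
        this.alpha = alpha;
    }

    // Feed one raw reading and get the smoothed value back.
    double update(double raw) {
        if (!primed) {
            value = raw;          // the first reading is taken as-is
            primed = true;
        } else {
            value = alpha * raw + (1 - alpha) * value;
        }
        return value;
    }
}
```

With `alpha` set to 0.5, feeding 10 and then 20 gives 10 and then 15, so a sudden jump in the raw data is only half followed on each step.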

By the way, I want to thank one IMA fellow here (so sorry that I don’t know his name), because he greatly helped us figure out how to send the modified data from Arduino to Processing.

Thanks to the hard work of Alex and Professor Rudi, we found the correct type of sensor. To make the data more stable and more practical to use, we decided to read the data from only one horizontal level instead of three. This caused trouble during the presentation and the IMA show: many players didn’t know how to control the water pipe because they couldn’t find the correct horizontal level. While testing the game, we also found that the sensor takes a short time to send stable signals, so we added an instruction part before the game so that players can continue with the game once they have stabilized their nozzles.
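The project handles the warm-up time with an instruction screen and a countdown. As an alternative illustration only, the check "has the sensor settled?" could also be made from the data itself, by waiting until the last few readings stay close together. The plain-Java sketch below is hypothetical: the class name `StabilityGate`, the window size, and the tolerance are all assumptions, and it ignores the jump a heading angle makes when it wraps around at 0/360 degrees.

```java
// Hypothetical helper for illustration; not part of the project code.
// Reports "stable" once the most recent readings all lie within a tolerance.
final class StabilityGate {
    private final double[] window;   // the most recent readings
    private final double tolerance;  // largest allowed spread, in degrees
    private int next = 0;            // where the next reading is stored
    private int filled = 0;          // how many slots hold real readings

    StabilityGate(int windowSize, double toleranceDegrees) {
        if (windowSize < 1) {
            throw new IllegalArgumentException("windowSize must be at least 1");
        }
        this.window = new double[windowSize];
        this.tolerance = toleranceDegrees;
    }

    // Feed one reading; returns true when the window is full and its
    // spread (max - min) is within the tolerance.
    boolean update(double reading) {
        window[next] = reading;
        next = (next + 1) % window.length;
        if (filled < window.length) {
            filled++;
        }
        if (filled < window.length) {
            return false;            // not enough readings yet
        }
        double min = window[0];
        double max = window[0];
        for (double v : window) {
            min = Math.min(min, v);
            max = Math.max(max, v);
        }
        return (max - min) <= tolerance;
    }
}
```

A game loop could call `update()` once per frame with the latest heading and start the countdown only after it returns true, instead of relying on the player to judge when the nozzle is steady.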

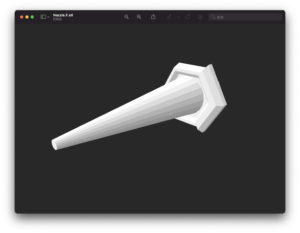

To make the experience more realistic, we decided to 3D print a nozzle for the water pipe, and the final interface looks great. During assembly, we hid the sensors inside the water pipe, so from the outside it looks just like an ordinary water pipe.

To achieve a better presentation and a more immersive experience for users, we used a projector to project the game screen onto the ground (as shown in the picture at the beginning).

Conclusion:

The project “Water Battle” intends to create a new experience that connects the real world with the digital, virtual world, built on the similarities between the real-world behavior and the virtual response. We would say this kind of experience is like a combination of VR and AR. To use VR or AR, you need a helmet or a device with lidar, and the experience is limited because only a few senses are involved. What we intend to create, however, is an experience that lets users immerse themselves and feel the connection. Everything is carried out in the real world, and the virtual Processing sketch just adds new possibilities to it. It allows users to form a simultaneous and continuous interaction. Also, because this is a game, the result is not static and depends on the different behaviors of the two users. Last, and probably most important, it satisfies the final part of my definition of interaction: it builds a proper connection between the input and the output, so that the two users and the game coexist.

The project can still be improved. The correct horizontal level should be highlighted so that players get a clearer picture of what they are doing, and, thanks to advice from Siyuan, music should be added to make the experience more immersive. Sincerely speaking, it has been a great pleasure to work with Alex, and thanks to Professor Rudi, Oliver, and Eric, this project has been, I think, a success.

Technical Documentation:

Arduino Code Here:

#include "DFRobot_BNO055.h"
#include "Wire.h"

typedef DFRobot_BNO055_IIC BNO;

// sensor on the I2C bus at address 0x28
BNO bno(&Wire, 0x28);

// show last sensor operate status
void printLastOperateStatus(BNO::eStatus_t eStatus)
{
  switch (eStatus) {
    case BNO::eStatusOK: Serial.println("everything ok"); break;
    case BNO::eStatusErr: Serial.println("unknown error"); break;
    case BNO::eStatusErrDeviceNotDetect: Serial.println("device not detected"); break;
    case BNO::eStatusErrDeviceReadyTimeOut: Serial.println("device ready time out"); break;
    case BNO::eStatusErrDeviceStatus: Serial.println("device internal status error"); break;
    default: Serial.println("unknown status"); break;
  }
}

void setup()
{
  Serial.begin(9600);
  bno.reset();
  // keep retrying every 2 seconds until the sensor starts
  while (bno.begin() != BNO::eStatusOK) {
    Serial.println("bno begin failed");
    printLastOperateStatus(bno.lastOperateStatus);
    delay(2000);
  }
  Serial.println("bno begin success");
}

void loop()
{
  // read the Euler angles and send them as one line: pitch,roll,head
  BNO::sEulAnalog_t sEul;
  sEul = bno.getEul();
  Serial.print(sEul.pitch, 3);
  Serial.print(",");
  Serial.print(sEul.roll, 3);
  Serial.print(",");
  Serial.print(sEul.head, 3);
  Serial.println(" ");
  delay(80);
}

Processing Code Here:

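// IMA NYU Shanghai
// Interaction Lab
// For receiving multiple values from Arduino to Processing

/*
 * Based on the readStringUntil() example by Tom Igoe
 * https://processing.org/reference/libraries/serial/Serial_readStringUntil_.html
 */

import processing.serial.*;

// one serial port per player
String myString = null;
Serial myPort;
String myString2 = null;
Serial myPort2;

int NUM_OF_VALUES = 3;   // each line holds pitch, roll, head
int[] sensorValues;      // latest values from player 1
int[] sensorValues2;     // latest values from player 2

// positions of the water drops of player 1 and player 2
float []x1=new float[100000];
float []y1=new float[100000];
float []x2=new float[100000];
float []y2=new float[100000];

// only reset in keyPressed(), not read anywhere else in this sketch
float previousvalues0=0;
float previousvalues2=0;
float previousvalues0vy=0;
float previousvalues2vy=0;

float vx=10;                       // horizontal speed of every drop
float vy1[]=new float[100000];     // vertical speed of each drop of player 1
float vy2[]=new float[100000];     // vertical speed of each drop of player 2
float vxbig=0;                     // velocity of the big ball
float vybig=0;
int i1=0;                          // number of drops created so far, per player
int i2=0;
int R=100;                         // size of the big ball, also used as hit distance
float xbig=-200;                   // the big ball starts off screen
float ybig=-200;
int socre1=0;                      // goals counted at the left gate
int socre2=0;                      // goals counted at the right gate
int test1=1;                       // flags that allow a goal to be counted
int test2=1;
int k=300;                         // frame counter used for the countdown
int a=1;                           // 1 = show the instruction text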

void setup() {
  fullScreen();
  background(0);
  setupSerial();
  setupSerial2();
  noStroke();
}

void keyPressed() {
  previousvalues0=0;
  previousvalues2=0;
  previousvalues0vy=0;
  previousvalues2vy=0;
  vx=10;
  vxbig=0;
  vybig=0;
  i1=0;
  i2=0;
  R=100;
  xbig=width/2;
  ybig=height/2;
  socre1=0;
  socre2=0;
  test1=1;
  test2=1;
  k=0;
  a=0;
}

void draw() {
  updateSerial();
  updateSerial2();
  k++;
  println("1: ");
  printArray(sensorValues);
  println("2: ");
  printArray(sensorValues2);
  background(255);

  // the two gates
  fill(255, 0, 0);
  rect(0, height/2-120, 20, 250);
  rect(width, height/2-120, -20, 250);

  // instruction shown until a key is pressed
  if (a==1) {
    textSize(50);
    fill(255, 0, 0);
    text("TRY TO STABILIZE YOUR NOZZLE!", width/2-380, height/2);
  }

  // countdown, counted in frames after a key press
  if (k<60) {
    textSize(100);
    fill(255, 0, 0);
    text("3", width/2-30, height/2);
  }
  if (60<=k&&k<120) {
    textSize(100);
    fill(255, 0, 0);
    text("2", width/2-30, height/2);
  }
  if (120<=k&&k<180) {
    textSize(100);
    fill(255, 0, 0);
    text("1", width/2-30, height/2);
  }
  if (180<=k&&k<300) {
    textSize(100);
    fill(255, 0, 0);
    text("START!", width/2-165, height/2);
  }

  if (k>=300) {
    // every frame, each player emits one new drop from the middle of their side;
    // the third sensor value sets the drop's vertical speed
    x1[i1]=0;
    y1[i1]=height/2;
    vy1[i1]=map(sensorValues[2], 50, 250, -20, 20);
    i1=i1+1;
    x2[i2]=width;
    y2[i2]=height/2;
    vy2[i2]=map(sensorValues2[2], 110, 310, 20, -20);
    i2=i2+1;

    // drops of player 1 move to the right
    for (int m=0; m<i1; m++) {
      if (x1[m]>=-100) {
        x1[m]=x1[m]+vx;
        y1[m]=y1[m]+vy1[m];
        if (dist(x1[m], y1[m], xbig, ybig)<R) {
          // the drop hits the ball: remove the drop and push the ball
          x1[m]=-200;
          vxbig=vxbig+vx/10;
          vybig=vybig+vy1[m]/10;
          // keep the ball speed within limits
          vxbig=constrain(vxbig, -30, 50);
          vybig=constrain(vybig, -30, 30);
        }
        fill(0, 255, 255);
        circle(x1[m], y1[m], 20);
      }
    }

    // drops of player 2 move to the left
    for (int n=0; n<i2; n++) {
      if (x2[n]<=width+100) {
        x2[n]=x2[n]-vx;
        y2[n]=y2[n]+vy2[n];
        if (dist(x2[n], y2[n], xbig, ybig)<R) {
          x2[n]=width+200;
          vxbig=vxbig-vx/10;
          // use the vertical speed of player 2's own drop
          vybig=vybig+vy2[n]/10;
          vxbig=constrain(vxbig, -30, 50);
          vybig=constrain(vybig, -30, 30);
        }
        fill(0, 0, 255);
        circle(x2[n], y2[n], 20);
      }
    }

    // bounce the ball off the screen edges
    if (xbig<0||xbig>width) {
      vxbig=-1*vxbig;
    }
    if (ybig<0||ybig>height) {
      vybig=-1*vybig;
    }
    xbig=xbig+vxbig;
    ybig=ybig+vybig;
    fill(150, 200, 0);
    circle(xbig, ybig, R);

    // goal at the left gate (player 1's side)
    if ( dist(xbig, ybig, 0, height/2)<2*R&&test1==1 ) {
      background(0);
      socre1++;
      test1=0;
      xbig=width/2;
      ybig=height/2;
    }
    if ( dist(xbig, ybig, 0, height/2)<R ) {
      test1=1;
    }

    // goal at the right gate (player 2's side)
    if ( dist(xbig, ybig, width, height/2)<2*R&&test2==1 ) {
      background(0);
      socre2++;
      test2=0;
      xbig=width/2;
      ybig=height/2;
    }
    if ( dist(xbig, ybig, width, height/2)<R ) {
      test2=1;
    }

    textSize(32);
    fill(255, 0, 0);
    text("Player1", width/2-320, 60);
    text("Vs", width/2, 60);
    text("Player2", width/2+240, 60);

    // the game ends at the first goal; socre1 counts goals at
    // player 1's gate, so a higher socre1 means player 2 wins
    if (socre1>0||socre2>0) {
      background(255);
      //rotate(90);
      textSize(60);
      text("CONGRATULATIONS!", width/2-300, height/2);
      if (socre1>socre2) {
        text("WINNER:PLAYER 2", width/2-260, height/2+120);
      } else {
        text("WINNER:PLAYER 1", width/2-260, height/2+120);
      }
    }
  }
}

void setupSerial() {
  printArray(Serial.list());
  // the port index depends on the computer; check the printed list
  myPort = new Serial(this, Serial.list()[3], 9600);
  myPort.clear();
  // throw out the first reading, in case it starts in the middle of a line
  myString = myPort.readStringUntil( 10 );  // 10 = linefeed in ASCII
  myString = null;
  sensorValues = new int[NUM_OF_VALUES];
}

void setupSerial2() {
  printArray(Serial.list());
  myPort2 = new Serial(this, Serial.list()[ 4], 9600);
  myPort2.clear();
  myString2 = myPort2.readStringUntil( 10 );
  myString2 = null;
  sensorValues2 = new int[NUM_OF_VALUES];
}

void updateSerial() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil( 10 );
    if (myString != null) {
      // split the line at the commas and keep it only if it has 3 values
      String[] serialInArray = split(trim(myString), ",");
      if (serialInArray.length == NUM_OF_VALUES) {
        for (int i=0; i<serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}

void updateSerial2() {
  while (myPort2.available() > 0) {
    myString2 = myPort2.readStringUntil( 10 );
    if (myString2 != null) {
      String[] serialInArray2 = split(trim(myString2), ",");
      if (serialInArray2.length == NUM_OF_VALUES) {
        for (int i=0; i<serialInArray2.length; i++) {
          sensorValues2[i] = int(serialInArray2[i]);
        }
      }
    }
  }
}

Sorry, but I had to do this the stupid way because this website crashes every time I paste the HTML.
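For readers who want to try the data path without the hardware: each line the Arduino sends has the form pitch,roll,head, and the Processing sketch splits it, converts the pieces to whole numbers, and maps the third value to a vertical speed. A minimal plain-Java sketch of those two steps might look like the following. The class and method names (`SensorLine`, `parse`, `map`) are made up for illustration, and dropping the decimals with a cast is an assumption of this sketch, not a description of how Processing's own `int()` works.

```java
// Hypothetical helper for illustration; not part of the project code.
final class SensorLine {

    // Parse one serial line such as "12.345,-3.700,180.062 " into whole numbers.
    // Returns null when the line does not hold exactly `expected` numeric fields.
    static int[] parse(String line, int expected) {
        String[] parts = line.trim().split(",");
        if (parts.length != expected) {
            return null;
        }
        int[] values = new int[expected];
        try {
            for (int i = 0; i < expected; i++) {
                // The cast drops the decimals (truncates toward zero).
                values[i] = (int) Double.parseDouble(parts[i].trim());
            }
        } catch (NumberFormatException e) {
            return null; // a field was not a number
        }
        return values;
    }

    // Linear re-mapping of value from [inLow, inHigh] to [outLow, outHigh].
    static float map(float value, float inLow, float inHigh,
                     float outLow, float outHigh) {
        return outLow + (outHigh - outLow) * ((value - inLow) / (inHigh - inLow));
    }
}
```

For example, `SensorLine.parse("12.345,-3.700,180.062 ", 3)` yields the three whole numbers 12, -3 and 180, and `SensorLine.map(150f, 50f, 250f, -20f, 20f)` lands in the middle of the output range, which corresponds to a drop that flies straight across. A status line such as "bno begin success" has no commas, so `parse` returns null and the line is skipped.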
