Touch Me Not: A Melodic Monster

In this project, I fused technology and art to craft an immersive experience. Using NeoPixels for LED visuals, real-time music analysis, and user interaction, I built a suspended, monster-like creature that engages users at eye level. Triggered by touch and sound, the installation aims to raise awareness about mental health.

In this project, I fused, technology, and art to craft an immersive experience. Utilizing Neopixels for LED visuals, real-time music analysis, and user interaction, the suspended monster-like creature engages users at eye level. This installation, triggered by touch and sound, aims to raise awareness about mental health.

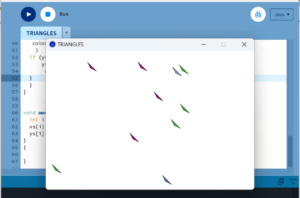

The project plan involves audio signal processing research, Arduino prototyping, 3D modeling, and a Processing script for real-time visuals. Emphasizing engagement and personalization, the design prioritizes an intuitive interface and responsive interaction for a visually appealing yet somewhat disturbing encounter.
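To make the "music drives the lights, touch changes the mood" idea concrete, here is a minimal sketch in plain C++. It is not the code used in this project. It assumes audio arrives as blocks of float samples in [-1, 1], uses RMS loudness as a stand-in for the real-time music analysis, and treats `touched` as a hypothetical flag from the touch sensors. The blue/red color scheme and the names `rmsLevel`, `levelToBrightness`, and `frameFor` are illustrative choices only.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Illustrative sketch only, not the installation's actual code.
// One LED color as 8-bit red, green, blue channels.
struct Rgb {
    std::uint8_t r, g, b;
};

// Root-mean-square level of a block of samples (assumed range [-1, 1]).
// RMS is used here as a simple loudness measure; returns 0 for an empty block.
double rmsLevel(const std::vector<float>& samples) {
    if (samples.empty()) return 0.0;
    double sumSquares = 0.0;
    for (float s : samples) sumSquares += static_cast<double>(s) * s;
    return std::sqrt(sumSquares / samples.size());
}

// Map a level in [0, 1] to an 8-bit brightness, clamping out-of-range input.
std::uint8_t levelToBrightness(double level) {
    if (level < 0.0) level = 0.0;
    if (level > 1.0) level = 1.0;
    return static_cast<std::uint8_t>(std::lround(level * 255.0));
}

// Build one frame for a strip of numPixels LEDs. Brightness follows the music;
// the hypothetical `touched` flag switches the color from calm blue to red.
std::vector<Rgb> frameFor(const std::vector<float>& samples, bool touched,
                          std::size_t numPixels) {
    const std::uint8_t v = levelToBrightness(rmsLevel(samples));
    const Rgb color = touched ? Rgb{v, 0, 0} : Rgb{0, 0, v};
    return std::vector<Rgb>(numPixels, color);
}
```

On an actual board, each computed color would then be written to the strip, for example with the Adafruit NeoPixel library's `setPixelColor(n, r, g, b)` followed by `show()`. Separating the mapping logic from the hardware calls like this makes it easy to tune the response on a laptop before uploading.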

I cut cardboard into three pieces and glued them together to form the rectangular body of the monster's mouth.

Afterward, I soldered the touch sensors into the ears so that they act like earrings. Following that, I laser-cut a box to house the entire installation. To improve the aesthetics, I hid the wires by wrapping them in black cloth and painted the inside of the box for a clean, finished look.

Afterward, I sautered the touch sensors into the ears to give them an earring-like functionality.Following that, I laser cut a box to house the entire installation. To enhance the aesthetics, I concealed the wires by wrapping them with a black cloth and painted the inside of the box for a visually appealing finish.

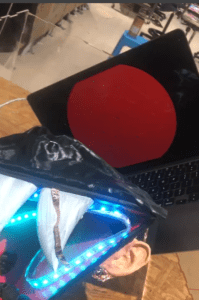

All good things come to an end, and this project is no different. Here is a picture of the project dismantled, with the items returned.

Thank you to my professor and the LAs for all the support and guidance.

Thank you to my professor and the LAs for all the support and guidance.