The MusEDLab and Soundfly just launched Theory For Producers, an interactive music theory course. The centerpiece of the interactive component is a MusEDLab tool called the aQWERTYon. You can try it by clicking the image below.

In this post, I’ll talk about why and how we developed the aQWERTYon.

One of our core design principles is to work within our users’ real-world technological limitations. We build tools in the browser so they’ll be platform-independent and accessible anywhere there’s internet access (and where there isn’t internet access, we’ve developed the “MusEDLab in a box”). We want to find out what musical possibilities there are in a typical computer with no additional software or hardware. That question led us to investigate ways of turning the standard QWERTY keyboard into a beginner-friendly instrument. We were inspired in part by GarageBand’s Musical Typing feature.

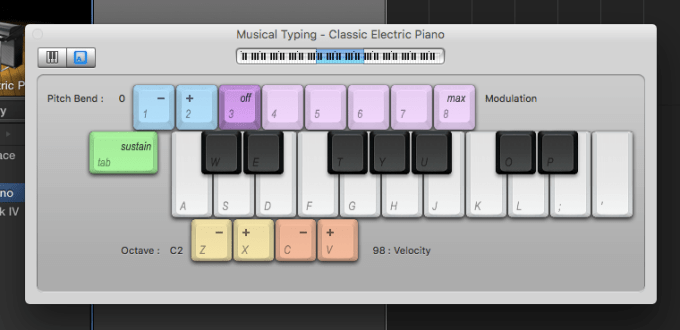

If you don’t have a MIDI controller, Apple thoughtfully made it possible for you to use your computer keyboard to play GarageBand’s many software instruments. You get an octave and a half of piano, plus other useful controls: pitch bend, modulation, sustain, octave shifting and simple velocity control. Many DAWs offer something similar, but Apple’s system is the most sophisticated I’ve seen.

Handy though it is, Musical Typing has some problems as a user interface. The biggest one is the poor fit between the piano keyboard layout and the grid of computer keys. Typing the letter A plays the note C. The rest of that row is the white keys, and the one above it is the black keys. You can play the chromatic scale by alternating A row, Q row, A row, Q row. That basic pattern is easy enough to figure out. However, you quickly get into trouble, because there’s no black key between E and F. The QWERTY keyboard gives no visual reminder of that fact, so you just have to remember it. Unfortunately, the “missing” black key happens to be the letter R, which is GarageBand’s keyboard shortcut for recording. So what inevitably happens is that you’re hunting for E-flat or F-sharp and you accidentally start recording over whatever you were doing. I’ve been using the program for years and still do this routinely.
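
To make the mismatch concrete, here is a minimal Python sketch of the mapping described above. It is reconstructed from the description in this post, not taken from Apple's code. The starting pitch (MIDI note 60, middle C), the exact extent of each row, and the function name `musical_typing_map` are assumptions for illustration.

```python
# Sketch of a Musical Typing-style layout, reconstructed from the prose above.
# Assumptions: A plays middle C (MIDI 60); rows extend as far as listed here.

WHITE_ROW = "ASDFGHJKL;"   # home row: white keys, starting on C
BLACK_ROW = "WERTYUIOP"    # BLACK_ROW[i] sits above and between WHITE_ROW[i] and WHITE_ROW[i + 1]
WHITE_STEPS = [0, 2, 4, 5, 7, 9, 11]  # C D E F G A B, in semitones above C


def musical_typing_map(base_midi=60):
    """Return a dict from computer key to MIDI note number."""
    mapping = {}
    white_notes = []
    for i, key in enumerate(WHITE_ROW):
        octave, degree = divmod(i, len(WHITE_STEPS))
        note = base_midi + 12 * octave + WHITE_STEPS[degree]
        mapping[key] = note
        white_notes.append(note)

    for i, key in enumerate(BLACK_ROW):
        lower, upper = white_notes[i], white_notes[i + 1]
        # A black key exists only where the neighboring white keys are a
        # whole step apart. Between E and F (and B and C) there is none,
        # so that computer key gets no note at all.
        if upper - lower == 2:
            mapping[key] = lower + 1
    return mapping


if __name__ == "__main__":
    layout = musical_typing_map()
    unused = [k for k in BLACK_ROW if k not in layout]
    print("Keys with no note:", unused)
```

The keys left without a note are exactly the gaps you have to memorize, and R is the first of them.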

Rather than recreating the piano keyboard on the computer, we drew on a different metaphor: the accordion.

We wanted to have chords and scales arranged in an easily discoverable way, like the way you can easily figure out the chord buttons on the accordion’s left hand. The QWERTY keyboard is really a staggered grid four keys tall and between ten and thirteen keys wide, plus assorted modifier and function keys. We decided to use the columns for chords and the rows for scales.

For the diatonic scales and modes, the layout is simple. The bottom row gives the notes in the scale starting on scale degree 1. The second row has the same scale shifted over to start on scale degree 3. The third row starts the scale on scale degree 5, and the top row starts on scale degree 1 an octave up. If this sounds confusing when you read it, try playing it; your ears will immediately pick up the pattern. Notes in the same column form the diatonic chords, with their roman numerals conveniently matching the number keys. There are no wrong notes, so even just mashing keys at random will sound at least okay. Typing your name usually sounds pretty cool, and picking out melodies is a piece of cake. Playing diagonal columns, like Z-S-E-4, gives you chords voiced in fourths. The same layout approach works great for any seven-note scale: all of the diatonic modes, plus the modes of harmonic and melodic minor.
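
The row-and-column logic can be sketched in a few lines of Python. This is an illustration of the layout as described here, not the aQWERTYon's actual source. The key strings assume a US keyboard, and the root pitch (MIDI 48) and the name `build_layout` are assumptions.

```python
# Sketch of the seven-note-scale layout described above (not the real source).
# Assumptions: US QWERTY rows as listed; root defaults to MIDI 48 (a C).

ROWS = [
    "ZXCVBNM,./",     # bottom row: scale starting on degree 1
    "ASDFGHJKL;'",    # second row: same scale starting on degree 3
    "QWERTYUIOP[]",   # third row: starting on degree 5
    "1234567890-=",   # top row: degree 1 an octave up
]
ROW_OFFSETS = [0, 2, 4, 7]  # starting scale step of each row, counted from 0

MAJOR = [0, 2, 4, 5, 7, 9, 11]  # semitones above the root


def build_layout(scale=MAJOR, root_midi=48):
    """Map each key to a MIDI note so that columns form diatonic chords."""
    layout = {}
    for row, offset in zip(ROWS, ROW_OFFSETS):
        for col, key in enumerate(row):
            # Walk up the scale one step per key, wrapping into higher octaves.
            octave, degree = divmod(offset + col, len(scale))
            layout[key] = root_midi + 12 * octave + scale[degree]
    return layout


if __name__ == "__main__":
    layout = build_layout()
    print([layout[k] for k in "ZAQ1"])  # column under the 1 key: the I chord
    print([layout[k] for k in "XSW2"])  # column under the 2 key: the ii chord
```

With the defaults, the Z-A-Q-1 column comes out as C, E, G, C and the X-S-W-2 column as D, F, A, D. Passing a different seven-note list as `scale` gives the same geometry for any mode.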

Pentatonics work pretty much the same way as seven-note scales, except that the columns stack in fourths rather than fifths. The octatonic and diminished scales lay out easily as well. The real layout challenge lay in one strange but crucial exception: the blues scale. Unlike other scales, you can’t just stagger the blues scale pitches in thirds to get meaningful chords. The melodic and harmonic components of blues are more or less unrelated to each other. Our original idea was to put the blues scale on the bottom row of keys, and then use the others to spell out satisfying chords on top. That made it extremely awkward to play melodies, however, since the keys don’t form an intelligible pattern of intervals. Our compromise was to create two different blues modes: one with the chords, for harmony exploration, and one just repeating the blues scale in octaves for melodic purposes. Maybe a better solution exists, but we haven’t figured it out yet.
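
As a rough illustration of the melodic blues mode, here is one way "repeating the blues scale in octaves" could be laid out. The description above leaves the details open, so this sketch assumes each row plays the same scale one octave above the row below it. The key strings, the root pitch and the name `blues_melody_layout` are likewise assumptions.

```python
# One possible reading of the melodic blues mode (an assumption, not the
# aQWERTYon's confirmed behavior): every row is the blues scale, and each
# row sits an octave above the row below it.

ROWS = ["ZXCVBNM,./", "ASDFGHJKL;'", "QWERTYUIOP[]", "1234567890-="]
BLUES = [0, 3, 5, 6, 7, 10]  # root, b3, 4, b5, 5, b7 in semitones


def blues_melody_layout(root_midi=48):
    """Map each key to a MIDI note in a rows-as-octaves blues layout."""
    layout = {}
    for row_index, row in enumerate(ROWS):
        for col, key in enumerate(row):
            # Keys past the sixth note wrap around into the next octave.
            octave, degree = divmod(col, len(BLUES))
            layout[key] = root_midi + 12 * (row_index + octave) + BLUES[degree]
    return layout
```

In this reading the columns are just octave doublings, which is why the mode is good for melodies and not much use for exploring harmony.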

When you select a different root, all the pitches in the chords and scales are automatically changed as well. Even if the aQWERTYon had no other features or interactivity, this would still make it an invaluable music theory tool. But root selection raises a bigger question: what do you do about all the real-world music that uses more than one scale or mode? Totally uniform modality is unusual, even in simple pop songs. You can access notes outside the currently selected scale by pressing the shift keys, which transpose the entire keyboard up or down a half step. What would be really great, though, is if we could get the scale settings to change dynamically. Imagine you were listening to a jazz tune, and the scale was always set to match whatever chord was going by at that moment. You could blow over complex changes effortlessly. We’ve discussed manually placing markers in YouTube videos that tell the aQWERTYon when to change its settings, but that would be labor-intensive. We’re hoping to discover an algorithmic method for placing markers automatically.
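
Here is a small Python sketch of the two ideas in this paragraph: transposing by root (with a half-step shift), and looking up the current setting from a list of timed markers. Nothing like the marker system exists yet, so the marker format, the example timings, and the names `scale_pitches` and `setting_at` are all hypothetical.

```python
# Hypothetical sketch: root/shift transposition and time-based scale markers.
# The marker format and every name below are assumptions for illustration.
from bisect import bisect_right

SCALES = {
    "major":      [0, 2, 4, 5, 7, 9, 11],
    "dorian":     [0, 2, 3, 5, 7, 9, 10],
    "mixolydian": [0, 2, 4, 5, 7, 9, 10],
}


def scale_pitches(root, scale, shift=0):
    """Pitch classes (0 = C) of a scale on a given root.

    shift is +1 or -1 while a shift key is held, moving everything a half
    step. Which shift key goes up and which goes down is not modeled here.
    """
    return [(root + step + shift) % 12 for step in scale]


# Made-up markers for a ii-V-I in C: (time in seconds, root, scale name),
# sorted by time.
MARKERS = [
    (0.0, 2, "dorian"),       # D Dorian over Dm7
    (8.0, 7, "mixolydian"),   # G Mixolydian over G7
    (16.0, 0, "major"),       # C major over Cmaj7
]


def setting_at(seconds, markers=MARKERS):
    """Return (root, scale name) of the latest marker at or before a time."""
    times = [m[0] for m in markers]
    index = max(bisect_right(times, seconds) - 1, 0)
    _, root, name = markers[index]
    return root, name
```

The lookup is the easy part. The labor is in producing the markers, which is why an automatic method would matter so much.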

The other big design challenge we face is how to present all the different scale choices in a way that doesn’t overwhelm our core audience of non-expert users. One solution would just be to limit the scale choices. We already do that in the Soundfly course, in effect; when you land on a lesson, the embedded aQWERTYon is preset to the appropriate scale and key, and the user doesn’t even see the menus. But we’d like people to be able to explore the rich sonic diversity of the various scales without confronting them with technical Greek terms like “Lydian dominant”. Right now, the scales are categorized as Major, Minor and Other, but those terms aren’t meaningful to beginners. We’ve been discussing how we could organize the scales by mood or feeling, maybe from “brightest” to “darkest.” But how do you assign a mood to a scale? Do we just do it arbitrarily ourselves? Crowdsource mood tags? Find some objective sorting method that maps onto most listeners’ subjective associations? Some combination of the above? It’s an active area of research for us.
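
As one example of what an objective sorting method might look like, the sketch below ranks the seven diatonic modes by the sum of their intervals above the root, so that modes with more raised degrees rank as brighter. This is a commonly used heuristic, not something we have adopted, and whether it matches listeners’ subjective associations is exactly the open question. The names `brightness` and `sort_brightest_to_darkest` are made up for the example.

```python
# A candidate "objective" brightness ordering (a heuristic, not a settled
# answer): add up each mode's intervals in semitones above its root.

MODES = {
    "Ionian":     [0, 2, 4, 5, 7, 9, 11],
    "Dorian":     [0, 2, 3, 5, 7, 9, 10],
    "Phrygian":   [0, 1, 3, 5, 7, 8, 10],
    "Lydian":     [0, 2, 4, 6, 7, 9, 11],
    "Mixolydian": [0, 2, 4, 5, 7, 9, 10],
    "Aeolian":    [0, 2, 3, 5, 7, 8, 10],
    "Locrian":    [0, 1, 3, 5, 6, 8, 10],
}


def brightness(intervals):
    """Higher totals mean more raised scale degrees."""
    return sum(intervals)


def sort_brightest_to_darkest(modes=MODES):
    """Return mode names ordered from highest to lowest brightness score."""
    return sorted(modes, key=lambda name: brightness(modes[name]), reverse=True)


if __name__ == "__main__":
    print(sort_brightest_to_darkest())
```

For these seven modes the scores run from 39 (Lydian) down to 33 (Locrian) with no ties. Scales with different numbers of notes, like pentatonics or the blues scale, would need some other kind of score.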

This issue of categorizing scales by mood has relevance for the original use case we imagined for the aQWERTYon: teaching film scoring. The idea behind the integrated video window was that you would load a video clip, set a mode, and then improvise some music that fit the emotional vibe of that clip. The idea of playing along with YouTube videos of songs came later. One could teach more general open-ended composition with the aQWERTYon, and in fact our friend Matt McLean is doing exactly that. But we’re attracted to film scoring as a gateway because it’s a more narrowly defined problem. Instead of just “write some music”, the challenge is “write some music with a particular feeling to it that fits into a scene of a particular length.”

Would you like to help us test and improve the aQWERTYon, or to design curricula around it? Would you like to help fund our programmers and designers? Please get in touch.