This post documents my final project for User Experience Design with June Ahn.

Overview of the problem

The aQWERTYon is a web-based music performance and theory learning interface designed by the NYU Music Experience Design Lab. The name is a play on “QWERTY accordion.” The aQWERTYon invites novices to improvise and compose using a variety of scales and chords normally available only to advanced musicians. Notes map onto the computer keyboard such that the rows play scales and the columns play chords. The user cannot play any wrong notes, which encourages free and playful exploration. The aQWERTYon has a variety of instrument sounds to choose from, and it can also act as a standard MIDI controller for digital audio workstations (DAWs) like GarageBand, Logic, and Ableton Live. As of this writing, there have been 32,000 aQWERTYon sessions.
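
To make the "rows play scales, columns play chords" idea concrete, here is a minimal Python sketch of one way such a layout could work. It is not the aQWERTYon's actual code. The row ordering, the two-degree offset between rows, and the names `build_layout` and `ROWS` are all assumptions made for illustration.

```python
# Hypothetical sketch of a "rows are scales, columns are chords" layout.
# The row order and the two-degree offset between rows are assumptions.

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Semitone offsets of the major scale from its root.
MAJOR = [0, 2, 4, 5, 7, 9, 11]

# Keyboard rows from bottom to top (assumed ordering, lowest pitch first).
ROWS = ["zxcvbnm,./", "asdfghjkl;", "qwertyuiop", "1234567890"]


def build_layout(root_midi, scale, rows=ROWS, row_offset=2):
    """Map each computer key to a MIDI note number.

    Moving right along a row climbs one scale degree per key.
    Moving up one row climbs `row_offset` scale degrees, so with
    row_offset=2 each column stacks thirds.
    """
    layout = {}
    for row_index, row in enumerate(rows):
        for col_index, key in enumerate(row):
            degree = col_index + row_index * row_offset
            octave, step = divmod(degree, len(scale))
            layout[key] = root_midi + 12 * octave + scale[step]
    return layout


def note_name(midi):
    """Pitch-class name of a MIDI note number."""
    return NOTE_NAMES[midi % 12]


layout = build_layout(root_midi=60, scale=MAJOR)  # C major from middle C
bottom_row = [note_name(layout[k]) for k in "zxcvbnm"]
first_column = [note_name(layout[k]) for k in "zaq1"]
second_column = [note_name(layout[k]) for k in "xsw2"]
```

Under these assumptions the bottom row spells the C major scale, the first column spells C, E, G, B (a C major seventh chord), and the second column spells D, F, A, C (a D minor seventh chord). Every key lands on a scale tone, which is where the "no wrong notes" property comes from.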

One of our core design principles is to work within our users’ real-world technological limitations. We build tools in the browser so they will be platform-independent and accessible anywhere where there is internet access. Our aim with the aQWERTYon was to find the musical possibilities in a typical computer with no additional software or hardware. That question led us to investigate ways of turning the standard QWERTY keyboard into a beginner-friendly instrument.

While the aQWERTYon has been an effective tool in classrooms and online, it has some design deficiencies as well. It is difficult for unassisted users to figure out what the app is for. While its functionality is easily discovered through trial and error, its musical applications are less self-explanatory. Some of this is due to the intrinsic complexity of music theory and all the daunting terminology that comes with it. But some of it is the lack of context and guidance we provide to new users.

The conjecture

This assignment coincided with discussions already taking place in the lab around redesigning the aQWERTYon (aQW for short). Many of those focused on a particular element of the user interface, the scale picker.

The user has a variety of scales to choose from, ranging from the familiar to the exotic. However, these scales all have impenetrable names. How are music theory novices supposed to make sense of names like harmonic minor or Lydian mode? How would they know to choose one scale or another? We debated the least off-putting way of presenting these choices: should we represent them graphically? Associate each one with a well-known piece of music? Or just list them alphabetically? I proposed a system of graphical icons showing the notes comprising each scale. While novices will find them no more intelligible than the names, the hope is that they would be sufficiently visually appealing to invite users to explore them by ear.

Conversations with June helped me understand that there are some broader and more profound user experience problems to solve before users ever get to the scale picker. What is the experience of simply landing on the app for the first time? How do people know what to do? From this conversation came the germ of a new idea, a landing page offering a tutorial or introduction. We want users to have a feeling of discovery, a musical “aha moment”, the chance to be a musical insider. The best way to do that seemed to be to give users a playlist of preset songs to jam with.

User characteristics and personas

There are three major user groups for the aQWERTYon, whom I will describe as students, teachers, and explorers.

Students and teachers

Students use the aQW in a guided and structured setting: a classroom, a private lesson, or an online tutorial. There are several distinct user personas: elementary, middle and high school students, both mainstream and with special needs; college students; and online learners, mostly adults. Each student persona has its corresponding teacher persona. For example, I use the aQW with my music technology students at Montclair State University and NYU, and with some private students.

The aQW’s biggest fan is MusEDLab partner Matt McLean, who teaches at the Little Red Schoolhouse and runs a nonprofit organization called the Young Composers and Improvisors Workshop. Matt uses the aQW to teach composition in both settings, in person and online. He has documented his students’ use of the aQW extensively. Some examples:

Explorers

I use the term explorers to describe people who use the aQW without any outside guidance. Explorers do not fit into specific demographic groups, but they center around two broad, overlapping personas: bedroom producers and music theory autodidacts. Explorers may find the aQW via a link, a social media posting, or a Google search. We know little about these users beyond what is captured by Google Analytics. However, we can make some assumptions based on our known referral sources. For example, this blog is a significant driver of traffic to the aQW. I have numerous posts on music theory and composition that link to the aQW so that readers can explore the concepts for themselves. My blog readership includes other music educators and some professional musicians, but the majority are amateur musicians and very enthusiastic listeners. These are exactly the users we are trying to serve: people who want to learn about music independently, either for creative purposes or to simply satisfy curiosity.

While I am a music educator, I have spent most of my life as a self-taught bedroom producer, so I identify naturally with the explorers. I have created several original pieces of music with the aQW, both for user testing purposes and to show its creative potential. While I have an extensive music theory background, I am a rudimentary keyboard player at best. This has limited my electronic music creation to drawing in the MIDI piano roll with the mouse pointer, since I cannot perform my ideas on a piano-style controller. The aQW suits my needs perfectly, since I can set it to any scale I want and shred fearlessly. Here is an unedited improvisation I performed using a synthesizer instrument I created in Ableton Live:

My hope is that more would-be explorers feel invited to use the aQW for similar creative purposes in their own performance and composition.

Tasks and Scenarios

It is possible to configure the aQWERTYon via URL parameters to set the key and scale, and to hide components of the user interface. When teachers create exercises or assignments, they can link or embed the aQW with its settings locked to keep students from getting lost or confused. However, this does not necessarily invite the user to explore or experiment. Here is an example of an aQW preset to accompany a Beyoncé song. This preset might be used for a variety of pedagogical tasks, including learning some or all of the melody, creating a new countermelody, or improvising a solo. The harmonic major scale is not one that is usually taught, but it is a useful way to blend major and minor tonalities. Students might try using more standard scales like major or harmonic minor, and listen for ways that they clash with Beyoncé’s song.

Tasks and scenarios for explorers might include creating a melody, bassline or chords for an original piece of music. For example, a self-taught dance music producer might feel limited by the scales that are easiest to play on a piano-style keyboard (major, natural minor, pentatonics) and be in search of richer and more exotic sounds. This producer might play their track in progress and improvise on top using different scale settings.

One of the users I tested with suggested an alternative explorer use case. He is an enthusiastic amateur composer and arranger, who is trying to arrange choral versions of pop and rock songs. He is a guitarist who has little formal music theory knowledge. He might use the aQW to try out harmonic ideas by ear, write down note names that form pleasing combinations, and then transfer them to the guitar or piano-based MIDI controller.

Understanding the problem

In the age of the computer and the internet, many aspects of music performance, composition and production are easy to self-teach. However, music theory remains an obstacle for many bedroom producers and pop musicians (not to mention schooled musicians!). There are so many chords and scales and rules and technical vocabulary, all of which have to be applied in all twelve keys. To make matters worse, terminology hangs around long after its historical context has disappeared. We no longer know what the Greek modes sound like, but we use their names to describe modern scales. C-sharp and D-flat were different pitches in historical tuning systems, but now both names describe the same pitch. The harmonic and melodic minor scales are named after a stylistic rule for writing melodies that was abandoned hundreds of years ago. And so on.

Most existing theory resources draw on the Western classical tradition, using examples and conventions from a repertoire most contemporary musicians and listeners find unfamiliar. Furthermore, these resources presume the ability to read standard music notation. Web resources that do address popular music are usually confusing and riddled with errors. I have worked with Soundfly to fill this vacuum by creating high-quality online courses aimed at popular musicians. Even with the best teaching resources, though, theory remains daunting. Exploring different chords and scales on an instrument requires significant technical mastery, and many musicians give up before ever reaching that point.

The aQW is intended to ease music theory learning by making scales and chords easy to discover even by complete novices. Our expectation is that after explorers are able to try theory ideas out in a low-pressure and creative setting, they will be motivated to put them to work playing instruments, composing or producing. Alternatively, users can simply perform and compose directly with the aQW itself.

Social and technical context

Most computer-based melody input systems are modeled on the piano. This is most obvious for hardware, since nearly all MIDI controllers take the form of literal piano keyboards. It is also true for software, which takes the piano keyboard as the primary visualization scheme for pitch. For example, the MIDI editor in every DAW displays pitches on a “piano roll”.

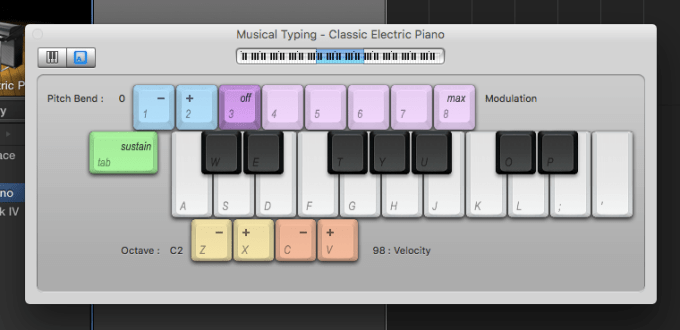

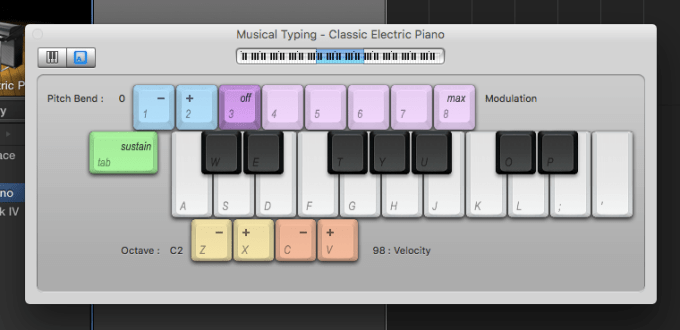

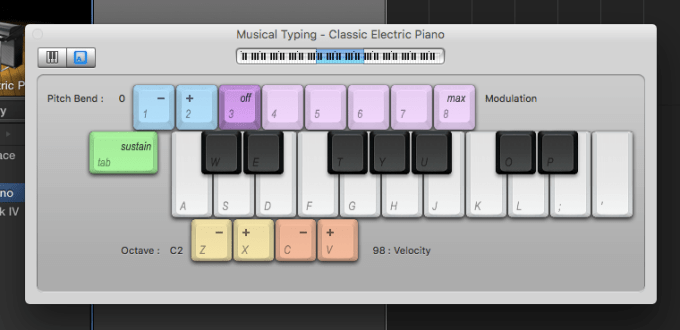

Some DAWs include a “musical typing” feature that maps the piano layout to the QWERTY keyboard, as an expediency for users who either lack MIDI hardware controllers, or who do not have them on hand. Apple’s GarageBand uses the ASDFG row of the keyboard for the white keys and the QWERTY row for the black keys. They use the other rows for such useful controls as pitch bend, modulation, sustain, octave shifting and simple velocity control.

Useful and expedient though it is, Musical Typing has some grave shortcomings as a user interface. It presumes familiarity with the piano keyboard, but is not very playable for users who do possess that familiarity. The piano layout makes a poor fit for the grid of computer keys. For example, there is no black key on the piano between the notes E and F, but the QWERTY keyboard gives no visual reminder of that fact, so it is necessary to just remember it. Unfortunately, the “missing” black key between E and F happens to be the letter R, which is GarageBand’s keyboard shortcut for recording. While hunting for E-flat or F-sharp, users are prone to accidentally start recording over their work. I have been using GarageBand for seven years and still do this routinely.
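
The mismatch between the piano and the key grid is easy to see in a small Python sketch. This is an illustration of the general piano-on-QWERTY scheme described above, not GarageBand's implementation. The one-octave range starting at C on the A key and the name `musical_typing_map` are assumptions.

```python
# Sketch of a piano-style typing layout: white keys on the home row,
# black keys on the row above. The one-octave range starting at C on
# the A key is an assumption made for illustration.

WHITE_KEYS = "asdfghjk"                      # C D E F G A B C
WHITE_PITCHES = [0, 2, 4, 5, 7, 9, 11, 12]   # semitones above the starting C
UPPER_KEYS = "wertyui"                       # each sits between two home-row keys


def musical_typing_map():
    """Return {computer key: semitones above C} for one octave."""
    mapping = dict(zip(WHITE_KEYS, WHITE_PITCHES))
    for i, key in enumerate(UPPER_KEYS):
        low, high = WHITE_PITCHES[i], WHITE_PITCHES[i + 1]
        # A black key exists only where two white keys are a whole step apart.
        if high - low == 2:
            mapping[key] = low + 1
    return mapping


mapping = musical_typing_map()
# Upper-row keys that play nothing, because E-F and B-C are half steps.
silent_keys = [key for key in UPPER_KEYS if key not in mapping]
```

Here `silent_keys` comes out as `['r', 'i']`. The R key sits in the gap between E-flat (the E key) and F-sharp (the T key), which is exactly where a stray finger lands.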

Ableton’s Push controller represents an interesting break with MIDI controller orthodoxy. It is a grid of 64 touch pads surrounded by various buttons, knobs and sliders.

The pads were designed to trigger samples and loops like a typical drum machine, but Ableton also includes a melody mode for the Push. By default, it maps notes to the grid in rows staggered by fourths, which makes the layout identical to the bottom four strings of the guitar. This is quite a gift for guitarists like me, since I can use my familiar chord and scale fingerings, rather than hunting and pecking for them on the piano. Furthermore, the Push can be set so that the pads play only the notes within a particular scale, giving a “no wrong notes” experience similar to the aQWERTYon. Delightful though this mode is, however, it is imperfect. Root notes of the scale are colored blue, and other notes are colored white. While this makes the roots easy to distinguish, it is not so easy to visually differentiate the other pitches.
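
The guitar correspondence follows from simple arithmetic, sketched below in Python. This is a generic grid tuned in fourths, not Ableton's specification. The base note, the chromatic left-to-right layout, and the function names are assumptions. The sketch only classifies notes; a scale-locked mode would skip the outside ones.

```python
# Sketch of a pad grid tuned in fourths. The base note and grid
# orientation are assumptions for illustration only.

FOURTH = 5  # semitones in a perfect fourth

# Open-string MIDI note numbers for the bottom four guitar strings.
GUITAR_LOW_STRINGS = {"E": 40, "A": 45, "D": 50, "G": 55}

MINOR_PENTATONIC = [0, 3, 5, 7, 10]


def pad_note(row, col, base=40, row_interval=FOURTH):
    """MIDI note for a pad: one semitone per column, one fourth per row."""
    return base + row * row_interval + col


def pad_role(note, root, scale):
    """Classify a note the way a root/other color scheme would."""
    interval = (note - root) % 12
    if interval == 0:
        return "root"
    return "in scale" if interval in scale else "outside"


# The first pad of each of the bottom four rows matches a guitar string.
row_starts = [pad_note(row, 0) for row in range(4)]

# Roles of the eight bottom-row pads in E minor pentatonic.
bottom_row_roles = [
    pad_role(pad_note(0, col), 40, MINOR_PENTATONIC) for col in range(8)
]
```

With these assumptions `row_starts` equals the open-string pitches E, A, D, G, so each row behaves like a guitar string and each column like a fret. A two-color scheme collapses everything that is not a root into a single "in scale" category, which is the visual limitation described above.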

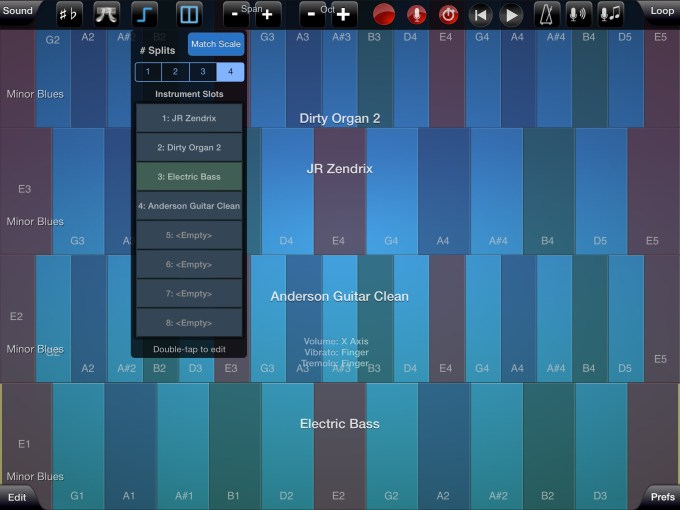

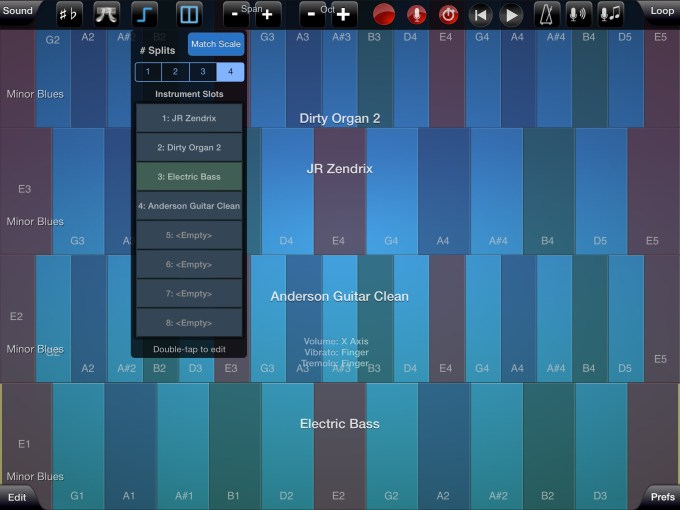

Touchscreen devices like the iPhone and iPad open up additional new possibilities for melodic interfaces. Many mobile apps continue to use the piano keyboard for note input, but some take advantage of the touchscreen’s unique affordances. One such is Thumbjam, which enables the user to divide the screen into slices of arbitrary thickness that can map to any arbitrary combination of notes.

The app offers hundreds of preset scales to choose from. The user may have a small range of notes, each of which is large and easy to distinguish, or a huge range of notes, each of which occupies a narrow strip of screen area. Furthermore, the screen can be split to hold four different scales, played from four different instruments. While all of this configurability is liberating, it is also overwhelming. Also, the scales are one-dimensional lines; there is no easy way to play chords and arpeggios.

Evaluation criteria

- Is the aQW’s potential obvious enough to draw in explorers and educators?

- Will it be adopted as a tool for self-teaching?

- Does it invite playful exploration and experimentation?

- Is it satisfying for real-world musical usage?

- Is the UI self-explanatory, or at least discoverable?

- Is the music theory content discoverable?

- Have we identified the right user persona(s)? Is the aQW really a tool for beginners? Or is it an intermediate music theory learning tool? Or an advanced composition tool?

- Is the approach of a “playlist” of example songs the right one?

- Which songs, artists and genres should we include on the landing page?

- How many presets should we include? Should we limit it to a few, or should we offer a large, searchable database?

- How do we deal with the fact that many songs require multiple scales to play?

Proposed solution

I tested several interactive wireframes of this landing page concept. Click the image to try it yourself:

The first wireframe had nine preset songs. I wanted to offer reasonable musical diversity without overwhelming the user. The tenth slot linked to the “classic” aQW, where users are free to select their own video, scale, root, and so on. I chose songs that appealed to me (and presumably other adult explorers), along with some current pop songs familiar to younger users. I wanted to balance the choices by race, gender, era, and genre. I was also bound by a musical constraint: all songs need to be playable using a single scale in a single key. The initial preset list was:

- Adele – “Send My Love (To Your New Lover)”

- Mary J Blige – “Family Affair”

- Miles Davis – “Sssh/Peaceful”

- Missy Elliott – “Get Ur Freak On”

- Björk – “All Is Full Of Love”

- Michael Jackson – “Don’t Stop ’Til You Get Enough”

- Katy Perry – “Teenage Dream”

- AC/DC – “Back In Black”

- Daft Punk – “Get Lucky”

After a few test sessions, it became apparent that no one was clicking Mary J Blige. Also, the list did not include any current hip-hop. I therefore replaced her with Chance The Rapper. I initially offered a few sentences of instruction, but feedback from my MusEDLab colleagues encouraged me to reduce the prompt down to just a few words: “Pick a song, type, jam.”

Further testing showed that while adults are willing to try out any song, familiar or not, children and teens are much choosier. Therefore, I added two more presets, “Hotline Bling” by Drake and “Formation” by Beyoncé. The latter song proved problematic, however, because its instrumental backing is so sparse and minimal that it is difficult to hear how other notes might fit into it. I ultimately swapped it for “Single Ladies.” I had rejected this song initially, because it uses the idiosyncratic harmonic major scale. However, I came to see this quirk as a bonus: since one of our goals is to encourage users to explore new sounds and concepts, a well-known and well-loved song using an unusual scale is a rare gift.

User testing protocol

I used a think-aloud protocol, asking testers to narrate their thought processes as they explored the app. I recorded the one-on-one sessions using Screenflow. When testing with groups of kids, this was impractical, so instead I took notes during and after each session. For each user, I opened the interactive wireframe, and told them, “This is a web based application for playing music with your computer keyboard. I’m going to ask you to tell me what you see on the screen, what you think it does, and what you think will happen when you click things.” I did not offer any other explanation or context, because I wanted to see whether the landing page was self-explanatory and discoverable. I conducted informal interviews with users during and after the sessions as well.

User testing results

I tested with ten adults and around forty kids. The adults ranged in age from early twenties to fifties. All were musicians, at varying levels of ability and training, mostly enthusiastic amateurs. Sessions lasted for twenty or thirty minutes. There were two groups of kids: a small group of eighth graders at the Little Red Schoolhouse, and a large group of fourth graders from PS 3 who were visiting NYU. These testing sessions were shorter, ten to fifteen minutes each.

Discovering melodies

It is possible to play the aQW by clicking the notes onscreen using the mouse, though this method is slow and difficult. Nevertheless, a number of the younger testers did this, even after I suggested that it would be easier on the keyboard.

An adult tester with some keyboard and guitar experience told me, “This is great, it’s making me play patterns that I normally don’t play.” He was playing on top of the Miles Davis track, and he was quickly able to figure out a few riffs from Miles’ trumpet solo.

Discovering chords

Several testers systematically identified chords by playing alternating notes within a row, while others discovered them by holding down random groups of keys. None of the testers discovered that they could easily play chords using columns of keys until I prompted them to do so. One even asked, “Is there a relationship between keys if I play them vertically? I don’t know enough about music to know that.” After I suggested he try the columns, he said, “If I didn’t know [by ear] how chords worked, I’d miss the beauty of this.” He compared the aQW to GarageBand’s musical typing: “This is not that. This is a whole new thing. This is chord oriented. As a guitarist, I appreciate that.” The message is clear: we need to make the chords more obvious, or more actively assist users in finding them.

Other theory issues

For the most part, testers were content to play the scales they were given, though some of the more expert musicians changed the scales before even listening to the presets. However, not everyone realized that the presets were set to match the song. A few asked me: “How do I know what key this song is in?” We could probably state explicitly that the presets line up automatically.

In general, adult testers found the value of the aQW as a theory learning tool to be immediately apparent. One told me: “If I had this when I was a kid, I would have studied music a lot. I used to hate music theory. I learned a lot of stuff, but the learning process was awful… Your kids’ generation will learn music like this (snaps fingers).”

Sounds

The aQW comes with a large collection of SoundFonts, and users of all ages enjoyed auditioning them, sometimes for long periods of time. Sometimes they apologized for how fascinating they found the sounds to be. But it is remarkable to have access to so many instrument timbres so effortlessly. Computers turn us all into potential orchestrators, arrangers, and sound designers.

Screen layout

The more design-oriented testers appreciated the sparseness and minimalism of the graphics, finding them calming and easy to understand.

Several testers complained that the video window takes up too much screen real estate, and is placed too prominently. Two commented that videos showing live performers, like “Back In Black,” were valuable because that helped with timekeeping and inspiration. Otherwise, however, testers found the videos to either be of little value or actively distracting. One suggested having the videos hidden or minimized by default, with the option to click to expand them. Others requested that the video be below the keyboard and other crucial controls. Also, the eighth graders reported that some of the videos were distracting because of their content, for example the partying shown in “Teenage Dream.” Unsuitable content will be an ongoing issue using many of the pop songs that kids like.

Technical browser issues

Having the aQWERTYon run in the browser has significant benefits, but a few limitations as well. Because the URL updates every time the parameters change, clicking the browser’s Back button does not produce the expected behavior–it might take ten or fifteen clicks to actually return to a previous page. I changed the links in later versions so each one opens the aQW in a new tab so the landing page would always be available. However, web audio is very memory-intensive, and the aQW will function slowly or not at all if it is open in more than one tab simultaneously.

Song choices

The best mix of presets is always going to depend on the specific demographics of any given group of users. However, the assortment I arrived at was satisfying enough for the groups I tested with. Miles Davis and Björk do not have the wide appeal of Daft Punk or Michael Jackson, but their presence was very gratifying for the more hipster-ish testers. I was extremely impressed that an eighth grader selected the Miles song, though this kid turns out to be the son of a Very Famous Musician and is not typical.

Recording functionality

Testers repeatedly requested the ability to record their playing. The aQW did start out with a very primitive recording feature, but it will require some development to make it usable. The question is always, how much functionality is enough? Should users be able to overdub? If so, how many tracks? Is simple recording enough, or would users need to be able to mix, edit, and select takes?

One reason that recording has been a low development priority is that users can easily record their performances via MIDI into any DAW or notation program. The aQW behaves as if it were a standard MIDI controller plugged into the computer. With so many excellent DAWs in the world, it seems less urgent for us to replicate their functionality. However, there is one major limitation of recording this way: it captures the notes being played, but not the sounds. Instead, the DAW plays back the MIDI using whatever software instruments it has available. Users who are attached to a specific SoundFont cannot record it unless they use a workaround like Soundflower. This issue will require more discussion and design work.

New conjectures and future work

One of my most significant user testers for the landing page wireframe was Kevin Irlen, the MusEDLab’s chief software architect and main developer of the aQW itself. He found the landing page concept sufficiently inspiring that he created a more sophisticated version of it, the app sequencer:

We can add presets to the app sequencer using a simple web form, which is a significant improvement over the tedious process of creating my wireframes by hand. The sequencer pulls images automatically from YouTube, another major labor-saver. Kevin also added a comment field, which gives additional opportunity to give prompts and instructions. Each sequencer preset generates a unique URL, making it possible to generate any number of different landing pages. We will be able to create custom landing pages focusing on different artists, genres or themes.

Songs beyond the presets

Testing with the fourth graders showed that we will need to design a better system for users who want to play over songs that we do not include among the presets. That system should include a tutorial instructing users how to locate YouTube URLs and, more dauntingly, how to identify keys and scales. I propose an overlay or popup:

Keyfinding

Testing with fourth graders also showed that helping novice users with keyfinding may not be as challenging as I had feared. The aQW defaults to the D minor pentatonic scale, and that scale turns out to fit fairly well over most current pop songs. If it doesn’t, some other minor pentatonic scale is very likely to work. This is due to a music-theoretical quirk of the pentatonic scale: it happens to share pitches with many other commonly-used scales and chords. As long as the root is somewhere within the key, the minor pentatonic will sound fine. For example, in C major:

- C minor pentatonic sounds like C blues

- D minor pentatonic sounds like Csus4

- E minor pentatonic sounds like Cmaj7

- F minor pentatonic sounds like C natural minor

- G minor pentatonic sounds like C7sus4

- A minor pentatonic is the same as C major pentatonic

- B minor pentatonic sounds like C Lydian mode
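
The overlap between these pentatonic scales and the key of C major can be checked with a little pitch-class arithmetic. The Python sketch below is an illustration added for this purpose, not part of the aQW. The function names are made up for the example.

```python
# Compare each minor pentatonic scale rooted on a note of C major
# against the C major scale, using pitch classes (0 = C, 1 = Db, ...).

NOTE_NAMES = ["C", "Db", "D", "Eb", "E", "F", "F#", "G", "Ab", "A", "Bb", "B"]

C_MAJOR = [0, 2, 4, 5, 7, 9, 11]
MINOR_PENTATONIC = [0, 3, 5, 7, 10]
MAJOR_PENTATONIC = [0, 2, 4, 7, 9]


def pitch_classes(root, intervals):
    """Sorted pitch classes of a scale built on `root`."""
    return sorted((root + i) % 12 for i in intervals)


def outside_notes(root):
    """Names of minor pentatonic notes on `root` that fall outside C major."""
    scale = pitch_classes(root, MINOR_PENTATONIC)
    return [NOTE_NAMES[pc] for pc in scale if pc not in C_MAJOR]


# One entry per root in C major: the notes that leave the key, if any.
report = {NOTE_NAMES[root]: outside_notes(root) for root in C_MAJOR}

for root_name, outside in report.items():
    label = ", ".join(outside) if outside else "none"
    print(f"{root_name} minor pentatonic: notes outside C major = {label}")
```

The D, E and A minor pentatonics stay entirely inside C major, and A minor pentatonic has exactly the same pitch classes as C major pentatonic. G adds only B-flat and B adds only F-sharp, which is where the C7sus4 and Lydian flavors come from. C and F bring in the flatted notes of C blues and C natural minor.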

We are planning to revamp the root picker to show both a larger piano keyboard and a pitch wheel. We also plan to add more dynamic visualization options for notes as they are played, including a staff notation view, the chromatic circle, and the circle of fifths. The aQW leaves several keys on the keyboard unused, and we could use them for additional controls. For example, we might use the Control key to make note velocities louder, and Option to make them quieter. The arrow keys might be used to cycle through the scale menu and to shift the root.

Built-in theory pedagogy

There is a great deal of opportunity to build more theory pedagogy on top of the aQW, and to include more of it within the app itself. We might encourage chord playing by automatically showing chord labels at the top of each column. We might include popups or links next to each scale giving some explanation of why they sound the way they do, and to give some suggested musical uses. One user proposes a game mode for more advanced users, where the scale is set to chromatic and players must identify the “wrong” or outside notes. Another proposes a mode similar to Hooktheory, where users could sequence chord progressions to play on top of.

Rhythmic assistance

A few testers requested some kind of help or guidance with timekeeping. One suggested a graphical score in the style of Guitar Hero, or a “follow the bouncing ball” rhythm visualization. Another pointed out that an obvious solution would be to incorporate the Groove Pizza, perhaps in miniature form in a corner of the screen. Synchronizing all of this to YouTube videos would need to be done by hand, so far as I know, but perhaps an automated solution exists. Beat detection is certainly an easier music information retrieval (MIR) challenge than key or chord detection. If we were able to automatically sync to the tempo of a song, we could add the DJ functionality requested by one tester, letting users add cue points, loop certain sections, and slow them down.

Odds and ends

One eighth grader suggested that we make aQW accounts with “musical passwords.”

An adult tester referred to the landing page as the “Choose Your Own Adventure screen.” The idea of musical adventure is exactly the feeling I was hoping for.

In addition to notes on the staff, one tester requested a spectrum visualizer. This is perhaps an esoteric request, but real-time spectrograms are quite intuitive and might be useful.

Finally, one tester made a comment that was striking in its broader implications for music education: “I’m not very musical, I don’t really play an instrument, so these kinds of tricks are helpful for me. It didn’t take me long to figure out how the notes are arranged.” This person is a highly expert producer, beatmaker and live performer using Ableton Live. I asked how he came to this expertise, and he said he felt compelled to learn it to compensate for his lack of “musicianship”. It makes me sad that such a sophisticated musician does not realize that his skills “count”. In empowering music learners with the aQW, I also hope we are able to help computer musicians value themselves.