My students are currently hard at work writing pop songs, many of them for the first time. For their benefit, and for yours, I thought I’d write out a beginner’s guide to contemporary songwriting. First, some points of clarification:

- This post only talks about the instrumental portion of the song, known as the track. I don’t deal with vocal parts or lyric writing here.

- This is not a guide to writing a great pop song. It’s a guide to writing an adequate one. Your sense of what makes a song good will probably differ from mine, whereas most of us can agree on what makes a song adequate. To make a good song, you’ll probably need to pump out a bunch of bad ones first to get the hang of the process.

- This is not a guide to writing a hit pop song. I have no idea how to do that. If you’re aiming for the charts, I refer you to the wise words of the KLF.

- You’ll notice that I seem to be talking a lot here about production, and that I never mention actual writing. This is because in 2014, songwriting and production are the same creative act. There is no such thing as a “demo” anymore. The world expects your song to sound finished. Also, most of the creativity in contemporary pop styles lies in rhythm, timbre and arrangement. Complex chord progressions and intricate melodies are neither necessary nor even desirable. It’s all in the beats and grooves.

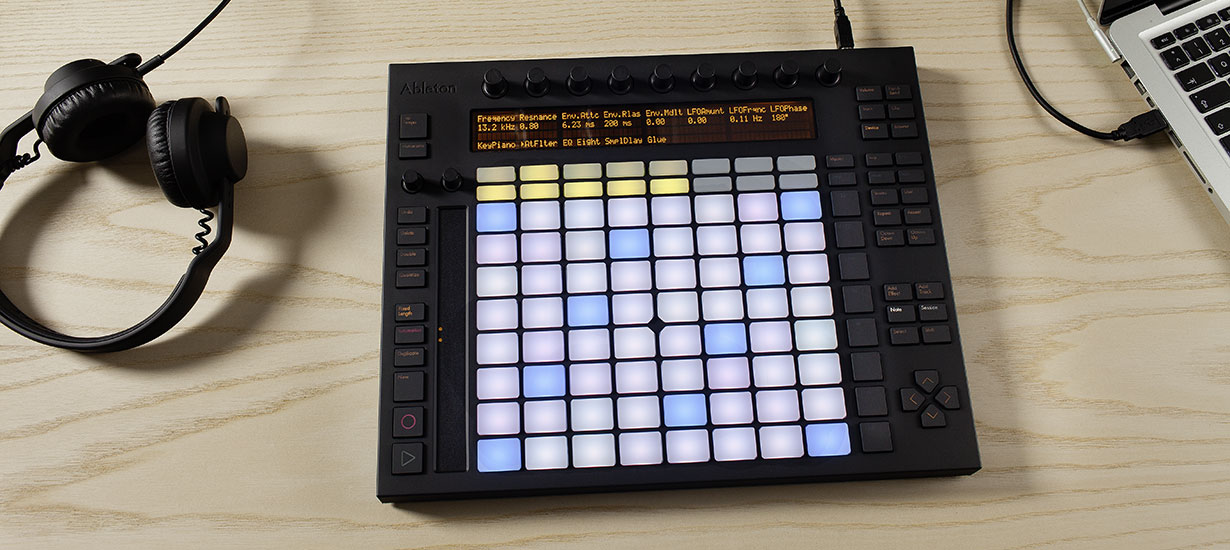

To make a track, you’ll need a digital audio workstation (DAW) and a loop library. I’ll be using GarageBand, but you can use the same methods in Ableton Live, Logic, Reason, Pro Tools, etc. I produced this track for illustration purposes, and will be referring to it throughout the post:

Step one: gather loops

Put together four or eight bars’ worth of loops that all sound good together. Feel free to use the loops that come with your software; they’re probably a fine starting point. You can also generate your loops by recording instruments and singing, or by sequencing MIDI, or by sampling existing songs. Even if you aren’t working in an electronic medium, you can still gather loops: guitar parts, keyboard parts, bass riffs, drum patterns. Think of this set of loops as the highest-energy part of your song, the last chorus or what have you.

For my example track, I exclusively used GarageBand’s factory loops, which are mostly great if you tweak them a little. I selected a hip-hop beat, some congas, a shaker, a synth bass, some synth chords, and a string section melody. All of these loops are audio samples, except for the synth chord part, which is a MIDI sequence. I customized the synth part so that instead of playing the same chord four times, it makes a little progression that fits the bassline: I – I – bVI – bVII.

Step two: duplicate your loops a bunch of times

I copied my set of loops fifteen times, so the whole tune is 128 bars long. It doesn’t matter at this point exactly how many times you copy everything, so long as you have three or four minutes’ worth of loops to work with. You can always copy and paste more if you need to. GarageBand users: note that by default, the song length is set ridiculously short. You’ll need to drag the right edge of your song to give yourself enough room.
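If you want to check the arithmetic, here is a minimal Python sketch. It assumes 4/4 time, and the 120 bpm tempo is a made-up figure for illustration, not the tempo of the example track. The function names are my own, not anything from GarageBand.

```python
import math

def duration_minutes(bars, bpm, beats_per_bar=4):
    """Length of a song in minutes, given its bar count and tempo."""
    return bars * beats_per_bar / bpm

def copies_needed(minutes, bpm, loop_bars, beats_per_bar=4):
    """Total repetitions of the loop set (original included) to fill `minutes`."""
    bars = math.ceil(minutes * bpm / beats_per_bar)
    return math.ceil(bars / loop_bars)

# The example track: an eight-bar loop set plus fifteen copies of it.
total_bars = 8 * (1 + 15)
print(total_bars)                         # 128 bars

# Hypothetical tempo of 120 bpm (an assumption, not from the track).
print(duration_minutes(total_bars, 120))  # a bit over four minutes
print(copies_needed(4, 120, 8))           # repetitions needed for four minutes
```

A slower tempo stretches the same 128 bars over more time, so adjust the copy count to taste.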

Step three: create structure via selective deletion

This is the hard part, and it’s where you do the most actual “songwriting.” Remember how I said that your set of loops was going to be the highest-energy part of the song? You’re going to create all of the other sections by removing stuff. Different subsets of your loop collection will form your various sections: intro, verses, choruses, breakdown, outro, and so on. These sections should probably be four, eight, twelve or sixteen bars long.

Here’s the structure I came up with on my first pass:

I made a sixteen-bar intro with the synth chords entering first, then the percussion, then the hip-hop drums. The entrance of the bass is verse one. The entrance of the strings is chorus one. For verse two, everything drops out except the drums, congas and bass. Chorus two is twice the length of chorus one, with the keyboard chords out for the first half. Then there’s a breakdown: eight bars of just the bass, and another eight of the bass and drums. Next, there are three more choruses, the first minus the keyboard chords again, the next two with everything (my original loop collection). Finally, there’s a long outro, with parts exiting every four or eight bars.
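For the programmers in the room, the "structure via deletion" idea can be sketched as data. This is only an illustration, not how any DAW stores a song. The verse and chorus lengths of eight bars are assumptions (only the breakdown lengths are stated above), the helper names are hypothetical, and I've left out the intro and outro because their staggered entrances and exits don't fit a one-line description.

```python
# The full loop set: the highest-energy section of the song.
ALL_LOOPS = ["drums", "congas", "shaker", "bass", "synth chords", "strings"]

def only(*kept):
    """Return the loops to delete so that only `kept` remain."""
    return [loop for loop in ALL_LOOPS if loop not in kept]

def section(name, bars, removed=()):
    """Build a section by deleting loops from the full set."""
    unknown = set(removed) - set(ALL_LOOPS)
    if unknown:
        raise ValueError(f"unknown loops: {sorted(unknown)}")
    loops = [loop for loop in ALL_LOOPS if loop not in removed]
    return {"name": name, "bars": bars, "loops": loops}

# First-pass structure. Eight-bar verses and choruses are assumed.
arrangement = [
    section("verse 1", 8, removed=["strings"]),
    section("chorus 1", 8),
    section("verse 2", 8, removed=only("drums", "congas", "bass")),
    section("chorus 2, first half", 8, removed=["synth chords"]),
    section("chorus 2, second half", 8),
    section("breakdown, first half", 8, removed=only("bass")),
    section("breakdown, second half", 8, removed=only("bass", "drums")),
]

def render(arrangement):
    """Draw one row per loop, one character per four bars."""
    rows = []
    for loop in ALL_LOOPS:
        cells = "".join(
            ("#" if loop in s["loops"] else ".") * (s["bars"] // 4)
            for s in arrangement
        )
        rows.append(f"{loop:<13}{cells}")
    return "\n".join(rows)

print(render(arrangement))
```

The printed grid looks a lot like the arrangement view in a DAW: full rows of `#` are the choruses, and the gaps are where the deletions create contrast.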

Even experienced songwriters find structure difficult. I certainly do. Building your structure will likely require a lot of trial and error. For inspiration, I recommend analyzing the structure of songs you like, and imitating them. Here’s my collection of particularly interesting song structures. My main piece of advice here is to keep things repetitive. If the groove is happening, people will happily listen to it for three or four minutes with minimal variation.

Trained musicians frequently feel anxious that their song isn’t “interesting” enough, and work hard to pack it with surprises. That’s the wrong idea. Let your grooves breathe. Let the listener get comfortable. This is pop music; it should be gratifying on the first listen. If you feel like your song won’t work without all kinds of intricate musical drama, you should probably just find a more happening set of loops.

Step four: listen and iterate

After leaving my song alone for a couple of days, some shortcomings leaped out at me. The energy was building and dissipating in an awkward, unsatisfying way, and the string part was too repetitive to carry the whole melodic foreground. I decided to rebuild the structure from scratch. I also added another loop, a simple guitar riff. I then cut both the string and guitar parts in half, so the front half of the string loop calls, and the back half of the guitar loop answers. This worked much better. Here’s the finished product, the one you hear above:
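The call-and-response trick of halving two loops can be sketched in a few lines of Python. The loops here are just lists of placeholder labels (one per bar, or per beat, whichever you like), and `None` stands for silence. The function name and the labels are hypothetical.

```python
def call_and_response(call_loop, answer_loop):
    """Keep the front half of the call loop and the back half of the answer loop."""
    if len(call_loop) != len(answer_loop):
        raise ValueError("loops must be the same length")
    half = len(call_loop) // 2
    # Silence the back half of the call and the front half of the answer.
    call = call_loop[:half] + [None] * (len(call_loop) - half)
    answer = [None] * half + answer_loop[half:]
    return call, answer

# Placeholder labels standing in for the string and guitar loops.
strings = ["s1", "s2", "s3", "s4"]
guitar = ["g1", "g2", "g3", "g4"]

call, answer = call_and_response(strings, guitar)
print(call)    # ['s1', 's2', None, None]
print(answer)  # [None, None, 'g3', 'g4']
```

Neither part plays over the other, which is what gives each one room in the melodic foreground.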

My final structure goes as follows: the intro is synth chords and guitar, quickly joined by the percussion, then the drum loop. Verse one adds the bass. Chorus one adds the strings, so now we’re at full power. Verse two is a dramatic drop in energy, just the congas and strings, joined halfway through by the drums. Chorus two adds the bass and guitar back in. The breakdown section is eight bars of drums and bass, then eight more bars adding in the strings and percussion. The drums and percussion drop out for a bar right at the end of the section to create some punctuation. Verse three is everything but the synth chords. Choruses three and four are everything. The outro is a staggered series of exits, rhythm section first, until the guitar and strings are left alone.

So there you have it. Once you’ve committed to your musical ideas, let your song sit for a few days and then go back and listen to it with an ear for mix and space. Try some effects, if you haven’t yet. Reverb and echo/delay always sound cool. Chances are your mix is going to be weak. My students almost always need to turn up their drums and turn down their melodic instruments. Try to push things to completion, but don’t make yourself crazy. Get your track to a place where it doesn’t totally embarrass you, put it on the web, and go start another one.

If reading this inspires you to make a track, please put a link to it in the comments. I’d love to hear it.
