The wearable was co-created with Zoe Margolis.

Dance of the Perlins (an Exploration of Movement)

Dance of the Perlins is a performance that explores the interaction of movement, choreography and dancer-triggered particle flow. In the performance, a dancer wears a custom-made wearable consisting of two gloves with built-in sensors. The movement data is transmitted wirelessly to a p5.js particle flow visualizer, triggering different visuals that evolve as the choreography progresses and thereby creating the Dance of the Perlins (a reference to the Perlin noise that drives the particle movement). The first live performance with the wearable prototype took place at the ITP Winter Show 2022, where the visuals were projected onto the screen behind the dancer:

Music credit: PROBASS ∆ HARDI – Good Evening (Where Are You From?)

Project details

Prototype development

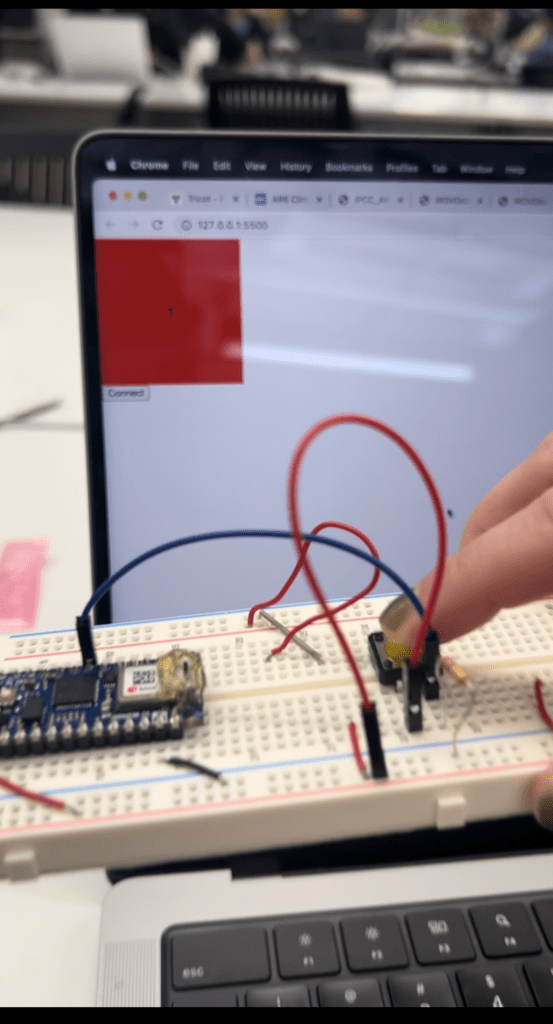

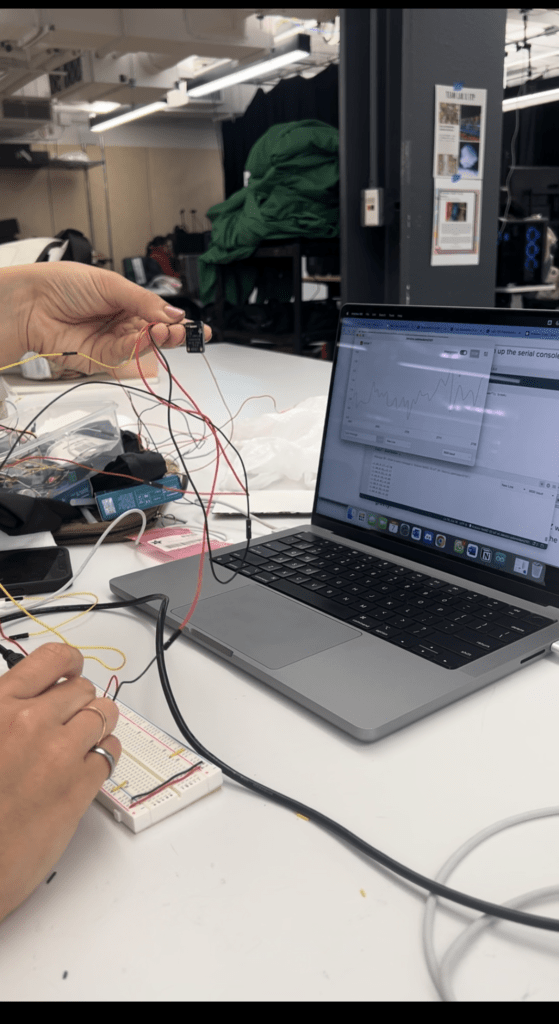

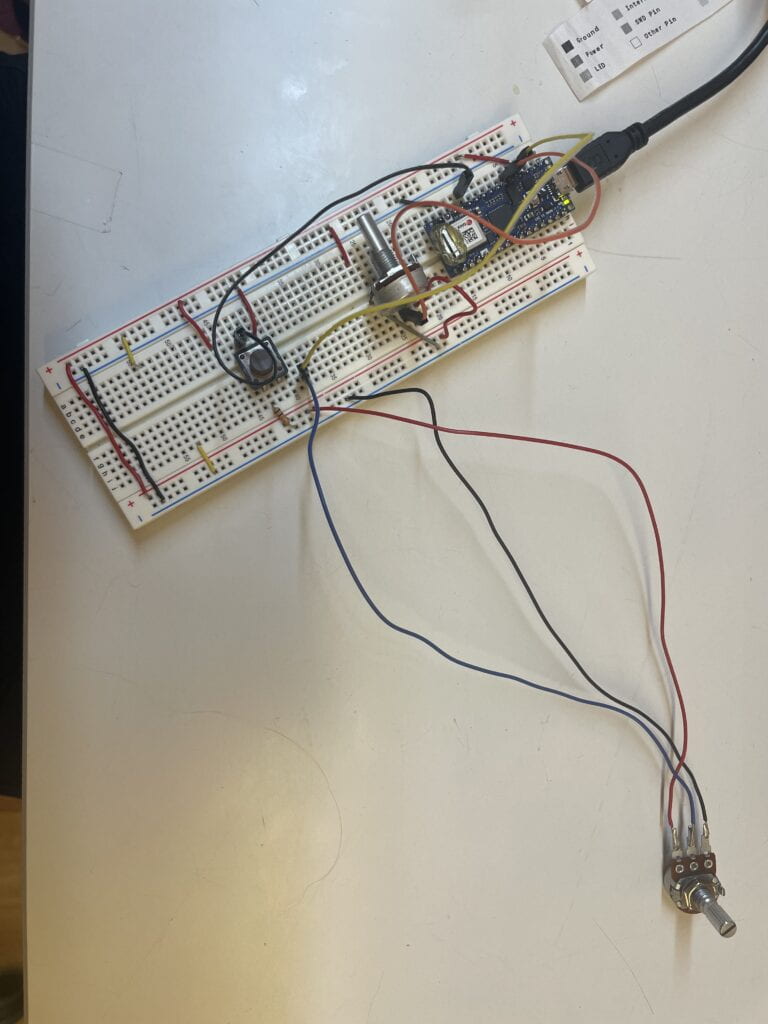

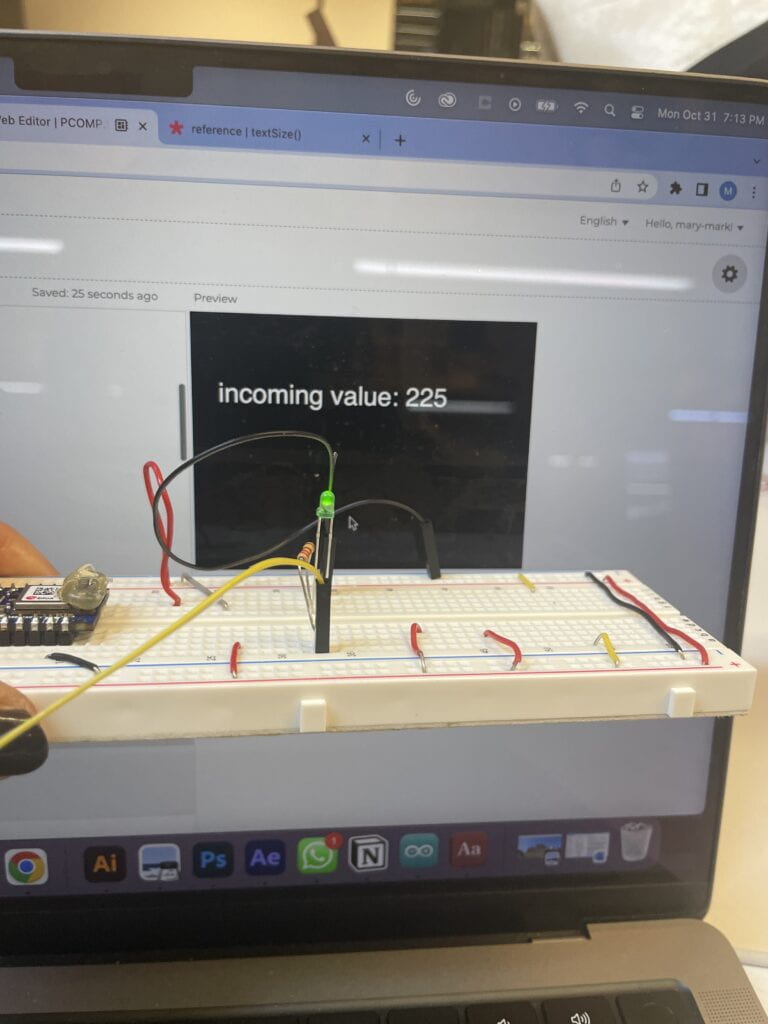

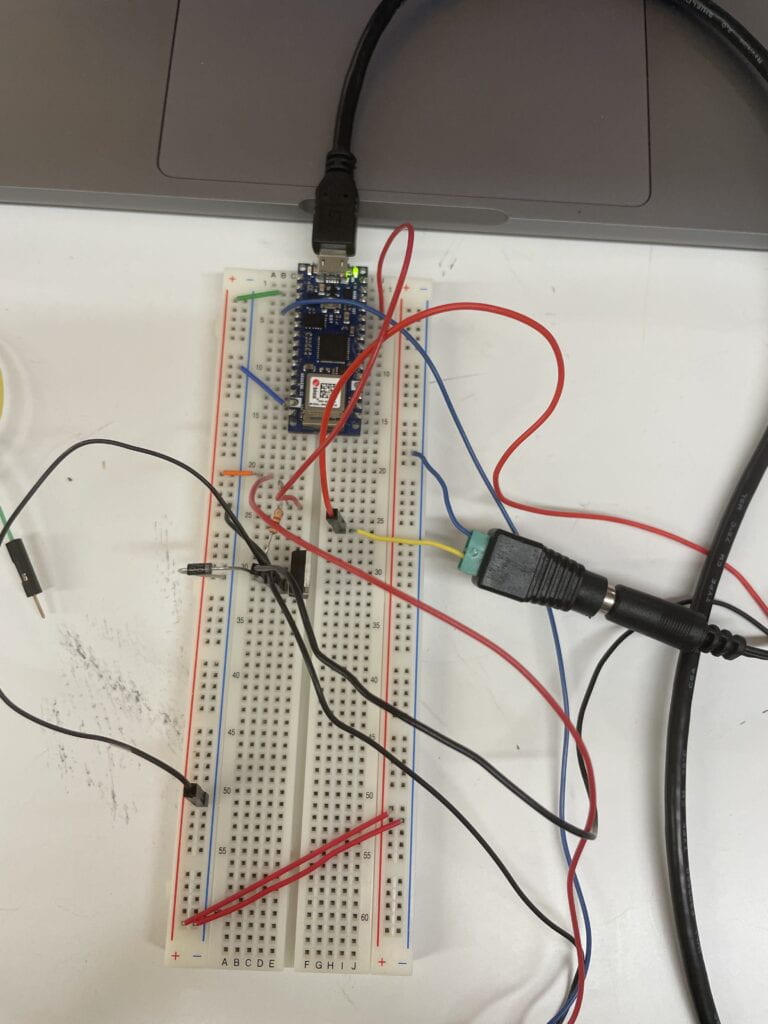

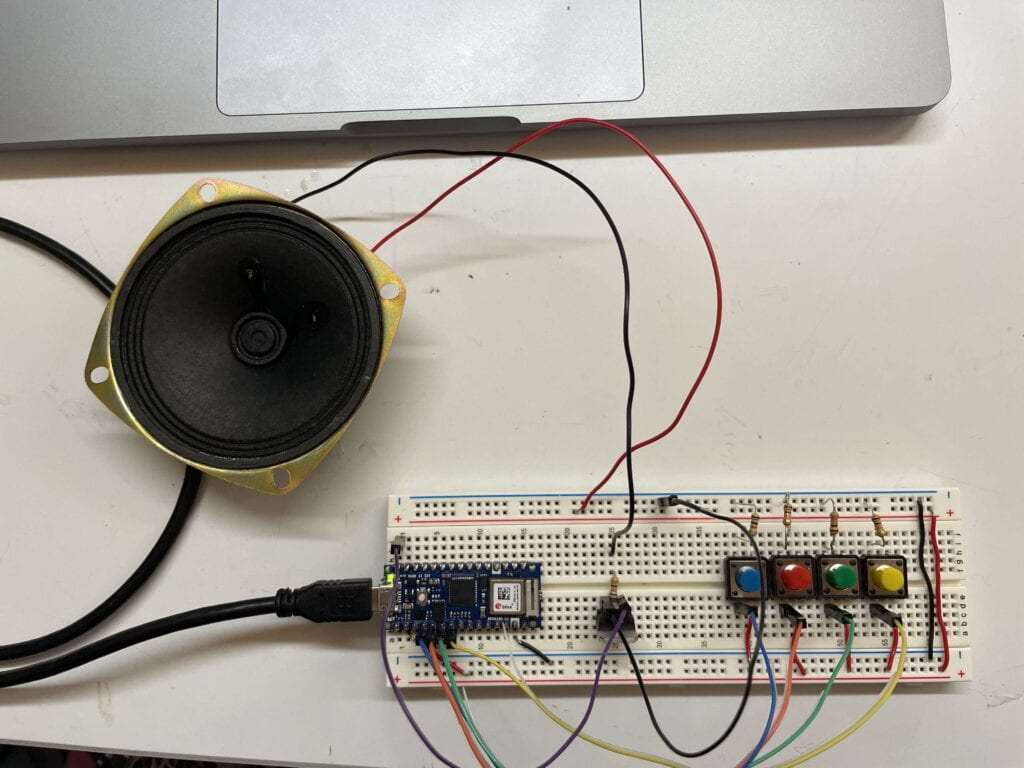

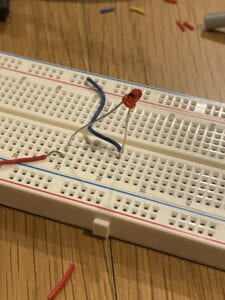

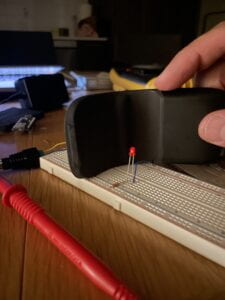

The first prototype consisted of one Adafruit LIS3DH triaxial accelerometer that was taped to the dancer’s wrist, with wires extending to a breadboard with an Arduino Nano 33 IoT (shown below).

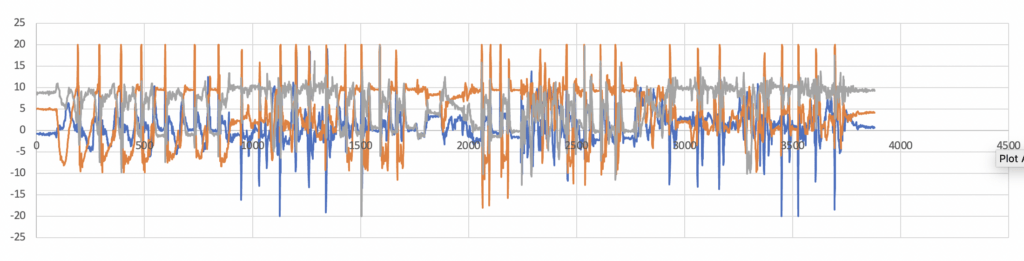

This prototype was used for gathering acceleration and movement data to understand recognizable patterns associated with specific movements.

After gathering the data, I started training a Long Short-Term Memory (LSTM) network using TensorFlow to detect the moves and trigger controls in real time. The preliminary results were promising: the network clearly recognized two of the moves for which we had gathered data. However, due to project deadlines, I left the full development of the ML algorithm for later. Ultimately, we hard-coded triggers that fire on specific accelerations or changes in acceleration.
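The hard-coded triggers described above could look roughly like the following sketch. It is written in JavaScript to match the p5 side of the project, although the same logic could equally run on the Arduino. The threshold values, the trigger names and the `createTriggerDetector` helper are all assumptions made for illustration; they are not taken from the project's code.

```javascript
// Illustrative thresholds in units of g. These are assumed values,
// not the ones tuned for the performance.
const ACCEL_THRESHOLD = 1.5; // overall acceleration that counts as a strong move
const DELTA_THRESHOLD = 0.8; // jump between consecutive samples that counts as sudden

// Magnitude of one three-axis accelerometer sample.
function magnitude(sample) {
  return Math.hypot(sample.x, sample.y, sample.z);
}

// Builds a detector that takes one sample at a time and returns the name
// of the (hypothetical) trigger it sets off, or null if nothing fires.
function createTriggerDetector() {
  let previous = null; // magnitude of the previous sample, if any
  return function detect(sample) {
    const current = magnitude(sample);
    const delta = previous === null ? 0 : Math.abs(current - previous);
    previous = current;
    if (delta > DELTA_THRESHOLD) return "suddenChange";
    if (current > ACCEL_THRESHOLD) return "strongAcceleration";
    return null;
  };
}

// Example: a resting hand, then a sharp swing that is held for one more sample.
const detect = createTriggerDetector();
const samples = [
  { x: 0, y: 0, z: 1 },
  { x: 1.5, y: 1, z: 1 },
  { x: 1.5, y: 1, z: 1 },
];
for (const sample of samples) {
  console.log(detect(sample)); // null, then suddenChange, then strongAcceleration
}
```

Checking the change between samples before the absolute level lets a sudden gesture and a sustained strong movement fire different triggers, which is one way to get more than one control out of a single sensor.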

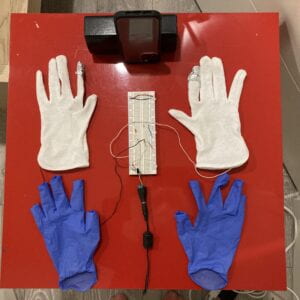

Following the initial user testing, we decided to add more controls independent of the accelerations. Inspired by a project by one of our classmates, Dre, we added two capacitive touch sensors on the left hand using stripped wires. Finally, we soldered the wires extending from the sensors on the two gloves to a protoboard and connected it to a 9V battery, so that the device could fit in a pouch on the dancer’s lower back. And voilà, the final prototype:

The prototype worked quite well, although it was difficult to adjust on the fly :).

The Arduino code used in this project can be found on my GitHub.

Visualization

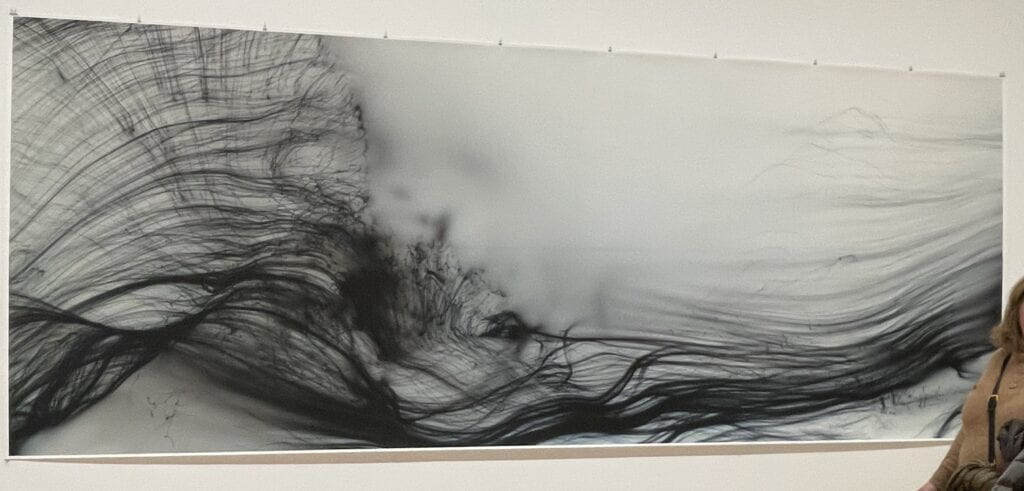

Sometime in November 2022, I visited Wolfgang Tillmans’ exhibit at MoMA and was mesmerized by his work from the Freischwimmer series (below). This series consists of camera-less pictures that he created by shining flashlights at photographic paper in the darkroom. The work really resonated with me, since I wanted to create visuals that captured the feeling of effortless movement and abstract flow.

The final visualization engine that I created was largely inspired by Dan Shiffman’s tutorial on Perlin Noise Flow Field and was originally written in the p5.js web editor. The original sketch (link) is controlled by 10 keys on the keyboard, each corresponding to a change in the ‘perlins’ movement. The keys map to the following commands:

- “-”/“=”: remove/add particles to the sketch (200 at a time)

- “a”/“d”: decrease/increase speed of the particles

- “s”/“w”: downward/upward movement of the particles

- “p”: all directional flow field (default vectors)

- “r”: random direction of the particle movement

- “c”: chaotic movement (rapidly changing vector field direction)

- “q”: color scheme change from white to black background and vice versa

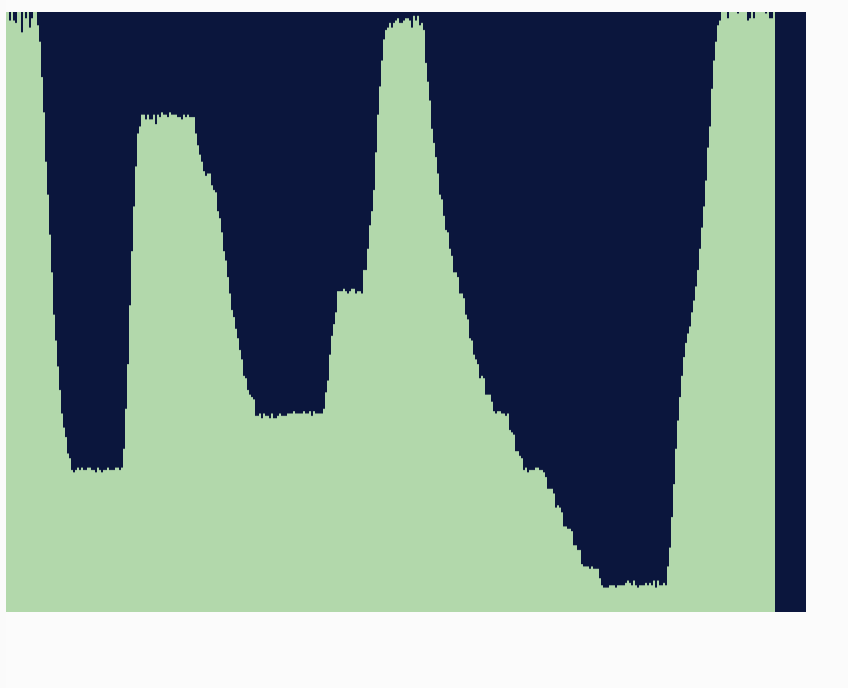

The snapshot below is one of the visualizations created using the control keys.
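The key commands listed above can be sketched as a small state update in plain JavaScript. This is an illustration under stated assumptions, not the code of the original sketch: the key bindings and the 200-particle step come from the list, while the state fields, mode names, speed step, noise time steps and the helper functions `createState`, `handleKey`, `headingFor` and `noiseTimeStep` are all hypothetical.

```javascript
// Step sizes and mode names are illustrative assumptions; only the
// 200-particle step and the key bindings come from the list above.
const PARTICLE_STEP = 200;
const SPEED_STEP = 0.5;
const TWO_PI = 2 * Math.PI;

// Starting state of the (hypothetical) visualizer controls.
function createState() {
  return { particles: 0, speed: 1, mode: "flow", darkBackground: false };
}

// Returns a new state with one key command applied; unknown keys are ignored.
function handleKey(state, key) {
  const next = { ...state };
  switch (key) {
    case "=": next.particles += PARTICLE_STEP; break;
    case "-": next.particles = Math.max(0, next.particles - PARTICLE_STEP); break;
    case "d": next.speed += SPEED_STEP; break;
    case "a": next.speed = Math.max(SPEED_STEP, next.speed - SPEED_STEP); break;
    case "w": next.mode = "up"; break;
    case "s": next.mode = "down"; break;
    case "p": next.mode = "flow"; break;
    case "r": next.mode = "random"; break;
    case "c": next.mode = "chaotic"; break;
    case "q": next.darkBackground = !next.darkBackground; break;
  }
  return next;
}

// Heading in radians for a particle, given the mode and a noise sample
// in [0, 1] (for example from p5's noise()). Canvas y grows downward,
// so "up" is a negative angle.
function headingFor(mode, noiseValue) {
  if (mode === "up") return -Math.PI / 2;
  if (mode === "down") return Math.PI / 2;
  if (mode === "random") return Math.random() * TWO_PI;
  return noiseValue * TWO_PI; // "flow" and "chaotic" both follow the field
}

// How far to advance the noise field's time offset each frame: a larger
// step makes the field change direction faster, as in the chaotic mode.
function noiseTimeStep(mode) {
  return mode === "chaotic" ? 0.05 : 0.0005;
}
```

In a p5 sketch, `keyPressed()` could call `state = handleKey(state, key)`, and the draw loop would then read `state` when updating each particle. Keeping the controls in one state object also means that any other input source can drive the visuals by emitting the same key characters.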

You can try the visualizer by clicking the interface below, adding particles with the “=” key, and using the other keys for control:

The wearable prototype was connected to the p5.js sketch via Bluetooth, and the acceleration and capacitive touch sensor data triggered the different controls. The final files for the p5.js sketch can be found on my GitHub.
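One way the incoming sensor data might be turned into the same commands as the keyboard is sketched below. The Bluetooth transport itself is left out. The packet format (one comma-separated line, `ax,ay,az,touchA,touchB`), the threshold, and the choice of which reading maps to which key are all assumptions for illustration, not the project's actual protocol.

```javascript
// Assumed threshold in g; not a value from the project.
const CHAOS_THRESHOLD = 2.0;

// Parses one line of the assumed packet format "ax,ay,az,touchA,touchB".
// Returns null for lines that do not have five numeric fields.
function parsePacket(line) {
  const parts = line.trim().split(",").map(Number);
  if (parts.length !== 5 || parts.some(Number.isNaN)) return null;
  const [x, y, z, touchA, touchB] = parts;
  return { x, y, z, touchA: touchA === 1, touchB: touchB === 1 };
}

// Maps one reading to the keyboard commands it should trigger.
// The pairing of sensors and keys here is hypothetical.
function commandsFor(reading) {
  const keys = [];
  if (reading.touchA) keys.push("q"); // first left-hand sensor: swap color scheme
  if (reading.touchB) keys.push("="); // second left-hand sensor: add particles
  const strength = Math.hypot(reading.x, reading.y, reading.z);
  if (strength > CHAOS_THRESHOLD) keys.push("c"); // strong move: chaotic flow
  return keys;
}

// Example: a strong movement while the second sensor is touched.
const reading = parsePacket("2,2,2,0,1");
console.log(commandsFor(reading)); // the "=" and "c" commands
```

Because the output is just a list of key characters, the same handler that serves the keyboard can serve the gloves, and a person at the keyboard can step in whenever the wireless link drops.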

Choreography and performance

The final piece of the puzzle was to test the prototype with the visualization engine using movement. Ultimately, I wanted to create a choreography that existed in harmony with the visuals and their evolution.

Choreographing with the wearable was an interesting experience, to say the least. The prototype felt like a separate intelligent entity, often misbehaving and not reacting to the moves in the way I wanted. I had to be patient and adapt my moves so that the visuals behaved and worked with me. I also noticed that many of my moves didn’t work with the visuals, which constrained my dancing freedom in a way I did not expect. The following video is a compilation of footage from testing and the choreography process.

Finally, after spending some hours with the device, I was able to choreograph a piece in which the gloves worked with me and the visuals felt in harmony with the movement. On Tom Igoe’s advice, I also had Zoe, my project partner, use the manual keyboard controls in case my movements did not trigger certain visuals.

All in all, I performed the piece 8 times during the ITP Winter Show 2022. The wearable behaved better at some times than at others, but regardless, I loved each of the performances and developed a very special relationship with the wearable.

Takeaways and future development

Many questions came up during the project development, especially about the interaction between the dancer and the wearable. While we designed the wearable to control the visuals, the dancer was in a way controlled and constrained by the wearable. So a question arises: does the dancer control the visuals, or do the visuals control the dancer? This power dynamic also changes depending on whether the dancer is able to see the visuals.

The process of choreography also felt different: I did not feel like I was choreographing by myself, but rather that I had a moody and often constraining partner. The Perlin visualizer ended up dictating the choice of music, moves and performance style.

There were also some unresolved technical issues, primarily recurring interruptions of the Bluetooth signal that sometimes disrupted the performance, in which case the stage manager would take over the controls (thank you Zoe!).

I would like to continue developing this project in several directions. First, I want to finish incorporating machine learning for movement recognition. I hope to introduce controls that are based on the dancer’s movement and also to experiment with recognizing the quality, rhythm and emotion of that movement. Another direction that I would like to pursue is to further explore the question of control between the algorithm and the human, through improvisation by both the dancer and the algorithm.