Co-created with Zoe Margolis

For our final project, Zoe and I would like to create a device that lets dancers use their movement to control the visualizations that accompany their performance. One of the inspirations we explored was the work of Lisa Jamhoury. When we started thinking about such a control device, we first considered tracking sensors and software such as PoseNet or Kinect, but we decided to take a simpler route and use accelerometers. There is something very beautiful about wearing the technology directly on your body, so that you can physically feel a connection to the control device. I also personally love signal processing and time series analysis, and the relative anonymity that comes with them: no camera tracking, no body-posture or face recognition, just acceleration. Maybe it's having worked with earthquakes for almost 10 years that draws me to accelerometers; after all, earthquake records are produced using triaxial accelerometers.

The user of this device is a performer, who will have two accelerometers attached to their wrists. Later we might introduce more sensors, but for now we are starting simple. The wires will run underneath a tight bodysuit to the Arduino and a battery, both housed in a mic belt. The Arduino will send the signal via Bluetooth to a computer, which will use the processed signal to create visuals in either p5 or Touch Designer.

Our workplan for building a prototype, and our progress so far, are as follows:

- Fabricate the circuit using accelerometers, Arduino, and a battery.

So far we have been able to set up one LIS3DH triple-axis accelerometer with an Arduino and read the x, y, z accelerations over I2C, a synchronous serial protocol. We have not yet figured out how to connect the second accelerometer. The data is currently output as one string per reading. For example, with two accelerometers the output will be a timestamp followed by the accelerations from each accelerometer: “t, x1,y1,z1,x2,y2,z2”, e.g. “1,-0.84,4.92,8.67,-0.84,4.92,8.67”.

- Send the data from accelerometers over Bluetooth to p5.
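On the receiving side, each reading has to be split back into numbers. The following is a minimal Python sketch of that step. It assumes the seven-field “t,x1,y1,z1,x2,y2,z2” format described above and an integer timestamp. The names `Reading` and `parse_reading` are hypothetical, and the same logic would translate directly to JavaScript in p5.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One sample: a timestamp plus an (x, y, z) triple per accelerometer."""
    t: int
    acc1: tuple[float, ...]
    acc2: tuple[float, ...]


def parse_reading(line: str) -> Reading:
    """Parse a "t,x1,y1,z1,x2,y2,z2" string into a Reading.

    Assumes an integer timestamp (e.g. a sample counter or Arduino millis()).
    Raises ValueError when the line does not have exactly seven fields,
    which catches truncated or merged messages.
    """
    fields = [f.strip() for f in line.strip().split(",")]
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}: {line!r}")
    values = [float(f) for f in fields[1:]]
    return Reading(t=int(fields[0]), acc1=tuple(values[:3]), acc2=tuple(values[3:]))


# Usage with the example string from the text.
r = parse_reading("1,-0.84,4.92,8.67,-0.84,4.92,8.67")
print(r.t, r.acc1, r.acc2)  # 1 (-0.84, 4.92, 8.67) (-0.84, 4.92, 8.67)
```

Rejecting lines with the wrong number of fields is a cheap guard against partial messages. Partial messages can occur when strings arrive over a wireless link.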

We have been able to set up the Arduino as a Bluetooth peripheral device and receive one string in p5. However, at the moment we are having trouble receiving updated data over Bluetooth.

- Gather accelerometer data along with movement ‘labels’ to build an ML algorithm that identifies moves.

As a start, we have gathered data for two simple, distinct moves of the right hand: an arm raise and a circular ‘door opening’. To produce labeled time-series data that contains both accelerations and move ‘labels’, we added two buttons to the circuit, each representing one move. The following video documents some of the data-gathering process.

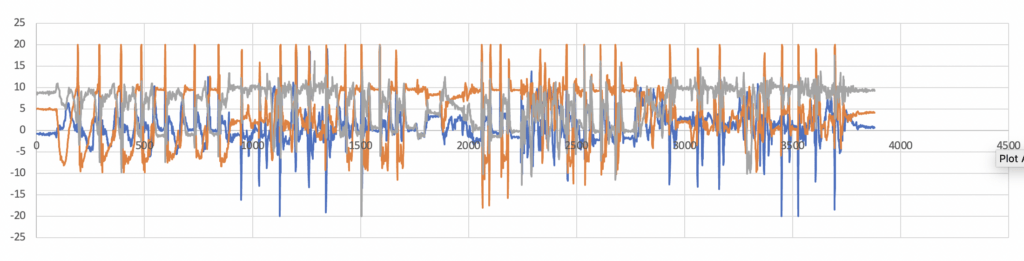
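Because each move has its own button, every sample carries two 0/1 button states. For training a classifier, it is usually more convenient to collapse these into a single class id. Here is a minimal Python sketch. The ids (0 = no move, 1 = first move's button, 2 = second move's button) and the name `encode_label` are our own hypothetical choices.

```python
# Hypothetical class ids: 0 = no move, 1 = first move button, 2 = second move button.
NO_MOVE, MOVE_1, MOVE_2 = 0, 1, 2


def encode_label(move1_pressed: int, move2_pressed: int) -> int:
    """Collapse two 0/1 button states into a single class id."""
    if move1_pressed and move2_pressed:
        # Both buttons down is ambiguous, so flag it instead of guessing.
        raise ValueError("both move buttons pressed in the same sample")
    if move1_pressed:
        return MOVE_1
    if move2_pressed:
        return MOVE_2
    return NO_MOVE


print(encode_label(0, 0), encode_label(1, 0), encode_label(0, 1))  # 0 1 2
```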

The output is a .csv file that contains the following columns: “t, x1,y1,z1, acc1 move1, acc1 move2, x2, y2, z2, acc2 move1, acc2 move2”, e.g. “1129,-9.32,19.58,9.92,0,1,-9.32,19.58,9.92,0,0”. So far we have gathered nearly 4,000 data points from the right wrist, which is enough to start experimenting with an ML model. The triple-axis accelerometer data is shown below; you can see clear peaks where the moves were made.
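The clear peaks mentioned above suggest a simple non-ML baseline to compare against: compute the magnitude of each (x, y, z) sample and flag local maxima above a threshold. Below is a Python sketch. It assumes the column order above (timestamp first, then x1, y1, z1) and data rows without a header. The function names are hypothetical, and the `threshold` and `min_gap` values are placeholders to be tuned by hand. A stationary accelerometer still measures gravity. The magnitude at rest therefore sits near 9.8 if the readings are in m/s², and the threshold has to be set above that baseline.

```python
import math


def magnitude(x: float, y: float, z: float) -> float:
    """Euclidean norm of one (x, y, z) acceleration sample."""
    return math.sqrt(x * x + y * y + z * z)


def acc1_magnitudes(lines: list[str]) -> list[float]:
    """Magnitude of accelerometer 1 for each CSV data row.

    Assumes columns ordered t, x1, y1, z1, ... and no header row.
    """
    mags = []
    for line in lines:
        fields = line.split(",")
        x, y, z = (float(v) for v in fields[1:4])
        mags.append(magnitude(x, y, z))
    return mags


def find_peaks(signal: list[float], threshold: float, min_gap: int = 1) -> list[int]:
    """Indices of local maxima that exceed `threshold`.

    A sample is a peak if it is above the threshold and at least as large as
    both neighbours. A candidate closer than `min_gap` samples to the last
    accepted peak is skipped, so one move is less likely to be counted twice.
    """
    peaks: list[int] = []
    for i in range(1, len(signal) - 1):
        s = signal[i]
        if s > threshold and s >= signal[i - 1] and s >= signal[i + 1]:
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    return peaks


print(find_peaks([0, 1, 5, 1, 0, 0, 7, 2, 0], threshold=3))  # [2, 6]
```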

- Train a machine learning model to recognize the moves in real time.
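Whatever model is used, real-time recognition means classifying short chunks of the stream rather than single samples. A common way to prepare training data for sequence models such as LSTMs is to cut the series into overlapping fixed-length windows. The following plain-Python sketch labels each window with its most common class id. `sliding_windows` is a hypothetical helper, and the window and step sizes are assumptions to tune. Once converted to an array, the nested lists have the (samples, timesteps, features) layout that Keras recurrent layers such as `tf.keras.layers.LSTM` expect.

```python
from collections import Counter


def sliding_windows(
    samples: list[tuple[float, ...]],
    labels: list[int],
    window: int,
    step: int,
) -> tuple[list[list[list[float]]], list[int]]:
    """Cut a multichannel series into overlapping fixed-length windows.

    samples: per-timestep feature tuples, e.g. (x, y, z).
    labels:  per-timestep class ids, same length as samples.
    Each window is labelled with the most common class id inside it.
    """
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have the same length")
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    windows, window_labels = [], []
    for start in range(0, len(samples) - window + 1, step):
        end = start + window
        windows.append([list(s) for s in samples[start:end]])
        window_labels.append(Counter(labels[start:end]).most_common(1)[0][0])
    return windows, window_labels


samples = [(float(i), 0.0, 9.8) for i in range(10)]
labels = [0] * 5 + [1] * 5
X, y = sliding_windows(samples, labels, window=4, step=2)
print(len(X), len(X[0]), len(X[0][0]), y)  # 4 4 3 [0, 0, 1, 1]
```

Majority labelling is only one option. For short moves, labelling a window as a move whenever any of its samples has a button pressed may work better. At inference time, the same windowing can be applied to the most recent `window` samples, for example kept in a `collections.deque(maxlen=window)`.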

Training the model will be one of our main focus areas over the next two weeks. We are going to try building a Long Short-Term Memory (LSTM) network using TensorFlow, because LSTMs tend to work well with sequential data.

- Create a p5 or Touch Designer visualization, where certain moves trigger changes.

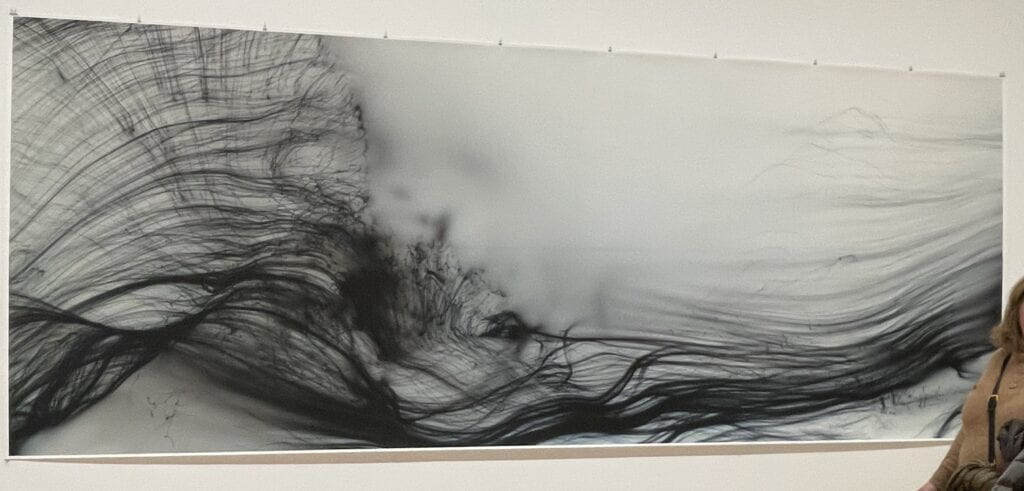

This part of the project hasn’t been started yet, but we did find some visual inspiration in MoMA’s Wolfgang Tillmans exhibit, shown below. I think we can recreate a similar flowing feeling using Perlin noise in p5.
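p5 already provides Perlin noise through its built-in `noise()` function, so nothing needs to be implemented for the visuals. To illustrate why it produces a flowing feel, here is a minimal 1D gradient-noise sketch in Python. `Perlin1D` is a hypothetical class, not a library API. Each integer point gets a random slope, and a smooth fade curve blends the contributions of neighbouring points, so nearby inputs give nearby outputs. p5's `noise()` returns values between 0 and 1, whereas this version returns values centred on 0, within [-1, 1].

```python
import math
import random


class Perlin1D:
    """Minimal 1D gradient (Perlin-style) noise, for illustration only."""

    def __init__(self, seed: int = 0, size: int = 256):
        rng = random.Random(seed)
        # One random gradient (slope) in [-1, 1] per integer lattice point.
        self.gradients = [rng.uniform(-1.0, 1.0) for _ in range(size)]
        self.size = size

    @staticmethod
    def _fade(t: float) -> float:
        # Perlin's fade curve 6t^5 - 15t^4 + 10t^3: flat at t = 0 and t = 1.
        return t * t * t * (t * (t * 6 - 15) + 10)

    def noise(self, x: float) -> float:
        i0 = math.floor(x)
        t = x - i0  # position inside the current cell, in [0, 1)
        g0 = self.gradients[i0 % self.size]
        g1 = self.gradients[(i0 + 1) % self.size]
        # Each lattice point contributes its gradient times the offset from it.
        n0 = g0 * t
        n1 = g1 * (t - 1.0)
        # Blend the two contributions with the smooth fade curve.
        return n0 + self._fade(t) * (n1 - n0)


p = Perlin1D(seed=42)
values = [p.noise(frame * 0.05) for frame in range(5)]
```

Sampling the noise with a small step per frame gives a slowly drifting value, while a larger step gives faster, more jittery motion. Varying that step is one way a detected move could change the visuals.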

- Create a more permanent version of the prototype to be worn for a Winter Show performance.

This part of the project hasn’t been started yet.